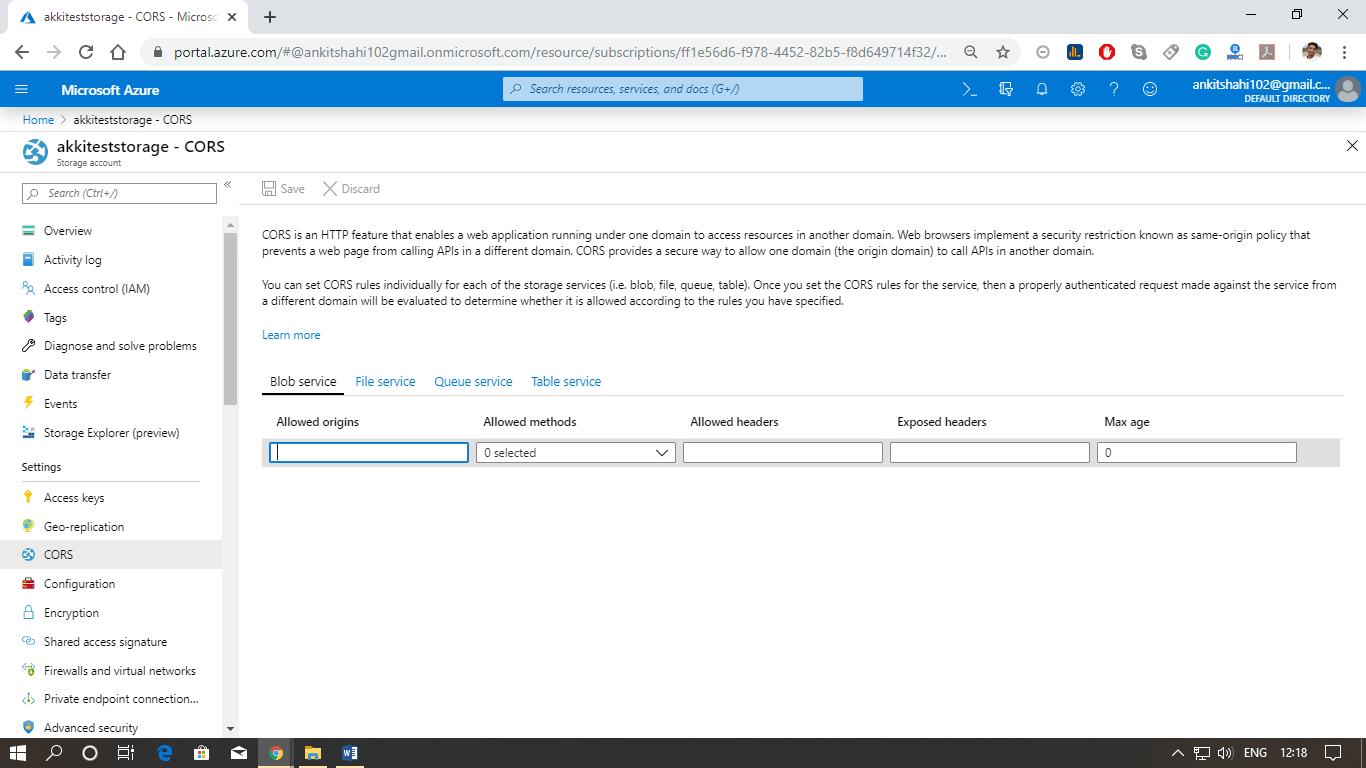

Azure Network Service

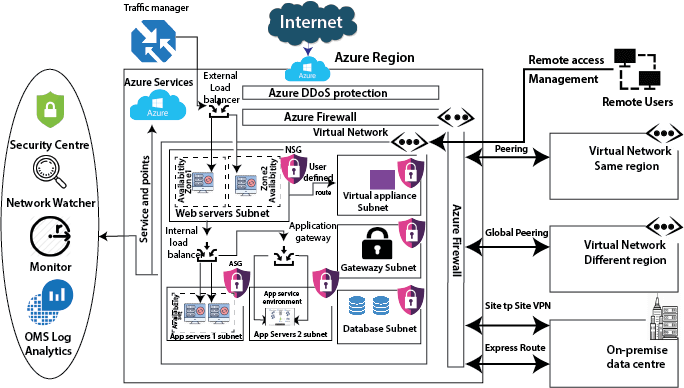

The most fundamental building block of Azure network services is the virtual network. Using a virtual network, we can deploy our own isolated network on Azure, and we can divide it into multiple parts using subnets: for example, a web server subnet, an App servers1 subnet, an App servers2 subnet, a Database subnet, a Gateway subnet, a Virtual Appliance subnet, and so on. These are typical examples, but we can create different kinds of subnets based on our requirements.
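To make the idea of dividing a virtual network into subnets concrete, here is a minimal sketch using Python's standard `ipaddress` module. The `10.0.0.0/16` address space, the `/24` subnet size, and the subnet names are assumptions chosen for illustration; they are not prescribed by Azure.

```python
import ipaddress

# Hypothetical address space for the virtual network (an assumption for this example).
vnet = ipaddress.ip_network("10.0.0.0/16")

# Illustrative subnet names based on the examples in the text.
names = [
    "webserver",
    "app-servers-1",
    "app-servers-2",
    "database",
    "gateway",
    "virtual-appliance",
]

# Carve consecutive /24 blocks out of the /16, one per subnet name.
subnets = dict(zip(names, vnet.subnets(new_prefix=24)))

for name, prefix in subnets.items():
    print(f"{name:18} {prefix}")
```

Each subnet is a non-overlapping slice of the virtual network's address space, which is what lets us place different tiers of an application in separate ranges.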

Once we have created subnets, we can deploy different types of Azure services into them. Virtual machines are the most common case, but we can also deploy some specialized environments, i.e., PaaS environments that are capable of being deployed into a virtual network. For example, an App Service Environment can be deployed into its own subnet. Similarly, a SQL managed instance and a managed integration environment can also be deployed within a virtual network.

We can also deploy different kinds of appliances, such as a firewall, into the virtual appliance subnet.

Service Protection: After the deployment of all these services, we need to protect these services. Azure provides several protection strategies.

DDoS Protection: DDoS protection protects our workloads in the virtual network from DDoS attacks. Two tiers are available. The Basic tier is free and enabled automatically. If we need more advanced capabilities, we can go for the Standard tier.

Firewall: When we need network security, we use a firewall. Azure provides a firewall service through which we can centrally manage inbound and outbound firewall rules. We can create network rules, application rules, and inbound DNAT rules, and the firewall performs SNAT for outbound traffic.

Network Security Groups: If the firewall is too costly for our needs, we can use network security groups (NSGs) instead. NSGs filter inbound and outbound traffic. We can attach a network security group at two levels: to a subnet, or to the network interface of an individual virtual machine.
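NSG rules are evaluated in priority order, where a lower number means higher priority and the first matching rule decides the outcome. The sketch below models only that behavior. The `Rule` class, the `evaluate` function, the rule names, and the IP ranges are all hypothetical, and real NSG rules have more fields (protocol, destination, direction) plus built-in default rules that are reduced here to a single fallback deny.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int          # lower number is evaluated first
    source: str            # source CIDR prefix
    port: Optional[int]    # destination port; None means any port
    allow: bool


def evaluate(rules, source_ip, port):
    """Return (allowed, rule_name) for the first rule that matches."""
    ip = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        in_range = ip in ipaddress.ip_network(rule.source)
        port_ok = rule.port is None or rule.port == port
        if in_range and port_ok:
            return rule.allow, rule.name
    # Simplified stand-in for the default deny behavior.
    return False, "implicit-deny"


# Hypothetical inbound rules for a web server subnet.
rules = [
    Rule("allow-https", 100, "0.0.0.0/0", 443, True),
    Rule("allow-ssh-from-office", 200, "203.0.113.0/24", 22, True),
    Rule("deny-all", 4096, "0.0.0.0/0", None, False),
]

print(evaluate(rules, "198.51.100.7", 443))
print(evaluate(rules, "198.51.100.7", 22))
print(evaluate(rules, "203.0.113.9", 22))
```

Because evaluation stops at the first match, a broad deny rule with a high priority number acts as a catch-all without blocking the more specific allow rules above it.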

Application Security Groups: Microsoft introduced application security groups (ASGs) so that we can put all the servers related to one application into a single group and then reference that group in NSG inbound and outbound rules. The primary purpose of an application security group is to simplify rule creation in NSGs.

Service Availability

We have to make sure that our application is highly available and resilient to regional failures, data center failures, and rack failures. Azure provides some services to make our application highly available; these are:

Traffic Manager: Azure Traffic Manager controls the distribution of user traffic across service endpoints in different regions. Service endpoints supported by Traffic Manager include Azure VMs, Web Apps, Cloud Services, etc. It uses DNS to direct client requests to the right endpoint based on a traffic-routing method and the health of the endpoints.
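As an illustration of combining a routing method with endpoint health, the following sketch imitates a priority-style method: the DNS answer is the healthy endpoint with the lowest priority number. The `Endpoint` class, the `resolve` function, and the host names are hypothetical, and this is a simplified model rather than Traffic Manager's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str       # hypothetical DNS name of the endpoint
    region: str
    priority: int   # lower number is preferred
    healthy: bool   # result of the most recent health probe


def resolve(endpoints):
    """Return the name of the preferred healthy endpoint, or None if all are down."""
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda e: e.priority).name


endpoints = [
    Endpoint("app-westeurope.example.com", "West Europe", 1, healthy=False),
    Endpoint("app-eastus.example.com", "East US", 2, healthy=True),
    Endpoint("app-southeastasia.example.com", "Southeast Asia", 3, healthy=True),
]

# The primary endpoint is unhealthy, so the next one in priority order is returned.
print(resolve(endpoints))
```

Since the decision is made at DNS resolution time, clients connect directly to the chosen endpoint; the traffic itself does not pass through Traffic Manager.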

Load Balancer: A load balancer distributes traffic across a pool of web servers or application servers. There are two types: an external (public) load balancer, whose frontend is a public IP address that accepts traffic from the Internet, and an internal load balancer, whose frontend is a private IP address inside the virtual network.
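By default, Azure Load Balancer spreads flows using a hash of the five-tuple (source IP, source port, destination IP, destination port, protocol). The sketch below shows the general idea of hash-based distribution. The `pick_backend` function, the use of SHA-256, and the backend names are assumptions for illustration and do not reflect the hash Azure uses internally.

```python
import hashlib


def pick_backend(backends, src_ip, src_port, dst_ip, dst_port, protocol):
    """Map a flow's five-tuple to one backend in the pool."""
    key = f"{src_ip}:{src_port}-{dst_ip}:{dst_port}-{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the digest as an integer and map it onto the pool.
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


# Hypothetical backend pool of three web servers.
pool = ["web-vm-0", "web-vm-1", "web-vm-2"]

# Packets from the same flow always land on the same backend.
first = pick_backend(pool, "198.51.100.7", 50123, "20.0.0.10", 443, "tcp")
second = pick_backend(pool, "198.51.100.7", 50123, "20.0.0.10", 443, "tcp")
print(first, second)
```

A new connection from the same client usually uses a different source port, so it can hash to a different backend; that is how the load spreads across the pool over many flows.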

Application Gateway: Application Gateway is a load balancer for web traffic that works at the HTTP level, so it can make routing decisions such as URL path-based routing, multi-site hosting, etc.
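URL path-based routing means the request path decides which backend pool receives the request. The following is a minimal sketch of that idea using longest-prefix matching. The `route` function, the path prefixes, and the pool names are hypothetical, and the matching logic is a simplification, not Application Gateway's exact rule semantics.

```python
def route(path, path_map, default_pool):
    """Return the backend pool whose prefix is the longest match for the path."""
    best_prefix = None
    for prefix in path_map:
        if path.startswith(prefix):
            if best_prefix is None or len(prefix) > len(best_prefix):
                best_prefix = prefix
    return path_map[best_prefix] if best_prefix is not None else default_pool


# Hypothetical mapping from URL path prefixes to backend pools.
path_map = {
    "/images/": "image-pool",
    "/images/thumbnails/": "thumbnail-pool",
    "/video/": "video-pool",
}

print(route("/images/logo.png", path_map, "default-pool"))
print(route("/images/thumbnails/1.png", path_map, "default-pool"))
print(route("/checkout", path_map, "default-pool"))
```

Requests that match no configured path fall through to a default pool, so the site keeps working for paths that have no dedicated backend.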

Availability Zones: By deploying our virtual machines into different availability zones, we can route application traffic to virtual machines in another availability zone if a data center within the region fails.

Communication

The basic idea behind creating a virtual network is to enable communication between workloads using default system routes. Azure creates these system routes automatically. If we need different behavior, we can override them by configuring our own user-defined routes.
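Route selection follows longest-prefix match, and when a user-defined route and a system route have the same prefix, the user-defined route wins. Here is a minimal sketch of that selection logic. The `Route` class, the `select_route` function, the address ranges, and the next-hop labels are illustrative assumptions, and other route sources such as BGP are left out.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    user_defined: bool = False


def select_route(routes, destination):
    """Pick the most specific route; on a tie, prefer a user-defined route."""
    ip = ipaddress.ip_address(destination)
    candidates = [r for r in routes if ip in ipaddress.ip_network(r.prefix)]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda r: (ipaddress.ip_network(r.prefix).prefixlen, r.user_defined),
    )


routes = [
    # System routes that exist by default (simplified).
    Route("10.0.0.0/16", "VnetLocal"),
    Route("0.0.0.0/0", "Internet"),
    # Hypothetical user-defined route sending Internet-bound traffic to a firewall appliance.
    Route("0.0.0.0/0", "VirtualAppliance 10.0.5.4", user_defined=True),
]

print(select_route(routes, "10.0.1.4").next_hop)
print(select_route(routes, "8.8.8.8").next_hop)
```

Traffic inside the virtual network still follows the more specific system route, while Internet-bound traffic is redirected because the user-defined route overrides the default route with the same prefix.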

Peering: To enable communication between two virtual networks, we can establish peering. Virtual networks in the same region use regular virtual network peering; if the other virtual network is in a different region, we can use global peering. For connecting to an on-premises data center, we have two options. One is a site-to-site VPN, which is established over the Internet. For private connectivity, we have to use ExpressRoute.
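One practical constraint on peering is that the two virtual networks must not have overlapping address spaces. The sketch below checks that condition before peering is attempted. The `can_peer` function and the address ranges are hypothetical examples.

```python
import ipaddress


def can_peer(space_a, space_b):
    """Return True if no prefix in one address space overlaps a prefix in the other."""
    nets_a = [ipaddress.ip_network(p) for p in space_a]
    nets_b = [ipaddress.ip_network(p) for p in space_b]
    return not any(a.overlaps(b) for a in nets_a for b in nets_b)


# Hypothetical address spaces for two virtual networks.
hub = ["10.0.0.0/16"]
spoke_ok = ["10.1.0.0/16", "192.168.10.0/24"]
spoke_bad = ["10.0.128.0/17"]

print(can_peer(hub, spoke_ok))
print(can_peer(hub, spoke_bad))
```

Planning non-overlapping ranges up front is easier than re-addressing a virtual network later, and the same planning helps when connecting on-premises networks.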

Monitoring: Once we have deployed all the services from the networking perspective, we need to start monitoring them. Azure provides some services to monitor traffic.

Security Center: It is a central security monitoring tool with which we can view the secure score of our overall deployment and any recommendations generated by Azure based on the security policies we have applied. It covers both the network and the services deployed on that virtual network.