How I solved Dynamic Task Scheduling using AWS DynamoDB TTL, Streams and Lambda

How AWS DynamoDB TTL, Streams and Lambda Work

TTL stands for time to live. In DynamoDB, you can give each item in a table its own TTL: a timestamp, stored in an attribute you choose, after which the item is considered expired and becomes eligible for automatic deletion by DynamoDB. DynamoDB Streams is a DynamoDB feature that captures every change made to the items in a table, so we can use it to trigger an action when an item is inserted, modified or deleted. Because a TTL deletion shows up in the stream like any other deletion, we can combine TTL with DynamoDB Streams to trigger an AWS Lambda function when an item's TTL expires and the item is removed from the table.
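As a minimal sketch of the TTL side, here is how an item might carry its own expiry time. It is written in TypeScript to stay close to the Node.js runtime used later in this post. The `scheduleTask` helper and `ScheduledItem` type are hypothetical, and the attribute names `email` and `expired_at` are the ones this post uses for its table. TTL values are Unix timestamps in seconds, so the sketch converts from JavaScript's milliseconds. Actually writing the item to DynamoDB (for example with a PutItem call) is left out.

```typescript
// Hypothetical shape of a scheduled-task item in 'test-table'.
// 'email' is the table's key; 'expired_at' is the TTL attribute.
interface ScheduledItem {
  email: string;
  expired_at: number; // Unix epoch time in SECONDS (not milliseconds)
}

// Builds an item whose TTL is `delaySeconds` after `now`.
function scheduleTask(email: string, delaySeconds: number, now: Date = new Date()): ScheduledItem {
  const nowSeconds = Math.floor(now.getTime() / 1000); // ms -> whole seconds
  return { email, expired_at: nowSeconds + delaySeconds };
}

// Example: a task due one hour after a fixed instant.
const item = scheduleTask("user@example.com", 3600, new Date("2024-01-01T00:00:00Z"));
console.log(item); // { email: 'user@example.com', expired_at: 1704070800 }
```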

Limitations of this approach

Firstly, the biggest drawback is that DynamoDB TTL does not delete items exactly at their expiry time. How soon an expired item is actually deleted depends on the nature of the workload and the size of the table. In the worst case, the deletion, and therefore the stream event, may take place up to 48 hours after expiry, as the AWS documentation explained at the time of writing:

> DynamoDB typically deletes expired items within 48 hours of expiration. The exact duration within which an item truly gets deleted after expiration is specific to the nature of the workload and the size of the table. Items that have expired and have not been deleted still appear in reads, queries, and scans. These items can still be updated, and successful updates to change or remove the expiration attribute are honored.
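Since expired items can linger, code that reads the table may want to skip them itself. A minimal sketch, assuming the `expired_at` attribute used in this post; `StoredItem`, `isExpired` and `withoutExpired` are hypothetical names. The check follows how TTL expiry is described: an item counts as expired once its TTL value is earlier than the current epoch time, and an item without the attribute never expires.

```typescript
// Hypothetical item shape; 'expired_at' is optional because not every item must expire.
interface StoredItem {
  email: string;
  expired_at?: number; // TTL attribute, Unix epoch seconds
}

// True when the item's TTL has passed, even if DynamoDB has not deleted it yet.
function isExpired(item: StoredItem, nowSeconds: number = Math.floor(Date.now() / 1000)): boolean {
  return item.expired_at !== undefined && item.expired_at < nowSeconds;
}

// Removes expired-but-not-yet-deleted items from a read, query or scan result.
function withoutExpired(items: StoredItem[], nowSeconds?: number): StoredItem[] {
  return items.filter((item) => !isExpired(item, nowSeconds));
}

const now = 1700000000;
const items: StoredItem[] = [
  { email: "a@example.com", expired_at: now - 60 }, // expired, awaiting deletion
  { email: "b@example.com", expired_at: now + 60 }, // still scheduled
  { email: "c@example.com" },                        // no TTL, never expires
];
console.log(withoutExpired(items, now).map((i) => i.email)); // [ 'b@example.com', 'c@example.com' ]
```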

So, if your task must run at exactly the specified time, this solution won't work for you. But if your tasks only need to run some time after a given moment, and a delay is acceptable, as with sending an email or a notification, then this approach may work for you. There is a nice article that tried to benchmark the TTL performance of AWS DynamoDB.
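If you want to know how late tasks run in your own table, the stream record carries enough information to measure it. The sketch below assumes the stream includes old images (so the deleted item's `expired_at` arrives with the record) and uses the record's `ApproximateCreationDateTime`, which Lambda delivers in epoch seconds. The types are a hand-written subset of the real event, and `isStillWorthRunning` with its `maxDelaySeconds` threshold is a hypothetical policy, not part of any AWS API.

```typescript
// Hand-written subset of a DynamoDB Streams record as delivered to Lambda.
interface StreamRecordTimes {
  dynamodb: {
    ApproximateCreationDateTime?: number;      // epoch seconds when the change was recorded
    OldImage?: { expired_at?: { N: string } }; // TTL attribute in DynamoDB JSON (numbers are strings)
  };
}

// Seconds between the item's TTL and the moment its deletion was recorded.
function deletionDelaySeconds(record: StreamRecordTimes): number | undefined {
  const deletedAt = record.dynamodb.ApproximateCreationDateTime;
  const expiredAtRaw = record.dynamodb.OldImage?.expired_at?.N;
  if (deletedAt === undefined || expiredAtRaw === undefined) return undefined;
  return deletedAt - Number(expiredAtRaw);
}

// Hypothetical policy: skip tasks that arrive later than the caller can tolerate.
function isStillWorthRunning(record: StreamRecordTimes, maxDelaySeconds: number): boolean {
  const delay = deletionDelaySeconds(record);
  return delay !== undefined && delay <= maxDelaySeconds;
}

const sample: StreamRecordTimes = {
  dynamodb: { ApproximateCreationDateTime: 1704071700, OldImage: { expired_at: { N: "1704070800" } } },
};
console.log(deletionDelaySeconds(sample)); // 900
console.log(isStillWorthRunning(sample, 3600)); // true
```

Logging this delay for every task gives you your own picture of TTL lateness, which is more relevant than any general benchmark.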

Secondly, DynamoDB Streams captures every kind of change: insertions, modifications and deletions. The stream itself cannot be limited to a single event type, such as deletions, so the Lambda function receives all of these records and has to check each one to decide whether it is the deletion of an expired item, and only then execute the task.
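Here is a minimal sketch of that check as a Node.js Lambda handler written in TypeScript. It relies on how DynamoDB marks TTL deletions in a stream: they are `REMOVE` events whose `userIdentity` has type `Service` and principal `dynamodb.amazonaws.com`, while deletions made by your own code carry no such identity. The event types are hand-written minimal versions (the full ones live in the `@types/aws-lambda` package), `runTask` is a hypothetical placeholder for sending the email or notification, and the item's `email` is read from the old image, which assumes the stream is configured with old images.

```typescript
// Minimal hand-written types for the parts of the Lambda DynamoDB Streams event used here.
type AttributeValue = { S?: string; N?: string };

interface DynamoDBRecord {
  eventName?: "INSERT" | "MODIFY" | "REMOVE";
  userIdentity?: { type?: string; principalId?: string };
  dynamodb?: { OldImage?: Record<string, AttributeValue> };
}

interface DynamoDBStreamEvent {
  Records: DynamoDBRecord[];
}

// TTL deletions are REMOVE events performed by the DynamoDB service itself.
function isTtlDeletion(record: DynamoDBRecord): boolean {
  return (
    record.eventName === "REMOVE" &&
    record.userIdentity?.type === "Service" &&
    record.userIdentity?.principalId === "dynamodb.amazonaws.com"
  );
}

// Collects the 'email' key of every item that was removed because its TTL expired.
function ttlTaskEmails(event: DynamoDBStreamEvent): string[] {
  const emails: string[] = [];
  for (const record of event.Records) {
    if (!isTtlDeletion(record)) continue; // skip INSERT, MODIFY and manual deletes
    const email = record.dynamodb?.OldImage?.email?.S;
    if (email !== undefined) emails.push(email);
  }
  return emails;
}

// Hypothetical placeholder for the real task (sending an email, a notification, ...).
async function runTask(email: string): Promise<void> {
  console.log(`Running scheduled task for ${email}`);
}

// Lambda entry point for the DynamoDB Streams trigger.
export async function handler(event: DynamoDBStreamEvent): Promise<void> {
  for (const email of ttlTaskEmails(event)) {
    await runTask(email);
  }
}
```

Keeping the selection logic in a plain function (`ttlTaskEmails`) makes it easy to test with hand-built events, without deploying anything.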

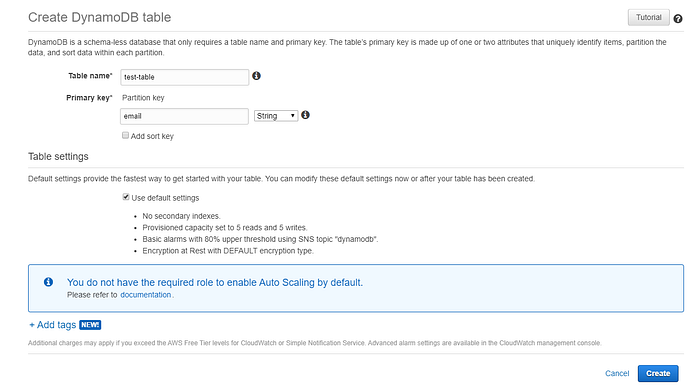

Create AWS DynamoDB table

First of all, we need to create a DynamoDB table. Head over to the AWS DynamoDB console and create a table named ‘test-table’ with a primary (partition) key named ‘email’, as shown below. For simplicity, we keep all other settings at their defaults.

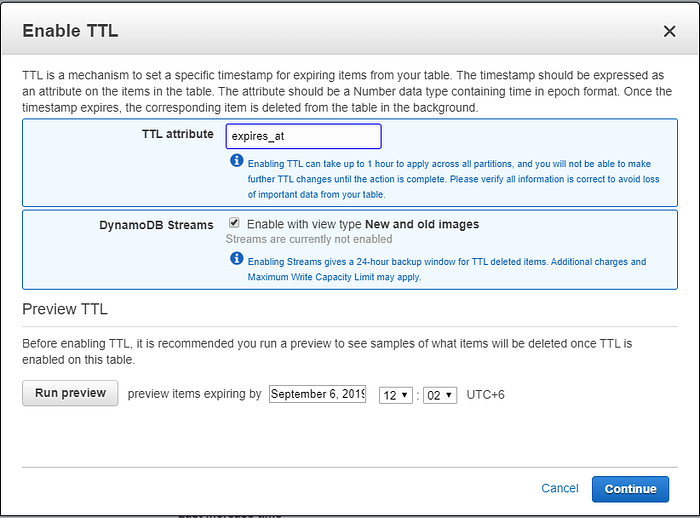
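For reference, the same table can be described as a CreateTable request. This sketch uses a local, simplified interface rather than the AWS SDK types so that it runs on its own. The on-demand `BillingMode` is an assumption: a CreateTable request needs either on-demand billing or explicit provisioned throughput, and the console defaults may differ.

```typescript
// Simplified local version of the DynamoDB CreateTable request shape.
interface CreateTableInput {
  TableName: string;
  KeySchema: { AttributeName: string; KeyType: "HASH" | "RANGE" }[];
  AttributeDefinitions: { AttributeName: string; AttributeType: "S" | "N" | "B" }[];
  BillingMode: "PROVISIONED" | "PAY_PER_REQUEST";
}

const createTableInput: CreateTableInput = {
  TableName: "test-table",
  // 'email' is the partition (HASH) key; there is no sort key.
  KeySchema: [{ AttributeName: "email", KeyType: "HASH" }],
  // Only key attributes are declared up front; 'expired_at' is simply added to each item.
  AttributeDefinitions: [{ AttributeName: "email", AttributeType: "S" }],
  // Assumption: on-demand capacity, to avoid choosing throughput numbers.
  BillingMode: "PAY_PER_REQUEST",
};

console.log(JSON.stringify(createTableInput, null, 2));
```

With the AWS SDK for JavaScript v3, an object like this could be passed to `new CreateTableCommand(...)` from `@aws-sdk/client-dynamodb` and sent with a `DynamoDBClient`.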

Now we need to enable TTL on the table. Select the table, go to the Overview section and, under Table details, find Time to live attribute and click Enable TTL. A dialog box will appear. In the TTL attribute field, type the name of the attribute that holds each item's expiry time; in our case it is ‘expired_at’. This attribute must be a Number containing the expiry time as a Unix timestamp in seconds; items without it never expire. Then, in the DynamoDB Streams section, check the box to enable streams with the view type New and old images, and click Continue. The DynamoDB table and stream are now set up.

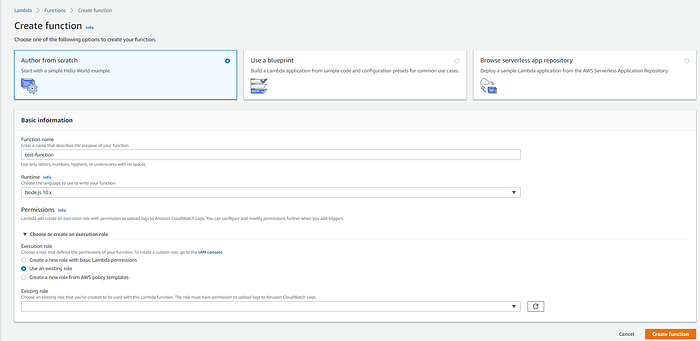
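The two console settings correspond to two API requests: UpdateTimeToLive for the TTL attribute and UpdateTable with a StreamSpecification for the stream. Below is a sketch of both request bodies, again with simplified local interfaces; the field names follow the DynamoDB API, but it is worth checking them against the SDK version you use.

```typescript
// Simplified local shapes of the two DynamoDB requests.
interface UpdateTimeToLiveInput {
  TableName: string;
  TimeToLiveSpecification: { Enabled: boolean; AttributeName: string };
}

interface UpdateTableStreamInput {
  TableName: string;
  StreamSpecification: {
    StreamEnabled: boolean;
    StreamViewType: "KEYS_ONLY" | "NEW_IMAGE" | "OLD_IMAGE" | "NEW_AND_OLD_IMAGES";
  };
}

// "Enable TTL" with 'expired_at' as the TTL attribute.
const ttlInput: UpdateTimeToLiveInput = {
  TableName: "test-table",
  TimeToLiveSpecification: { Enabled: true, AttributeName: "expired_at" },
};

// Enable DynamoDB Streams with the "New and old images" view type.
const streamInput: UpdateTableStreamInput = {
  TableName: "test-table",
  StreamSpecification: { StreamEnabled: true, StreamViewType: "NEW_AND_OLD_IMAGES" },
};

console.log(JSON.stringify({ ttlInput, streamInput }, null, 2));
```

In the SDK v3 these would go to `UpdateTimeToLiveCommand` and `UpdateTableCommand`. For scheduling, the old image is what matters, because a `REMOVE` record carries the deleted item only in its old image; `OLD_IMAGE` would also be enough, while `NEW_AND_OLD_IMAGES` keeps the full before-and-after view for other uses of the stream.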

Create AWS Lambda function

Now, head over to AWS Lambda. Before creating the Lambda function, create an IAM role that gives it access to DynamoDB, in particular permission to read from the table's stream. If you are not sure how to do it, follow this article. Then, from the AWS Lambda console, create a function, give it a name such as ‘test-function’ and select a runtime. I am using Node.js, but you can use any runtime you like. For the execution role, select the role you created earlier and click Create function.

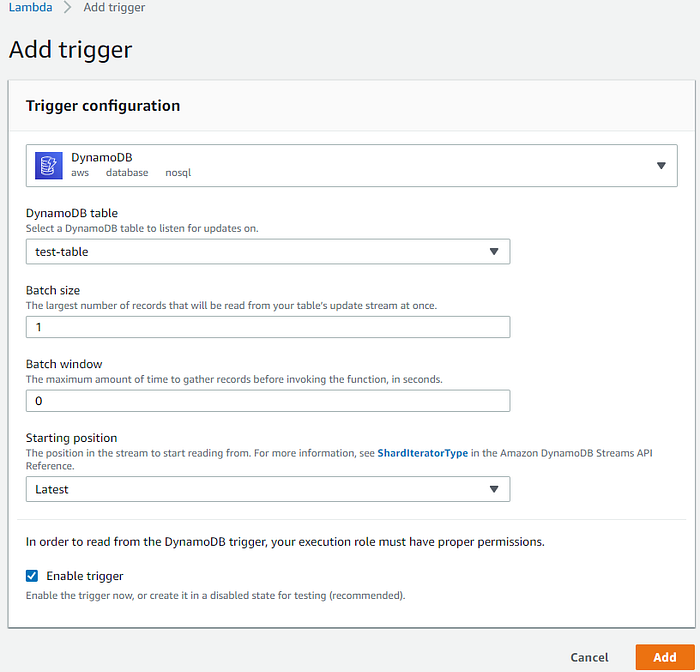
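As an example of the permissions the role needs, here is a sketch of a policy document that lets the function read the table's stream and write its logs. The actions are the ones a DynamoDB stream trigger requires; the AWS managed policy `AWSLambdaDynamoDBExecutionRole` grants the same actions on all resources. `streamReaderPolicy` is a hypothetical helper, and the region and account ID in the usage line are placeholders.

```typescript
// Simplified shapes of an IAM policy document.
interface PolicyStatement {
  Effect: "Allow" | "Deny";
  Action: string[];
  Resource: string;
}

interface PolicyDocument {
  Version: string;
  Statement: PolicyStatement[];
}

// Hypothetical helper: builds the execution-role policy for a stream-triggered function.
function streamReaderPolicy(region: string, accountId: string, tableName: string): PolicyDocument {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        // Read records from the table's streams (stream ARNs end in a label, hence the wildcard).
        Effect: "Allow",
        Action: ["dynamodb:DescribeStream", "dynamodb:GetRecords", "dynamodb:GetShardIterator"],
        Resource: `arn:aws:dynamodb:${region}:${accountId}:table/${tableName}/stream/*`,
      },
      {
        // ListStreams is granted on all resources, as in the AWS managed policy.
        Effect: "Allow",
        Action: ["dynamodb:ListStreams"],
        Resource: "*",
      },
      {
        // Write the function's logs to CloudWatch Logs.
        Effect: "Allow",
        Action: ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
        Resource: "*",
      },
    ],
  };
}

// Placeholder region and account ID.
const policy = streamReaderPolicy("us-east-1", "123456789012", "test-table");
console.log(JSON.stringify(policy, null, 2));
```

Attaching the managed policy is the quicker route; a hand-written document like this one narrows the stream permissions to a single table.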

Add AWS DynamoDB Stream trigger to AWS Lambda function

After the function is created, click Add trigger and select your DynamoDB table, in our case ‘test-table’. Set the batch size to 1, since we want to process one record at a time. Set the batch window to 0, since we don't want Lambda to wait and gather records into a batch after an expired item is deleted. Set the starting position to Latest and click Add. Your DynamoDB Streams trigger is now set.
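The same trigger can be expressed as a CreateEventSourceMapping request. The sketch below mirrors the settings above and adds one optional piece: Lambda event source mappings (unlike the stream itself) accept filter criteria, and the pattern shown keeps only records whose `userIdentity` marks them as TTL deletions by DynamoDB. The stream ARN is a placeholder and the interface is a simplified local one; keeping the event-type check in the function as well does no harm.

```typescript
// Simplified local shape of the Lambda CreateEventSourceMapping request.
interface CreateEventSourceMappingInput {
  EventSourceArn: string;
  FunctionName: string;
  BatchSize: number;
  MaximumBatchingWindowInSeconds: number;
  StartingPosition: "TRIM_HORIZON" | "LATEST";
  FilterCriteria?: { Filters: { Pattern: string }[] };
}

// Event filter pattern matching only records written by DynamoDB's TTL process.
const ttlDeletionPattern = {
  userIdentity: {
    type: ["Service"],
    principalId: ["dynamodb.amazonaws.com"],
  },
};

const mappingInput: CreateEventSourceMappingInput = {
  // Placeholder: use the real stream ARN of 'test-table'.
  EventSourceArn: "arn:aws:dynamodb:us-east-1:123456789012:table/test-table/stream/STREAM_LABEL",
  FunctionName: "test-function",
  BatchSize: 1,                      // one record per invocation
  MaximumBatchingWindowInSeconds: 0, // no extra wait to fill a batch
  StartingPosition: "LATEST",        // only changes made after the trigger exists
  FilterCriteria: { Filters: [{ Pattern: JSON.stringify(ttlDeletionPattern) }] },
};

console.log(JSON.stringify(mappingInput, null, 2));
```

With the SDK v3 this object could be sent with `CreateEventSourceMappingCommand` from `@aws-sdk/client-lambda`. Records that do not match the filter never reach the function, so inserts and updates stop costing invocations.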
