Introducing data products in Amazon DataZone: Simplify discovery and subscription with business use case based grouping

- Data product creation and publishing – Producers can create data products by selecting assets from their project’s inventory, setting up shared metadata, and publishing these products to make them discoverable to consumers.

- Data discovery and subscription – Consumers can search for and subscribe to data product units. Subscription requests are sent within a single workflow to producers for approval. Subscription approval processes, such as approve, reject, and revoke, ensure that access is managed securely. Once approved, access grants for the individual assets within the data product are automatically managed by the system.

- Data product lifecycle management – Producers have control over the lifecycle of data products, including the ability to edit them and remove them from the catalog. When a producer edits product metadata or adds or removes assets from a data product, they republish it as a new version, and subscriptions are updated without any reapproval.

Solution overview

To demonstrate these capabilities and workflows, consider a use case where a product marketing team wants to drive a campaign on product adoption. To be successful, they need access to sales data, customer data, and review data of similar products. The sales data engineer, acting as the data producer, owns this data and understands the common requests from customers to access these different data assets for sales-related analysis. The data producer’s objective is to group these assets so consumers, such as the product marketing team, can find them together and seamlessly subscribe to perform analysis.

The following high-level implementation steps show how to achieve this use case with data products in Amazon DataZone and are detailed in the following sections.

- Data publisher creates and publishes data product

  - Create data product – The data publisher (the project contributor for the producing project) provides a name and description and adds assets to the data product.

  - Curate data product – The data publisher adds a readme, glossaries, and metadata forms to the data product.

  - Publish data product – The data publisher publishes the data product to make it discoverable to consumers.

- Data consumer discovers and subscribes to data product

  - Search data product – The data consumer (the project member of the consuming project) looks for the desired data product in the catalog.

  - Request subscription – The data consumer submits a request to access the data product.

  - Data owner approves subscription request – The data owner reviews and approves the subscription request.

  - Review access approval and grant – The system manages access grants for the underlying assets.

  - Query subscribed data – The data consumer receives approval and can now access and query the data assets within the subscribed data product.

- Data owner maintains lifecycle of data product

  - Revise data product – The data owner (the project owner for the producing project) updates the data product as needed.

  - Unpublish data product – The data owner removes the data product from the catalog if necessary.

  - Delete data product – The data owner permanently deletes the data product if it is no longer needed.

  - Revoke subscription – The data owner manages subscriptions and revokes access if required.

Prerequisites

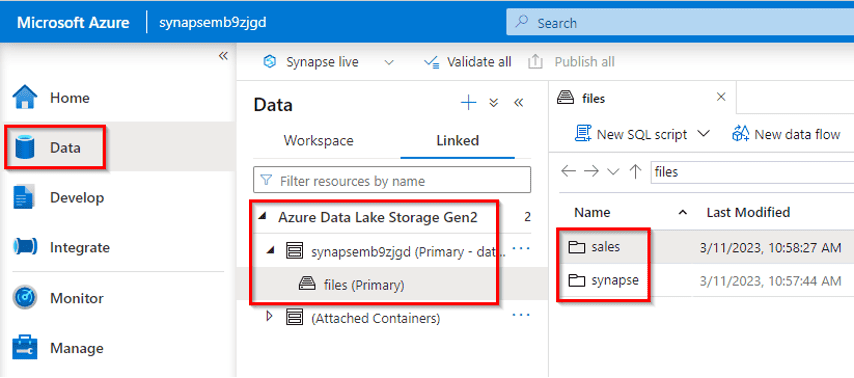

To follow along with this post, ensure the publisher of the product sales data asset has ingested individual data assets into Amazon DataZone. In our use case, a data engineer in sales owns the following AWS Glue tables: customers, order_items, orders, products, reviews, and shipments. The data engineer has added a data source to bring these six data assets into the sales producer project inventory, ingesting the metadata in Amazon DataZone. For instructions on ingesting metadata for AWS Glue tables, refer to Create and run an Amazon DataZone data source for the AWS Glue Data Catalog. For Amazon Redshift, see Create and run an Amazon DataZone data source for Amazon Redshift.

On the producer side, a sales product project has been created with a data lake environment. A data source was created to ingest the technical metadata from the AWS Glue salesdb database, which contains the six AWS Glue tables mentioned previously. On the consumer side, a marketing consumer project with a data lake environment has been established.

Data publisher creates and publishes data product

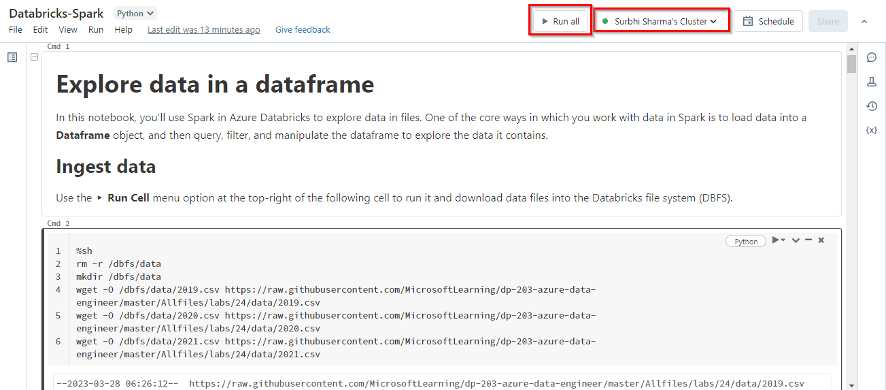

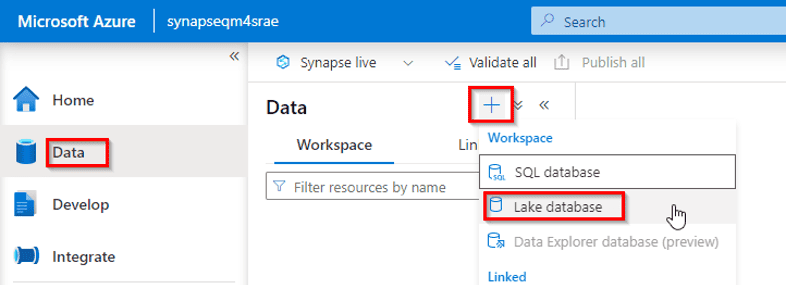

Sign in to the Amazon DataZone data portal as a data publisher in the sales producer project. You can now create a data product to group inventory assets relevant to the sales analysis use case. Use the following steps to create and publish a data product, as shown in the following screenshot.

- Select DATA in the top ribbon of the Sales Product Project

- Select Inventory data in the navigation pane

- Choose DATA PRODUCTS to create a data product

Create data product

Follow these steps to create a data product:

- Choose Create new data product. Under Details, in the name field, enter “Sales Data Product.” In the description, enter “A data product containing the following 6 assets: Product, Shipments, Order Items, Orders, Customers, and Reviews,” as shown in the following screenshot.

- Select Choose assets to add the data assets. Select CHOOSE on the right side next to each of the six data assets. Be sure to go to the second page to select the sixth asset. After all are selected, choose the blue CHOOSE button at the bottom of the page, as shown in the following screenshot. Then choose Create to create the data product.
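For teams that script catalog setup rather than using the portal, the same step can be sketched with the AWS SDK for Python. This is a minimal sketch, not the method used in this post: it only builds the keyword arguments for the boto3 DataZone client's create_data_product operation, and the domain, project, and asset identifiers are hypothetical placeholders.

```python
from typing import Any, Dict, List


def build_create_data_product_request(
    domain_id: str,
    project_id: str,
    name: str,
    description: str,
    asset_ids: List[str],
) -> Dict[str, Any]:
    """Build keyword arguments for the DataZone create_data_product call."""
    if not asset_ids:
        raise ValueError("A data product needs at least one asset")
    return {
        "domainIdentifier": domain_id,
        "owningProjectIdentifier": project_id,
        "name": name,
        "description": description,
        # Each inventory asset becomes one item of type ASSET.
        "items": [
            {"identifier": asset_id, "itemType": "ASSET"} for asset_id in asset_ids
        ],
    }


# Hypothetical identifiers; real values come from your domain and project inventory.
tables = ["customers", "order_items", "orders", "products", "reviews", "shipments"]
request = build_create_data_product_request(
    domain_id="dzd_example",
    project_id="example-sales-project-id",
    name="Sales Data Product",
    description="Sales assets grouped for analysis",
    asset_ids=[f"asset-{table}" for table in tables],
)
# With AWS credentials configured, the request could be sent with:
#   boto3.client("datazone").create_data_product(**request)
```

Keeping the request builder separate from the client call makes it possible to review or test the payload without touching an AWS account.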

Curate data product

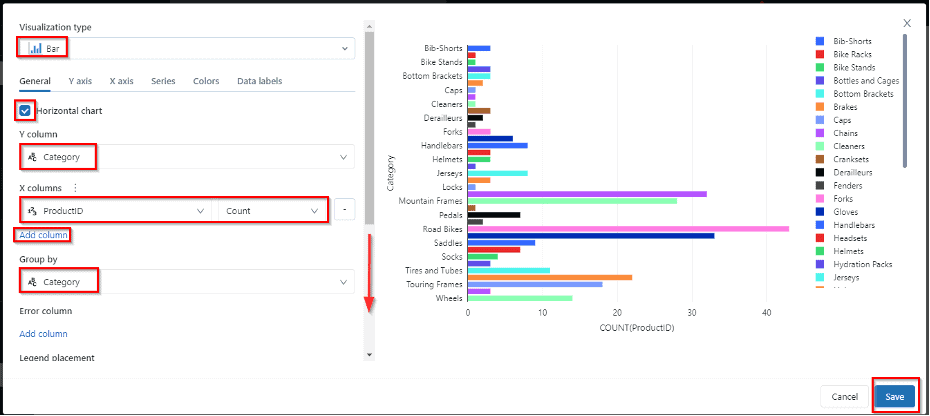

You can curate the sales data product by adding a readme, glossary term, and metadata forms to provide business context to the data product, as shown in the following screenshot.

- Choose Add terms under GLOSSARY TERMS. Select a glossary term that you have added to your glossary, for example, Sales. Refer to Create, edit, or delete a business glossary for how to create a business glossary.

- Choose Add metadata form to add a form such as a business owner. Refer to Create, edit, or delete metadata forms for how to create a metadata form. In this example, we added Ownership as a metadata form.

Publish data product

Follow these steps to publish a data product.

- Once all the necessary business metadata has been added, choose Publish to publish the data product to the business catalog, as shown in the following screenshot.

- In the pop-up, choose Publish data product.

The six data assets in the data product will also be published but will only be discoverable through the data product unless published individually. Consumers cannot subscribe to the individual data assets unless they are published and made discoverable in the catalog separately.

Data consumer discovers and subscribes to data product

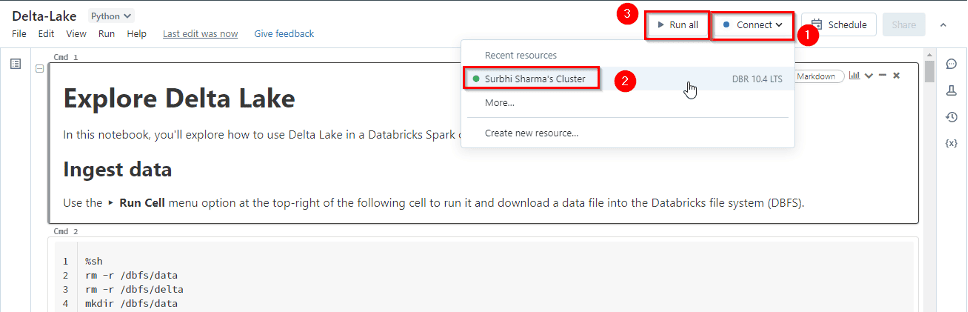

Now, as the marketing user, inside of the marketing project, you can find and subscribe to the sales data product.

Search data product

Sign in to the Amazon DataZone data portal as a marketing user in the marketing consumer project. In the search bar, enter “sales” or any other metadata that you added to the sales data product.

Once you find the appropriate data product, select it. You can view the metadata added and see which data assets are included in the data product by selecting the DATA ASSETS tab, as shown in the following screenshot.

Request subscription

Choose Subscribe to bring up the Subscribe to Sales Data Product modal. Make sure the project is your consumer project, for example, Marketing Consumer Project. In Reason for request, enter “Running a marketing campaign for the latest sales play.” Choose SUBSCRIBE.

The request will be routed to the sales producer project for approval.
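The subscription request can also be expressed programmatically. The following is a hedged sketch that assumes the boto3 DataZone client's create_subscription_request operation; it only assembles the keyword arguments, and the domain, listing, and project identifiers are hypothetical placeholders.

```python
from typing import Any, Dict


def build_subscription_request(
    domain_id: str,
    listing_id: str,
    consumer_project_id: str,
    reason: str,
) -> Dict[str, Any]:
    """Build keyword arguments for the DataZone create_subscription_request call."""
    if not reason.strip():
        raise ValueError("A reason for the request is required")
    return {
        "domainIdentifier": domain_id,
        "requestReason": reason,
        # The published data product is referenced by its catalog listing.
        "subscribedListings": [{"identifier": listing_id}],
        # The subscriber is the consuming project, not an individual user.
        "subscribedPrincipals": [{"project": {"identifier": consumer_project_id}}],
    }


# Hypothetical identifiers for illustration only.
subscription = build_subscription_request(
    domain_id="dzd_example",
    listing_id="example-sales-data-product-listing-id",
    consumer_project_id="example-marketing-project-id",
    reason="Running a marketing campaign for the latest sales play.",
)
# With AWS credentials configured, the request could be sent with:
#   boto3.client("datazone").create_subscription_request(**subscription)
```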

Data owner approves subscription request

Sign in to Amazon DataZone as the project owner for the sales producer project to approve the request. You will see an alert in the task notification bar. Choose the notification icon on the top right to see the notifications, then choose Subscription Request Created, as shown in the following screenshot.

You can also view incoming subscription requests by choosing DATA in the blue ribbon at the top. Then choose Incoming requests in the navigation pane, REQUESTED under Incoming requests, and then View request, as shown in the following screenshot.

On the Subscription request pop-up, you will see who requested access to the Sales Data Product, from which project, the requested date and time, and their reason for requesting it. You can enter a Decision comment and then choose APPROVE.

Review access approval and grant

The marketing consumer is now approved to access the six assets included in the sales data product. Sign in to Amazon DataZone as a marketing user in the marketing consumer project. A new event will appear, showing that the SUBSCRIPTION REQUEST APPROVED has been completed.

You can view this in two different ways. Choose the notification icon on the top right and then EVENTS under Notifications, as shown in the first following screenshot. Alternatively, select DATA in the blue ribbon bar, then Subscribed data, and then Data products, as shown in the second following screenshot.

Choose the Sales Data Product and then Data assets. Amazon DataZone will automatically add the six data assets to the AWS Glue tables that the marketing consumer can use. Wait until you see that all six assets have been added to one environment, as shown in the following screenshot, before proceeding.

Query subscribed data

Once you complete the previous step, return to the main page of the marketing consumer project by choosing Marketing Consumer Project in the top left pull-down project selector, then choose OVERVIEW. The data can now be consumed through the Amazon Athena deep link on the right side. Choose Query data to open Athena, as shown in the following screenshot. In the Open Amazon Athena window, choose Open Amazon Athena.

A new window will open where the marketing consumer has been federated into the role that Amazon DataZone uses for granting permissions to the marketing consumer project data lake environment. The workgroup defaults to the appropriate workgroup that Amazon DataZone manages. Make sure that the Database under Data is the sub_db for the marketing consumer data lake environment. There will be six tables listed that correspond to the original six data assets added to the sales data product. Run your query. In this case, we used a query that looked for the top five best-selling products, as shown in the following code snippet and screenshot.
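The query itself is not reproduced in this text, so the following is only an illustrative sketch of a top-five best-sellers query. The column names (product_id, product_name, quantity) are assumptions, not the actual salesdb schema, and the example runs against an in-memory SQLite database so it can be tried locally. The SELECT statement should need little change to run in Athena against the sub_db tables, provided the real column names are substituted.

```python
import sqlite3

# Assumed schema: products(product_id, product_name),
# order_items(order_id, product_id, quantity).
TOP_FIVE_SQL = """
SELECT p.product_name, SUM(oi.quantity) AS units_sold
FROM order_items AS oi
JOIN products AS p ON p.product_id = oi.product_id
GROUP BY p.product_name
ORDER BY units_sold DESC
LIMIT 5
"""


def top_five_products(conn: sqlite3.Connection) -> list:
    """Return (product_name, units_sold) rows for the five best sellers."""
    return conn.execute(TOP_FIVE_SQL).fetchall()


# Small in-memory data set: product N sells N units.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE products (product_id INTEGER PRIMARY KEY, product_name TEXT);
    CREATE TABLE order_items (order_id INTEGER, product_id INTEGER, quantity INTEGER);
    """
)
conn.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [(i, f"product-{i}") for i in range(1, 8)],
)
conn.executemany(
    "INSERT INTO order_items VALUES (?, ?, ?)",
    [(1, i, i) for i in range(1, 8)],
)
result = top_five_products(conn)
```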

Data owner maintains lifecycle of data product

Follow these steps to maintain the lifecycle of the data product.

Revise data product

The data owner updates the data product, which includes editing metadata and adding or removing assets as needed. For detailed instructions, refer to Republish data products.

The sales data engineer has been tasked with removing one of the assets, the reviews table, from the sales data product.

- Open the SALES PRODUCER PROJECT by selecting it from the top project selector.

- Select DATA in the top ribbon.

- Select Published data in the navigation pane.

- Choose DATA PRODUCTS on the right side.

- Choose Sales Data Product.

The following screenshot shows these steps.

Once in the data product, the data engineer can add and remove metadata or assets. To change any of the assets in the data product, follow these steps, as shown in the following screenshot.

- Select ASSETS in Sales Data Product.

- Select any of the assets. For this example, we remove the Reviews asset.

- Select the three dots on the right side.

- Select Remove asset.

- A pop-up will appear confirming that you want to remove the asset. Choose Remove. The Reviews asset will now have a status of Removing asset: This asset is still available to subscribers.

- Republish the data product to remove access to this asset from all subscribers. Choose REPUBLISH and REPUBLISH DATA PRODUCT in the pop-up.

- To confirm the asset has been removed, sign in to the marketing project as the consumer. Open the Amazon Athena deep link from OVERVIEW. After selecting the sub_db associated with the marketing consumer data lake environment, only five tables are visible because the Reviews table was removed from the data product, as shown in the following screenshot.

The consumer doesn’t have to take any action after a data product has been republished. If the data engineer had changed any of the business metadata, such as by adding a metadata form, updating the readme, or adding glossary terms and republishing, the consumer would see those changes reflected when viewing the data product under the subscribed data.

Unpublish data product

The data owner removes the data product from the catalog, making it no longer discoverable to the organization. You can choose to retain existing subscription access for the underlying assets. For detailed instructions, refer to Unpublish data product.

Delete data product

The data owner permanently deletes the data product if it is no longer needed. Before deletion, you need to revoke all subscriptions. This action will not delete the underlying data assets. For detailed instructions, refer to Delete Data Product.

Revoke subscription

The data owner manages subscriptions and may revoke a subscription after it has been approved. For detailed instructions, refer to Revoke subscription.

Cleanup

To ensure no additional charges are incurred after testing, be sure to delete the Amazon DataZone domain. Refer to Delete domains for the process.

Conclusion

Data products are crucial for improving decision-making accuracy and speed in modern businesses. Beyond making raw data available, they offer strategic packaging, curation, and discoverability. Data products help customers address the difficulty of locating and accessing fragmented data, which reduces the time and resources needed to perform this important task.

Amazon DataZone already facilitates data cataloging from various sources. Building on this capability, this new feature streamlines data utilization by bundling data into purpose-built data products aligned with business goals. As a result, customers can unlock the full potential of their data.

The feature is supported in all the AWS commercial Regions where Amazon DataZone is currently available. To get started, check out Working with data products.

About the authors

Jason Hines is a Senior Solutions Architect at AWS, specializing in serving global customers in the Healthcare and Life Sciences industries. With over 25 years of experience, he has worked with numerous Fortune 100 companies across multiple verticals, bringing a wealth of knowledge and expertise to his role. Outside of work, Jason has a passion for an active lifestyle. He enjoys various outdoor activities such as hiking, scuba diving, and exploring nature. Maintaining a healthy work-life balance is essential to him.

Ramesh H Singh is a Senior Product Manager Technical (External Services) at AWS in Seattle, Washington, currently with the Amazon DataZone team. He is passionate about building high-performance ML/AI and analytics products that enable enterprise customers to achieve their critical goals using cutting-edge technology. Connect with him on LinkedIn.

Leonardo Gomez is a Principal Analytics Specialist Solutions Architect at AWS. He has over a decade of experience in data management, helping customers around the globe address their business and technical needs. Connect with him on LinkedIn.

Federating access to Amazon DataZone with AWS IAM Identity Center and Okta

Post Syndicated from Carlos Gallegos original https://aws.amazon.com/blogs/big-data/federating-access-to-amazon-datazone-with-aws-iam-identity-center-and-okta/

Many customers today rely on Okta or other identity providers (IdPs) to federate access to their technology stack and tools. With federation, security teams can centralize user management in a single place, which simplifies and brings agility to their day-to-day operations while maintaining the highest security standards.

To help develop a data-driven culture, everyone inside an organization can use Amazon DataZone. To realize the benefits of using Amazon DataZone for governing data and making it discoverable and available across different teams for collaboration, customers integrate it with their current technology stack. Handling access through their identity provider and preserving a familiar single sign-on (SSO) experience enables customers to extend the use of Amazon DataZone to users across teams in the organization without any friction while keeping centralized control.

Amazon DataZone is a fully managed data management service that makes it faster and simpler for customers to catalog, discover, share, and govern data stored across Amazon Web Services (AWS), on premises, and third-party sources. It also makes it simpler for data producers, analysts, and business users to access data throughout an organization so that they can discover, use, and collaborate to derive data-driven insights.

You can use AWS IAM Identity Center to securely create and manage identities for your organization’s workforce, or sync and use identities that are already set up and available in Okta or another identity provider, to keep centralized control of them. With IAM Identity Center, you can also manage the SSO experience of your organization centrally, across your AWS accounts and applications.

This post guides you through the process of setting up Okta as an identity provider for signing in users to Amazon DataZone. The process uses IAM Identity Center and its native integration with Amazon DataZone to integrate with external identity providers. Note that, even though this post focuses on Okta, the presented pattern relies on the SAML 2.0 standard and so can be replicated with other identity providers.

Prerequisites

To build the solution presented in this post, you must have:

- A developer or licensed Okta account along with administrative access to manage users and permissions.

- IAM Identity Center administrator access for your single or multi-account environment. For more information, see Enabling AWS IAM Identity Center.

- A provisioned Amazon DataZone domain. For more information, see Create domains.

- IAM Identity Center enabled for Amazon DataZone. For more information, see Setting up AWS IAM Identity Center for Amazon DataZone.

Process overview

Throughout this post you’ll follow these high-level steps:

- Establish a SAML connection between Okta and IAM Identity Center

- Set up automatic provisioning of users and groups in IAM Identity Center so that users and groups in the Okta domain are created in Identity Center.

- Assign users and groups to your AWS accounts in IAM Identity Center by assuming an AWS Identity and Access Management (IAM) role.

- Access the AWS Management Console and Amazon DataZone portal through Okta SSO.

- Manage Amazon DataZone specific permissions in the Amazon DataZone portal.

Setting up user federation with Okta and IAM Identity Center

This guide follows the steps in Configure SAML and SCIM with Okta and IAM Identity Center.

Before you get started, review the following items in your Okta setup:

- Every Okta user must have a First name, Last name, Username and Display name value specified.

- Each Okta user has only a single value per data attribute, such as email address or phone number. Users that have multiple values will fail to synchronize. If there are users that have multiple values in their attributes, remove the duplicate attributes before attempting to provision the user in IAM Identity Center. For example, only one phone number attribute can be synchronized. Because the default phone number attribute is work phone, use the work phone attribute to store the user’s phone number, even if the phone number for the user is a home phone or a mobile phone.

- If you update a user’s address you must have streetAddress, city, state, zipCode and the countryCode value specified. If any of these values aren’t specified for the Okta user at the time of synchronization, the user (or changes to the user) won’t be provisioned.
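The three checks above can be run as a pre-flight test before enabling provisioning. The following is a minimal sketch that assumes each user is represented as a plain Python dictionary; the key names (firstName, lastName, userName, displayName, and the address fields) are illustrative assumptions and not the Okta API schema.

```python
from typing import Any, Dict, List

# Hypothetical attribute names used only for this illustration.
REQUIRED_NAME_FIELDS = ("firstName", "lastName", "userName", "displayName")
ADDRESS_FIELDS = ("streetAddress", "city", "state", "zipCode", "countryCode")


def find_sync_problems(user: Dict[str, Any]) -> List[str]:
    """Return a list of reasons this user might fail to synchronize."""
    problems = []
    # Check 1: the four name attributes must all have a value.
    for field in REQUIRED_NAME_FIELDS:
        if not user.get(field):
            problems.append(f"missing {field}")
    # Check 2: no attribute may carry more than one value.
    for field, value in user.items():
        if isinstance(value, (list, tuple, set)) and len(value) > 1:
            problems.append(f"multiple values for {field}")
    # Check 3: an address must be complete if any part of it is present.
    present = [field for field in ADDRESS_FIELDS if user.get(field)]
    if present and len(present) < len(ADDRESS_FIELDS):
        missing = [field for field in ADDRESS_FIELDS if field not in present]
        problems.append("incomplete address: missing " + ", ".join(missing))
    return problems


ok_user = {
    "firstName": "Ana",
    "lastName": "Diaz",
    "userName": "ana@example.com",
    "displayName": "Ana Diaz",
}
bad_user = {
    "firstName": "Ana",
    "lastName": "Diaz",
    "userName": "ana@example.com",
    "displayName": "",
    "email": ["ana@example.com", "ana.diaz@example.com"],
    "city": "Seattle",
}
```

Calling find_sync_problems(bad_user) reports the empty display name, the duplicate email values, and the partial address, which are the three conditions the list above warns about.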

1) Establish a SAML connection between Okta and AWS IAM Identity Center

Now, let’s establish a SAML connection between Okta and AWS IAM Identity Center. First, you’ll create an application in Okta to establish the connection:

- Sign in to the Okta admin dashboard, expand Applications, then select Applications.

- On the Applications page, choose Browse App Catalog.

- In the search box, enter AWS IAM Identity Center, then select the app to add the IAM Identity Center app.

- Choose the Sign On tab.

- Under SAML Signing Certificates, select Actions, and then select View IdP Metadata. A new browser tab opens showing the document tree of an XML file. Select all of the XML from <md:EntityDescriptor> to </md:EntityDescriptor> and copy it to a text file.

- Save the text file as metadata.xml.

Leave the Okta admin dashboard open; you will continue using it in the later steps.
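Because the metadata is copied by hand, it can be worth checking that the saved file is a complete SAML metadata document before uploading it. The following is a minimal sketch using Python's standard XML parser; the sample document and its entityID are made up for illustration, and a real Okta metadata file contains more elements (certificates, SSO endpoints) than shown here.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the SAML 2.0 metadata specification.
SAML_MD_NS = "urn:oasis:names:tc:SAML:2.0:metadata"


def read_idp_entity_id(metadata_xml: str) -> str:
    """Validate the basic shape of IdP metadata and return its entityID."""
    root = ET.fromstring(metadata_xml)
    if root.tag != f"{{{SAML_MD_NS}}}EntityDescriptor":
        raise ValueError("Root element is not md:EntityDescriptor")
    if root.find(f"{{{SAML_MD_NS}}}IDPSSODescriptor") is None:
        raise ValueError("No md:IDPSSODescriptor element found")
    entity_id = root.get("entityID")
    if not entity_id:
        raise ValueError("EntityDescriptor has no entityID attribute")
    return entity_id


# Made-up sample; a real file would be read from metadata.xml instead.
sample_metadata = (
    '<md:EntityDescriptor xmlns:md="urn:oasis:names:tc:SAML:2.0:metadata" '
    'entityID="http://www.okta.com/exampleEntityId">'
    '<md:IDPSSODescriptor '
    'protocolSupportEnumeration="urn:oasis:names:tc:SAML:2.0:protocol"/>'
    "</md:EntityDescriptor>"
)
entity_id = read_idp_entity_id(sample_metadata)
```

A truncated copy, for example one missing the closing </md:EntityDescriptor> tag, fails to parse and raises an error before the file ever reaches IAM Identity Center.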

Second, you’re going to set up Okta as an external identity provider in IAM Identity Center:

- Open the IAM Identity Center console as a user with administrative privileges.

- Choose Settings in the navigation pane.

- On the Settings page, choose Actions, and then select Change identity source.

- Under Choose identity source, select External identity provider, and then choose Next.

- Under Configure external identity provider, do the following:

  - Under Service provider metadata, choose Download metadata file to download the IAM Identity Center metadata file and save it on your system. You will provide the Identity Center SAML metadata file to Okta later in this tutorial.

  - Copy the following items to a text file for easy access (you’ll need these values later):

    - IAM Identity Center Assertion Consumer Service (ACS) URL

    - IAM Identity Center issuer URL

  - Under Identity provider metadata, under IdP SAML metadata, choose Choose file and then select the metadata.xml file you created in the previous step.

  - Choose Next.

- After you read the disclaimer and are ready to proceed, enter accept.

- Choose Change identity source.

Leave the AWS console open, because you will use it in the next procedure.

- Return to the Okta admin dashboard and choose the Sign On tab of the IAM Identity Center app, then choose Edit.

- Under Advanced Sign-on Settings enter the following:

- For ACS URL, enter the value you copied for IAM Identity Center Assertion Consumer Service (ACS) URL.

- For Issuer URL, enter the value you copied for IAM Identity Center issuer URL.

- For Application username format, select one of the options from the drop-down menu.

Make sure the value you select is unique for each user. For this tutorial, select Okta username.

- Choose Save.

2) Set up automatic provisioning of users and groups in AWS IAM Identity Center

You are now able to set up automatic provisioning of users from Okta into IAM Identity Center. Leave the Okta admin dashboard open and return to the IAM Identity Center console for the next step.

- In the IAM Identity Center console, on the Settings page, locate the Automatic provisioning information box, and then choose Enable. This enables automatic provisioning in IAM Identity Center and displays the necessary System for Cross-domain Identity Management (SCIM) endpoint and access token information.

- In the Inbound automatic provisioning dialog box, copy each of the values for the following options:

- SCIM endpoint

- Access token

You will use these values to configure provisioning in Okta later.

- Choose Close.

- Return to the Okta admin dashboard and navigate to the IAM Identity Center app.

- On the AWS IAM Identity Center app page, choose the Provisioning tab, and then in the navigation pane, under Settings, choose Integration.

- Choose Edit, and then select the check box next to Enable API integration to enable provisioning.

- Configure Okta with the SCIM provisioning values from IAM Identity Center that you copied earlier:

- In the Base URL field, enter the SCIM endpoint value. Make sure that you remove the trailing forward slash at the end of the URL.

- In the API Token field, enter the Access token value.

- Choose Test API Credentials to verify the credentials entered are valid. The message AWS IAM Identity Center was verified successfully! displays.

- Choose Save. You are taken to the Settings area, with Integration selected.

- Review the following setup before moving forward. In the Provisioning tab, in the navigation pane under Settings, choose To App. Check that all options are enabled. They should be enabled by default, but if not, enable them.
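The trailing-slash requirement for the Base URL in the steps above is easy to miss when pasting the value. As a small illustration, the following sketch cleans up a copied SCIM endpoint before it is entered in Okta; the endpoint shown is a made-up placeholder, not a real IAM Identity Center URL.

```python
from urllib.parse import urlsplit


def normalize_scim_base_url(scim_endpoint: str) -> str:
    """Strip surrounding whitespace and any trailing slashes from the endpoint."""
    cleaned = scim_endpoint.strip().rstrip("/")
    parts = urlsplit(cleaned)
    if parts.scheme != "https" or not parts.netloc:
        raise ValueError("Expected an https:// SCIM endpoint URL")
    return cleaned


# Hypothetical endpoint copied with a trailing slash and a stray space.
base_url = normalize_scim_base_url(" https://scim.example.com/tenant-id/scim/v2/ ")
```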

3) Assign users and groups to your AWS accounts in AWS IAM Identity Center by assuming an AWS IAM role

By default, no groups or users are assigned to your Okta IAM Identity Center app. Complete the following steps to synchronize users with IAM Identity Center.

- In the Okta IAM Identity Center app page, choose the Assignments tab. You can assign both people and groups to the IAM Identity Center app.

  - To assign people:

    - In the Assignments page, choose Assign, and then choose Assign to people.

    - Select the Okta users that you want to have access to the IAM Identity Center app. Choose Assign, choose Save and Go Back, and then choose Done.

    This starts the process of provisioning the individual users into IAM Identity Center.

  - To assign groups:

    - Choose the Push Groups tab. You can create rules to automatically provision Okta groups into IAM Identity Center.

    - Choose the Push Groups drop-down list and select Find groups by rule.

    - In the By rule section, set a rule name and a condition. For this post we’re using AWS SSO Rule as the rule name and starts with awssso as the group name condition. This condition can be different depending on the name of the group you want to sync.

    - Choose Create Rule.

    - (Optional) To create a new group, choose Directory in the navigation pane, and then choose Groups.

    - Choose Add group and enter a name, and then choose Save.

    - After you have created the group, you can assign people to it. Select the group name to manage the group’s users.

    - Choose Assign people and select the users that you want to assign to the group.

    - You will see the users that are assigned to the group.

    - Going back to Applications in the navigation pane, select the AWS IAM Identity Center app and choose the Push Groups tab. You should have the groups that match the rule synchronized between Okta and IAM Identity Center. The group status should be set to Active after the group and its members are updated in Identity Center.

- Return to the IAM Identity Center console. In the navigation pane, choose Users. You should see the user list that was updated by Okta.

- In the left navigation, select Groups. You should see the group list that was updated by Okta.

Congratulations! You have successfully set up a SAML connection between Okta and AWS and have verified that automatic provisioning is working.

OPTIONAL: If you need to provide Amazon DataZone console access to the Okta users and groups, you can manage these permissions through the IAM Identity Center console.

- In the IAM Identity Center navigation pane, under Multi-account permissions, choose AWS accounts.

- On the AWS accounts page, the Organizational structure displays your organizational root with your accounts underneath it in the hierarchy. Select the checkbox for your management account, then choose Assign users or groups.

- The Assign users and groups workflow displays. It consists of three steps:

  - For Step 1: Select users and groups, choose the user that will be performing the administrator job function. Then choose Next.

  - For Step 2: Select permission sets, choose Create permission set to open a new tab that steps you through the three sub-steps involved in creating a permission set.

    - For Step 1: Select permission set type, complete the following:

      - In Permission set type, choose Predefined permission set.

      - In Policy for predefined permission set, choose AdministratorAccess.

      - Choose Next.

    - For Step 2: Specify permission set details, keep the default settings, and choose Next. The default settings create a permission set named AdministratorAccess with session duration set to one hour. You can also specify reduced permissions with a custom policy just to allow Amazon DataZone console access.

    - For Step 3: Review and create, verify that the Permission set type uses the AWS managed policy AdministratorAccess or your custom policy. Choose Create. On the Permission sets page, a notification appears informing you that the permission set was created. You can close this tab in your web browser now.

  - On the Assign users and groups browser tab, you are still on Step 2: Select permission sets from which you started the create permission set workflow.

  - In the Permissions sets area, choose Refresh. The AdministratorAccess permission set or the custom permission set you created appears in the list. Select the checkbox for that permission set, and then choose Next.

  - For Step 3: Review and submit, review the selected user and permission set, then choose Submit. The page updates with a message that your AWS account is being configured. Wait until the process completes.

  - You are returned to the AWS accounts page. A notification message informs you that your AWS account has been re-provisioned, and the updated permission set is applied. When a user signs in, they will have the option of choosing the AdministratorAccess role or a custom policy role.

4) Access the AWS console and Amazon DataZone portal through Okta SSO

Now, you can test your user access into the console and Amazon DataZone portal using the Okta external identity application.

- Sign in to the Okta dashboard using a test user account.

- Under My Apps, select the AWS IAM Identity Center icon.

- Complete the authentication process using your Okta credentials.

4.1) For administrative users

- You’re signed in to the portal and can see the AWS account icon. Expand that icon to see the list of AWS accounts that the user can access. In this tutorial, you worked with a single account, so expanding the icon only shows one account.

- Select the account to display the permission sets available to the user. In this tutorial you created the AdministratorAccess permission set.

- Next to the permission set are links for the type of access available for that permission set. When you created the permission set, you specified both management console and programmatic access be enabled, so those two options are present. Select Management console to open the console.

- The user is signed in to the console. Using the search bar, look for the Amazon DataZone service and open it.

- Open the Amazon DataZone console and make sure you have enabled SSO users through IAM Identity Center. If you haven’t, follow the steps in Enable IAM Identity Center for Amazon DataZone.

Note: In this post, we followed the default IAM Identity Center for Amazon DataZone configuration, which has implicit user assignment mode enabled. With this option, any user added to your Identity Center directory can access your Amazon DataZone domain automatically. If you opt for using explicit user assignment instead, remember that you need to manually add users to your Amazon DataZone domain in the Amazon DataZone console for them to have access.

To learn more about how to manage user access to an Amazon DataZone domain, see Manage users in the Amazon DataZone console.

- Choose Open data portal to access the Amazon DataZone portal.

4.2) For all other users

- Choose the Applications tab in the AWS access portal window and choose the Amazon DataZone data portal application link.

- In the Amazon DataZone data portal, choose SIGN IN WITH SSO to continue.

Congratulations! Now you’re signed in to the Amazon DataZone data portal using your user that’s managed by Okta.

5) Manage Amazon DataZone specific permissions in the Amazon DataZone portal

After you have access to the Amazon DataZone portal, you can work with projects, the data assets within, environments, and other constructs that are specific to Amazon DataZone. A project is the overarching construct that brings together people, data, and analytics tools. A project has two roles: owner and contributor. Next, you’ll learn how a user can be made an owner or contributor of existing projects.

These steps must be completed by the existing project owner in the Amazon DataZone portal:

- Open the Amazon DataZone portal, open the project drop-down list at the top left of the portal, and choose the project you own.

- In the project window, choose the Members tab to see the current users in the project and add a new one.

- Choose Add Members to add a new user. Make sure the User type is SSO User to add an Okta user. Look for the Okta user in the name drop-down list, select it, and select a project role for it. Finally, choose Add Members to add the user.

- The Okta user has been granted the selected project role and can interact with the project, assets, and tools.

- You can also grant permissions to SSO Groups. Choose Add members, then select SSO group in the drop-down list, next select the Group name, set the assigned project role, and choose Add Members.

- The Okta group has been granted the project role and can interact with the project, assets, and tools.

You can also manage SSO user and group access to the Amazon DataZone data portal from the console. See Manage users in the Amazon DataZone console for additional details.
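The membership steps above can also be scripted. The following is a minimal sketch, not part of the original walkthrough: it assumes the boto3 `datazone` client and its `create_project_membership` operation, and that the portal's owner and contributor roles correspond to the `PROJECT_OWNER` and `PROJECT_CONTRIBUTOR` designations. The helper names and all identifiers are hypothetical placeholders; check the parameter names against the current boto3 reference before relying on them.

```python
from typing import Any, Dict

# Assumed mapping from the portal's role labels to API designation values.
ROLE_TO_DESIGNATION = {
    "owner": "PROJECT_OWNER",
    "contributor": "PROJECT_CONTRIBUTOR",
}


def membership_request(domain_id: str, project_id: str, role: str,
                       user_id: str = "", group_id: str = "") -> Dict[str, Any]:
    """Build keyword arguments for datazone.create_project_membership.

    Exactly one of user_id (an SSO user) or group_id (an SSO group) is required.
    """
    if bool(user_id) == bool(group_id):
        raise ValueError("Provide exactly one of user_id or group_id")
    member = {"userIdentifier": user_id} if user_id else {"groupIdentifier": group_id}
    return {
        "domainIdentifier": domain_id,
        "projectIdentifier": project_id,
        "designation": ROLE_TO_DESIGNATION[role.lower()],
        "member": member,
    }


def add_member(request: Dict[str, Any]) -> None:
    """Send the request. Requires boto3 and AWS credentials for the project owner."""
    import boto3  # imported lazily so the builder above works without boto3 installed

    boto3.client("datazone").create_project_membership(**request)
```

For example, `membership_request("dzd_example", "prj_example", "contributor", user_id="okta-user-id")` returns the arguments for adding a single Okta-managed user as a contributor; passing `group_id` instead targets an SSO group.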

Clean up

To avoid future charges, complete the following steps:

- Delete the Amazon DataZone project if you created it as part of this blog post.

- Delete the Amazon DataZone domain if you created it as part of this blog post.

- If you changed your identity source from IAM Identity Center to an external identity provider (IdP), please revert it back to IAM Identity Center.

- If you added an AWS IAM Identity Center app integration to your Okta account as part of this post, please delete the app integration.

- Delete the permission set that you created specifically for this blog post.

By following these steps, you can effectively clean up the resources utilized in this blog post and prevent any unnecessary charges from accruing.

Summary

In this post, you followed a step-by-step guide to set up and use Okta to federate access to Amazon DataZone with AWS IAM Identity Center. You also learned how to group users and manage their permissions in Amazon DataZone. Now that you’re familiar with the elements involved in integrating an external identity provider such as Okta to federate access to Amazon DataZone, you’re ready to try it with other identity providers.

To learn more, see Managing Amazon DataZone domains and user access.

About the Authors

Carlos Gallegos is a Senior Analytics Specialist Solutions Architect at AWS, based in Austin, TX, US. He’s an experienced and motivated professional with a proven track record of delivering results worldwide. He specializes in architecture, design, migrations, and modernization strategies for complex data and analytics solutions, both on-premises and on the AWS Cloud. Carlos helps customers accelerate their data journey by providing expertise in these areas. Connect with him on LinkedIn.

Jose Romero is a Senior Solutions Architect for Startups at AWS, based in Austin, TX, US. He’s passionate about helping customers architect modern platforms at scale for data, AI, and ML. As a former senior architect in AWS Professional Services, he enjoys building and sharing solutions for common complex problems so that customers can accelerate their cloud journey and adopt best practices. Connect with him on LinkedIn.

Arun Pradeep Selvaraj is a Senior Solutions Architect at AWS. Arun is passionate about working with his customers and stakeholders on digital transformations and innovation in the cloud while continuing to learn, build, and reinvent. He is creative, fast-paced, deeply customer-obsessed and uses the working backwards process to build modern architectures to help customers solve their unique challenges. Connect with him on LinkedIn.

Get started with the new Amazon DataZone enhancements for Amazon Redshift

Post Syndicated from Carmen Manzulli original https://aws.amazon.com/blogs/big-data/get-started-with-the-new-amazon-datazone-enhancements-for-amazon-redshift/

In today’s data-driven landscape, organizations are seeking ways to streamline their data management processes and unlock the full potential of their data assets, while controlling access and enforcing governance. That’s why we introduced Amazon DataZone.

Amazon DataZone is a powerful data management service that empowers data engineers, data scientists, product managers, analysts, and business users to seamlessly catalog, discover, analyze, and govern data across organizational boundaries, AWS accounts, data lakes, and data warehouses.

On March 21, 2024, Amazon DataZone introduced several enhancements to its Amazon Redshift integration that simplify the process of publishing and subscribing to data warehouse assets like tables and views, while enabling Amazon Redshift customers to take advantage of the data management and governance capabilities of Amazon DataZone.

These updates improve the experience for both data users and administrators.

Data producers and consumers can now quickly create data warehouse environments using preconfigured credentials and connection parameters provided by their Amazon DataZone administrators.

Additionally, these enhancements grant administrators greater control over who can access and use the resources within their AWS accounts and Redshift clusters, and for what purpose.

As an administrator, you can now create parameter sets on top of DefaultDataWarehouseBlueprint by providing parameters such as cluster, database, and an AWS secret. You can use these parameter sets to create environment profiles and authorize Amazon DataZone projects to use these environment profiles for creating environments.

In turn, data producers and data consumers can now select an environment profile to create environments without having to provide the parameters themselves, saving time and reducing the risk of issues.

In this post, we explain how you can use these enhancements to the Amazon Redshift integration to publish your Redshift tables to the Amazon DataZone data catalog, and enable users across the organization to discover and access them in a self-service fashion. We present a sample end-to-end customer workflow that covers the core functionalities of Amazon DataZone, and include a step-by-step guide of how you can implement this workflow.

The same workflow is available as a video demonstration on the official Amazon DataZone YouTube channel.

Solution overview

To get started with the new Amazon Redshift integration enhancements, consider the following scenario:

- A sales team acts as the data producer, owning and publishing product sales data (a single table in a Redshift cluster called catalog_sales)

- A marketing team acts as the data consumer, needing access to the sales data in order to analyze it and build product adoption campaigns

At a high level, the steps we walk you through in the following sections include tasks for the Amazon DataZone administrator, Sales team, and Marketing team.

Prerequisites

For the workflow described in this post, we assume a single AWS account, a single AWS Region, and a single AWS Identity and Access Management (IAM) user, who will act as Amazon DataZone administrator, Sales team (producer), and Marketing team (consumer).

To follow along, you need an AWS account. If you don’t have an account, you can create one.

In addition, you must have the following resources configured in your account:

- An Amazon DataZone domain with admin, sales, and marketing projects

- A Redshift namespace and workgroup

If you don’t have these resources already configured, you can create them by deploying an AWS CloudFormation stack:

- Choose Launch Stack to deploy the provided CloudFormation template.

- For AdminUserPassword, enter a password, and take note of this password to use in later steps.

- Leave the remaining settings as default.

- Select I acknowledge that AWS CloudFormation might create IAM resources, then choose Submit.

- When the stack deployment is complete, on the Amazon DataZone console, choose View domains in the navigation pane to see the newly created Amazon DataZone domain.

- On the Amazon Redshift Serverless console, in the navigation pane, choose Workgroup configuration and see the newly created resource.

Make sure you’re logged in using the same role that you used to deploy the CloudFormation stack, and verify that you’re in the same Region.

As a final prerequisite, you need to create a catalog_sales table in the default Redshift database (dev).

- On the Amazon Redshift Serverless console, select your workgroup and choose Query data to open the Amazon Redshift query editor.

- In the query editor, choose your workgroup and select Database user name and password as the type of connection, then provide your admin database user name and password.

- Use the following query to create the catalog_sales table, which the Sales team will publish in the workflow:

Now you’re ready to get started with the new Amazon Redshift integration enhancements.

Amazon DataZone administrator tasks

As the Amazon DataZone administrator, you perform the following tasks:

- Configure the DefaultDataWarehouseBlueprint.

- Authorize the Amazon DataZone admin project to use the blueprint to create environment profiles.

- Create a parameter set on top of DefaultDataWarehouseBlueprint by providing parameters such as cluster, database, and AWS secret.

- Set up environment profiles for the Sales and Marketing teams.

Configure the DefaultDataWarehouseBlueprint

Amazon DataZone blueprints define what AWS tools and services are provisioned to be used within an Amazon DataZone environment. Enabling the data warehouse blueprint will allow data consumers and data producers to use Amazon Redshift and the Query Editor for data sharing, accessing, and consuming.

- On the Amazon DataZone console, choose View domains in the navigation pane.

- Choose your Amazon DataZone domain.

- Choose Default Data Warehouse.

If you used the CloudFormation template, the blueprint is already enabled.

Part of the new Amazon Redshift experience involves the Managing projects and Parameter sets tabs. The Managing projects tab lists the projects that are allowed to create environment profiles using the data warehouse blueprint. By default, this is set to all projects. For our purpose, let’s grant only the admin project.

- On the Managing projects tab, choose Edit.

- Select Restrict to only managing projects and choose the AdminPRJ project.

- Choose Save changes.

With this enhancement, the administrator can control which projects can use default blueprints in their account to create environment profiles.

The Parameter sets tab lists parameters that you can create on top of DefaultDataWarehouseBlueprint by providing parameters such as Redshift cluster or Redshift Serverless workgroup name, database name, and the credentials that allow Amazon DataZone to connect to your cluster or workgroup. You can also create AWS secrets on the Amazon DataZone console. Before these enhancements, AWS secrets had to be managed separately using AWS Secrets Manager, making sure to include the proper tags (key-value) for Amazon Redshift Serverless.

For our scenario, we need to create a parameter set to connect a Redshift Serverless workgroup containing sales data.

- On the Parameter sets tab, choose Create parameter set.

- Enter a name and optional description for the parameter set.

- Choose the Region containing the resource you want to connect to (for example, our workgroup is in us-east-1).

- In the Environment parameters section, select Amazon Redshift Serverless.

If you already have an AWS secret with credentials to your Redshift Serverless workgroup, you can provide the existing AWS secret ARN. In this case, the secret must be tagged with the following (key-value): AmazonDataZoneDomain: <Amazon DataZone domain ID>.

- Because we don’t have an existing AWS secret, we create a new one by choosing Create new AWS Secret.

- In the pop-up, enter a secret name and your Amazon Redshift credentials, then choose Create new AWS Secret.

Amazon DataZone creates a new secret using Secrets Manager and makes sure the secret is tagged with the domain in which you’re creating the parameter set.

- Enter the Redshift Serverless workgroup name and database name to complete the parameters list. If you used the provided CloudFormation template, use sales-workgroup for the workgroup name and dev for the database name.

- Choose Create parameter set.

You can see the parameter set created for your Redshift environment and the blueprint enabled with a single managing project configured.
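If you prefer to bring an existing AWS secret rather than create one on the Amazon DataZone console, the tagging requirement described above can be checked or applied programmatically. The following is a minimal sketch under stated assumptions: the helper names are hypothetical, the `username`/`password` JSON keys in the secret value are an assumption about the expected format, and the request dictionary follows the shape of the Secrets Manager `create_secret` operation (`Name`, `SecretString`, `Tags`).

```python
import json
from typing import Any, Dict, List

# Tag key named in this post for secrets used by a blueprint parameter set.
DOMAIN_TAG_KEY = "AmazonDataZoneDomain"


def secret_request(name: str, username: str, password: str,
                   domain_id: str) -> Dict[str, Any]:
    """Build keyword arguments for secretsmanager.create_secret."""
    return {
        "Name": name,
        # Assumed secret format: a JSON document with username and password keys.
        "SecretString": json.dumps({"username": username, "password": password}),
        "Tags": [{"Key": DOMAIN_TAG_KEY, "Value": domain_id}],
    }


def has_domain_tag(tags: List[Dict[str, str]], domain_id: str) -> bool:
    """Return True if the tag list carries the domain tag with the given domain ID."""
    return any(
        tag.get("Key") == DOMAIN_TAG_KEY and tag.get("Value") == domain_id
        for tag in tags
    )
```

A request built this way could be passed to `boto3.client("secretsmanager").create_secret(**request)`, and `has_domain_tag` can be run against the `Tags` list returned by `describe_secret` to confirm that an existing secret is tagged for your domain.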

Set up environment profiles for the Sales and Marketing teams

Environment profiles are predefined templates that encapsulate technical details required to create an environment, such as the AWS account, Region, and resources and tools to be added to projects. The next Amazon DataZone administrator task consists of setting up environment profiles, based on the default enabled blueprint, for the Sales and Marketing teams.

This task will be performed from the admin project in the Amazon DataZone data portal, so let’s follow the data portal URL and start creating an environment profile for the Sales team to publish their data.

- On the details page of your Amazon DataZone domain, in the Summary section, choose the link for your data portal URL.

When you open the data portal for the first time, you’re prompted to create a project. If you used the provided CloudFormation template, the projects are already created.

- Choose the AdminPRJ project.

- On the Environments page, choose Create environment profile.

- Enter a name (for example, SalesEnvProfile) and optional description (for example, Sales DWH Environment Profile) for the new environment profile.

- For Owner, choose AdminPRJ.

- For Blueprint, select the DefaultDataWarehouse blueprint (you’ll only see blueprints where the admin project is listed as a managing project).

- Choose the currently enabled account and the parameter set you previously created.

You then see the prefilled values for Redshift Serverless.

Under Authorized projects, you can pick the authorized projects allowed to use this environment profile to create an environment. By default, this is set to All projects.

- Select Authorized projects only.

- Choose Add projects and choose the SalesPRJ project.

- Configure the publishing permissions for this environment profile. Because the Sales team is our data producer, we select Publish from any schema.

- Choose Create environment profile.

Next, you create a second environment profile for the Marketing team to consume data. To do this, repeat steps similar to those you completed for the Sales team.

- Choose the AdminPRJ project.

- On the Environments page, choose Create environment profile.

- Enter a name (for example, MarketingEnvProfile) and optional description (for example, Marketing DWH Environment Profile).

- For Owner, choose AdminPRJ.

- For Blueprint, select the DefaultDataWarehouse blueprint.

- Select the parameter set you created earlier.

- This time, keep All projects as the default (alternatively, you could select Authorized projects only and add MarketingPRJ).

- Configure the publishing permissions for this environment profile. Because the Marketing team is our data consumer, we select Don’t allow publishing.

- Choose Create environment profile.

With these two environment profiles in place, the Sales and Marketing teams can work independently within their projects, creating their own environments (resources and tools) with less configuration and less risk of errors, and publishing and consuming data securely and efficiently within these environments.

To recap, the new enhancements offer the following features:

- When creating an environment profile, you can choose to provide your own Amazon Redshift parameters or use one of the parameter sets from the blueprint configuration. If you choose to use the parameter set created in the blueprint configuration, the AWS secret only requires the AmazonDataZoneDomain tag (the AmazonDataZoneProject tag is only required if you choose to provide your own parameter sets in the environment profile).

- In the environment profile, you can specify a list of authorized projects, so that only authorized projects can use this environment profile to create data warehouse environments.

- You can also specify what data authorized projects are allowed to publish. You can choose one of the following options: Publish from any schema, Publish from the default environment schema, and Don’t allow publishing.

These enhancements grant administrators more control over Amazon DataZone resources and projects and facilitate the common activities of all roles involved.
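To make the interaction between authorized projects and publishing permissions concrete, the following is a small illustrative model of the rules recapped above. It is a sketch for reasoning only, not the Amazon DataZone API: the class and method names are hypothetical, and the `default_schema` value is a placeholder for whatever schema the environment treats as its default.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Optional


class PublishMode(Enum):
    ANY_SCHEMA = "Publish from any schema"
    DEFAULT_SCHEMA = "Publish from the default environment schema"
    NONE = "Don't allow publishing"


@dataclass(frozen=True)
class EnvironmentProfile:
    name: str
    publish_mode: PublishMode
    # None models the "All projects" default; a set models "Authorized projects only".
    authorized_projects: Optional[FrozenSet[str]] = None
    default_schema: str = "default_env_schema"  # hypothetical placeholder

    def can_create_environment(self, project: str) -> bool:
        """A project may use the profile if it is authorized (or all projects are)."""
        return self.authorized_projects is None or project in self.authorized_projects

    def can_publish(self, project: str, schema: str) -> bool:
        """Publishing needs an authorized project and a schema allowed by the mode."""
        if not self.can_create_environment(project):
            return False
        if self.publish_mode is PublishMode.ANY_SCHEMA:
            return True
        if self.publish_mode is PublishMode.DEFAULT_SCHEMA:
            return schema == self.default_schema
        return False


sales_profile = EnvironmentProfile(
    "SalesEnvProfile", PublishMode.ANY_SCHEMA, frozenset({"SalesPRJ"})
)
marketing_profile = EnvironmentProfile("MarketingEnvProfile", PublishMode.NONE)
```

With the two profiles from this walkthrough, only SalesPRJ can create an environment from `sales_profile` and publish from the public schema, while any project can create an environment from `marketing_profile` but none can publish through it.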

Sales team tasks

As a data producer, the Sales team performs the following tasks:

- Create a sales environment.

- Create a data source.

- Publish sales data to the Amazon DataZone data catalog.

Create a sales environment

Now that you have an environment profile, you need to create an environment in order to work with data and analytics tools in this project.

- Choose the SalesPRJ project.

- On the Environments page, choose Create environment.

- Enter a name (for example, SalesDwhEnv) and optional description (for example, Environment DWH for Sales) for the new environment.

- For Environment profile, choose SalesEnvProfile.

Data producers can now select an environment profile to create environments, without the need to provide their own Amazon Redshift parameters. The AWS secret, Region, workgroup, and database are ported over to the environment from the environment profile, streamlining and simplifying the experience for Amazon DataZone users.

- Review your data warehouse parameters to confirm everything is correct.

- Choose Create environment.

The environment will be automatically provisioned by Amazon DataZone with the preconfigured credentials and connection parameters, allowing the Sales team to publish Amazon Redshift tables seamlessly.

Create a data source

Now, let’s create a new data source for our sales data.

- Choose the SalesPRJ project.

- On the Data page, choose Create data source.

- Enter a name (for example, SalesDataSource) and optional description.

- For Data source type, select Amazon Redshift.

- For Environment, choose SalesDwhEnv (the environment you created in the previous section).

- For Redshift credentials, you can use the same credentials you provided during environment creation, because you’re still using the same Redshift Serverless workgroup.

- Under Data Selection, enter the schema name where your data is located (for example, public) and then specify a table selection criterion (for example, *).

Here, the * indicates that this data source will bring into Amazon DataZone all the technical metadata from the database tables of your schema (in this case, a single table called catalog_sales).

- Choose Next.

On the next page, automated metadata generation is enabled. This means that Amazon DataZone will automatically generate the business names of the table and columns for that asset.

- Leave the settings as default and choose Next.

- For Run preference, select when to run the data source. Amazon DataZone can automatically publish these assets to the data catalog, but let’s select Run on demand so we can curate the metadata before publishing.

- Choose Next.

- Review all settings and choose Create data source.

- After the data source has been created, you can manually pull technical metadata from the Redshift Serverless workgroup by choosing Run.

When the data source has finished running, you can see the catalog_sales asset correctly added to the inventory.
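To illustrate how a table selection criterion such as * narrows what a data source run brings into the inventory, here is a minimal sketch. It assumes glob-style matching, which is an assumption for illustration; the exact pattern semantics Amazon DataZone applies may differ. The function name and the second table name in the usage note are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import List


def select_tables(tables: List[str], criterion: str) -> List[str]:
    """Return the table names that match a glob-style selection criterion."""
    return [table for table in tables if fnmatchcase(table, criterion)]
```

With the single table in this walkthrough, `select_tables(["catalog_sales"], "*")` keeps `catalog_sales`. In a schema that also held a hypothetical `store_returns` table, a narrower criterion such as `catalog_*` would keep only `catalog_sales`.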

Publish sales data to the Amazon DataZone data catalog

Open the catalog_sales asset to see details of the new asset (business metadata, technical metadata, and so on).

In a real-world scenario, this pre-publishing phase is when you can enrich the asset by providing more business context and information, such as a readme, glossaries, or metadata forms. For example, you can accept some of the automatically generated metadata recommendations and rename the asset or its columns to make them more readable, descriptive, and easier for a business user to search and understand.

For this post, simply choose Publish asset to complete the Sales team tasks.

Marketing team tasks

Let’s switch to the Marketing team and subscribe to the catalog_sales asset published by the Sales team. As a consumer team, the Marketing team will complete the following tasks:

- Create a marketing environment.

- Discover and subscribe to sales data.

- Query the data in Amazon Redshift.

Create a marketing environment

To subscribe to and access Amazon DataZone assets, the Marketing team needs to create an environment.

- Choose the MarketingPRJ project.

- On the Environments page, choose Create environment.

- Enter a name (for example, MarketingDwhEnv) and optional description (for example, Environment DWH for Marketing).

- For Environment profile, choose MarketingEnvProfile.

As with data producers, data consumers can also benefit from a preconfigured profile (created and managed by the administrator) to speed up the environment creation process and reduce the risk of errors.

- Review your data warehouse parameters to confirm everything is correct.

- Choose Create environment.

Discover and subscribe to sales data

Now that we have a consumer environment, let’s search for the catalog_sales table in the Amazon DataZone data catalog.

- Enter sales in the search bar.

- Choose the catalog_sales table.

- Choose Subscribe.

- In the pop-up window, choose your marketing consumer project, provide a reason for the subscription request, and choose Subscribe.

When you get a subscription request as a data producer, Amazon DataZone will notify you through a task in the sales producer project. Because you’re acting as both subscriber and publisher here, you will see a notification.

- Choose the notification, which will open the subscription request.

You can see details including which project has requested access, who is the requestor, and why access is needed.

- To approve, enter a message for approval and choose Approve.

Now that the subscription has been approved, let’s go back to the MarketingPRJ project. On the Subscribed data page, catalog_sales is listed as an approved asset, but access hasn’t been granted yet. If you choose the asset, you can see that Amazon DataZone is working on the backend to automatically grant the access. When it’s complete, you’ll see the subscription as granted and the message “Asset added to 1 environment.”

Query data in Amazon Redshift

Now that the marketing project has access to the sales data, we can use the Amazon Redshift Query Editor V2 to analyze the sales data.

- Under MarketingPRJ, go to the Environments page and select the marketing environment.

- Under the analytics tools, choose Query data with Amazon Redshift, which redirects you to the query editor within the environment of the project.

- To connect to Amazon Redshift, choose your workgroup and select Federated user as the connection type.

When you’re connected, you will see the catalog_sales table under the public schema.

- To make sure that you have access to this table, run the following query:

SELECT * FROM catalog_sales LIMIT 10

As a consumer, you’re now able to explore data and create reports, or you can aggregate data and create new assets to publish in Amazon DataZone, becoming a producer of a new data product to share with other users and departments.
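The same preview query can also be issued outside the query editor. The following is a minimal sketch, not part of the original walkthrough: it assumes the Amazon Redshift Data API through the boto3 `redshift-data` client and its `execute_statement` operation, and that the calling identity has been granted access to the subscribed table, which depends on how the grants were applied in your environment. The helper names are hypothetical; the workgroup and database names in the usage note are the ones used in this post.

```python
import re
from typing import Any, Dict

# Accept only simple unquoted identifiers so the table name is safe to interpolate.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def preview_sql(table: str, limit: int = 10) -> str:
    """Build a preview query for a table, validating the table name and limit."""
    if not _IDENTIFIER.match(table):
        raise ValueError(f"Not a simple table identifier: {table!r}")
    if limit <= 0:
        raise ValueError("limit must be positive")
    return f"SELECT * FROM {table} LIMIT {int(limit)}"


def statement_request(workgroup: str, database: str, table: str,
                      limit: int = 10) -> Dict[str, Any]:
    """Build keyword arguments for redshift-data execute_statement."""
    return {
        "WorkgroupName": workgroup,
        "Database": database,
        "Sql": preview_sql(table, limit),
    }


def run(request: Dict[str, Any]) -> str:
    """Submit the statement and return its ID. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builders above work without boto3 installed

    return boto3.client("redshift-data").execute_statement(**request)["Id"]
```

For example, `statement_request("sales-workgroup", "dev", "catalog_sales")` produces the arguments for the query shown above. The Data API is asynchronous, so the returned statement ID would then be used to poll for completion and fetch results.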

Clean up

To clean up your resources, complete the following steps:

- On the Amazon DataZone console, delete the projects used in this post. This will delete most project-related objects like data assets and environments.

- Clean up all Amazon Redshift resources (workgroup and namespace) to avoid incurring additional charges.

Conclusion

In this post, we demonstrated how you can get started with the new Amazon Redshift integration in Amazon DataZone. We showed how to streamline the experience for data producers and consumers and how to grant administrators control over data resources.

Embrace these enhancements and unlock the full potential of Amazon DataZone and Amazon Redshift for your data management needs.

Resources

For more information, refer to the following resources:

- See the Amazon DataZone documentation

- Check out the YouTube playlist for some of the latest demos of Amazon DataZone and short descriptions of the capabilities available

- Check out How Amazon DataZone helps customers find value in oceans of data

About the author

Carmen is a Solutions Architect at AWS, based in Milan (Italy). She is a data lover who enjoys helping companies adopt cloud technologies, especially in data analytics and data governance. Outside of work, she is a creative person who loves being in contact with nature and sometimes practices adrenaline activities.

How ATPCO enables governed self-service data access to accelerate innovation with Amazon DataZone

Post Syndicated from Brian Olsen original https://aws.amazon.com/blogs/big-data/how-atpco-enables-governed-self-service-data-access-to-accelerate-innovation-with-amazon-datazone/

This blog post is co-written with Raj Samineni from ATPCO.

In today’s data-driven world, companies across industries recognize the immense value of data in making decisions, driving innovation, and building new products to serve their customers. However, many organizations face challenges in enabling their employees to discover, get access to, and use data easily with the right governance controls. The significant barriers along the analytics journey constrain their ability to innovate faster and make quick decisions.

ATPCO is the backbone of modern airline retailing, enabling airlines and third-party channels to deliver the right offers to customers at the right time. ATPCO’s reach is impressive, with its fare data covering over 89% of global flight schedules. The company collaborates with more than 440 airlines and 132 channels, managing and processing over 350 million fares in its database at any given time. ATPCO’s vision is to be the platform driving innovation in airline retailing while remaining a trusted partner to the airline ecosystem. ATPCO aims to empower data-driven decision-making by making high quality data discoverable by every business unit, with the appropriate governance on who can access what.

In this post, using one of ATPCO’s use cases, we show you how ATPCO uses AWS services, including Amazon DataZone, to make data discoverable by data consumers across different business units so that they can innovate faster. We encourage you to read Amazon DataZone concepts and terminologies first to become familiar with the terms used in this post.

Use case

One of ATPCO’s use cases is to help airlines understand what products, including fares and ancillaries (like premium seat preference), are being offered and sold across channels and customer segments. To support this need, ATPCO wants to derive insights around product performance by using three different data sources:

- Airline ticketing data – Sales data for 1 billion airline tickets processed through ATPCO

- ATPCO pricing data – 87% of worldwide airline offers are powered through ATPCO pricing data. ATPCO is the industry leader in providing pricing and merchandising content for airlines, global distribution systems (GDSs), online travel agencies (OTAs), and other sales channels for consumers to visually understand differences between various offers.

- De-identified customer master data – ATPCO customer master data that has been de-identified for sensitive internal analysis and compliance.

In order to generate insights that will then be shared with airlines as a data product, an ATPCO analyst needs to be able to find the right data related to this topic, get access to the data sets, and then use it in a SQL client (like Amazon Athena) to start forming hypotheses and relationships.

Before Amazon DataZone, ATPCO analysts needed to find potential data assets by talking with colleagues; there wasn’t an easy way to discover data assets across the company. This slowed down their pace of innovation because it added time to the analytics journey.

Solution

To address the challenge, ATPCO sought inspiration from a modern data mesh architecture. Instead of a central data platform team with a data warehouse or data lake serving as the clearinghouse of all data across the company, a data mesh architecture encourages distributed ownership of data by data producers who publish and curate their data as products, which can then be discovered, requested, and used by data consumers.

Amazon DataZone provides rich functionality to help a data platform team distribute ownership of tasks so that these teams can choose to operate less like gatekeepers. In Amazon DataZone, data owners can publish their data and its business catalog (metadata) to ATPCO’s DataZone domain. Data consumers can then search for relevant data assets using these human-friendly metadata terms. Instead of access requests from data consumers going to ATPCO’s data platform team, they now go to the publisher or a delegated reviewer to evaluate and approve. When data consumers use the data, they do so in their own AWS accounts, which allocates their consumption costs to the right cost center instead of a central pool. Amazon DataZone also avoids duplicating data, which saves on cost and reduces the compliance tracking burden. Amazon DataZone takes care of all of the plumbing, using familiar AWS services such as AWS Identity and Access Management (IAM), AWS Glue, AWS Lake Formation, and AWS Resource Access Manager (AWS RAM) in a way that is fully inspectable by a customer.

The following diagram provides an overview of the solution using Amazon DataZone and other AWS services, following a fully distributed AWS account model, where data sets like airline ticket sales, ticket pricing, and de-identified customer data in this use case are stored in different member accounts in AWS Organizations.

Implementation

Now, we’ll walk through how ATPCO implemented their solution to solve the challenges of analysts discovering, getting access to, and using data quickly to help their airline customers.

There are four parts to this implementation:

- Set up account governance and identity management.

- Create and configure an Amazon DataZone domain.

- Publish data assets.

- Consume data assets as part of analyzing data to generate insights.

Part 1: Set up account governance and identity management

Before you start, compare your current cloud environment, including data architecture, to ATPCO’s environment. We’ve simplified this environment to the following components for the purpose of this blog post:

- ATPCO uses an organization to create and govern AWS accounts.

- ATPCO has existing data lake resources set up in multiple accounts, each owned by different data-producing teams. Having separate accounts helps control access, limits the blast radius if things go wrong, and helps allocate and control cost and usage.

- In each of their data-producing accounts, ATPCO has a common data lake stack: an Amazon Simple Storage Service (Amazon S3) bucket for data storage, an AWS Glue crawler and catalog for updating and storing technical metadata, and AWS Lake Formation (in hybrid access mode) for managing data access permissions.

- ATPCO created two new AWS accounts: one to own the Amazon DataZone domain and another for a consumer team to use for analytics with Amazon Athena.

- ATPCO enabled AWS IAM Identity Center and connected their identity provider (IdP) for authentication.

We’ll assume that you have a similar setup, though you might choose differently to suit your unique needs.

Part 2: Create and configure an Amazon DataZone domain

After your cloud environment is set up, the steps in Part 2 will help you create and configure an Amazon DataZone domain. A domain helps you organize your data, people, and their collaborative projects, and includes a unique business data catalog and web portal that publishers and consumers will use to share, collaborate, and use data. For ATPCO, their data platform team created and configured their domain.

Step 2.1: Create an Amazon DataZone domain

Persona: Domain administrator

Go to the Amazon DataZone console in your domain account. If you use AWS IAM Identity Center for corporate workforce identity authentication, then select the AWS Region in which your Identity Center instance is deployed. Choose Create domain.

- Enter a name and description.

- Leave Customize encryption settings (advanced) cleared.

- Leave the radio button selected for Create and use a new role. AWS creates an IAM role in your account on your behalf with the necessary IAM permissions for accessing Amazon DataZone APIs.

- Leave the quick setup option Set-up this account for data consumption and publishing cleared because we don’t plan to publish or consume data in our domain account.

- Skip Add new tag for now. You can always come back later to edit the domain and add tags.

- Choose Create Domain.
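The console steps above can also be sketched with the AWS SDK for Python. This is a minimal sketch, not ATPCO's actual setup: it assumes the DataZone `create_domain` operation, and unlike the console it assumes an execution role already exists (the role ARN you pass in is a placeholder you must supply). The helper names `build_create_domain_request` and `create_domain` are hypothetical.

```python
def build_create_domain_request(name, description, execution_role_arn):
    """Build keyword arguments for the DataZone CreateDomain call."""
    if not name:
        raise ValueError("a domain name is required")
    return {
        "name": name,
        "description": description,
        # Assumption: this role already exists; the console can create one for you.
        "domainExecutionRole": execution_role_arn,
        # No kmsKeyIdentifier is set, which mirrors leaving
        # "Customize encryption settings (advanced)" cleared.
    }


def create_domain(client, name, description, execution_role_arn):
    """Create the domain with the given DataZone client and return its ID."""
    request = build_create_domain_request(name, description, execution_role_arn)
    response = client.create_domain(**request)
    return response["id"]
```

To run it against AWS, pass `boto3.client("datazone", region_name=...)` as `client`, using credentials for the domain account and the Region of your Identity Center instance.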

After a domain is created, you will see a domain detail page similar to the following. Notice that IAM Identity Center is disabled by default.

Step 2.2: Enable IAM Identity Center for your Amazon DataZone domain and add a group

Persona: Domain administrator

By default, your Amazon DataZone domain, its APIs, and its unique web portal are accessible by IAM principals in this AWS account with the necessary Amazon DataZone IAM permissions. ATPCO wanted its corporate employees to be able to use Amazon DataZone with their corporate single sign-on (SSO) credentials without needing secondary federation to IAM roles. IAM Identity Center is the AWS cross-service solution for passing identity provider credentials. You can skip this step if you plan to use IAM principals directly for accessing Amazon DataZone.

Navigate to your Amazon DataZone domain’s detail page and choose Enable IAM Identity Center.

- Scroll down to the User management section and select Enable users in IAM Identity Center. When you do, User and group assignment method options appear below. Turn on Require assignments. This means that you need to explicitly allow (add) users and groups to access your domain. Choose Update domain.
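The same setting can be sketched programmatically. This sketch assumes the DataZone `update_domain` operation and its `singleSignOn` structure. It also assumes that the console's Require assignments option corresponds to the `MANUAL` user assignment value, so verify that mapping against the API reference before relying on it. The helper names are hypothetical.

```python
def build_enable_sso_request(domain_id, require_assignments=True):
    """Build keyword arguments that turn on IAM Identity Center for a domain."""
    return {
        "identifier": domain_id,
        "singleSignOn": {
            "type": "IAM_IDC",
            # Assumption: MANUAL means users and groups must be added explicitly.
            "userAssignment": "MANUAL" if require_assignments else "AUTOMATIC",
        },
    }


def enable_identity_center(client, domain_id, require_assignments=True):
    """Apply the single sign-on settings using the given DataZone client."""
    return client.update_domain(
        **build_enable_sso_request(domain_id, require_assignments)
    )
```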

Now let’s add a group to the domain to provide its members with access. Back on your domain’s detail page, scroll to the bottom and choose the User management tab. Choose Add, and select Add SSO Groups from the drop-down.

- Enter the first letters of the group name and select it from the options. After you’ve added the desired groups, choose Add group(s).

- You can confirm that the groups are added successfully on the domain’s detail page, under the User management tab by selecting SSO Users and then SSO Groups from the drop-down.
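Adding groups can be scripted in a similar way. The following is a minimal sketch that assumes the DataZone `create_group_profile` operation and that you already know the IAM Identity Center group IDs. The function name `add_sso_groups` and any IDs you pass are illustrative.

```python
def add_sso_groups(client, domain_id, group_ids):
    """Add each IAM Identity Center group to the domain once, in order."""
    added = []
    # dict.fromkeys removes duplicate IDs while preserving their order.
    for group_id in dict.fromkeys(group_ids):
        client.create_group_profile(
            domainIdentifier=domain_id,
            groupIdentifier=group_id,
        )
        added.append(group_id)
    return added
```

The return value lists the group IDs that were submitted, which you can compare with what the User management tab shows.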

Step 2.3: Associate AWS accounts with the domain for segregated data publishing and consumption

Personas: Domain administrator and AWS account owners

Amazon DataZone supports a distributed AWS account structure, where data assets are segregated from data consumption (such as Amazon Athena usage), and data assets are in their own accounts (owned by their respective data owners). We call these associated accounts. Amazon DataZone and the other AWS services it orchestrates take care of the cross-account data sharing. To make this work, domain and account owners need to perform a one-time account association: the domain needs to be shared with the account, and the account owner needs to configure it for use with Amazon DataZone. For ATPCO, there are four desired associated accounts, three of which are the accounts with data assets stored in Amazon S3 and cataloged in AWS Glue (airline ticketing data, pricing data, and de-identified customer data), and a fourth account that is used for an analyst’s consumption.

The first part of associating an account is to share the Amazon DataZone domain with the desired accounts (Amazon DataZone uses AWS RAM to create the resource policy for you). In ATPCO’s case, their data platform team manages the domain, so a team member does these steps.

- To do this in the Amazon DataZone console, sign in to the domain account, navigate to the domain detail page, and then scroll down and choose the Associated Accounts tab. Choose Request association.

- Enter the AWS account ID of the first account to be associated.

- Choose Add another account and repeat the previous step for the remaining accounts to be associated. For ATPCO, there were four accounts to be associated.

- When complete, choose Request Association.
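Because the console creates an AWS RAM resource share behind the scenes, the request can be approximated as follows. Treat this as a rough sketch under stated assumptions: the domain ARN format shown is assumed, the share name is made up, and the console may attach a specific managed permission that this sketch does not set. The request is shaped for the AWS RAM `create_resource_share` operation. The function names are hypothetical.

```python
import re


def domain_arn(region, domain_account_id, domain_id):
    """Assumed ARN format for an Amazon DataZone domain."""
    return f"arn:aws:datazone:{region}:{domain_account_id}:domain/{domain_id}"


def build_association_share_request(region, domain_account_id, domain_id, account_ids):
    """Build keyword arguments for RAM CreateResourceShare."""
    invalid = [a for a in account_ids if not re.fullmatch(r"\d{12}", a)]
    if invalid:
        raise ValueError(f"not 12-digit AWS account IDs: {invalid}")
    return {
        "name": f"DataZone-{domain_id}-association",  # illustrative name
        "resourceArns": [domain_arn(region, domain_account_id, domain_id)],
        "principals": list(account_ids),
    }
```

You would pass the result to `boto3.client("ram").create_resource_share(**request)` from the domain account. For ATPCO, `account_ids` would hold the four accounts to be associated.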

The second part of associating an account is for the account owner to then configure their account for use by Amazon DataZone. Essentially, this process means that the account owner is allowing Amazon DataZone to perform actions in the account, like granting access to Amazon DataZone projects after a subscription request is approved.

- Sign in to the associated account and go to the Amazon DataZone console in the same Region as the domain. On the Amazon DataZone home page, choose View requests.

- Select the name of the inviting Amazon DataZone domain and choose Review request.

- Choose the Amazon DataZone blueprint you want to enable. We select Data Lake in this example because ATPCO’s use case has data in Amazon S3 and consumption through Amazon Athena.

- Leave the defaults as-is in the Permissions and resources section. The Glue Manage Access role allows Amazon DataZone to use IAM and Lake Formation to manage IAM roles and permissions to data lake resources after you approve a subscription request in Amazon DataZone. The Provisioning role allows Amazon DataZone to create S3 buckets and AWS Glue databases and tables in your account when you allow users to create Amazon DataZone projects and environments. The Amazon S3 bucket for data lake is where you specify which S3 bucket is used by Amazon DataZone when users store data with your account.

- Choose Accept & configure association. This will take you to the associated domains table for this associated account, showing which domains the account is associated with. Repeat this process for other to-be associated accounts.
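The blueprint configuration in the associated account can be sketched with the DataZone `list_environment_blueprints` and `put_environment_blueprint_configuration` operations. This is a hedged sketch: the role ARNs and bucket are placeholders, the `S3Location` regional parameter key is an assumption to verify, and the helper names are hypothetical. It does not cover accepting the association itself.

```python
def find_data_lake_blueprint_id(client, domain_id):
    """Look up the managed DefaultDataLake blueprint ID for the domain."""
    response = client.list_environment_blueprints(
        domainIdentifier=domain_id, managed=True, name="DefaultDataLake"
    )
    items = response.get("items", [])
    if not items:
        raise LookupError("DefaultDataLake blueprint not found")
    return items[0]["id"]


def build_blueprint_config(domain_id, blueprint_id, region,
                           manage_access_role_arn, provisioning_role_arn,
                           s3_location):
    """Build keyword arguments that enable the blueprint in one Region."""
    if not s3_location.startswith("s3://"):
        raise ValueError("s3_location must be an s3:// URI")
    return {
        "domainIdentifier": domain_id,
        "environmentBlueprintIdentifier": blueprint_id,
        "enabledRegions": [region],
        "manageAccessRoleArn": manage_access_role_arn,
        "provisioningRoleArn": provisioning_role_arn,
        # Assumption: the data lake bucket is passed under the key "S3Location".
        "regionalParameters": {region: {"S3Location": s3_location}},
    }
```

Pass the built request to `client.put_environment_blueprint_configuration(**request)`, where `client` is a DataZone client created with credentials for the associated account.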

After the associations are configured by accounts, you will see the status reflected in the Associated accounts tab of the domain detail page.

Step 2.4: Set up environment profiles in the domain

Persona: Domain administrator

The final step to prepare the domain is making the associated AWS accounts usable by Amazon DataZone domain users. You do this with an environment profile, which helps less technical users get started publishing or consuming data. It’s like a template, with pre-defined technical details like blueprint type, AWS account ID, and Region. ATPCO’s data platform team set up an environment profile for each associated account.

To do this in the Amazon DataZone console, the data platform team member signs in to the domain account, navigates to the domain detail page, and chooses Open data portal in the upper right to go to the web-based Amazon DataZone portal.

- Choose Select project in the upper left next to the DataZone icon and select Create Project. Enter a name, like Domain Administration, and choose Create. This will take you to your new project page.

- In the Domain Administration project page, choose the Environments tab, and then choose Environment profiles in the navigation pane. Select Create environment profile.

- Enter a name, such as Sales – Data lake blueprint.

- Select the Domain Administration project as owner, and the DefaultDataLake as the blueprint.

- Select the AWS account with sales data as well as the preferred Region for new resources, such as AWS Glue and Athena consumption.

- Leave All projects and Any database selected.

- Finalize your selection by choosing Create Environment Profile.

Repeat this step for each of your associated accounts. As a result, Amazon DataZone users will be able to create environments in their projects to use AWS resources in specific AWS accounts for publishing or consumption.
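Repeating the profile creation for every associated account is a natural candidate for a loop. The following sketch assumes the DataZone `create_project` and `create_environment_profile` operations and a blueprint ID you have already looked up. The function name, the account labels, and the profile naming pattern are illustrative.

```python
def create_admin_project_and_profiles(client, domain_id, blueprint_id, region, accounts):
    """Create one admin project, then one environment profile per account.

    accounts maps a human-readable label (for example "Sales") to an
    AWS account ID. Returns the project ID and a label-to-profile-ID map.
    """
    project_id = client.create_project(
        domainIdentifier=domain_id,
        name="Domain Administration",
    )["id"]

    profile_ids = {}
    for label, account_id in accounts.items():
        response = client.create_environment_profile(
            domainIdentifier=domain_id,
            name=f"{label} - Data lake blueprint",
            projectIdentifier=project_id,
            environmentBlueprintIdentifier=blueprint_id,
            awsAccountId=account_id,
            awsAccountRegion=region,
        )
        profile_ids[label] = response["id"]
    return project_id, profile_ids
```

Keeping the account labels in one dictionary makes it easy to add a profile later when a new account is associated with the domain.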

Part 3: Publish assets

With Part 2 complete, the domain is ready for publishers to sign in and start publishing the first data assets to the business data catalog so that potential data consumers can find relevant assets to help them with their analyses. We’ll focus on how ATPCO published their first data asset for internal analysis: sales data from their airline customers. ATPCO already had the data extracted, transformed, and loaded in a staged S3 bucket and cataloged with AWS Glue.

Step 3.1: Create a project

Persona: Data publisher