Testing out Azure Storage Mover

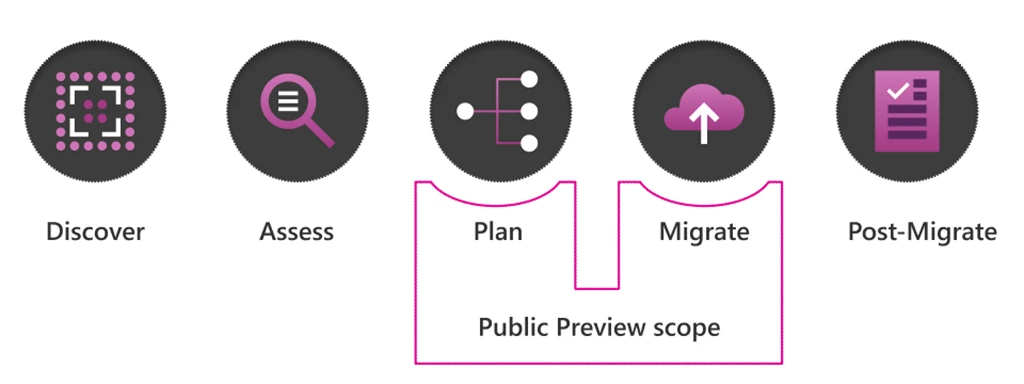

I recently noticed that Azure Storage Mover is now Generally Available. This is a service I’ve not used before, but migrating storage to Azure is something I am often involved with, so I was keen to test it out and explore the setup and usage with a test migration. In this post I will give an overview of Storage Mover, show how it is set up, and run through a simple test migration.

What is Azure Storage Mover?

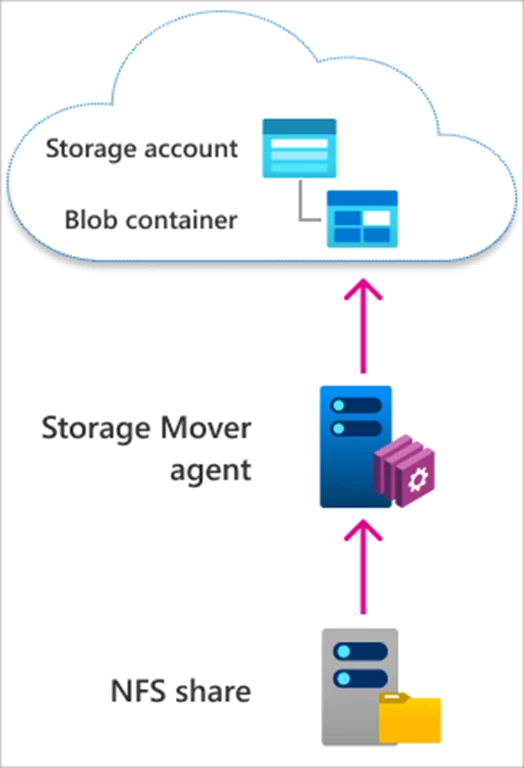

Azure Storage Mover is a service that enables the migration of files and folders to Azure Storage, whilst minimizing downtime for your workload. Storage Mover can be used for a variety of migration requirements and scenarios.

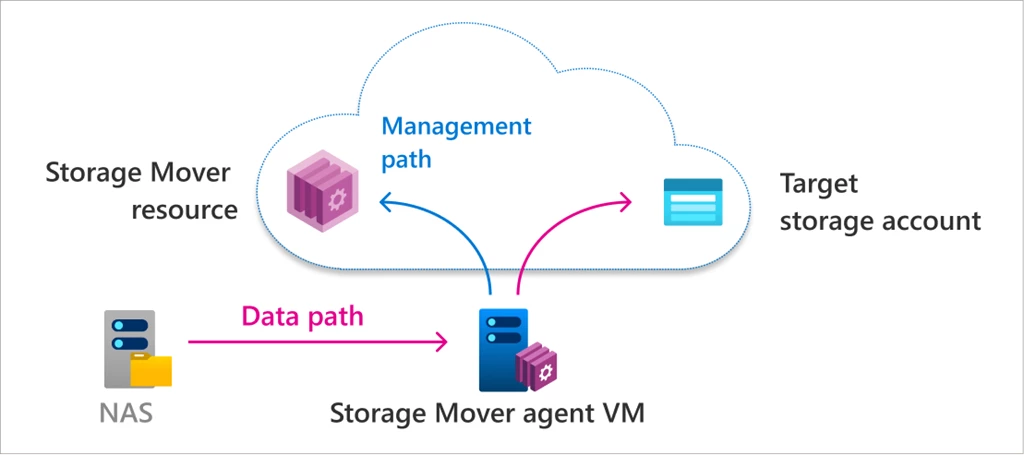

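Azure Storage Mover is a Hybrid Cloud Service: it comprises a Cloud component (the Storage Mover resource in Azure) and an Infrastructure component (an agent VM deployed in your own environment, alongside the source storage). To use it, we need to deploy both components.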

What are the Supported Sources and Targets for a Move?

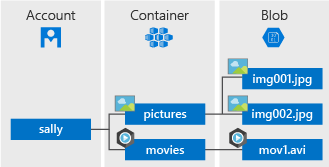

At the time of writing, Azure Storage Mover supports migrating NFS shares to an Azure Blob Container. For an overview of the supported sources and targets, see here: https://learn.microsoft.com/en-gb/azure/storage-mover/service-overview#supported-sources-and-targets.

Setup & Testing

To test out the service, I will be using a simple setup comprising:

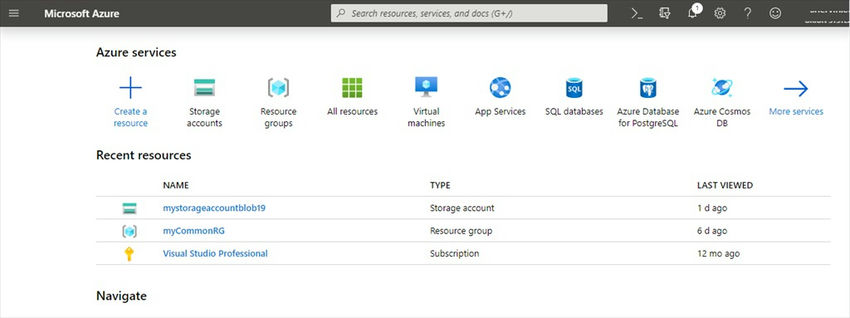

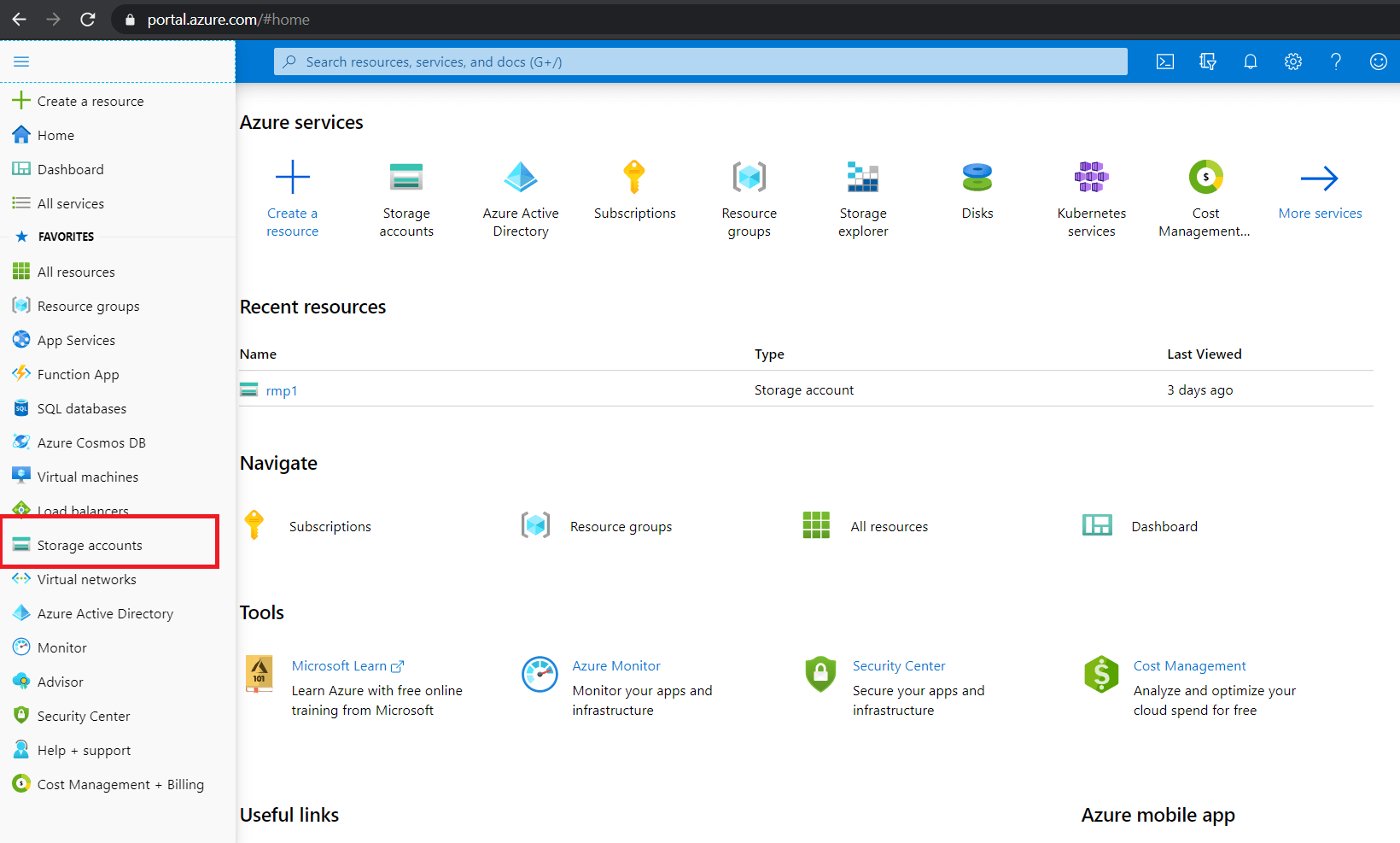

An Azure Subscription

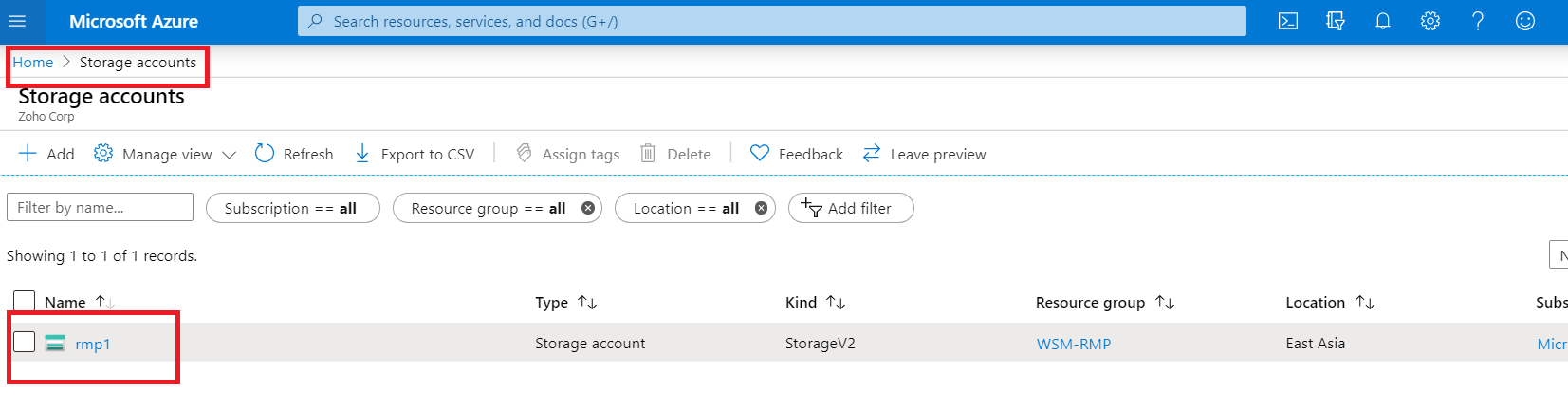

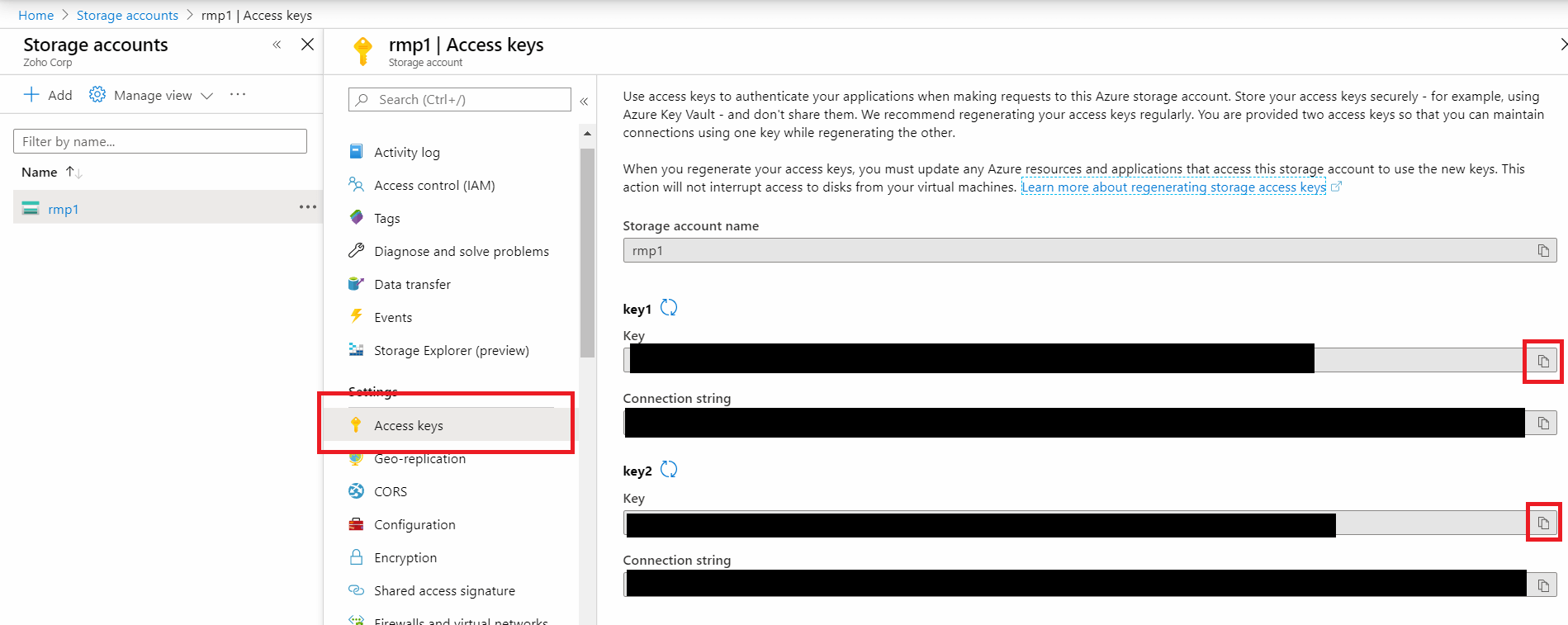

An Azure Storage Account with a Blob Container

An NFS Share hosted on a Windows Virtual Machine (within my Lab)

A Storage Mover Agent deployed within my Lab

To start testing, I will first browse to my NFS share and show that it contains some data:

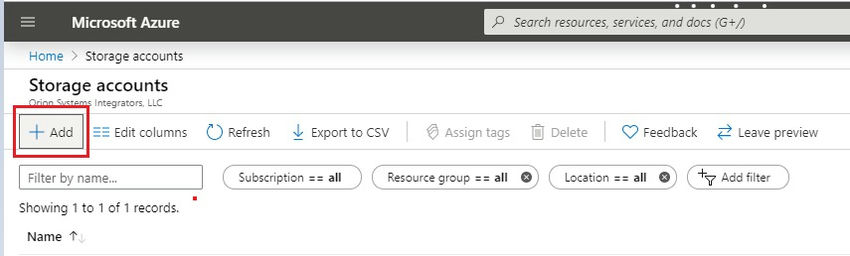

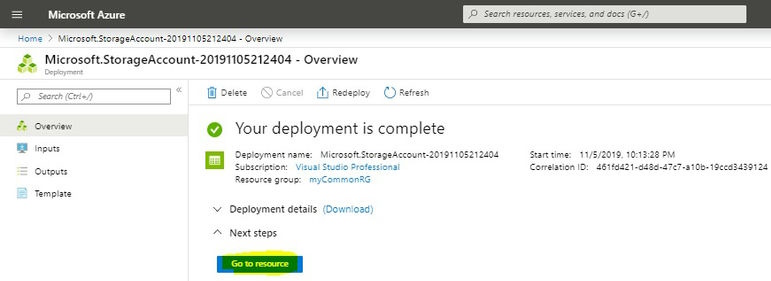

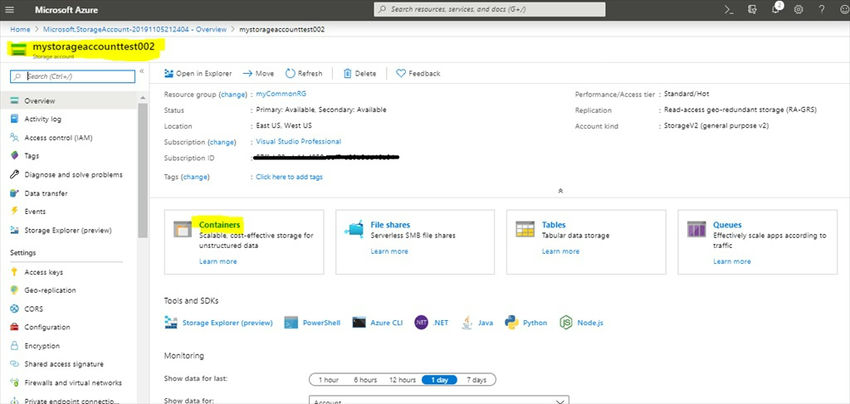

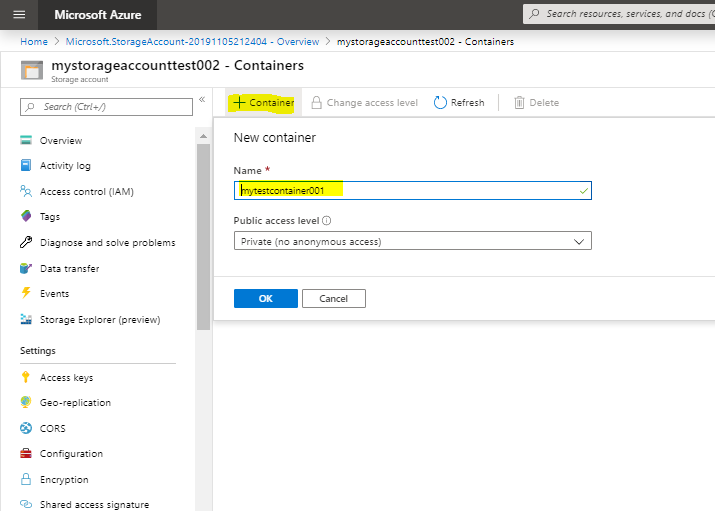

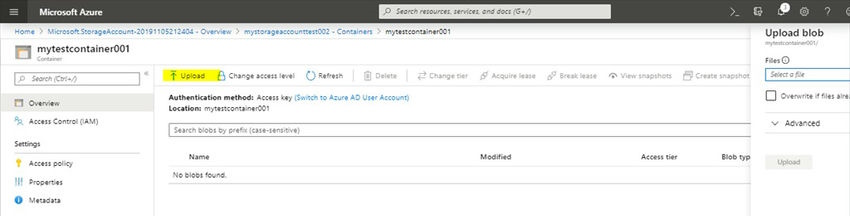

I also have an Azure Storage Account with a Blob Container set up. This is currently empty, and will be the target destination for the data above:

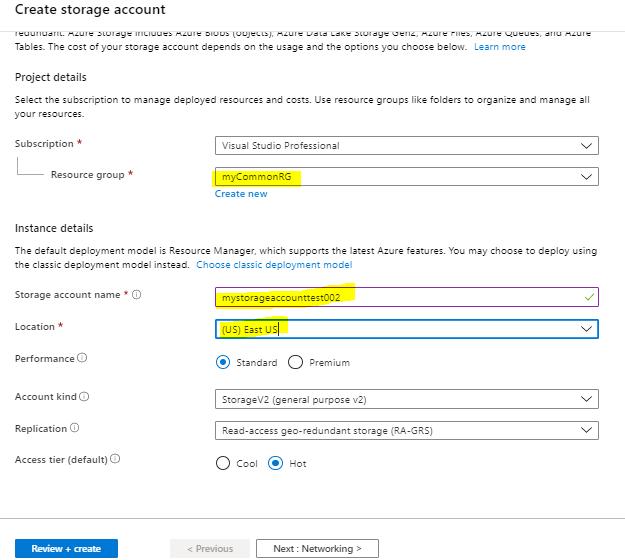

Creating the Storage Mover

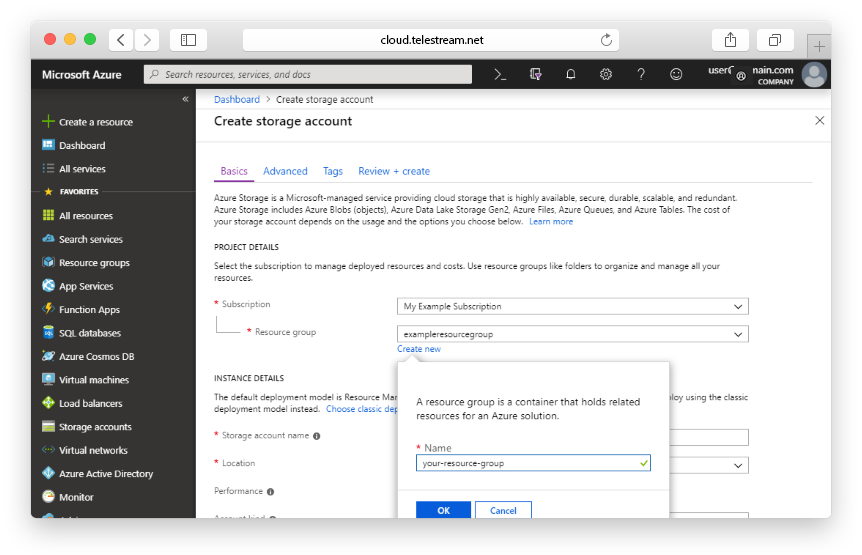

Next we need to deploy the Azure Storage Mover Resource – this is done from within the Azure Portal:

Click on “Create storage mover”:

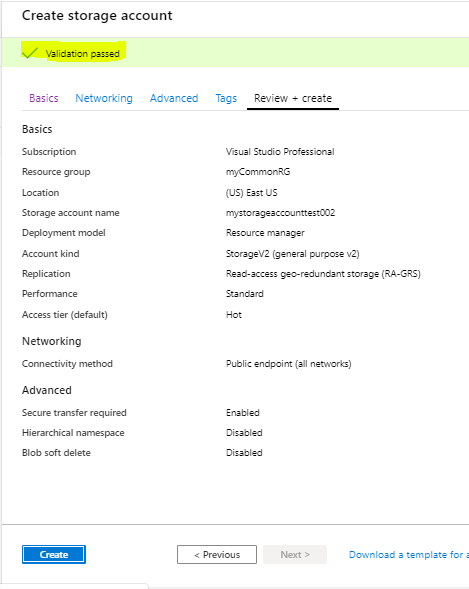

We can then fill in a few basic details to begin:

There is also an option to add Monitoring to Storage Mover – which enables metrics and logs to be collected:

Once this is done we can move onto the “Review + create” pane, and then create the Resource. Once created, we can see our Storage Mover:

We can now move on to the Agent Deployment. The agent is how our Storage Mover Resource is able to communicate with our on-premises storage shares and devices.

Agent Deployment

Storage Mover makes the Agent deployment a simple process – from the Storage Mover overview, click on “Register agent”, as shown below:

You’ll then be prompted to download the Agent:

The link shown above will take you to a Microsoft download page that allows you to download the VHDX for the Agent VM. Once this has downloaded, we need to deploy it within our environment. My Lab environment uses Hyper-V, so the process is simple. If you need guidance on deploying the VHDX to Hyper-V, see this guide: https://learn.microsoft.com/en-gb/azure/storage-mover/agent-deploy?tabs=xdmshell.

At this point, it is also worth noting that the Storage Mover agent VM requires unrestricted outbound internet connectivity. There is also a table of recommended CPU cores and RAM that needs consideration, depending on the scale of the migration: https://learn.microsoft.com/en-gb/azure/storage-mover/agent-deploy?tabs=xdmshell#recommended-compute-and-memory-resources. A minimum of 20 GB of disk space is also required, with potentially more needed for a large migration.
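Before importing the VHDX, it can be worth confirming that the host volume has room for the agent. The Python sketch below is a minimal pre-flight check, assuming you run it on the Hyper-V host against the folder where you plan to store the VHDX. The function name `has_enough_disk` and its 20 GB default are my own choices based on the minimum above; it does not check CPU, RAM, or internet connectivity.

```python
import shutil

# Minimum free disk space stated for the Storage Mover agent VM.
MIN_AGENT_DISK_GB = 20


def has_enough_disk(path: str, min_gb: float = MIN_AGENT_DISK_GB) -> bool:
    """Return True if the volume containing `path` has at least `min_gb` free.

    `path` is assumed to be the (hypothetical) folder on the Hyper-V host
    where the agent VHDX will live. 1 GB is treated as 1024**3 bytes here,
    which errs on the safe side.
    """
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return usage.free >= min_gb * 1024**3
```

For example, `has_enough_disk(r"D:\Hyper-V")` would check the volume holding a hypothetical `D:\Hyper-V` folder; for a large migration you would raise `min_gb` in line with the sizing guidance.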

Once the VM is deployed and booted, we can connect to it via SSH using the default credentials (admin/admin), at which point we are prompted to change the password. Note that you can use Hyper-V to check the IP address you need to connect to!

We can now start the process of Registering the agent. To do this, select “4” from the menu, which starts the Registration process. Once you select this option and press enter, you will be prompted to enter the following information:

Tenant ID

Subscription ID

Resource Group Name

Storage Mover Name

Whether Private Link is required
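Several of these values are easy to mistype, so it can help to sanity-check them before pasting them into the registration prompt. The sketch below is a hypothetical helper, not part of the agent: it assumes the Tenant ID and Subscription ID are in the standard GUID form, and applies a simplified version of Azure's resource group naming rule (up to 90 letters, digits, underscores, hyphens, periods and parentheses, not ending in a period). It only checks that the Storage Mover name is non-empty, since I have not confirmed that resource's exact naming rules.

```python
import re

# Canonical GUID form, e.g. 11111111-2222-3333-4444-555555555555
GUID_RE = re.compile(r"[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}")
# Simplified resource group rule: 1-90 word characters, hyphens, periods, parentheses.
RG_RE = re.compile(r"[\w\-.()]{1,90}")


def check_registration_inputs(tenant_id: str, subscription_id: str,
                              resource_group: str, storage_mover: str) -> list[str]:
    """Return a list of problems found; an empty list means the inputs look sane."""
    problems = []
    if not GUID_RE.fullmatch(tenant_id):
        problems.append("Tenant ID is not a GUID")
    if not GUID_RE.fullmatch(subscription_id):
        problems.append("Subscription ID is not a GUID")
    # Resource group names may not end in a period.
    if not RG_RE.fullmatch(resource_group) or resource_group.endswith("."):
        problems.append("Resource group name is not valid")
    if not storage_mover.strip():
        problems.append("Storage Mover name is empty")
    return problems
```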

Once completed – you will see the following:

Our Agent is now Registered:

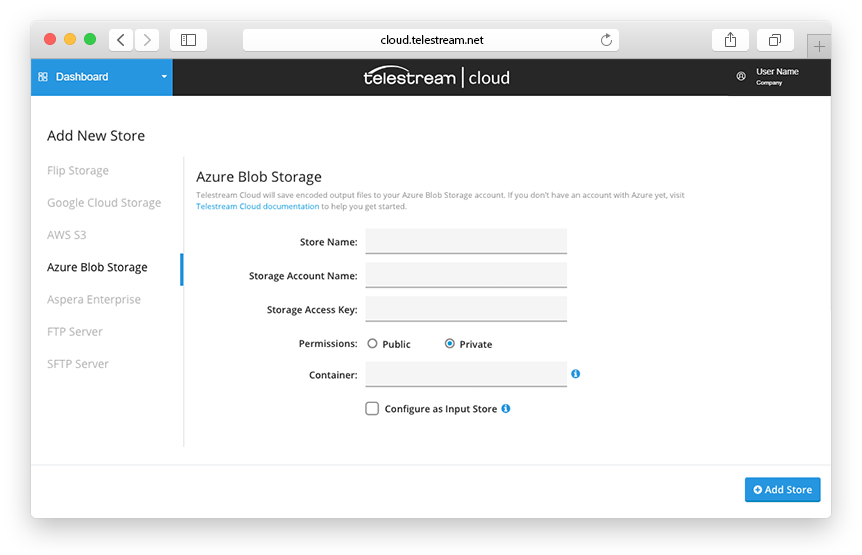

We can now set up our Storage Endpoints within Storage Mover, before we move on to the Migration.

Adding our Storage Endpoints

We can now add our Storage Endpoints to Storage Mover, so that we have our source and destination endpoints configured. We will start with our target endpoint – to do this, visit your Storage Mover in the Portal, and click on “Storage endpoints”, then “Create endpoint”, and select “Create target endpoint”:

We are then prompted to fill in some details – as you can see I have selected my Subscription, Storage Account, and Container, and provided a description:

Once these details are added, click on “Create”. After a few moments, our endpoint will then be added:
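Behind the portal form, the target endpoint points at a blob container inside a storage account. As an illustration, the sketch below builds the standard Azure Resource Manager ID for such a container and applies the naming rules for storage accounts (3 to 24 lowercase letters and digits) and containers (3 to 63 lowercase letters, digits and single hyphens, starting and ending with a letter or digit). The function `container_resource_id` is hypothetical and only shows the Subscription, Resource Group, Storage Account and Container hierarchy selected in the form.

```python
import re

# Storage account names: 3-24 characters, lowercase letters and digits only.
ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")
# Container names: lowercase letters, digits and single hyphens, starting and
# ending with a letter or digit (the 3-63 length is checked separately).
CONTAINER_RE = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def container_resource_id(subscription_id: str, resource_group: str,
                          account: str, container: str) -> str:
    """Build the ARM resource ID of a blob container after validating its names."""
    if not ACCOUNT_RE.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    if not (3 <= len(container) <= 63 and CONTAINER_RE.fullmatch(container)):
        raise ValueError(f"invalid container name: {container!r}")
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account}"
        f"/blobServices/default/containers/{container}"
    )
```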

Our Target endpoint is now added, so we can move on to adding our Source endpoint. To do this, click on the “Create endpoint” drop-down and select “Create source endpoint”:

This will bring up a window for us to add the details of our source NFS Share:

Once the details are added, we can click on “Create”, and our Source will be added:
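Since the agent needs network access to the NFS server, it can be worth checking that the server is reachable before starting a job. The sketch below is a simple TCP reachability test, assuming NFS is listening on its standard port 2049 and that you run it from a machine on the same network segment as the agent. The function `nfs_port_reachable` is hypothetical; it only confirms the port is open and does not verify export permissions or that the share can actually be mounted (NFSv3 additionally relies on rpcbind and mountd).

```python
import socket

NFS_PORT = 2049  # standard NFS port


def nfs_port_reachable(host: str, port: int = NFS_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and opens a TCP socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False
```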

We can now move onto the Migration!

Migration

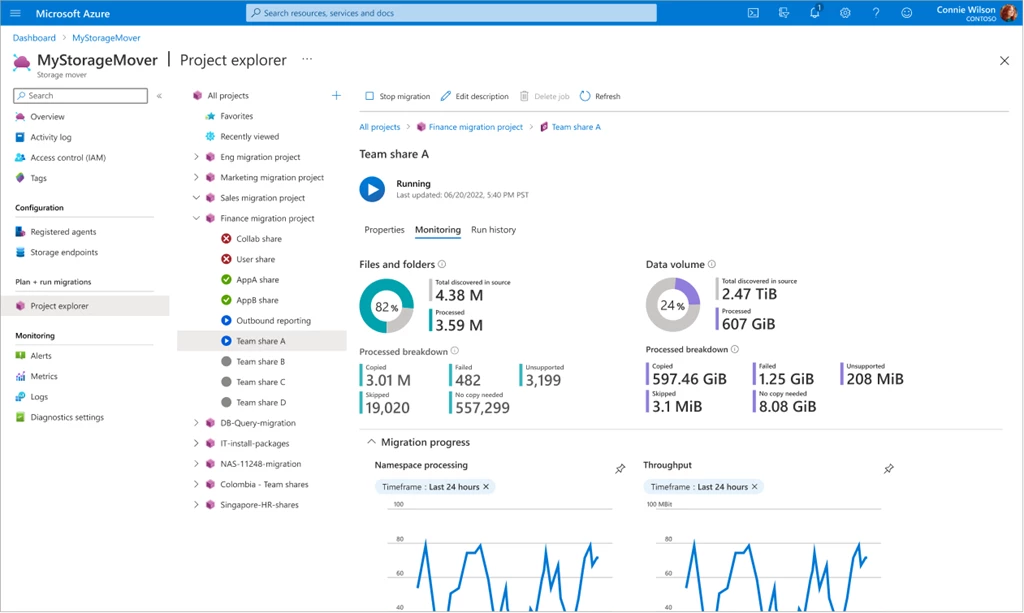

Once our Agent and our Source and Target endpoints are set up, Migration is a straightforward process with Storage Mover. We next need to create a Project, and then a Job within this Project, which we can do from the main Storage Mover window. To get started, click on “create project”:

This will bring up the Project explorer window, where we need to click on “create project” again to start. We can then fill in basic details and click on “create”:

We can now create a job definition within this project:

We can now fill in the job definition details – there are a few screens for this, which are shown below:

Our job is now set up, and we can review it from the Project explorer. Once we are happy with the details shown, we can click on “Start job”:
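Conceptually, a job definition ties a source endpoint to a target endpoint inside a project, which in turn sits inside the Storage Mover resource. The sketch below models that hierarchy as a hypothetical Python dataclass and builds the corresponding Azure Resource Manager path. The `Microsoft.StorageMover` path segments and the `Additive`/`Mirror` copy-mode values reflect my understanding of the REST API (surfaced in the portal as merge and mirror options) and should be checked against the current API reference before relying on them.

```python
from dataclasses import dataclass
from enum import Enum


class CopyMode(Enum):
    # Assumed API values for the portal's copy options; verify against the docs.
    MERGE = "Additive"  # merge source into target
    MIRROR = "Mirror"   # mirror source to target


@dataclass(frozen=True)
class JobDefinition:
    """Hypothetical local model of a job definition within a project."""
    storage_mover: str
    project: str
    name: str
    source_endpoint: str
    target_endpoint: str
    copy_mode: CopyMode = CopyMode.MERGE  # defaulted here for illustration only

    def resource_id(self, subscription_id: str, resource_group: str) -> str:
        # Storage Mover > project > job definition, expressed as an ARM path.
        return (
            f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/Microsoft.StorageMover/storageMovers/{self.storage_mover}"
            f"/projects/{self.project}/jobDefinitions/{self.name}"
        )
```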

The job will then show as “Queued”, whilst the agent picks up the job:

Once the operation has completed, you will see the overview screen that details how the job went. Note that I added a few more files to my share, to increase the number of files/objects, after I took the “source” screenshot at the start of this post.
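If you later script migrations rather than watching the portal, a common pattern is to poll the job run until it reaches a final state. The helper below is a generic sketch: `get_status` is a hypothetical callable you would implement with an Azure SDK or REST call, and apart from “Queued” (which the portal shows while the agent picks up the job) the status names in `TERMINAL_STATES` are assumptions to verify against the API reference.

```python
import time
from typing import Callable, Iterable

# Assumed terminal job-run states; check the real values in the API reference.
TERMINAL_STATES = frozenset({"Succeeded", "Failed", "Canceled"})


def wait_for_job(get_status: Callable[[], str],
                 interval_s: float = 30.0,
                 timeout_s: float = 3600.0,
                 terminal: Iterable[str] = TERMINAL_STATES,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll `get_status` until it returns a terminal state, then return that state.

    Raises TimeoutError if no terminal state is seen within `timeout_s`.
    `sleep` is injectable so the loop can be tested without waiting.
    """
    terminal = frozenset(terminal)
    waited = 0.0
    while True:
        status = get_status()
        if status in terminal:
            return status
        if waited >= timeout_s:
            raise TimeoutError(f"job still {status!r} after {waited:.0f}s")
        sleep(interval_s)
        waited += interval_s
```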

We can now browse to our Storage Account and confirm that files are showing as expected. I can see the test files I added below:
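Browsing the container works for a small test, but for a larger share a scripted comparison is more reliable. The sketch below assumes the source share is mounted locally at `source_root` and that the job copied the share root to the root of the container, so a file `dir/a.txt` becomes the blob `dir/a.txt`. The blob names could come from, for example, `ContainerClient.list_blobs()` in the `azure-storage-blob` package (each item has a `.name`); the function `missing_in_target` itself is hypothetical.

```python
import os
from typing import Iterable


def missing_in_target(source_root: str, blob_names: Iterable[str]) -> set[str]:
    """Return relative paths of files under `source_root` with no matching blob name."""
    expected = set()
    for dirpath, _dirnames, filenames in os.walk(source_root):
        for fname in filenames:
            rel = os.path.relpath(os.path.join(dirpath, fname), source_root)
            expected.add(rel.replace(os.sep, "/"))  # blob names use "/" separators
    return expected - set(blob_names)
```

An empty set means every source file has a blob with the same path; extra blobs in the container are ignored, because the check only looks for files that are missing.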

This concludes my test migration. In a production scenario there is likely to be significantly more data, and more shares, but this post hopefully demonstrates how simply a migration can be done using Storage Mover and, in particular, how it can be used to manage a number of migration jobs across different sources and targets.