Step by Step Azure Stack Edge – Azure Data Box Gateway for a Hybrid Cloud

Azure Data Box Gateway: what is the difference between Azure File Sync, an Azure file share, a StorSimple, and now a Data Box? As you may know, an Azure Data Box is the ultimate device to bring data fast to the Azure cloud.

This blog was long pending, as I did many Azure migrations and new stuff came up every time. Now that there is an Azure Data Box Gateway that you can run on your favorite hypervisor, Hyper-V, you can create a virtual instance to bring your data to an Azure storage account. Nowadays there is a lot of overlap between products.

- Azure File Sync syncs your data to an Azure Storage account (automatic sync).

- Azure Files uses net use to connect to a storage account (manual copy, writes directly to Azure).

- StorSimple (old but still seen in the wild)

- Azure Data Box Gateway

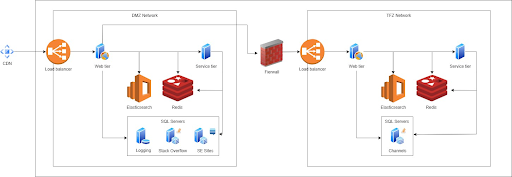

One of the primary advantages of Data Box Gateway is the ability to continuously ingest data into the device to copy to the cloud, regardless of the data size. Keep in mind this is not a file server replacement. My first impression is that it could replace a StorSimple, although that may not be its goal, since you could also run a virtual StorSimple.

As the data is written to the gateway device, the device uploads the data to Azure Storage. When local usage reaches a certain threshold, the device automatically manages storage by removing files locally while retaining their metadata. Keeping a local copy of the metadata enables the gateway device to upload only the changes when a file is updated. Keep in mind the Azure Storage account limits: https://docs.microsoft.com/nl-nl/azure/databox-online/data-box-gateway-limits#azure-object-size-limits
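To make the tiering behavior described above a bit more concrete, here is a toy model in Python. It is only a sketch of the idea (evict local content once a threshold is crossed, keep the metadata), not the algorithm the device actually runs. The class names, the byte threshold, and the oldest-first eviction order are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class CachedFile:
    size: int               # file size in bytes
    uploaded: bool = False  # True once the content is in Azure Storage
    local: bool = True      # False once the local content has been evicted


@dataclass
class GatewayCache:
    threshold: int                             # hypothetical local capacity in bytes
    files: dict = field(default_factory=dict)  # name -> CachedFile (the metadata)

    def local_bytes(self) -> int:
        # Only files whose content is still on the local disk count.
        return sum(f.size for f in self.files.values() if f.local)

    def write(self, name: str, size: int) -> None:
        # A new or changed file always lands on the local disk first.
        self.files.pop(name, None)
        self.files[name] = CachedFile(size)
        self._evict()

    def upload_pending(self) -> list:
        # Only files that are not yet in the cloud are uploaded.
        pending = [n for n, f in self.files.items() if not f.uploaded]
        for name in pending:
            self.files[name].uploaded = True
        self._evict()
        return pending

    def _evict(self) -> None:
        # Drop local content of uploaded files, oldest first, until the
        # cache fits under the threshold again. Metadata entries stay.
        for f in self.files.values():
            if self.local_bytes() <= self.threshold:
                break
            if f.uploaded and f.local:
                f.local = False
```

In this model, writing a second 60-byte file into a 100-byte cache after the first one has been uploaded evicts the first file's content, while its entry (the metadata) stays in `files`.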

There is a thin line between the products, and I must say I was impressed by the speed of the upload: it was fast, and I could use the whole bandwidth.

So let us start building.

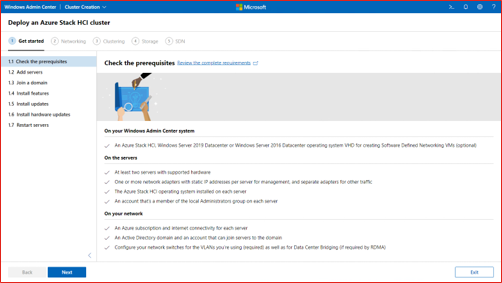

To create any Azure Stack Edge / Data Box Gateway resource, you should have permissions as a contributor (or higher) scoped at resource group level. You also need to make sure that the Microsoft.DataBoxEdge resource provider is registered.

In the Azure portal we go to the Data Box Gateway.

Select Add to create a new box. Below is the Data Box blade, not the Gateway option.

Selecting the Data Box Gateway gives you the option to select the hypervisor. This option is not available for the Data Box.

I used the hypervisor option.

Here we pick the Data Box Gateway. The cost is $105 per month, not a big price.

We create a resource group, as for all Azure resources, and pick a location.

- Subscription: PAYG-Azure Sponsorship

- Resource group: rg-databox-gw-001

- Name: mvp-databox-gateway-001

- Region: West Europe

With the details above, the setup in Azure is easy.

Now that the Azure Data Box Gateway is bought in the Marketplace, we can set up the device. First we need to download the VHDX file for our VM.

So we download the image of roughly 5 GB and use it on our Hyper-V server.

On the Download image tile, select the virtual device image corresponding to the operating system on the host server used to provision the VM. The image files are approximately 5.6 GB.

Extract the file and use the VHDX for a Generation 2 VM.

Some basic specs for the VM

I played with the settings a bit to see if I could lower the VM's specs. You will see that later in a screenshot.

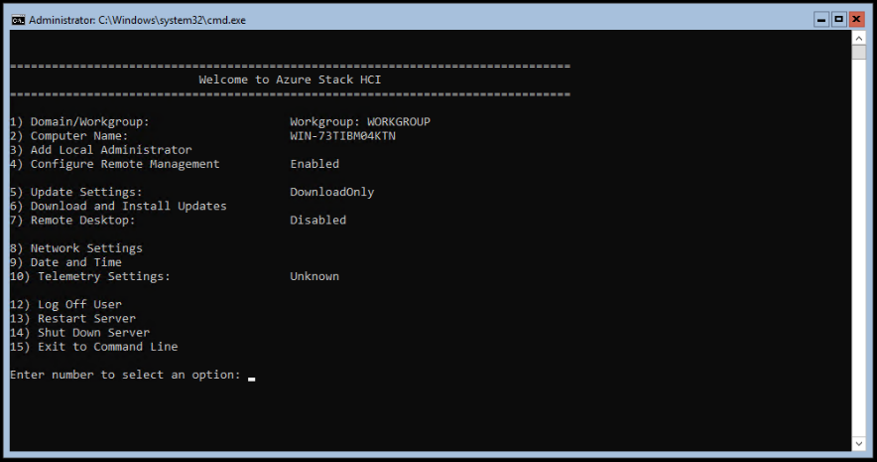

You may have to wait 10-15 minutes for the device to be ready. A status message is displayed on the console to indicate the progress. After the device is ready, go to Action. Press Ctrl + Alt + Delete to sign in to the virtual device.

The default user is EdgeUser and the default password is Password1.

As you can see, I first used 1 CPU. The setup stopped, so I changed it to 8 CPUs and 8 GB of memory.
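A quick pre-flight check could have caught the undersized VM before the setup stalled. The sketch below is a minimal example under stated assumptions: the minimum values (4 virtual processors, 8 GB of memory) are what I believe the documented minimums to be, but treat them as assumptions and verify them against the official system requirements. The function name is hypothetical.

```python
# Assumed minimums; verify against the official system requirements.
MIN_VCPUS = 4
MIN_MEMORY_GB = 8


def check_vm_specs(vcpus: int, memory_gb: float) -> list:
    """Return a list of human-readable problems; empty means the specs pass."""
    problems = []
    if vcpus < MIN_VCPUS:
        problems.append(f"{vcpus} vCPU(s) is below the assumed minimum of {MIN_VCPUS}")
    if memory_gb < MIN_MEMORY_GB:
        problems.append(f"{memory_gb} GB memory is below the assumed minimum of {MIN_MEMORY_GB}")
    return problems


if __name__ == "__main__":
    # The first attempt from this post (1 CPU) versus the final settings.
    print(check_vm_specs(1, 8))
    print(check_vm_specs(8, 8))
```

With these assumed thresholds, the 1 CPU attempt is flagged and the 8 CPU / 8 GB configuration passes.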

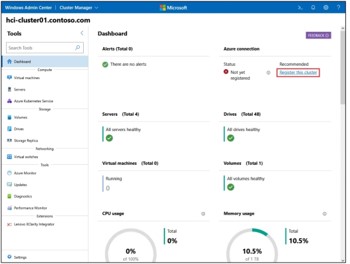

Now that the VM is set up, we can go to the management page that runs on the device IP.

Sign in using the default password Password1.

Change the password to something that you can remember.

There are not many settings you can change locally, only things like the time and IP address, or stopping and rebooting the device. The configuration itself is done from the Azure portal.

The one thing that is needed is to activate the VM.

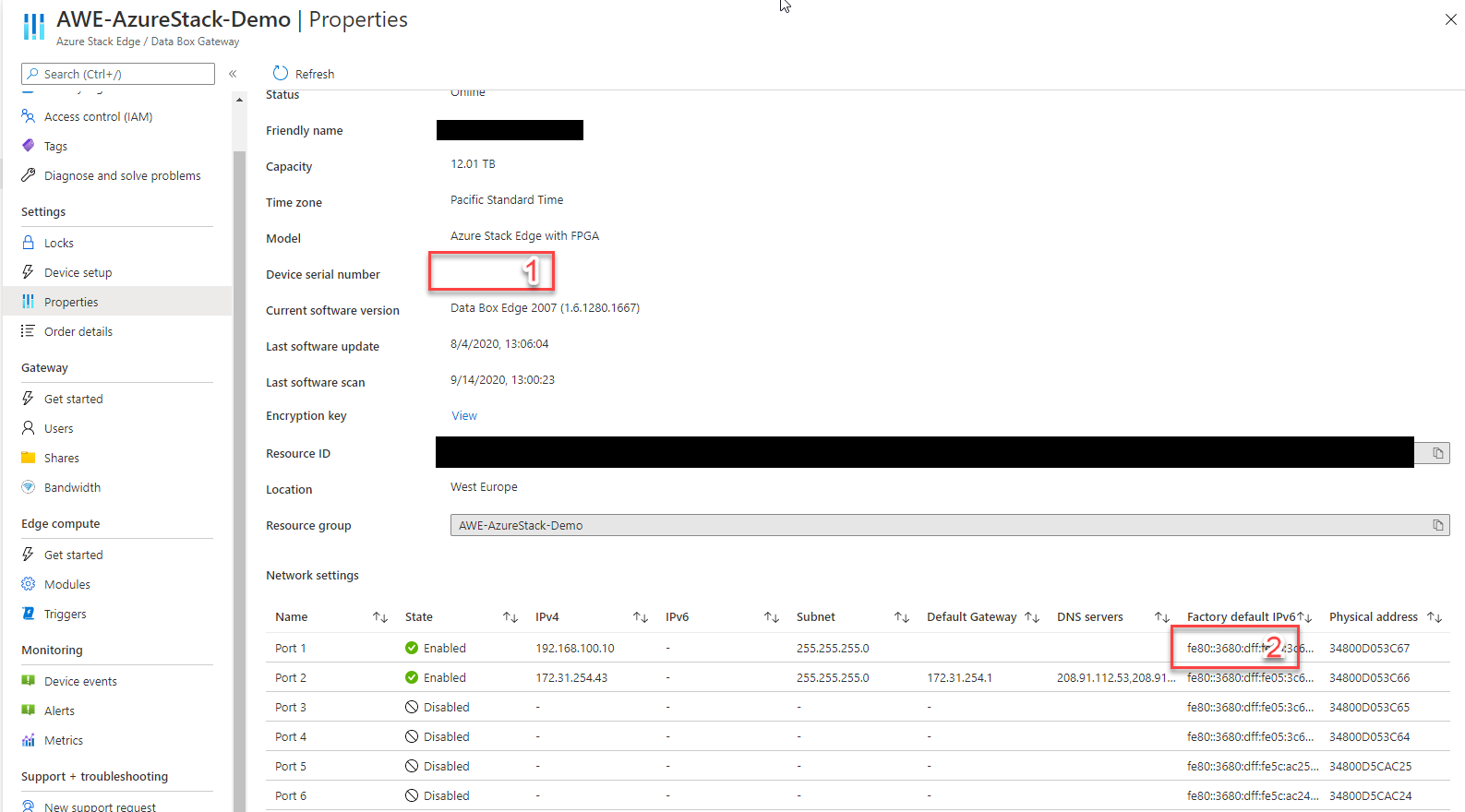

In the portal you can set the name and get the key.

Generate a key, and use the key vault name in case you lose the key.

After activating the device with the key, the device is live!

There are 3 modes for the device; I used the fully connected setting.

There are some diagnostics in the VM, and for now it all looks good.

Our next steps are creating a share and an extra user, and testing some performance.

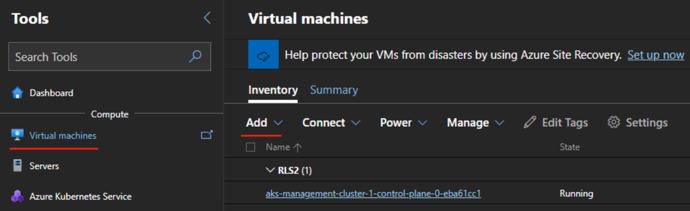

We add a user that can be used to connect to the share, as it is not joined to AD or AADDS.

Our next step is to create a share.

Make sure the storage account where the files need to land is already created.

In the Azure portal, select your Data Box Gateway resource and then go to Overview. Your device should be online. Select + Add share on the device command bar.

You’re notified that the share creation is in progress. After the share is created with the specified settings, the Shares tile updates to reflect the new share.

Connect to the SMB share

On your Windows Server client connected to your Data Box Gateway, connect to an SMB share by entering the commands:

In a command window, type:

net use \\<IP address of the device>\<share name> /u:<user name for the share>

Enter the password for the share when prompted. In my case:

net use * \\192.168.1.96\agwfiles001 /u:mvpadmin
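If you connect several clients or shares, it can help to generate the command instead of typing it each time. The helper below is a small sketch that builds the same `net use` line as above; the function name `build_net_use` is hypothetical, and it only assembles the string (it does not run the command or handle the password prompt).

```python
import ipaddress


def build_net_use(device_ip: str, share: str, user: str, drive: str = "*") -> str:
    """Build a 'net use' command line for an SMB share on the gateway."""
    # Raises ValueError if device_ip is not a valid IP address.
    ipaddress.ip_address(device_ip)
    unc_path = "\\\\" + device_ip + "\\" + share  # \\<ip>\<share>
    return f"net use {drive} {unc_path} /u:{user}"


if __name__ == "__main__":
    # Values taken from the example in this post.
    print(build_net_use("192.168.1.96", "agwfiles001", "mvpadmin"))
```

The default `drive="*"` lets Windows pick the next free drive letter, as in the example above.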

Now that the device is up and running, we can push some data to the cloud. The gateway is the man in the middle: the extra drive holds the files, which are then transferred to Azure.

I had no limit set, and I was surprised that it could eat the full line. This makes it more fun.

That was just a few files; I need more files to really test this. And let me set some bandwidth limits.

Setting a limit of 200 Mbps did limit the speed.

I think I need to play with this a bit more, as the 200 Mbps limit is not really working; it looks more like 200 Mbps is what I have left over. But there is a schedule, which is really nice: these files or backups can be transferred during the night hours at high speed.
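To get a feel for what a bandwidth limit and a night schedule mean in practice, here is a small back-of-the-envelope sketch. It assumes decimal units (1 GB = 8000 megabits) and ignores protocol overhead. The schedule format, a list of `(start_hour, end_hour, mbps)` tuples with `None` meaning unlimited, is a made-up structure for illustration and not the portal's actual schedule format.

```python
def transfer_seconds(size_gb: float, mbps: float) -> float:
    """Ideal transfer time in seconds for size_gb at a sustained rate of mbps."""
    if mbps <= 0:
        raise ValueError("mbps must be positive")
    return size_gb * 8000 / mbps  # 1 GB = 8000 megabits (decimal units)


def limit_at(hour: int, schedule: list, default_mbps):
    """Return the limit that applies at a given hour (0-23); None means unlimited."""
    for start, end, mbps in schedule:
        if start <= end:
            active = start <= hour < end
        else:
            # Window wraps around midnight, e.g. 22:00 to 06:00.
            active = hour >= start or hour < end
        if active:
            return mbps
    return default_mbps


if __name__ == "__main__":
    night_unlimited = [(22, 6, None)]  # hypothetical: no limit during the night
    print(transfer_seconds(100, 200) / 3600, "hours for 100 GB at 200 Mbps")
    print(limit_at(23, night_unlimited, 200), limit_at(12, night_unlimited, 200))
```

For example, 100 GB at a sustained 200 Mbps takes 4000 seconds, a little over an hour, which is why moving large backups to an unrestricted night window is attractive.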

Now back to no limit.

Yep, it is working, and I think I need a bigger internet line. Remember this?

If only we had Azure in those days.

Deleting the files from the gateway did not remove them from the storage account, so the account works as a nice archive. If you need to copy a large number of files, then this is a great solution, and cheaper than the big Data Box.