One of the key criteria by which an application is judged is its downtime.

In this blog post, we’ll discuss Blue-Green Deployment, a release strategy that enables near-zero downtime. As developers, most of us have faced a situation where an updated version of our application draws customer complaints for being slow or buggy. This usually leads to rolling back to the previous, stable version. But is rollback easy?

As you might have guessed, rollback isn’t as easy as it sounds. When it is implemented by redeploying an older version from scratch, it takes a lot of time, during which we can lose many customers in real time. Moreover, even if the bug is small, there is no time to fix it, so we are forced back to the stable version. So how do we solve this problem?

This post will cover:

- Overview

- Step-by-Step Blue Green Testing Model

- Achieving Blue-Green Deployment via AWS Tools & Services

- Advantages of Blue-Green Deployments

- Disadvantages of Blue-Green Deployments

- Alternatives for Blue-Green Deployment

Overview

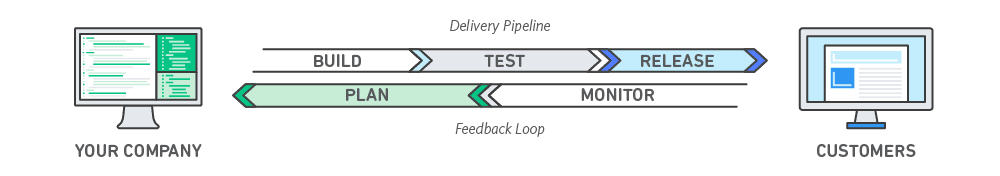

This is where Blue-Green Deployment comes into the picture. Blue-green deployments reduce common deployment risks such as downtime and slow, difficult rollbacks. They provide near-zero-downtime releases with fast rollback.

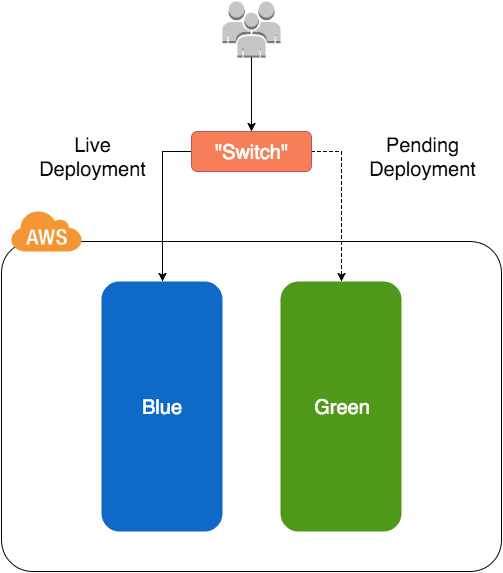

The basic idea is to shift traffic between two identical environments running different versions of your application. Although the colours don’t represent a particular version, blue usually signifies the live version and green the new version that needs to be tested; the roles can be swapped. After the green environment is ready and tested, production traffic is redirected from blue to green. If you face any problem, you can roll back by redirecting traffic to the blue environment.
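
To make the idea concrete, here is a minimal Python sketch that models the router as a pointer to whichever environment is live. The class and method names (`BlueGreenRouter`, `route`, `switch`) are hypothetical; in a real setup the "router" would be a load balancer or a DNS record, as in the AWS services discussed later in this post.

```python
class BlueGreenRouter:
    """Toy model: two environments, only one of which receives live traffic."""

    def __init__(self, blue_version: str, green_version: str):
        self.environments = {"blue": blue_version, "green": green_version}
        self.live = "blue"  # blue starts as the live environment

    @property
    def idle(self) -> str:
        # The environment that is not currently serving production traffic.
        return "green" if self.live == "blue" else "blue"

    def route(self) -> str:
        # Every request goes to the version running in the live environment.
        return self.environments[self.live]

    def switch(self) -> None:
        # Cut-over and rollback are the same operation: make the idle side live.
        self.live = self.idle


router = BlueGreenRouter(blue_version="v1", green_version="v2")
print(router.route())  # v1
router.switch()        # cut over to green
print(router.route())  # v2
router.switch()        # roll back to blue
print(router.route())  # v1
```

Rollback is simply another call to `switch()`: the previous version is still running in the now-idle environment, so nothing has to be redeployed.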

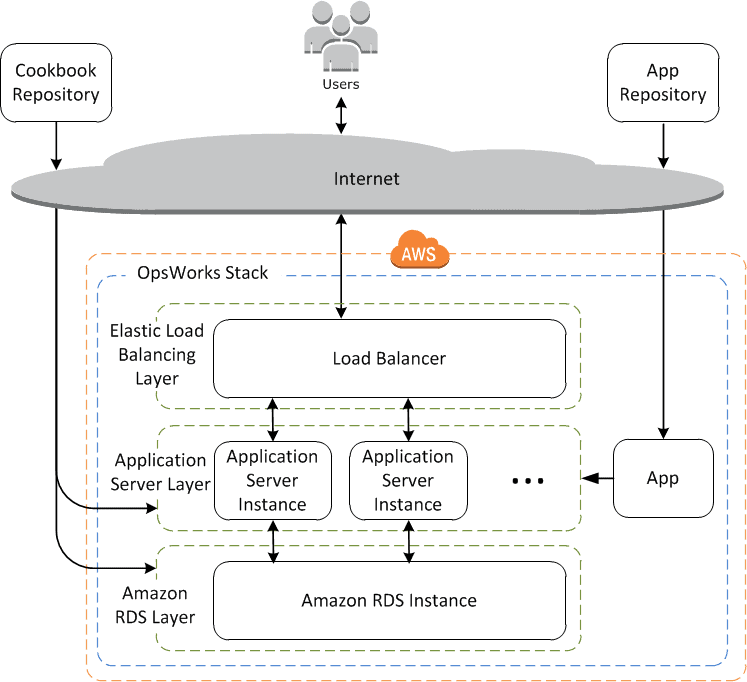

The two environments need to be separate but as identical as possible. A load balancer can be used to switch traffic between them. They can be separate pieces of hardware or separate virtual machines running on the same or different hardware. They can also be a single operating environment partitioned into separate zones, with separate IP addresses for the two slices.

Step-by-Step Blue Green Testing Model

Suppose Server A runs the live (blue) version of our application, and we need to deploy an updated (green) version to the production environment. You want to ensure that the update won’t interrupt service and that it can easily be rolled back if any major errors are found. The blue-green model handles this in a few steps:

- Deploy the updated version of the application (green version) to Server B. Run any applicable tests to ensure that the update is working as expected.

- Configure the router to start sending live user traffic to Server B.

- Configure the router to stop sending live user traffic to Server A.

- Keep sending traffic to Server B until you are certain that there are no errors and no rollback will be necessary. If a rollback is necessary, configure the router to send users back to the blue version on Server A until the errors are resolved.

- Remove the blue version of your application from Server A, or update Server A to the green version so it can continue serving as a backup.
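
The five steps can be sketched as a single function over a toy two-server setup. This is an illustration under simplifying assumptions, not production code: the `routing` dictionary stands in for the router and the servers, and `run_tests` and `errors_detected` are hypothetical hooks for your own test suite and monitoring.

```python
from typing import Callable


def blue_green_release(
    routing: dict,
    new_version: str,
    run_tests: Callable[[str], bool],
    errors_detected: Callable[[str], bool],
) -> str:
    """Apply the five steps to a toy setup such as
    {"live": "A", "servers": {"A": "v1", "B": None}}.

    Returns the name of the server that is live when the release ends.
    """
    servers = routing["servers"]
    old = routing["live"]
    new = "B" if old == "A" else "A"

    # Step 1: deploy the updated version to the idle server and test it there.
    servers[new] = new_version
    if not run_tests(new):
        return old  # tests failed: live traffic never left the old server

    # Steps 2 and 3: start sending live traffic to the new server, stop sending it to the old one.
    routing["live"] = new

    # Step 4: watch for errors; if a rollback is needed, send users back to the old server.
    if errors_detected(new):
        routing["live"] = old
        return old

    # Step 5: update the old server to the new version so it can act as a backup.
    servers[old] = new_version
    return new


routing = {"live": "A", "servers": {"A": "v1", "B": None}}
live = blue_green_release(
    routing, "v2", run_tests=lambda server: True, errors_detected=lambda server: False
)
print(live, routing["servers"])  # B {'A': 'v2', 'B': 'v2'}
```

In this toy model, steps 2 and 3 collapse into one assignment because the router has a single live target; a real load balancer might briefly send traffic to both servers during the transition.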

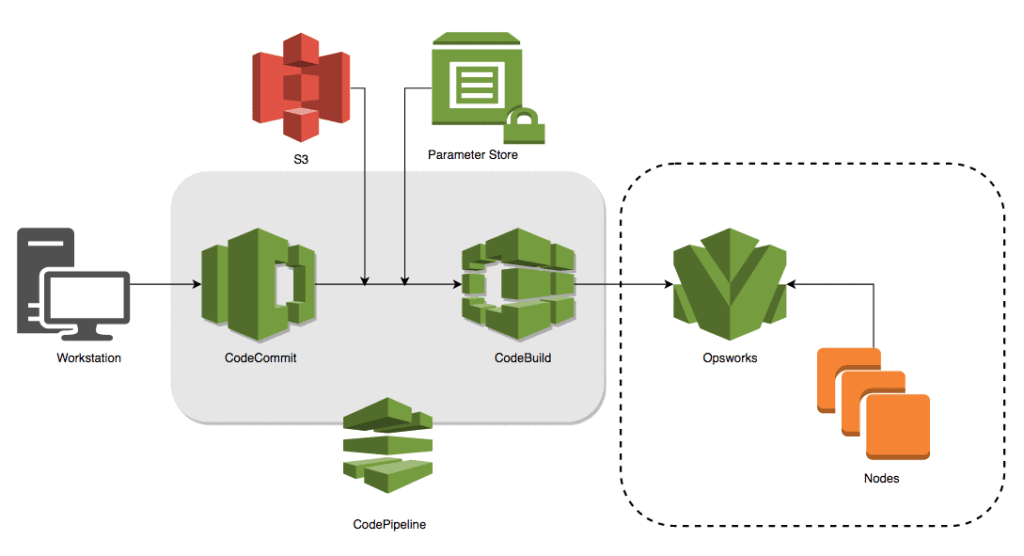

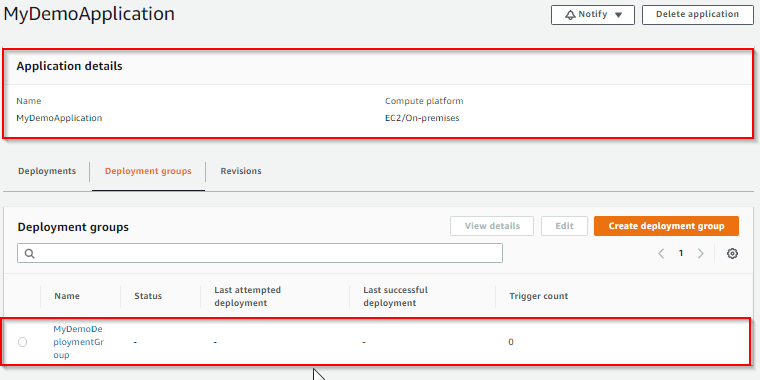

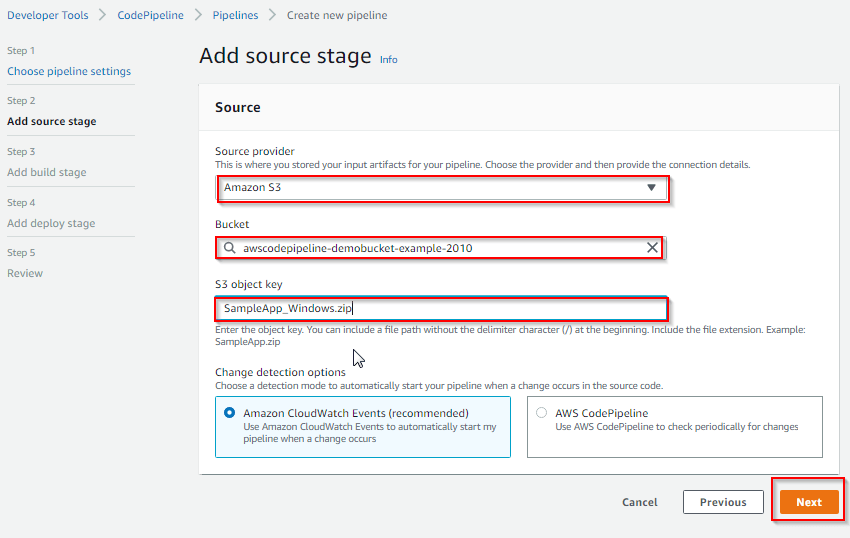

Achieving Blue-Green Deployment via AWS Tools & Services

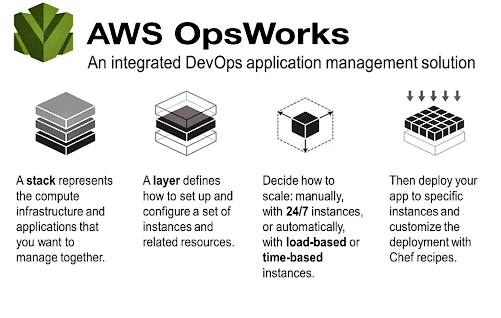

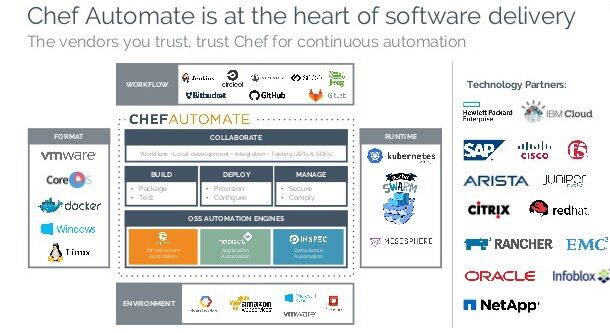

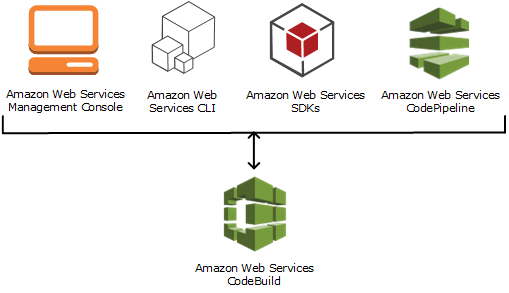

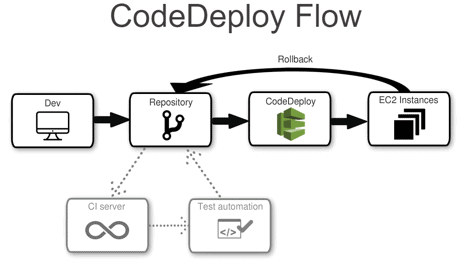

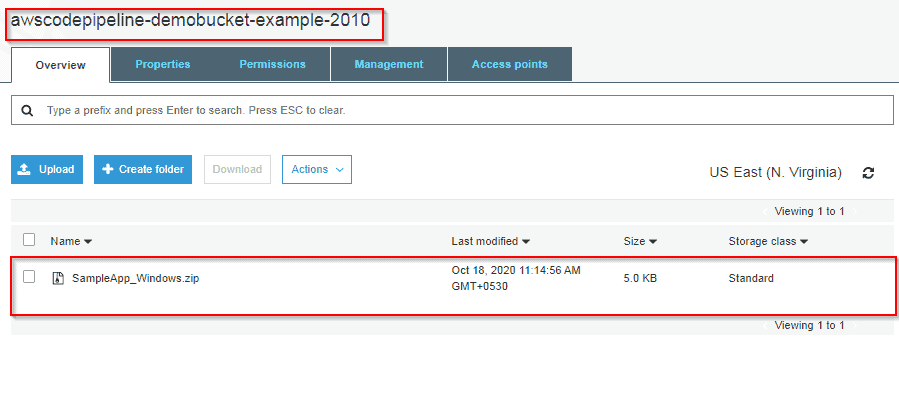

Several services in the AWS ecosystem support the blue-green deployment strategy. Some of them, described below, can help you automate your deployments.

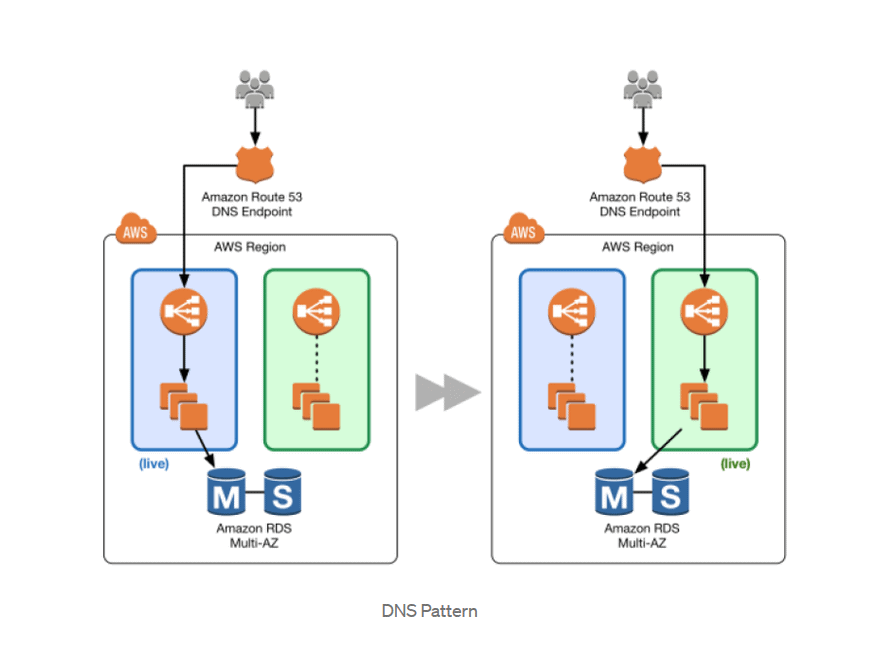

Amazon Route 53

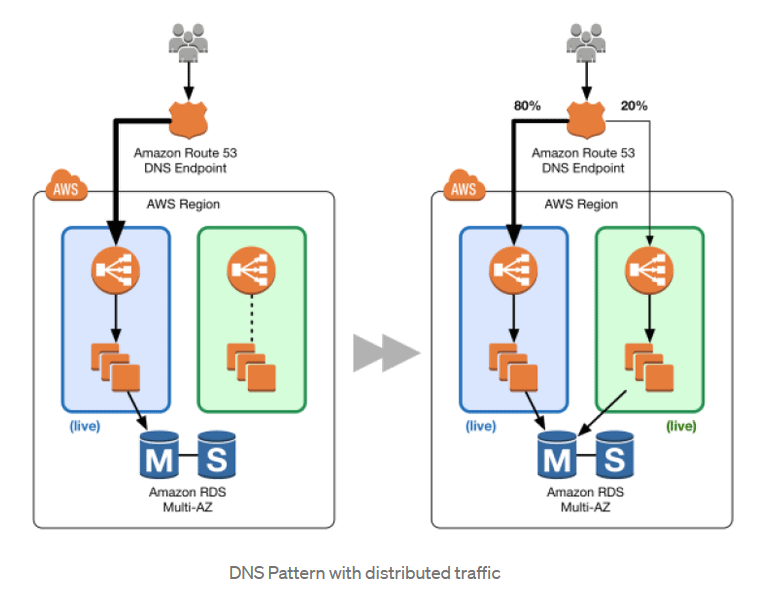

Amazon Route 53 is a highly available DNS service that lets you register domains, route traffic by configuring DNS records, and run regular health checks against your system. DNS is a natural fit for blue-green deployments because administrators can redirect traffic simply by updating DNS records in the hosted zone.
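
In code, a DNS cutover comes down to one record update. The sketch below builds a request in the shape the Route 53 `ChangeResourceRecordSets` API expects (an `UPSERT` of a CNAME record) and sends it through a client object passed in by the caller. It assumes that `client` is a boto3 Route 53 client (`boto3.client("route53")`); the hosted zone ID, record name, and environment hostnames are placeholders.

```python
def dns_cutover_batch(record_name: str, target: str, ttl: int = 60) -> dict:
    """Build a ChangeBatch that points record_name at target via a CNAME UPSERT."""
    return {
        "Comment": f"Blue-green cutover to {target}",
        "Changes": [
            {
                "Action": "UPSERT",  # create the record, or overwrite it if it exists
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "CNAME",
                    # A short TTL lets resolvers pick up the switch (or a rollback) sooner.
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": target}],
                },
            }
        ],
    }


def switch_dns(client, hosted_zone_id: str, record_name: str, target: str) -> dict:
    """Send the cutover to Route 53; `client` is assumed to be boto3.client("route53")."""
    return client.change_resource_record_sets(
        HostedZoneId=hosted_zone_id,
        ChangeBatch=dns_cutover_batch(record_name, target),
    )
```

Rolling back means calling `switch_dns` again with the blue environment's hostname. Resolvers and clients may keep serving the cached old answer until the TTL expires, so a DNS switch is fast but not instantaneous. Note also that a CNAME cannot be created at the zone apex (for example, `example.com` itself).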

Amazon Route 53 also supports weighted distribution of traffic, which means you can specify what percentage of traffic each environment receives. You can therefore send a small percentage of traffic to the green environment and gradually increase its weight until it carries the full production load. This type of distribution also lets us perform canary analysis, in which a small percentage of production traffic is introduced to a new environment.
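
Weighted routing uses several records with the same name, each carrying a `SetIdentifier` and a `Weight`; Route 53 returns each record in proportion to its weight divided by the sum of the weights. The sketch below builds such a pair of records for a given green percentage, in the `ChangeBatch` format accepted by `ChangeResourceRecordSets` (for example via boto3's `change_resource_record_sets`). The hostnames and the ramp-up schedule are hypothetical.

```python
def weighted_records_batch(record_name, blue_target, green_target, green_percent, ttl=60):
    """Build a ChangeBatch with two weighted CNAME records that share one name."""
    if not 0 <= green_percent <= 100:
        raise ValueError("green_percent must be between 0 and 100")
    weights = {"blue": 100 - green_percent, "green": green_percent}
    targets = {"blue": blue_target, "green": green_target}
    return {
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "CNAME",
                    "SetIdentifier": color,  # tells apart records that share a name
                    "Weight": weights[color],  # share = weight / sum of weights
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": targets[color]}],
                },
            }
            for color in ("blue", "green")
        ]
    }


# Hypothetical ramp-up schedule: 10%, 25%, 50%, then all traffic on green.
for pct in (10, 25, 50, 100):
    batch = weighted_records_batch(
        "app.example.com", "blue.example.com", "green.example.com", pct
    )
    print(pct, [c["ResourceRecordSet"]["Weight"] for c in batch["Changes"]])
# 10 [90, 10]
# 25 [75, 25]
# 50 [50, 50]
# 100 [0, 100]
```

Each batch would be sent only after the previous stage has been observed to be healthy. Because DNS answers are cached, the actual traffic split only approximates the configured weights.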

Elastic Load Balancing

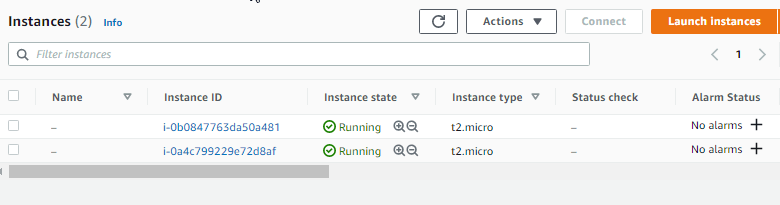

Load balancing is another way to route traffic for a blue-green deployment. Elastic Load Balancing distributes incoming traffic across multiple EC2 instances. It also runs regular health checks against those instances and integrates with Auto Scaling to maintain performance and avoid downtime.
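
A simplified model of the two behaviours described above, spreading requests across instances and skipping instances that fail health checks, might look like this. It is a toy round-robin in Python; real Elastic Load Balancing runs health checks on a configured interval, and its routing algorithm depends on the load balancer type. The instance IDs are placeholders.

```python
import itertools


class HealthCheckedBalancer:
    """Toy round-robin balancer that skips instances failing their health check."""

    def __init__(self, instances):
        self.instances = list(instances)
        self.healthy = set(self.instances)
        self._cycle = itertools.cycle(self.instances)

    def mark(self, instance, is_healthy):
        # Record the latest health-check result for an instance.
        if is_healthy:
            self.healthy.add(instance)
        else:
            self.healthy.discard(instance)

    def next_target(self):
        # Walk the ring at most once and return the first healthy instance found.
        for _ in range(len(self.instances)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy instances")


lb = HealthCheckedBalancer(["i-blue-1", "i-blue-2", "i-green-1"])
lb.mark("i-blue-2", False)  # i-blue-2 failed its health check
print([lb.next_target() for _ in range(4)])
# ['i-blue-1', 'i-green-1', 'i-blue-1', 'i-green-1']
```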

Auto Scaling

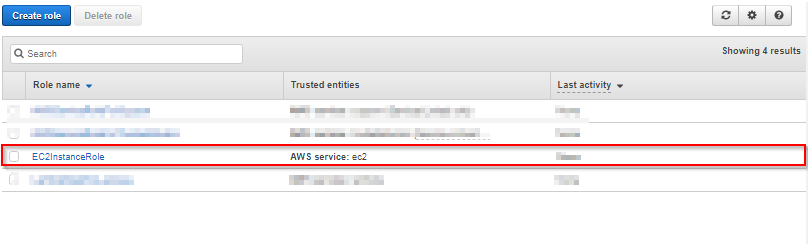

Auto Scaling increases or decreases the number of instances running our application according to demand. Templates known as launch configurations define how EC2 instances are launched in an Auto Scaling group. An Auto Scaling group can also be switched from one launch configuration to another, one per application version, to enable blue-green deployment.

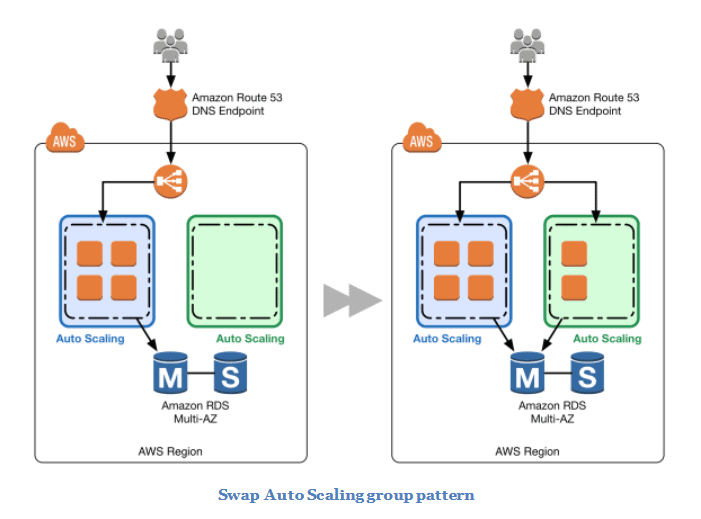

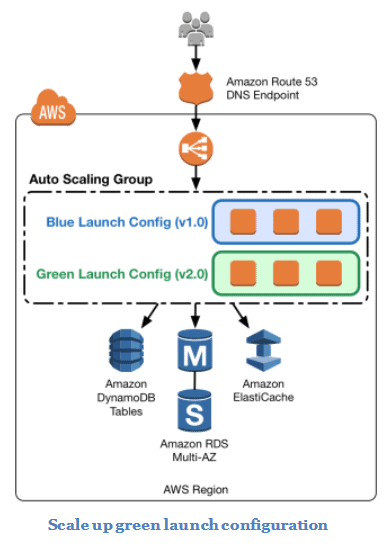

The figure below shows the environment boundary reduced to the Auto Scaling group. A blue group carries the production load while a green group is staged and deployed with the new code. When it’s time to deploy, you simply attach the green group to the existing load balancer to introduce traffic to the new environment. For HTTP/HTTPS listeners, the load balancer favours the green Auto Scaling group because it uses the least outstanding requests routing algorithm, and the newly attached green instances start with no outstanding requests.

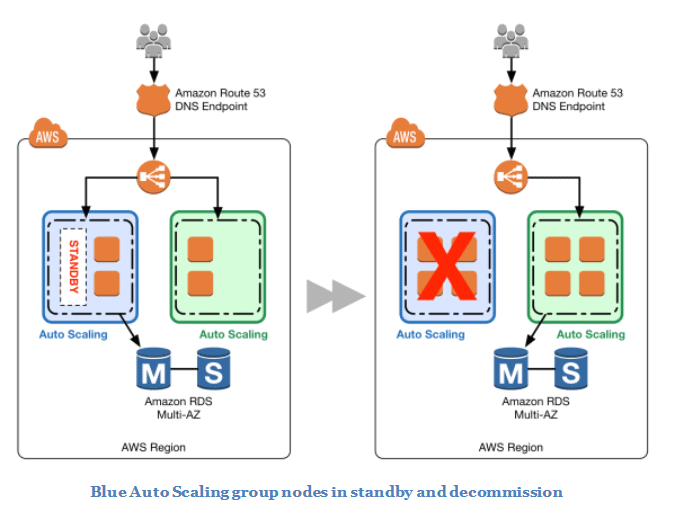

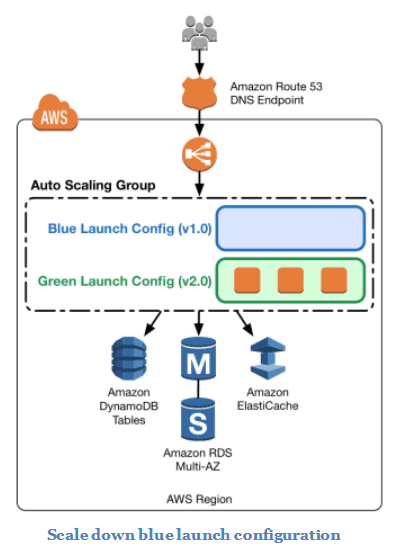
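
The least outstanding requests behaviour explains why the green group is favoured: newly attached green instances have no requests in flight, so they are chosen first until their load catches up with the blue instances. A small Python model of that selection rule, with hypothetical instance names and request counts (and ignoring requests that complete), shows the effect.

```python
def least_outstanding(outstanding: dict) -> str:
    """Return the instance with the fewest in-flight requests (first one wins ties)."""
    return min(outstanding, key=outstanding.get)


# Hypothetical snapshot just after the green group is attached:
# the blue instances are busy, the new green instances are idle.
outstanding = {"blue-1": 12, "blue-2": 9, "green-1": 0, "green-2": 0}

for _ in range(3):
    target = least_outstanding(outstanding)
    outstanding[target] += 1  # the chosen instance now has one more request in flight
    print(target)
# green-1
# green-2
# green-1
```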

As you scale up the green Auto Scaling group, you can take blue Auto Scaling group instances out of service by either terminating them or putting them in a Standby state, as shown in the figure below. Standby is a good option because if you need to roll back to the blue environment, you only have to put your blue server instances back in service and they’re ready to go.
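
The Standby approach can also be scripted. This sketch assumes `client` is a boto3 Auto Scaling client (`boto3.client("autoscaling")`), whose `enter_standby` and `exit_standby` methods correspond to the EnterStandby and ExitStandby API actions; the group name and instance IDs are placeholders supplied by the caller.

```python
def retire_blue(client, group_name: str, instance_ids: list) -> None:
    """Move blue instances to Standby instead of terminating them."""
    client.enter_standby(
        InstanceIds=instance_ids,
        AutoScalingGroupName=group_name,
        # Decrement desired capacity so the group does not launch replacements.
        ShouldDecrementDesiredCapacity=True,
    )


def restore_blue(client, group_name: str, instance_ids: list) -> None:
    """Rollback: return the standby blue instances to service."""
    client.exit_standby(InstanceIds=instance_ids, AutoScalingGroupName=group_name)
```

Setting `ShouldDecrementDesiredCapacity=True` matters here: without it, Auto Scaling would launch new instances to replace the ones placed in Standby. When the instances exit Standby, the group's desired capacity is incremented again.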

Update Auto Scaling Group Launch Configurations

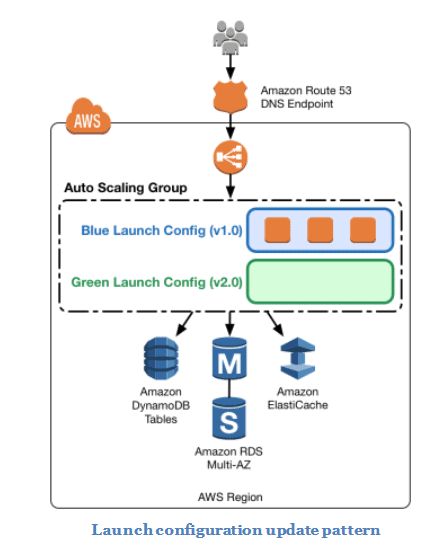

Auto Scaling groups have their own launch configurations. A launch configuration contains information like the Amazon Machine Image (AMI) ID, instance type, key pair, one or more security groups, and a block device mapping. You can associate only one launch configuration with an Auto Scaling group at a time, and it can’t be modified after you create it.

Next, we will replace the launch configuration associated with an Auto Scaling group with a new one. We start with an Auto Scaling group behind an Elastic Load Balancing load balancer, whose current launch configuration defines the blue environment.

To deploy the new version of the application in the green environment, update the Auto Scaling group with the new launch configuration, and then scale the Auto Scaling group to twice its original size.

Now, shrink the Auto Scaling group back to the original size. By default, instances with the old launch configuration are removed first. You can also leverage a group’s Standby state to temporarily remove instances from an Auto Scaling group.

To perform a rollback, update the Auto Scaling group with the old launch configuration. Then, do the preceding steps in reverse. If the instances are in a Standby state, bring them back online.
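
The launch-configuration procedure can be modelled in a few lines of Python. This is a simulation under simplifying assumptions, not a call to the Auto Scaling API: the classes are hypothetical, instance IDs are generated locally, and scale-in simply removes instances with an outdated launch configuration first, approximating the default behaviour described above. Rollback is modelled as running the same deployment with the old launch configuration.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass(frozen=True)
class LaunchConfiguration:
    # Frozen, mirroring the fact that a launch configuration can't be modified.
    name: str
    ami_id: str
    instance_type: str


@dataclass
class AutoScalingGroup:
    launch_configuration: LaunchConfiguration
    instances: list = field(default_factory=list)  # (instance ID, launch config name)
    _ids: count = field(default_factory=lambda: count(1), repr=False)

    def set_capacity(self, desired: int) -> None:
        # Scale out: new instances always use the group's current launch configuration.
        while len(self.instances) < desired:
            self.instances.append((f"i-{next(self._ids)}", self.launch_configuration.name))
        # Scale in: remove instances from an outdated launch configuration first.
        while len(self.instances) > desired:
            stale = [i for i in self.instances if i[1] != self.launch_configuration.name]
            self.instances.remove(stale[0] if stale else self.instances[0])


def deploy(group: AutoScalingGroup, new_lc: LaunchConfiguration) -> None:
    """Swap launch configurations, double the group, then shrink it back."""
    original_size = len(group.instances)
    group.launch_configuration = new_lc    # 1. update the group's launch configuration
    group.set_capacity(2 * original_size)  # 2. launch a full set of new instances
    group.set_capacity(original_size)      # 3. instances with the old config go first


blue = LaunchConfiguration("lc-blue", "ami-blue", "t3.micro")
green = LaunchConfiguration("lc-green", "ami-green", "t3.micro")
asg = AutoScalingGroup(blue)
asg.set_capacity(2)
deploy(asg, green)
print(asg.instances)  # [('i-3', 'lc-green'), ('i-4', 'lc-green')]
deploy(asg, blue)     # rollback: the same steps with the old launch configuration
print(asg.instances)  # [('i-5', 'lc-blue'), ('i-6', 'lc-blue')]
```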

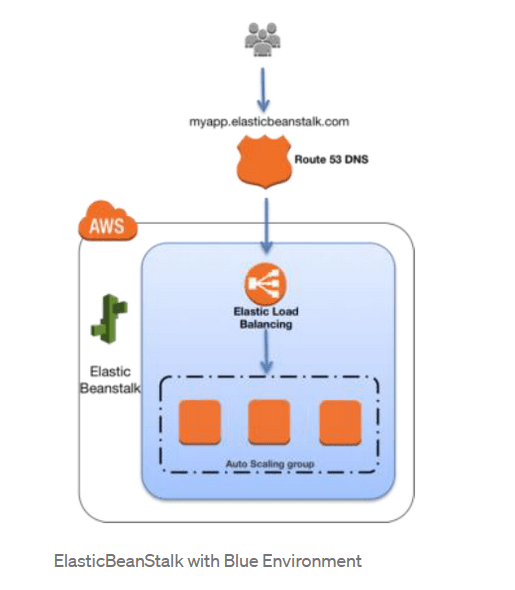

AWS Elastic Beanstalk

AWS Elastic Beanstalk helps you quickly deploy and manage applications. It supports Auto Scaling and Elastic Load Balancing, both of which enable blue-green deployment. It also makes it simple to run multiple versions of your application side by side and lets developers swap environment URLs, which facilitates blue-green deployment.

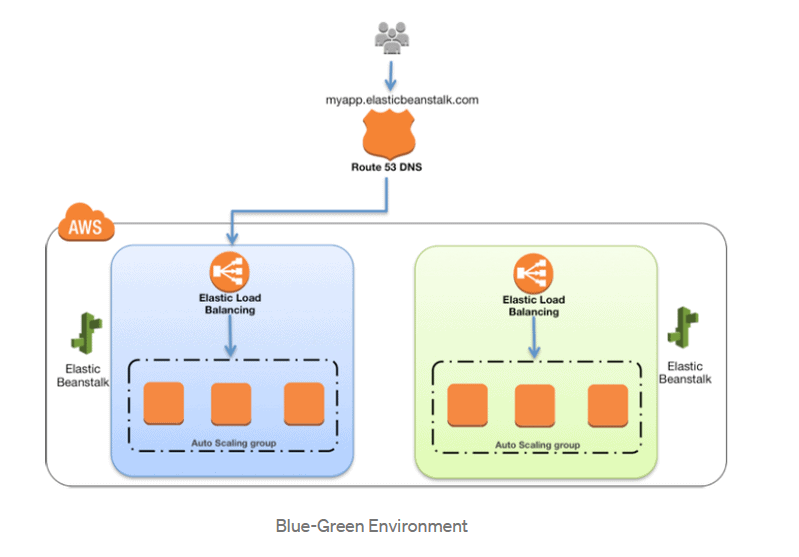

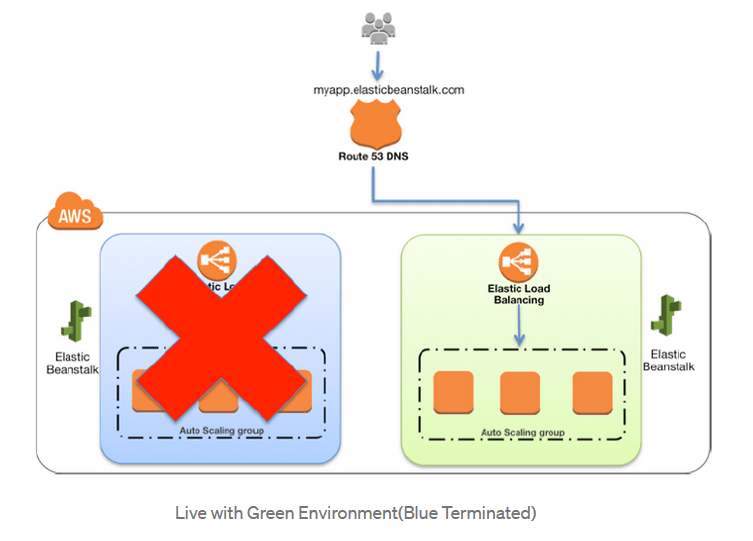

When the blue environment is up and running, Elastic Beanstalk provides an environment URL for it. The green environment is then spun up with its own environment URL. At this point, both environments are running, but only the blue environment is serving production traffic.

To promote the green environment to serve production traffic, you go to the environment’s dashboard in the Elastic Beanstalk console and choose the Swap Environment URL from the Actions menu. Elastic Beanstalk performs a DNS switch, which typically takes a few minutes.
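
The console action also has an API equivalent, SwapEnvironmentCNAMEs. A minimal wrapper, assuming `client` is a boto3 Elastic Beanstalk client (`boto3.client("elasticbeanstalk")`) and that the environment names are placeholders:

```python
def swap_urls(client, blue_env: str, green_env: str) -> None:
    """Swap the environment URLs (CNAMEs) of two Elastic Beanstalk environments.

    `client` is assumed to be boto3.client("elasticbeanstalk").
    """
    client.swap_environment_cnames(
        SourceEnvironmentName=blue_env,
        DestinationEnvironmentName=green_env,
    )
```

Because the operation swaps the two URLs, running it a second time with the same arguments points production back at the blue environment, which is how a rollback works with this approach.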

AWS CloudFormation

AWS CloudFormation

AWS CloudFormation helps you model, provision, and manage related AWS and third-party resources as a single collection. It provides powerful automation for provisioning blue-green environments and for applying the updates that switch traffic, whether through Route 53 DNS, Elastic Load Balancing, or other mechanisms.

Amazon CloudWatch

Amazon CloudWatch is a monitoring service for AWS Cloud resources and the applications you run on AWS. It can collect log files, set alarms, and track performance metrics. The system-wide visibility it provides is key to detecting application health problems during a blue-green deployment.
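
CloudWatch alarms can be configured to fire only when M out of the last N datapoints breach a threshold (the `DatapointsToAlarm` and `EvaluationPeriods` settings), which avoids rolling back because of a single noisy datapoint. The function below is a simplified local model of that rule, applied to a hypothetical per-minute error-rate series from the green environment; it ignores missing-data handling and other alarm options.

```python
def should_roll_back(error_rates, threshold, evaluation_periods=5, datapoints_to_alarm=3):
    """Return True if at least `datapoints_to_alarm` of the last
    `evaluation_periods` datapoints exceed `threshold` ("M out of N")."""
    recent = error_rates[-evaluation_periods:]
    breaching = sum(1 for rate in recent if rate > threshold)
    return breaching >= datapoints_to_alarm


# Hypothetical green error rates (%) per minute; threshold of 1%.
green_errors = [0.2, 0.4, 2.5, 3.1, 0.3, 2.8]
print(should_roll_back(green_errors, threshold=1.0))  # True (3 of the last 5 breach)
```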

Advantages of Blue-Green Deployments

- Debugging: In Blue-green deployment, rollbacks always leave the failed deployment intact for analysis.

- Zero-downtime: no downtime means that we can make releases at any time. There is no need to wait for maintenance windows.

- Instant switch: users are switched to another environment instantaneously.

- Instant rollback: we can easily go back to the previous version in an instant.

- Replacement: we can easily switch to the other environment if a data center containing our live environment goes down. This works only as long as we have taken the precaution of not putting blue and green in the same Availability Zone.

Disadvantages of Blue-Green Deployments

- Data Migration: database migrations are harder, sometimes to the point of being a showstopper. Database schema changes must be both forward and backward compatible, because we may need to move back and forth between the old and new versions.

- Sudden Delays: If users are suddenly switched to a new environment, they might experience some slowness.

- Expenses: compared to other methods, blue-green deployments are more expensive, because two production-ready environments must be maintained. Provisioning infrastructure on demand helps. But when we’re making several scaled-out deployments per day, the costs can snowball.

- Time & Effort: setting up the blue-green deployment process takes time and effort. The process is complex and carries a great deal of responsibility. We may need many iterations before we get it working right.

- Handling transactions: while switching environments, some in-flight user transactions may be interrupted.

- Shared services: databases and other shared services can leak information between blue and green. This could break the isolation rule and interfere with the deployment.
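
The data-migration point is often handled with an "expand and contract" approach: first ship only additive changes that both versions tolerate, and remove old structures only after the old version is retired. Here is a minimal sketch using Python's built-in `sqlite3` module; the table, column, and function names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")


def blue_insert(name):
    # Old (blue) code: knows nothing about the new column.
    conn.execute("INSERT INTO users (name) VALUES (?)", (name,))


def green_insert(name, email):
    # New (green) code: also writes the new column.
    conn.execute("INSERT INTO users (name, email) VALUES (?, ?)", (name, email))


# Expand step: an additive, nullable column is backward compatible,
# so blue keeps working while green is tested, and after any rollback.
conn.execute("ALTER TABLE users ADD COLUMN email TEXT")

blue_insert("alice")
green_insert("bob", "bob@example.com")
print(conn.execute("SELECT name, email FROM users ORDER BY id").fetchall())
# [('alice', None), ('bob', 'bob@example.com')]
```

A destructive change, such as dropping or renaming the `name` column, would break the blue version's queries, so under this approach it is deferred until blue is no longer needed for rollback.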

Alternatives for Blue-Green Deployment

Canary release is a technique to reduce the risk of introducing a new software version in production by slowly rolling out the change to a small subset of users before rolling it out to the entire infrastructure and making it available to everybody.

With canary testing, if you discover the green environment is not operating as expected, there is no impact on the blue environment. You can route traffic back to it, minimizing impaired operation or downtime and limiting the blast radius.
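
A canary rollout is essentially a loop that increases green's share of traffic in stages and reverts on the first sign of trouble. In this sketch, `set_green_weight` and `green_is_healthy` are hypothetical hooks (for example, wrappers around Route 53 weighted records and CloudWatch alarms), and the stage percentages are illustrative.

```python
def canary_rollout(set_green_weight, green_is_healthy, stages=(5, 25, 50, 100)):
    """Shift traffic to green in stages; revert to blue on the first failed check.

    Returns the percentage of traffic left on green when the rollout ends.
    """
    for pct in stages:
        set_green_weight(pct)       # e.g. update weighted DNS records
        if not green_is_healthy():  # e.g. consult an alarm after a soak period
            set_green_weight(0)     # all traffic back to blue; only pct% of users were exposed
            return 0
    return stages[-1] if stages else 0


history = []
checks = iter([True, False])  # hypothetical: green fails its check at the 25% stage
result = canary_rollout(history.append, lambda: next(checks))
print(result, history)  # 0 [5, 25, 0]
```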