AMAZON TIMESTREAM :

Amazon Timestream is a fast, scalable, fully managed, purpose-built time series database that makes it easy to store and analyze trillions of time series data points per day. Timestream saves you time and cost in managing the lifecycle of time series data by keeping recent data in memory and moving historical data to a cost-optimized storage tier based on user-defined policies. Timestream’s purpose-built query engine lets you access and analyze recent and historical data together, without having to specify its location. Amazon Timestream has built-in time series analytics functions that help you identify trends and patterns in your data in near real time. Timestream is serverless and automatically scales up or down to adjust capacity and performance. Because you don’t need to manage the underlying infrastructure, you can focus on building and optimizing your applications.

Timestream also integrates with commonly used services for data collection, visualization, and machine learning. You can send data to Amazon Timestream using AWS IoT Core, Amazon Kinesis, Amazon MSK, and open source Telegraf. You can visualize data using Amazon QuickSight, Grafana, and business intelligence tools through JDBC. You can also use Amazon SageMaker with Timestream for machine learning.

Topics

- Timestream Key Benefits

- Timestream Use Cases

- Getting Started With Timestream

Timestream Key Benefits :

The key benefits of Amazon Timestream are:

Serverless with auto-scaling - With Amazon Timestream, there are no servers to manage and no capacity to provision. As the needs of your application change, Timestream automatically scales to adjust capacity.

Data lifecycle management - Amazon Timestream simplifies the complex process of data lifecycle management. It offers storage tiering, with a memory store for recent data and a magnetic store for historical data. Amazon Timestream automates the transfer of data from the memory store to the magnetic store based on user-configurable retention policies.

Simplified data access - With Amazon Timestream, you no longer need to use disparate tools to access recent and historical data. Amazon Timestream's purpose-built query engine transparently accesses and combines data across storage tiers without you having to specify the data location.

Purpose-built for time series - You can quickly analyze time series data using SQL, with built-in time series functions for smoothing, approximation, and interpolation. Timestream also supports advanced aggregates, window functions, and complex data types such as arrays and rows.

Always encrypted - Amazon Timestream ensures that your time series data is always encrypted, whether at rest or in transit. Amazon Timestream also enables you to specify an AWS KMS customer managed key (CMK) for encrypting data in the magnetic store.

High availability - Amazon Timestream ensures high availability of your write and read requests by automatically replicating data and allocating resources across at least three different Availability Zones within a single AWS Region. For more information, see the Timestream Service Level Agreement.

Durability - Amazon Timestream ensures durability of your data by automatically replicating your memory and magnetic store data across different Availability Zones within a single AWS Region. All of your data is written to disk before acknowledging your write request as complete.
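The storage tiering and encryption options described in the benefits above are set when a database and table are created. The following is a minimal sketch, assuming the boto3 `timestream-write` client; the helper names (`database_request`, `table_request`, `create_resources`), the sample database and table names, and the retention values are illustrative assumptions, not recommendations from this guide.

```python
from typing import Optional


def database_request(database: str, kms_key_id: Optional[str] = None) -> dict:
    """Build keyword arguments for the CreateDatabase call.

    If kms_key_id is omitted, no key is passed and the service default applies.
    """
    request = {"DatabaseName": database}
    if kms_key_id is not None:
        request["KmsKeyId"] = kms_key_id  # customer managed key
    return request


def table_request(database: str, table: str,
                  memory_hours: int, magnetic_days: int) -> dict:
    """Build keyword arguments for the CreateTable call.

    memory_hours: how long data stays in the memory store.
    magnetic_days: how long data stays in the magnetic store.
    """
    if memory_hours < 1 or magnetic_days < 1:
        raise ValueError("retention periods must be at least 1")
    return {
        "DatabaseName": database,
        "TableName": table,
        "RetentionProperties": {
            "MemoryStoreRetentionPeriodInHours": memory_hours,
            "MagneticStoreRetentionPeriodInDays": magnetic_days,
        },
    }


def create_resources(db_req: dict, tbl_req: dict, region: str = "us-east-1"):
    """Send both requests. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builders above work without the SDK

    client = boto3.client("timestream-write", region_name=region)
    client.create_database(**db_req)
    client.create_table(**tbl_req)


# Hypothetical names and values, for illustration only.
db_req = database_request("sampleDB")
tbl_req = table_request("sampleDB", "sensor_readings",
                        memory_hours=24, magnetic_days=365)
```

With these hypothetical values, data would stay in the memory store for 24 hours and in the magnetic store for 365 days. Calling `create_resources(db_req, tbl_req)` would submit the requests.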

Timestream Use Cases :

Timestream supports a growing list of use cases, including:

Monitoring metrics to improve the performance and availability of your applications.

Storage and analysis of industrial telemetry to streamline equipment management and maintenance.

Tracking user interaction with an application over time.

Storage and analysis of IoT sensor data.
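For the metrics-monitoring use case, a typical query averages a measure over fixed time intervals. The sketch below builds such a query with the Timestream SQL functions `bin` and `ago` and runs it through the boto3 `timestream-query` client. The database, table, and measure names are hypothetical, the query assumes single-measure records stored as doubles, and the name check is a conservative assumption rather than the service's exact naming rule.

```python
import re

# Conservative identifier check (an assumption, not the official rule).
_NAME = re.compile(r"^[A-Za-z0-9_.-]+$")


def avg_metric_query(database: str, table: str, measure: str,
                     bin_minutes: int = 1, lookback_hours: int = 2) -> str:
    """Return SQL that averages one measure per time bin over a recent window."""
    for name in (database, table, measure):
        if not _NAME.match(name):
            raise ValueError(f"unexpected characters in name: {name!r}")
    interval = f"{int(bin_minutes)}m"
    window = f"{int(lookback_hours)}h"
    return (
        f"SELECT bin(time, {interval}) AS binned_time, "
        f"avg(measure_value::double) AS avg_value "
        f'FROM "{database}"."{table}" '
        f"WHERE measure_name = '{measure}' AND time > ago({window}) "
        f"GROUP BY bin(time, {interval}) "
        f"ORDER BY binned_time"
    )


def run_query(query_string: str, region: str = "us-east-1") -> list:
    """Run the query and collect all result rows, following NextToken pages.

    Requires boto3 and AWS credentials.
    """
    import boto3  # imported lazily so the builder above works without the SDK

    client = boto3.client("timestream-query", region_name=region)
    rows, token = [], None
    while True:
        kwargs = {"QueryString": query_string}
        if token:
            kwargs["NextToken"] = token
        page = client.query(**kwargs)
        rows.extend(page["Rows"])
        token = page.get("NextToken")
        if not token:
            return rows


# Hypothetical names, for illustration only.
query = avg_metric_query("sampleDB", "host_metrics", "cpu_utilization")
```

The query names only the table, not a storage tier, so the same statement covers data in both the memory store and the magnetic store.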

Getting Started With Timestream :

We recommend that you begin by reading the following sections:

Tutorial - To create a database populated with sample data sets and run sample queries.

Timestream Concepts - To learn essential Timestream concepts.

Accessing Timestream - To learn how to access Timestream using the console, AWS CLI, or API.

Quotas - To learn about quotas on the number of Timestream components that you can provision.

To learn how to quickly begin developing applications for Timestream, see the following:

Using the AWS SDKs

Query Language Reference.
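As a first step with the AWS SDKs, the following sketch writes a single data point using the boto3 `timestream-write` client. The helper names (`build_record`, `write_point`) and the database, table, and dimension names are hypothetical. It assumes the target database and table already exist and that the measure is a double.

```python
import time
from typing import Optional


def build_record(dimensions: dict, measure_name: str, value: float,
                 timestamp_ms: Optional[int] = None) -> dict:
    """Build one record in the shape expected by WriteRecords.

    Measure values and timestamps are sent as strings.
    """
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)  # current time in milliseconds
    return {
        "Dimensions": [
            {"Name": name, "Value": str(val)} for name, val in dimensions.items()
        ],
        "MeasureName": measure_name,
        "MeasureValue": str(value),
        "MeasureValueType": "DOUBLE",
        "Time": str(timestamp_ms),
        "TimeUnit": "MILLISECONDS",
    }


def write_point(database: str, table: str, record: dict,
                region: str = "us-east-1"):
    """Send one record. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so build_record works without the SDK

    client = boto3.client("timestream-write", region_name=region)
    return client.write_records(
        DatabaseName=database, TableName=table, Records=[record]
    )


# Hypothetical dimension and measure, for illustration only.
record = build_record({"host": "web-1"}, "cpu_utilization", 42.5,
                      timestamp_ms=1700000000000)
```

Calling `write_point("sampleDB", "host_metrics", record)` would submit the record. In practice, multiple records are usually sent in a single `write_records` call.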

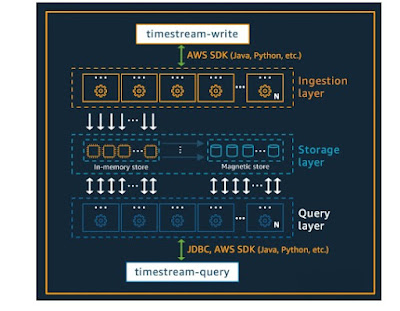

Architecture :

Amazon Timestream has been designed from the ground up to collect, store, and process time series data at scale. Its serverless architecture supports fully decoupled data ingestion, storage, and query processing systems that can scale independently. This design simplifies each sub-system, making it easier to achieve unwavering reliability, eliminate scaling bottlenecks, and reduce the chances of correlated system failures. Each of these factors becomes more important as the system scales. You can read more about each topic below.
