Using Azure Container Apps at scale instead of building your own NaaS on top of K8s?

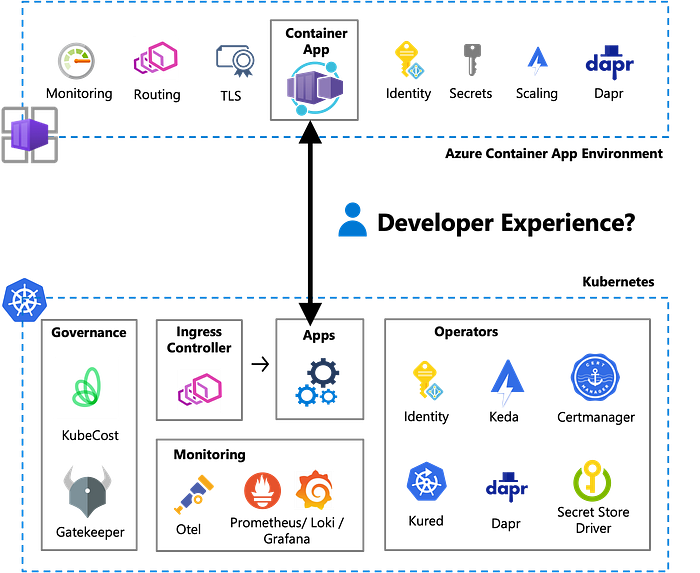

Platform engineering efforts (usually on top of Kubernetes) have become quite popular recently and some companies that I have been working with managed to launch very powerful and effective Namespace as a Service offerings to empower developer teams to run code while abstracting the underlying operational complexity successfully.

If you have operated a set of applications on Kubernetes in production for a while, you will probably find that implementing higher-level services to increase your productivity is relatively easy, because Kubernetes is designed as a platform technology with lots of features for extensibility and abstraction of capabilities. Having a small team of professional platform engineers build up a cluster and focus on Kubernetes, its features and its needs makes sense if it gives developers more focus time for writing business-relevant code.

The idea of standardising and preparing Kubernetes clusters with integrated container networking, layer 7 load balancing, end-to-end TLS encryption, ready-to-use deployment methods, meaningful infrastructure integrations, insightful observability tooling, cost management, troubleshooting support, graceful failure recovery, autoscaling infrastructure and good security defaults makes a lot of sense for an organisation with a certain number of application developers. Ultimately this is about creating a developer experience where teams can kick the tires and start writing business-relevant code without worrying too much about infrastructure first, because someone else is doing that for them.

In my experience these custom-made platforms turn out to be unique in their composition of open source, managed and commercial solutions, and therefore will always require a dedicated team to keep them up and running and up to date within the fast-moving and ever-changing Kubernetes ecosystem.

The important difference is that Azure Container Apps gives you a set of capabilities that you can use to describe the desired operational behaviour of your application (scaling, encryption, configuration, authentication), which is defined and limited by Azure. In a well-prepared Kubernetes cluster, on the other hand, you need someone to understand the operational needs, evaluate the managed and open source extensions, configure them accordingly and take responsibility for keeping them in place so that a developer team does not have to.

In my experience an effective split between Dev and Ops still happens, because every individual only has so much focus time, which means you typically lean more towards either a developer or a platform engineer persona.

Here is the question that came up in a couple of customer engagements after the launch of Azure Container Apps: “How close can the developer experience of Azure Container Apps in a mature organisation come to a custom-made Namespace as a Service solution?” I want to share some of the answers and concepts with you below and help you understand the split in responsibilities between developer teams and platform engineers.

If you don’t know Azure Container Apps already: it is a simple PaaS that allows you to bring a container image and have Azure run it for you at scale. Azure will also take care of most of the jobs you don’t want to do: managing compute infrastructure (this of course uses VMs under the hood, but you don’t see them), maintaining TLS certificates (encrypting incoming traffic is something you want anyway), layer 7 routing (based on Envoy, to make sure traffic gets routed to the right endpoints), authentication and authorisation (using Azure AD or any OIDC provider to validate access to your services) and scaling out compute power according to metrics (for example requests per second or the number of pending messages).

So as someone who wants to focus on writing business code you will probably appreciate the raw developer experience of Azure Container Apps, up until the point where you have to argue with the networking, security, governance and identity teams of your company to spin up a couple of Azure Container App environments for your organisation. But maybe that is solved now ;)

Azure Container Apps is a relatively new service with an interesting value proposition of giving you as a developer team a managed container service based on an invisible Kubernetes without the responsibility to understand/design/manage the operational complexity of Kubernetes. Think of this as an alternative to a shared Kubernetes cluster in which every developer team gets their own namespace and can leverage shared services like ingress controller, secret store driver, monitoring agents and workload identity. So in this mapping to a Kubernetes namespace each developer team gets their own Azure Container App Environment.

However this empowerment is only helpful if a mature cloud organisation can ensure the fully private network integration of multiple Azure Container App Environments at scale without needing to restrict developer teams again.

The biggest improvements allow you to now implement the following:

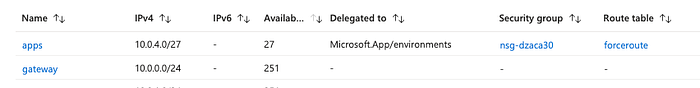

- You can now deploy ACA in smaller subnets down to a /27 which means you can now afford to run more Azure Container App Environments in the same limited IP space.

- You can now delegate the private DNS resolution of the ACA VNET to your own DNS infrastructure and allow your apps to connect to private resources and pull images from private container registries.

- You can lock down ingress from your application subnet and ensure that your applications are only reachable from a private network or a WAF. This was possible before, but without custom DNS support it was tricky to use at scale.

- You can now also lock down egress traffic coming from your applications to the outside world because ACA now also fully supports force tunneling 0.0.0.0/0 through an NVA.

- You can now leverage more memory in the new consumption plan but also run bigger compute and memory intensive workloads in your environment at a cheaper price.

- You can now mount secrets from an Azure Key Vault as environment variables into your containers and ensure that secret and configuration values are sourced from a secure store.

- You can now operate Application Gateway V2 in subnets that have force tunneling enabled with the private deployment which allows you to lock down the VNET completely, which was not possible before.

- You can use the built-in Azure Policies to govern what Azure Container App configurations are allowed in your organisation.

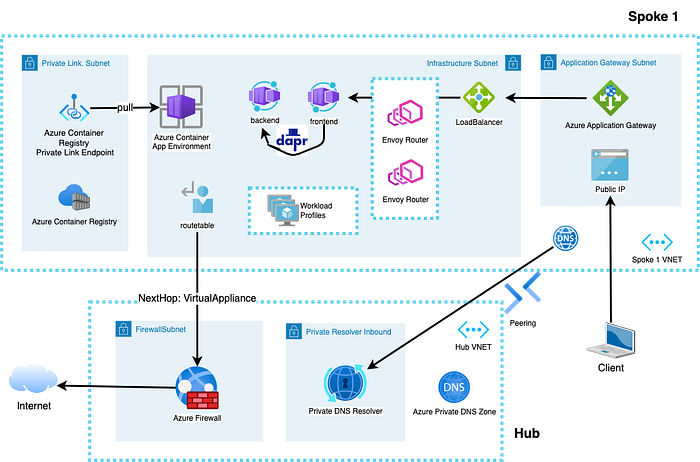

Let’s take a look at the new network setup first. There are some underlying changes which enable these improvements. A consequence of the new network integration is that you have to delegate one “Infrastructure” subnet to the Microsoft.App/environments service, which will be used to deploy and connect the invisible virtual machines that run your containers. The upside is that you will only need twice as many IPs in the subnet as you need replicas of Azure Container Apps (running in the consumption profile) and/or one IP per workload profile host (regardless of how many containers are running on it), plus the load balancer IPs. The downside is that you cannot put anything else in the Container Apps subnet anymore.

I would suggest planning your subnets carefully and checking whether you can afford at least a /26 to make proper use of workload profiles in the future. Previously a single ACA environment required two /23 subnets, which was very hard to get, especially if you wanted a dev/staging and a production environment. As a package, the new changes allow a developer team to receive a locked-down virtual network that has one delegated subnet (ACA used to require two subnets) with a route table configured to force all traffic through a network virtual appliance (like Azure Firewall), and custom DNS resolution configured on the VNET.
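As a rough illustration of such a locked-down spoke, here is a minimal Bicep sketch. All resource names, the address ranges and the two IP parameters are assumptions made for this example and not part of any reference setup; only the subnet name `aca-control` is borrowed from the environment definition later in this post.

```bicep
// Sketch only: names, address ranges and parameters are illustrative assumptions.
param location string = resourceGroup().location
param firewallPrivateIp string // private IP of the NVA / Azure Firewall in the hub
param customDnsServerIp string // central DNS server or DNS resolver endpoint

// Route table that forces all egress traffic through the NVA
resource routeTable 'Microsoft.Network/routeTables@2022-07-01' = {
  name: 'aca-egress-routes'
  location: location
  properties: {
    routes: [
      {
        name: 'default-via-nva'
        properties: {
          addressPrefix: '0.0.0.0/0'
          nextHopType: 'VirtualAppliance'
          nextHopIpAddress: firewallPrivateIp
        }
      }
    ]
  }
}

// Spoke VNET with custom DNS and one subnet delegated to Container Apps
resource vnet 'Microsoft.Network/virtualNetworks@2022-07-01' = {
  name: 'aca-spoke-vnet'
  location: location
  properties: {
    addressSpace: {
      addressPrefixes: [
        '10.10.0.0/24'
      ]
    }
    dhcpOptions: {
      dnsServers: [
        customDnsServerIp
      ]
    }
    subnets: [
      {
        name: 'aca-control'
        properties: {
          addressPrefix: '10.10.0.0/26'
          routeTable: {
            id: routeTable.id
          }
          delegations: [
            {
              name: 'aca-delegation'
              properties: {
                serviceName: 'Microsoft.App/environments'
              }
            }
          ]
        }
      }
    ]
  }
}
```

A networking team could wrap something like this in a module and stamp it out once per spoke, leaving the developer team with nothing to configure on the network side.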

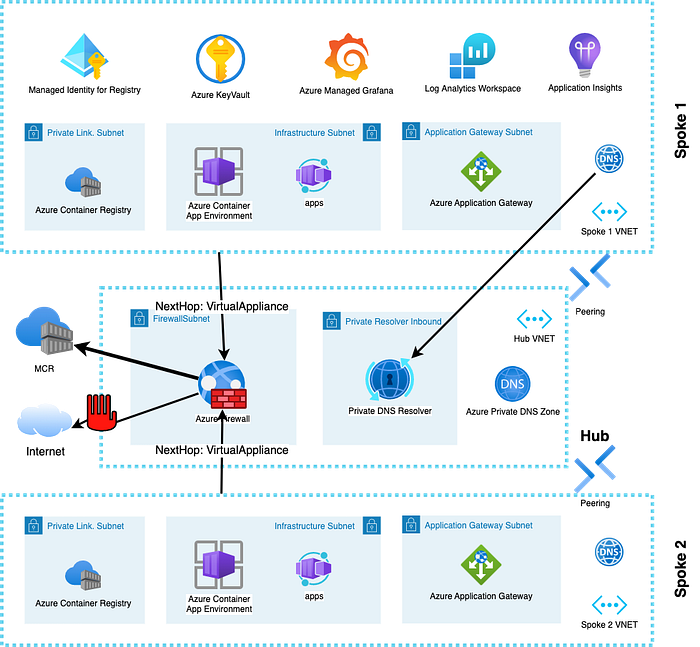

As most companies use a hub-and-spoke network topology, the new changes fit very well into a model of distributed responsibility, where the networking team provisions a spoke for you with a pre-configured VNET and subnet, route table (BGP and Virtual WAN are also supported but still require a route table on the subnet) and custom DNS resolution. All of this can be automated to deploy preconfigured subscription templates at scale.

In comparison to AKS deployments in locked-down VNETs you will not need to whitelist a lot of services and endpoints in your firewall, because the communication from your workload infrastructure to the control plane no longer goes through your VNET. You only need to allow egress traffic from the ACA subnet to the Microsoft Container Registry. With an Azure Firewall that can easily be done by creating a network rule that allows egress to the service tags MicrosoftContainerRegistry and AzureFrontDoor.FirstParty (which is a dependency of MCR), and potentially also to Azure Monitor by using the AzureMonitor service tag.
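The network rule described above could look roughly like this in Bicep. This is a sketch that assumes you manage Azure Firewall through a Firewall Policy (not classic rules); the policy name, rule names, priorities and the source prefix are placeholders. Note that the Front Door first-party tag is spelled `AzureFrontDoor.FirstParty` in Azure.

```bicep
// Sketch only: policy name, priorities and source prefix are illustrative assumptions.
param firewallPolicyName string
param acaSubnetPrefix string = '10.10.0.0/26'

resource policy 'Microsoft.Network/firewallPolicies@2022-07-01' existing = {
  name: firewallPolicyName
}

resource acaEgress 'Microsoft.Network/firewallPolicies/ruleCollectionGroups@2022-07-01' = {
  parent: policy
  name: 'aca-egress'
  properties: {
    priority: 500
    ruleCollections: [
      {
        ruleCollectionType: 'FirewallPolicyFilterRuleCollection'
        name: 'allow-aca-platform'
        priority: 100
        action: {
          type: 'Allow'
        }
        rules: [
          {
            ruleType: 'NetworkRule'
            name: 'mcr-and-dependencies'
            ipProtocols: [
              'TCP'
            ]
            sourceAddresses: [
              acaSubnetPrefix
            ]
            // Service tags are passed as destination addresses in network rules
            destinationAddresses: [
              'MicrosoftContainerRegistry'
              'AzureFrontDoor.FirstParty'
            ]
            destinationPorts: [
              '443'
            ]
          }
        ]
      }
    ]
  }
}
```

If you also want to allow Azure Monitor, adding `'AzureMonitor'` to the destination list would be the natural extension.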

To give the developer teams maximum productivity you need to make sure that they can connect to and pull images from their private registry. That only works if you allow each team their own Azure Container Registry and connect its private link endpoint to a dedicated subnet, or provide a way to resolve existing private third-party registries on your network.

Since private registries need private DNS entries, you have two options. You can allow each developer team to link their own DNS zones in their spoke VNET, which is probably something that you do not want and will also make it tricky to connect to the ACR from other VNETs where your jumpbox, build machines or developer laptops are connecting from. Or you can use a centralized DNS lookup configuration in a central VNET using custom DNS servers and/or Azure Private DNS Resolvers, in which case you will probably make sure that each spoke gets a dedicated ACR instance by default via your IaC deployment pipeline.

After having solved the problem of connecting to ACR inside the VNET and establishing rules for egress traffic out of the VNET, we need to solve the problem of getting traffic from outside the VNET to your containers. Azure Container Apps can be deployed in two modes. The simplest uses a public load balancer IP that routes traffic to an invisible set of Envoy proxies, which terminate TLS and route traffic on to your containers.

This mode does not work when you are using a firewall for egress on your ACA subnet, due to asymmetric routing. You can, however, deploy an Azure Application Gateway or use Private Link Service to connect clients from the Internet via Azure Front Door to your Container Apps, because both use NAT internally and ensure that a response can be returned to the client on the Internet. Azure Front Door is probably harder to automate and only works when you expect clients to connect from the Internet, which is why Azure Application Gateway will probably be the preferred ingress solution for Intranet as well as Internet traffic.

If you want to ensure a minimal set of configuration constraints, you should take a look at the Azure Policy samples repository, which will give you some ideas on how you can govern or enforce the usage of HTTPS, managed identities instead of secrets, automatic authorization or private network access instead of public IPs for every Azure Container App that gets deployed in your environment.

Assuming you have a networking team that can ensure these requirements for routing, egress, ingress and DNS configuration across multiple subscriptions, you are good to move ahead and think about how an Azure Container App environment can be used to ensure governance at scale. As of today there is no possibility of segmenting access inside an Azure Container App environment, so every app can by default communicate with any other app inside the environment.

In a bigger organisation you also want to use RBAC to control what your infrastructure, apps and users can do with all the cloud resources that you own. That means you want your developers to be productive and give them as much freedom as they need to implement their ideas, but prevent them from accidentally breaking more serious components like networking, routing and DNS, where mistakes can cause bigger damage. The good news is that this can be achieved now, but since there is no Azure Policy equivalent for Azure Container Apps, RBAC is pretty much the only way you can limit developer actions.

Inside each Azure Container App you have the ability to provide two references to Managed Identities. The first kind of Managed Identity is the one that you can assign to your running containers so that your code (assuming it is using a recent version of the Azure SDKs) can impersonate this identity to interact with ServiceBus/SQL Database or anything else that supports integrated managed authentication in Azure.

The second kind of Managed Identity is the one that you create before your Container App and grant the ‘AcrPull’ role on your Azure Container Registry. This identity is used by the host that ACA runs your containers on to authenticate to the ACR and pull the image; the running container then impersonates the Container App identity. It is absolutely valid to have multiple Container Apps use the same Managed Identity to authenticate to the same Azure Container Registry, but you probably want each Azure Container App to use its own Managed Identity to talk to its data stores, message broker and such.
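Granting that pull permission can itself be part of your IaC pipeline. The following is a minimal sketch, assuming the registry and the pull identity already exist in the same resource group; the parameter `registryName` and the symbolic names are made up for this example. The GUID is the built-in AcrPull role definition ID.

```bicep
// Sketch only: registry name and symbolic names are illustrative assumptions.
param registryName string

resource acr 'Microsoft.ContainerRegistry/registries@2022-12-01' existing = {
  name: registryName
}

resource pullIdentity 'Microsoft.ManagedIdentity/userAssignedIdentities@2018-11-30' existing = {
  name: 'logger-acr'
}

// Built-in AcrPull role definition
var acrPullRoleId = subscriptionResourceId('Microsoft.Authorization/roleDefinitions', '7f951dda-4ed3-4680-a7ca-43fe172d538d')

resource acrPullAssignment 'Microsoft.Authorization/roleAssignments@2022-04-01' = {
  name: guid(acr.id, pullIdentity.id, acrPullRoleId)
  scope: acr
  properties: {
    roleDefinitionId: acrPullRoleId
    principalId: pullIdentity.properties.principalId
    principalType: 'ServicePrincipal'
  }
}
```

The Container App definition from this post follows next and shows how both identities are referenced.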

```bicep
// New Managed Identity that the application can use inside the container
resource loggermsi 'Microsoft.ManagedIdentity/userAssignedIdentities@2018-11-30' = {
  name: 'logger-msi'
  location: location
}

// Existing Managed Identity to authenticate to the Azure Container Registry
resource loggermsiacr 'Microsoft.ManagedIdentity/userAssignedIdentities@2018-11-30' existing = {
  name: 'logger-acr'
}

// Azure Container App referencing both identities
resource loggers 'Microsoft.App/containerApps@2022-11-01-preview' = {
  name: 'logger'
  location: location
  identity: {
    type: 'UserAssigned'
    userAssignedIdentities: {
      '${loggermsi.id}': {} // referencing the managed identity for the app
      '${loggermsiacr.id}': {} // referencing the managed identity for the acr
    }
  }
  properties: {
    managedEnvironmentId: resourceId('Microsoft.App/managedEnvironments', environmentName)
    workloadProfileName: 'Consumption'
    configuration: {
      registries: [
        {
          server: 'dzreg1.azurecr.io'
          identity: loggermsiacr.id // managed identity to authenticate to the ACR
        }
      ]
      ...
    }
  }
}
```

The user-assigned identity of the Azure Container App is also relevant for loading your configuration and secret values at runtime and for securing these values in your dev/staging/production environments from developer teams. A new improvement now also allows you to reference secret values from an Azure Key Vault in your Azure Container App during startup by referencing the versioned value directly in your manifest. This also allows you to easily recycle and redeploy these values on a regular basis (which you should do independently of your deployments).
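To make the Key Vault reference more concrete, here is a minimal sketch of how it could look in Bicep. The secret name, environment variable name and the parameters `image` and `secretUri` are assumptions for this example. It also assumes that the `logger-msi` identity has been granted read access to secrets in the vault and that the image can be pulled without further registry configuration.

```bicep
// Sketch only: parameter names, secret name and env variable name are illustrative assumptions.
param location string = resourceGroup().location
param environmentName string
param image string

@description('Versioned secret URI, e.g. https://<vault>.vault.azure.net/secrets/<name>/<version>')
param secretUri string

resource appIdentity 'Microsoft.ManagedIdentity/userAssignedIdentities@2018-11-30' existing = {
  name: 'logger-msi'
}

resource app 'Microsoft.App/containerApps@2022-11-01-preview' = {
  name: 'logger'
  location: location
  identity: {
    type: 'UserAssigned'
    userAssignedIdentities: {
      '${appIdentity.id}': {}
    }
  }
  properties: {
    managedEnvironmentId: resourceId('Microsoft.App/managedEnvironments', environmentName)
    configuration: {
      secrets: [
        {
          name: 'broker-connection'
          keyVaultUrl: secretUri // value is fetched from Key Vault
          identity: appIdentity.id // identity used to read the secret
        }
      ]
    }
    template: {
      containers: [
        {
          name: 'logger'
          image: image
          env: [
            {
              name: 'BROKER_CONNECTION'
              secretRef: 'broker-connection' // surfaces the secret as an environment variable
            }
          ]
        }
      ]
    }
  }
}
```

Because the URI carries the secret version, rotating a value comes down to changing the parameter and redeploying.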

On the side of developer teams there is not much available today in terms of built-in role definitions, but you can easily create your own custom role definition, for example to allow teams to deploy Container Apps into existing ACA environments without giving them permissions to change the network, ingress, certificate or DNS configuration.

Here is for example such a role definition that you can then deploy and scope towards your subscriptions:

```json
{
  "Name": "ContainerApp Deployer",
  "IsCustom": true,
  "Description": "Write all containerapp resources, but does not allow you to make any changes to the environment",
  "Actions": [
    "Microsoft.App/containerApps/*/write",
    "Microsoft.App/containerApps/write",
    "Microsoft.Insights/alertRules/*",
    "Microsoft.Resources/deployments/*",
    "Microsoft.Resources/subscriptions/resourceGroups/read"
  ],
  "NotActions": [],
  "AssignableScopes": [
    "/subscriptions/xxxx"
  ]
}
```

Using Dapr as an abstraction between your application code and your external application dependencies like Kafka, ServiceBus or EventHub can also make a lot of sense, because you no longer need to test your services against a connected cloud service and can instead use a local container that provides the same capability. Finally, you can now also see the respective KEDA and Dapr versions that are used inside your environment.

Previously there was a dependency on Dapr to achieve service-to-service communication inside the cluster and to get secrets from Azure Key Vault. That has been improved, so Dapr is now a strictly optional capability: it can be very valuable, but you absolutely do not have to use it if you don’t want to.

Very exciting is the new workload profile concept, which allows you to provision compute from a predefined set of profiles with fixed CPU/memory configurations. The existing runtime experience has been renamed to Consumption and will going forward be optimised towards a fast-scaling, event-driven, per-second priced container runtime. Bigger workloads, on the other hand, can now be scheduled on instances of these workload profiles, which is more efficient if you need multiple of these instances and/or they need to be running 24x7.

Properly tracking and managing costs turns out to be a not-so-easy challenge in a shared Kubernetes environment. While there are very popular open source solutions to track CPU and memory usage, you are still faced with coming up with an algorithm/process to make sure that usage and costs are properly allocated to the teams that are sharing a Kubernetes cluster. In comparison, cost tracking in Azure Container Apps is solved implicitly, because every app and environment generates its own costs in your bill and you can use tags to track the costs accordingly.

Here is a sample list of the possible workload profiles that you can activate for Azure Container App environment side by side with the consumption profile:

```
Name         Cores    MemoryGiB    Category
-----------  -------  -----------  ---------------
D4           4        16           GeneralPurpose
D8           8        32           GeneralPurpose
D16          16       64           GeneralPurpose
E4           4        32           MemoryOptimized
E8           8        64           MemoryOptimized
E16          16       128          MemoryOptimized
Consumption  4        8            Consumption
```

All you have to do is reference the workload profiles that you want to be available in the definition of your Azure Container App environment, together with the minimum and maximum instance counts that you are willing to pay for. At scheduling time you can reference any of the profiles for a given Azure Container App and Azure will try to fit your application instance with its memory and CPU requests into the available profiles. As you know from Kubernetes, you cannot expect to assign all of the available CPU/memory resources of a host to your applications, because the underlying container runtime and its agents also reserve some resources to function properly.

```bicep
resource environment 'Microsoft.App/managedEnvironments@2022-11-01-preview' = {
  name: environmentName
  location: location
  properties: {
    workloadProfiles: [
      {
        name: 'consumption'
        workloadProfileType: 'Consumption'
      }
      {
        name: 'f4-compute'
        workloadProfileType: 'F4'
        minimumCount: 1 // lower bound of hosts you pay for
        maximumCount: 3 // upper bound of hosts you are willing to pay for
      }
    ]
    vnetConfiguration: {
      infrastructureSubnetId: '${vnet.id}/subnets/aca-control'
      internal: internalOnly
    }
  }
}
```

In total this is now significantly more flexible because you are no longer tied to the 1:2 CPU-to-memory ratio of the consumption plan, but you as a developer still need to make sure that you size the runtime resource needs of your application correctly. Unfortunately the built-in capabilities for monitoring resource utilisation are still limited, because you can only see memory working set and CPU core usage. While we are waiting for the enhancements, I described an alternative approach to work around that in my last post.
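To illustrate how an app is placed on one of the profiles from the environment definition, here is a minimal sketch. The app name, the `image` parameter and the CPU/memory figures are assumptions for this example; the requests are deliberately kept below the size of a single profile host to leave room for the resources the platform reserves.

```bicep
// Sketch only: app name, image parameter and resource sizes are illustrative assumptions.
param location string = resourceGroup().location
param environmentName string
param image string

resource worker 'Microsoft.App/containerApps@2022-11-01-preview' = {
  name: 'batch-worker'
  location: location
  properties: {
    managedEnvironmentId: resourceId('Microsoft.App/managedEnvironments', environmentName)
    workloadProfileName: 'f4-compute' // must match a profile name defined on the environment
    template: {
      containers: [
        {
          name: 'worker'
          image: image
          resources: {
            cpu: 2
            memory: '4Gi'
          }
        }
      ]
      scale: {
        minReplicas: 1
        maxReplicas: 3
      }
    }
  }
}
```

Whether a replica actually fits depends on what the host has left after platform overhead, so it is worth validating the sizing under realistic load.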

The observability stack for Azure Container Apps built on top of Azure Monitor can be quite powerful when using Dapr, because you get logs, metrics and distributed tracing activated without needing to configure anything special in your application or environment. However, I have received very positive feedback from customers who are using Datadog for Azure Container Apps, because Datadog gives you insights into different aspects of both application and runtime behaviour across multiple Azure Container Apps and environments.

If you are using multiple dynamically scaling Azure Container App environments in a subscription you also need to watch out for core quota assignments on your subscriptions which need to be sized and adjusted as you allocate more cores to your applications. As of today these limits can all be increased by a simple ticket but you need to watch for your current usage, your forecasted usage and act accordingly.

Looking back at what we were aiming to achieve, we can see that by preparing the network design and configuring the integration of additional managed services, RBAC and processes, we can indeed create a simple but fully managed application platform for developer teams that does not require regular infrastructure or maintenance work. The resulting developer experience can be comparable to a simple Kubernetes Namespace as a Service model, as long as your application architecture follows 12-factor app principles.

Of course we have to ask the question from the beginning again: “Is this a complete replacement for a Namespace as a Service offering that is empowered by the full spectrum of the Kubernetes ecosystem?”

Obviously the answer is no, because the Azure team made several very opinionated design choices that limit both the feature-rich capabilities and the responsibilities of a developer team that wants to run applications in their service. These decisions on the network integration of containers into your network, the implementation of default TLS on the built-in ingress controller, the possible scaling rules, the available workload profiles and the limited set of locally available storage types have an impact on the class of applications you can run. But maybe that is good enough for you, compared to the effort you would have to make to build your own Namespace as a Service?

In my opinion these are the (current) limitations which have an impact on the popular scenarios you can operate in Azure Container Apps (and for which you will therefore have a better developer experience, with more control and responsibility, on Kubernetes instead):

- Support for custom metrics: not yet available.

- URL based routing and path based routing: not yet available.

- KEDA scaling with managed identities: not yet available.

- Azure Defender support: not yet available.

Some of these missing features are already available for testing in preview, while others are being actively worked on. Take a look at your requirements and validate for yourself if and how an Azure Container App environment can be just good enough for your workloads and your teams.

Hopefully this article helped you answer your questions about if and how Azure Container Apps can be used at scale, and whether it can even give you an operational/developer experience similar to a custom-made Namespace as a Service offering.
