Creating a Continuous Integration (CI) Pipeline in Azure DevOps

One of the main points of microservices and containers is avoiding the whole “it works on my machine” problem. While containers are a vehicle for achieving this, we also need a vehicle for carrying the container from the developer workstation to other machines. In this guide that vehicle is Azure DevOps, the artist formerly known as Visual Studio Team Services (VSTS).

DevOps as a term encompasses more than just the technical pieces, but this guide focuses on only a few isolated components. The first is Continuous Integration (CI), which handles building the code. The second is Continuous Deployment (CD), which deploys the code built by CI. CD is covered in the next section.

Let’s start by creating a new Dockerfile specifically for CI. Add a new file called Dockerfile.CI with the following contents:

```dockerfile
FROM microsoft/dotnet:2.2-sdk AS build-env
WORKDIR /app

# Copy csproj and restore as distinct layers
COPY *.csproj ./
RUN dotnet restore

# Copy everything else and build
COPY . ./
RUN dotnet publish -c Release -o out

# Build runtime image
FROM microsoft/dotnet:2.2-sdk
WORKDIR /app
COPY --from=build-env /app/out .
ENTRYPOINT ["dotnet", "AKS-Web-App.dll"]
```

You will notice that Visual Studio arranges it as a child node under the existing Dockerfile.

We want to use Helm for packaging and deploying, and for this we need a Helm chart. Create a default chart by running `helm create` followed by a name for the chart.

It might not be clear yet why Helm is needed, but it adds a few extra configuration abilities. The following is a recap of the configuration files for a service:

- Dockerfiles. These describe a single container with low-level details like the base image, exposed ports, etc.

- Docker-Compose. These describe a collection of containers that logically belong together. For example, having both a database container and an API container.

- Helm charts. These typically describe additional metadata for the services, like the external URL, the number of replicas, etc.

While it is not a requirement to use all three levels of configuration, it does make some things easier.
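To make the Docker-Compose level more concrete, here is a minimal sketch of a docker-compose.yml that pairs the web app with a database container, as in the example above. The service names, the SQL Server image and the password placeholder are assumptions for illustration only; they are not part of this project.

```yaml
# Hypothetical docker-compose.yml: one API container plus one database container.
version: '3.4'

services:
  aksdotnetcoder:              # assumed service name for the web app
    image: aksdotnetcoder
    build:
      context: .
      dockerfile: Dockerfile   # the regular (non-CI) Dockerfile
    ports:
      - "8080:80"              # host port 8080 -> container port 80
    depends_on:
      - db                     # start the database container first

  db:                          # assumed database service
    image: mcr.microsoft.com/mssql/server:2017-latest
    environment:
      ACCEPT_EULA: "Y"
      SA_PASSWORD: "<your-strong-password>"
```

Note that `depends_on` only controls start order; it does not wait until the database is ready to accept connections.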

The default Helm chart does not deploy the code in your Visual Studio solution; it deploys an nginx container instead, so a few adjustments are needed. Helm charts have a templating system, but the important parts are in the values.yaml file. A simple values.yaml for this service looks like this:

```yaml
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

replicaCount: 1

image:
  repository: aksdotnetacr.azurecr.io/aksdotnetcoder
  tag: latest
  pullPolicy: IfNotPresent

nameOverride: ""
fullnameOverride: ""

service:
  type: ClusterIP
  port: 80

ingress:
  enabled: false
  annotations: {}
  path: /
  hosts:
    - aksdotnetcoder
  tls: []
  #  - secretName: chart-example-tls
  #    hosts:
  #      - chart-example.local

resources: {}

nodeSelector: {}

tolerations: []

affinity: {}
```
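For context on how these values are used: the files in the chart's templates folder read them through `.Values`. An abridged excerpt of the kind of deployment.yaml that `helm create` generates (Helm 2) looks roughly like the sketch below. The `aksdotnetcoder` chart name in the helper call is an assumption, and the selector and labels are left out, so this is not a complete manifest.

```yaml
# templates/deployment.yaml (abridged sketch; selector and labels omitted)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "aksdotnetcoder.fullname" . }}   # helper defined in _helpers.tpl (chart name assumed)
spec:
  replicas: {{ .Values.replicaCount }}              # 1, from values.yaml
  template:
    spec:
      containers:
        - name: {{ .Chart.Name }}
          # With the values above this resolves to aksdotnetacr.azurecr.io/aksdotnetcoder:latest
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
```

Because the image reference is assembled from `image.repository` and `image.tag`, pointing the chart at your own registry is a matter of editing values.yaml rather than the templates.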

Check in your code and return to the Azure DevOps Portal.

Creating a Continuous Deployment (CD) Pipeline in Azure DevOps

A dev might be more than happy to see the CI pipeline finish in the green, but code that compiles isn’t worth much if you’re not able to deploy it, so the next part is about building a second pipeline to take care of that.

Go to Builds => Releases and create a new pipeline, again choosing the Empty job template.

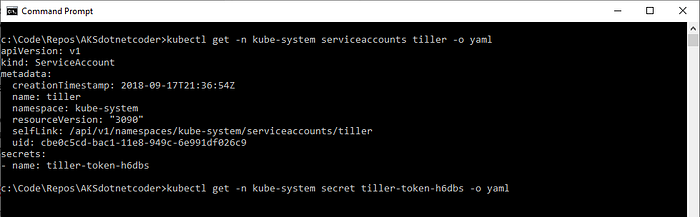

To acquire the token and certificate, run the two kubectl commands below:

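```shell
# Look up the tiller service account; its secrets list shows the name of its token secret
kubectl get -n kube-system serviceaccount tiller -o yaml

# Show that secret (replace tiller-token-xyz with the name listed by the first command)
kubectl get -n kube-system secret tiller-token-xyz -o yaml
```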

The second command returns the secret, which contains the token and the certificate as two Base64-encoded strings. Copy and paste these into the form and hit OK.

Note: the UI shows the kubectl command without the namespace (kube-system), which means you will get an error saying that the service account cannot be found.
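For orientation, the relevant parts of the two outputs look roughly like the abridged sketch below. The `xyz` suffix stands in for the random suffix Kubernetes generates, values are shown as placeholders, and metadata such as uid and timestamps is omitted. The `token` and `ca.crt` entries under `data` are the two Base64-encoded strings the form asks for.

```yaml
# Output of: kubectl get -n kube-system serviceaccount tiller -o yaml (abridged)
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
secrets:
  - name: tiller-token-xyz       # secret name to use in the second command
---
# Output of: kubectl get -n kube-system secret tiller-token-xyz -o yaml (abridged)
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: tiller-token-xyz
  namespace: kube-system
data:
  ca.crt: <Base64-encoded cluster certificate>
  namespace: <Base64-encoded namespace>
  token: <Base64-encoded service account token>
```

Clusters running Kubernetes 1.24 or later no longer generate this token secret automatically, so the `secrets` list may be empty there.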

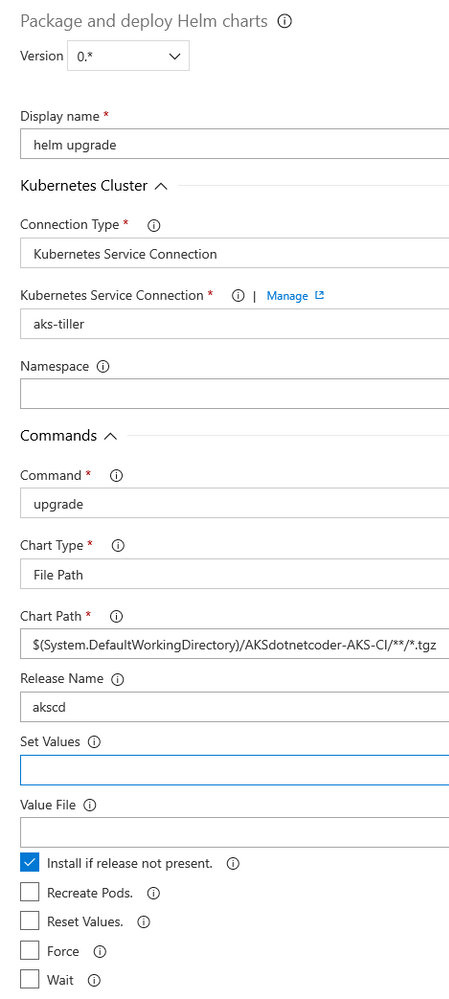

You can then define the second Helm task. Reuse the Kubernetes service connection from the previous task. Make sure you choose File path as the Chart Type and that the path ends with /**/*.tgz.
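If you later move to YAML pipelines, the same upgrade step could be expressed with the `HelmDeploy@0` task. This is a sketch under assumptions, not what this guide configures in the UI: the service connection name, namespace and release name are hypothetical placeholders.

```yaml
# Hypothetical YAML equivalent of the second Helm task (helm upgrade from a packaged chart)
- task: HelmDeploy@0
  inputs:
    connectionType: 'Kubernetes Service Connection'
    kubernetesServiceConnection: 'aks-tiller'                  # hypothetical service connection name
    namespace: 'default'                                       # assumed target namespace
    command: 'upgrade'
    chartType: 'FilePath'                                      # "File path" in the UI
    chartPath: '$(System.DefaultWorkingDirectory)/**/*.tgz'    # packaged chart from the CI artifact
    releaseName: 'aksdotnetcoder'                              # hypothetical release name
    install: true                                              # install the release if it does not exist yet
```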

Hit Save followed by Release.

Make sure the Version aligns with your last build.

The UI should indicate the progress of the deployment.

Let it work its magic and watch things proceed to Succeeded.

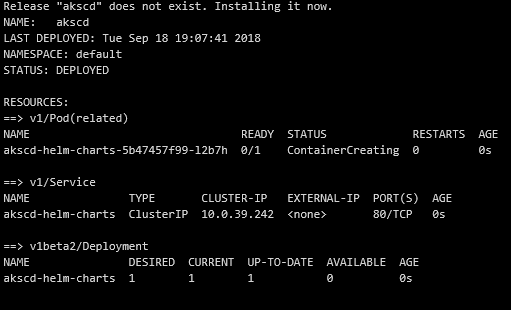

You can click the task log and peek inside the helm upgrade log to see kubectl-style output of the deployed resources for verification.

Jumping back to the command line, you can run `kubectl get all` to verify things outside the Azure DevOps UI.

The output of a working CD pipeline.

We’ve come a long way, but there are a few loose threads. These will be tied up in the next, and concluding, part.
