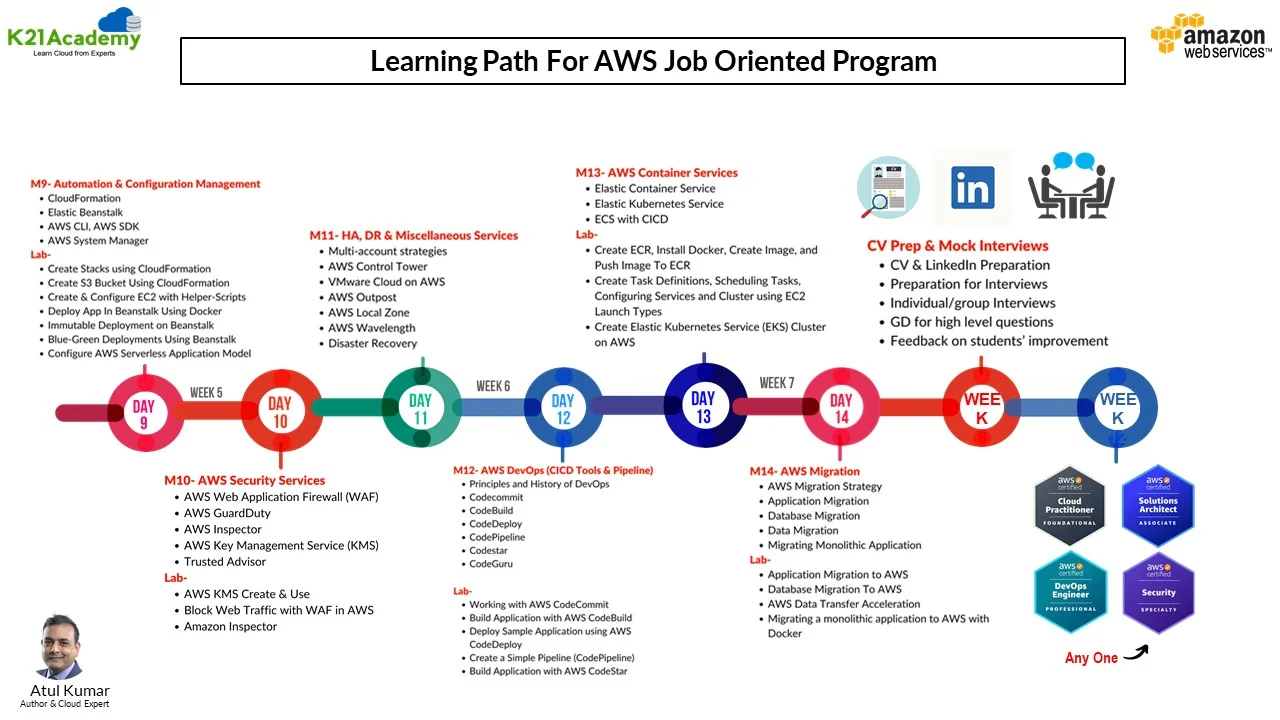

AWS Cloud Job Oriented Program: Step-by-Step Hands-on Labs & Projects

This walkthrough of the Step-By-Step Activity Guides of the AWS Job Oriented training program will prepare you thoroughly for AWS certification and jobs.

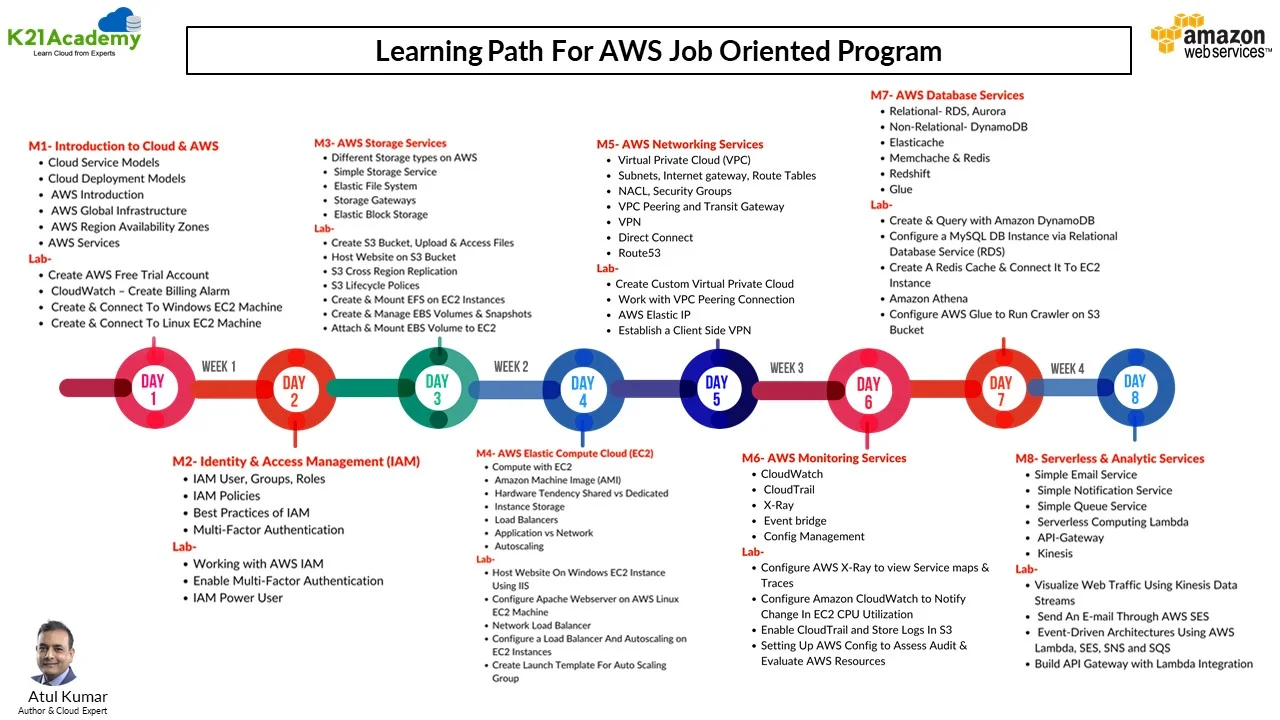

The AWS Job Oriented training program includes labs in the following areas:

- AWS Basic Labs

- AWS Identity & Access Management (IAM)

- AWS Storage Services

- AWS Elastic Compute Cloud (EC2)

- AWS Networking Services

- AWS Monitoring Services

- AWS Database Services

- AWS Serverless & Analytic Services

- AWS Automation & Configuration Management

- AWS Security Services

- CICD Tools and Pipeline

- AWS Container Services

- AWS Migration

- Real-time Projects

Lab 01: Create an AWS Free Trial Account

Embark on your AWS journey by setting up a free trial account. This hands-on lab guides you through the initial steps of creating an AWS account, giving you access to a plethora of cloud services to experiment and build with.

Amazon Web Services (AWS) offers new subscribers a Free Tier account for 12 months so that they can get hands-on experience with AWS services. The Free Tier covers a number of services, each with usage limits, which makes it suitable for hands-on practice and learning as well as for regular business use.

Every service in the Free Tier has a usage limit, and usage beyond that limit is charged. In this lab, we look at how to register for an AWS Free Tier account.

To learn how to create a free AWS account, see our step-by-step blog, How To Create AWS Free Tier Account.

Lab 02: CloudWatch – Create Billing Alarm & Service Limits

Dive into CloudWatch, AWS’s monitoring service. This lab focuses on setting up billing alarms to manage costs effectively and keeping an eye on service limits to ensure your applications run smoothly within defined boundaries.

AWS billing notifications can be enabled using Amazon CloudWatch, the AWS service that monitors activity across your AWS account. In addition to billing notifications, CloudWatch collects metrics and logs, monitors applications, and detects changes in your AWS account usage.

Amazon CloudWatch offers a number of metrics on which you can set alarms. For example, you can set an alarm to warn you when a running instance’s CPU or memory utilization exceeds 90% or when your estimated charges exceed $100. The AWS Free Tier includes 10 alarms and 1,000 email notifications each month.
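As a rough illustration of the $100 example above, the sketch below assembles the settings for a billing alarm as a plain Python dictionary. The helper name `billing_alarm_params`, the alarm name, and the SNS topic ARN are hypothetical placeholders; the keys follow the parameters of CloudWatch's `PutMetricAlarm` API.

```python
def billing_alarm_params(threshold_usd, sns_topic_arn, alarm_name="billing-alarm"):
    """Build keyword arguments for CloudWatch's PutMetricAlarm API.

    The alarm name and topic ARN are illustrative assumptions.
    """
    if threshold_usd <= 0:
        raise ValueError("threshold_usd must be positive")
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/Billing",
        "MetricName": "EstimatedCharges",
        "Dimensions": [{"Name": "Currency", "Value": "USD"}],
        "Statistic": "Maximum",
        "Period": 21600,  # six hours, in seconds
        "EvaluationPeriods": 1,
        "Threshold": float(threshold_usd),
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # SNS topic that sends the email
    }


# Hypothetical topic ARN, used only to show the shape of the result.
params = billing_alarm_params(100, "arn:aws:sns:us-east-1:123456789012:billing-alerts")
print(params["MetricName"], params["Threshold"])
```

With boto3 installed and credentials configured, a dictionary like this could be passed to `boto3.client("cloudwatch", region_name="us-east-1").put_metric_alarm(**params)`. Billing metrics are published in the US East (N. Virginia) Region, so the alarm needs to be created there.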

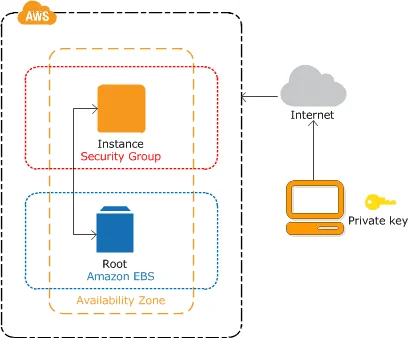

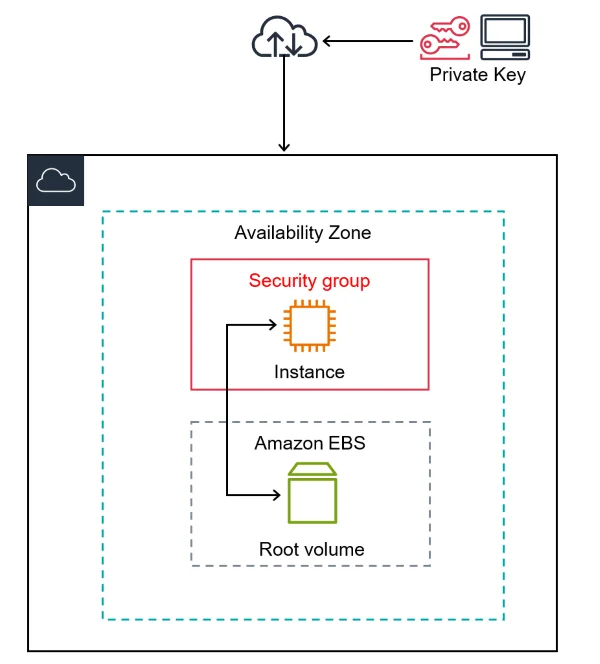

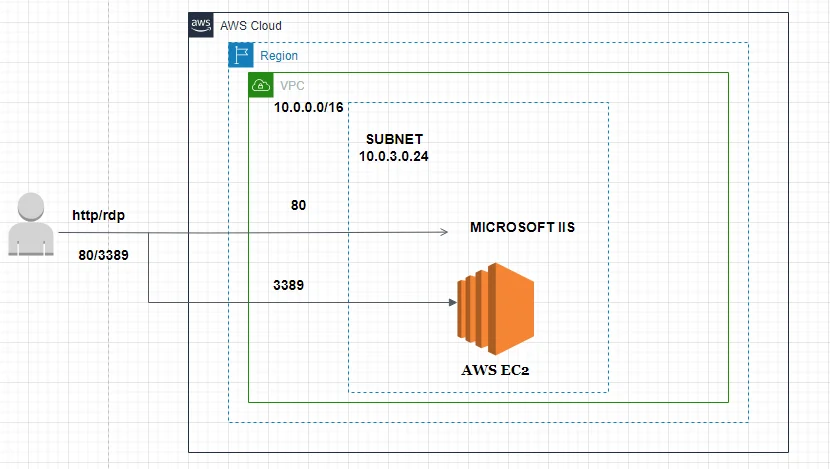

Lab 03: Create And Connect To Windows EC2 Machine

Get practical experience with Elastic Compute Cloud (EC2) by launching a Windows instance. Learn the nuances of instance creation and connect seamlessly to Windows-based virtual machines.

Lab 04: Create And Connect To Linux EC2 Machine

Extend your EC2 skills by spinning up a Linux instance. Discover the nuances of the Linux environment within AWS, from creation to connecting and managing instances efficiently.

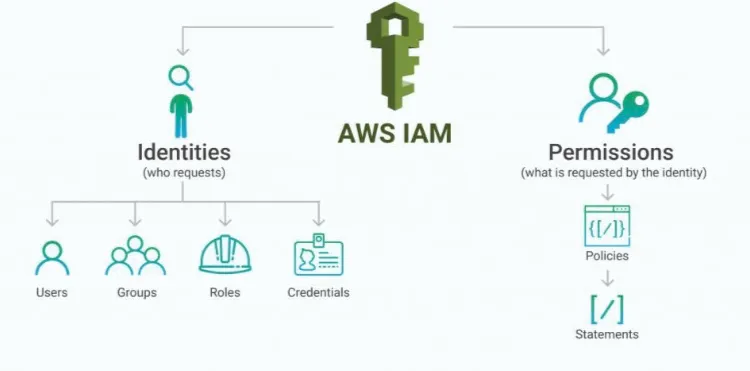

Lab 05: Working with AWS IAM

Unlock the power of Identity and Access Management (IAM) by navigating through user and group management. Gain insights into the foundation of AWS security, controlling who can access your resources and what actions they can perform.

Lab 06: Enable Multi-Factor Authentication

Elevate your security posture by implementing Multi-Factor Authentication (MFA) in IAM. This lab walks you through the process of adding an extra layer of authentication for enhanced account protection.

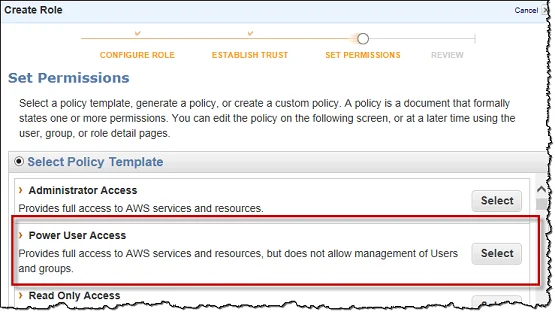

Lab 07: IAM Power User

Become an IAM power user by delving into advanced features. Learn to create and manage policies, roles, and permissions, gaining mastery over nuanced access control scenarios.
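To give a feel for what an IAM policy looks like, here is a minimal sketch that builds a read-only policy for a single S3 bucket as a Python dictionary and prints it as JSON. The helper name `s3_read_only_policy` and the bucket name are hypothetical; the document structure (`Version`, `Statement`, `Effect`, `Action`, `Resource`) is the standard IAM policy layout.

```python
import json


def s3_read_only_policy(bucket_name):
    """Return an IAM policy document granting read-only access to one bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],  # bucket-level action
            },
            {
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"{bucket_arn}/*"],  # object-level action
            },
        ],
    }


# "example-lab-bucket" is a placeholder bucket name.
print(json.dumps(s3_read_only_policy("example-lab-bucket"), indent=2))
```

The printed JSON is the kind of document you could paste into the IAM console's policy editor. Note that listing applies to the bucket ARN while reading applies to the objects under it, which is why the two statements use different resources.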

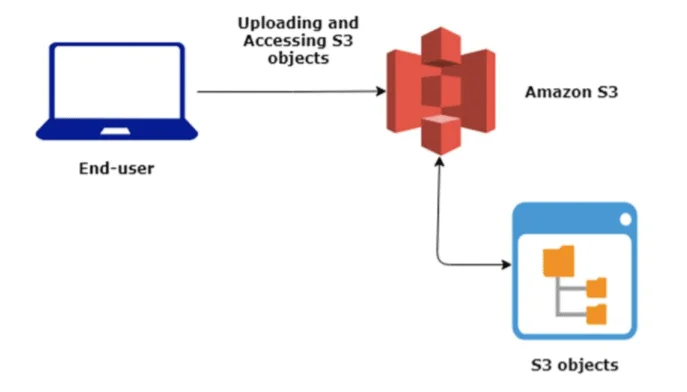

Lab 08: Create S3 Bucket, Upload and access Files, And Host the Website

Explore the versatile Amazon Simple Storage Service (S3) by creating buckets, uploading files, and even hosting a static website. This lab provides hands-on experience in leveraging S3 for scalable and secure object storage.

Lab 09: Create and mount Elastic File System (EFS) on EC2 Instances

Dive into Elastic File System (EFS) to create a scalable and shared file storage solution. Learn to mount EFS on EC2 instances, facilitating collaboration and data access across multiple compute resources.

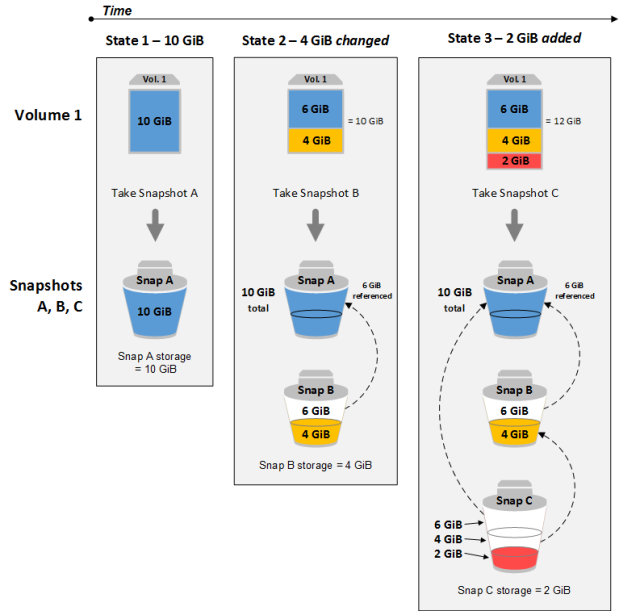

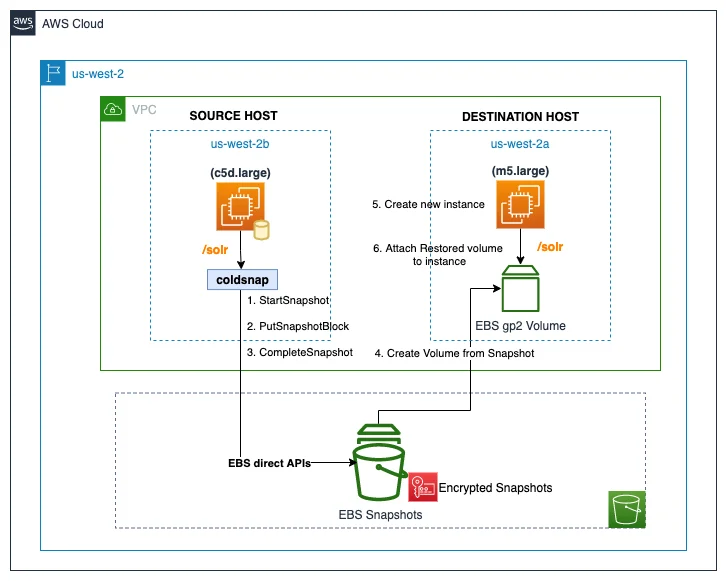

Lab 10: Create and Manage EBS Volumes & Snapshots

Master Elastic Block Store (EBS), a fundamental component for EC2 instances. This lab guides you through the creation and management of EBS volumes and snapshots, ensuring data durability and flexibility.

Lab 11: Host Website On Windows EC2 Instance Using IIS

Take a deeper dive into EC2 by deploying a website on a Windows instance using Internet Information Services (IIS). Gain insights into web hosting on AWS and configuring Windows-based servers.

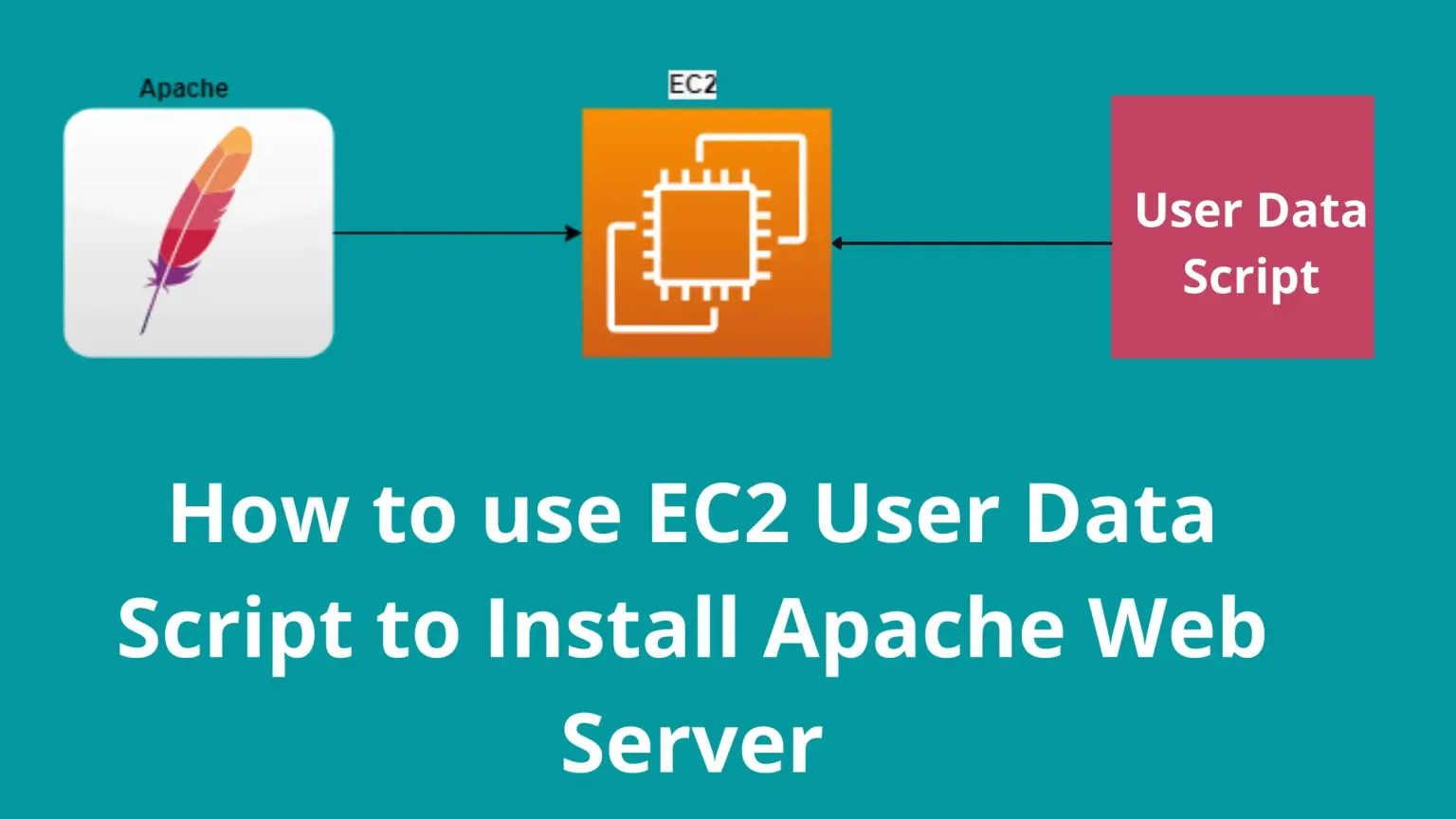

Lab 12: Configure Apache Webserver on AWS Linux EC2 Machine

Extend your web hosting knowledge by configuring an Apache web server on a Linux-based EC2 instance. Understand the intricacies of hosting applications on a Linux environment within AWS.

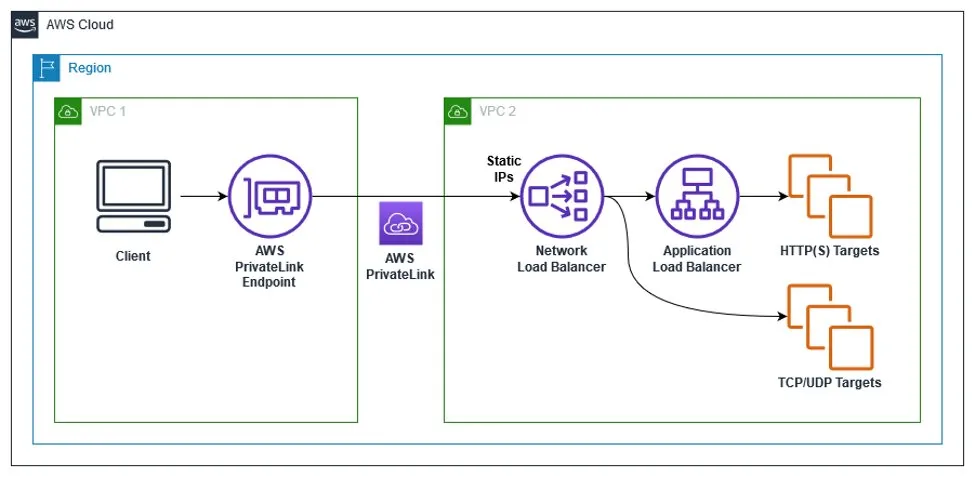

Lab 13: Network Load Balancer

Delve into the world of load balancing with Network Load Balancer (NLB). This lab provides hands-on experience in setting up and optimizing network-based load balancing for high availability.

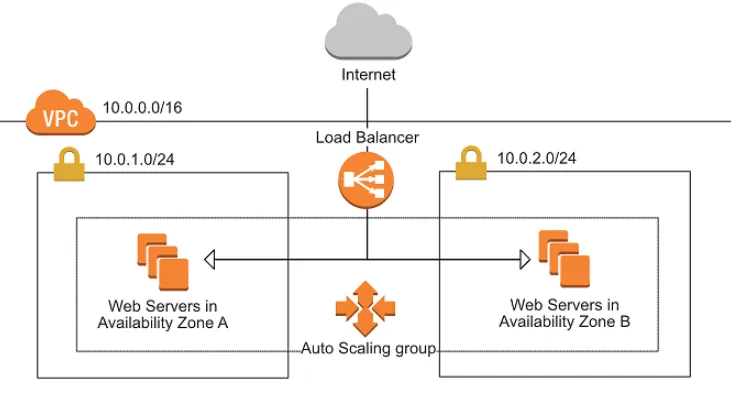

Lab 14: Configure a Load Balancer And Autoscaling on EC2 Instances

Optimize your infrastructure’s performance and availability by configuring load balancers and implementing auto-scaling on EC2 instances. This lab equips you with essential skills for managing dynamic workloads.

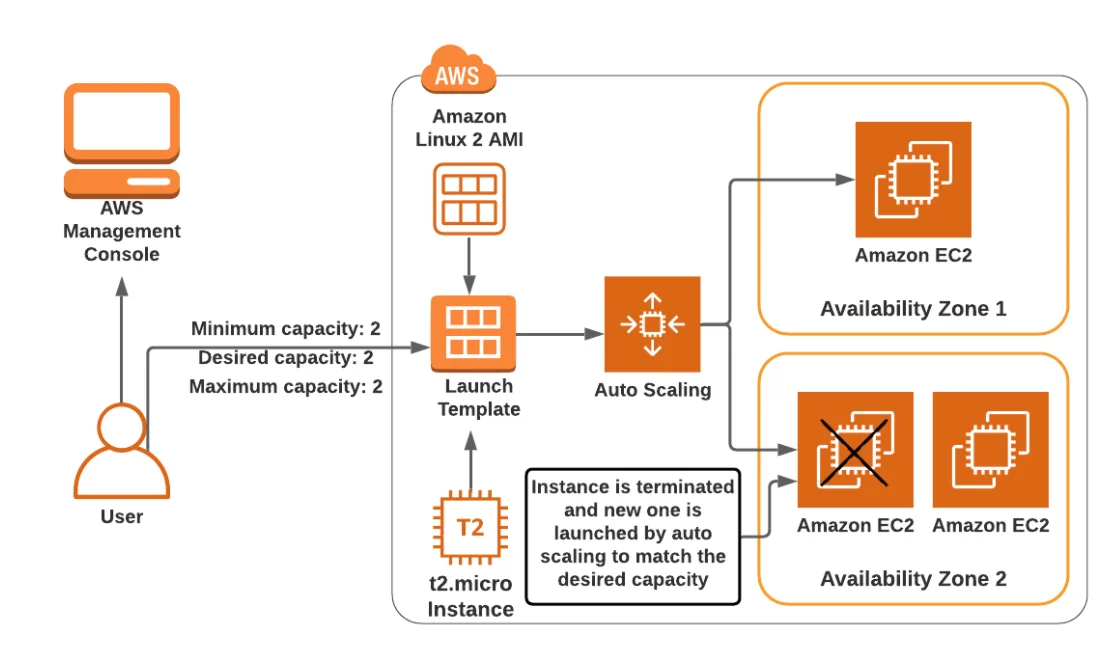

Lab 15: Create Launch Template For Auto Scaling Group

Streamline the process of launching and scaling EC2 instances with launch templates. This lab guides you through creating templates for efficient and consistent auto-scaling group deployments.

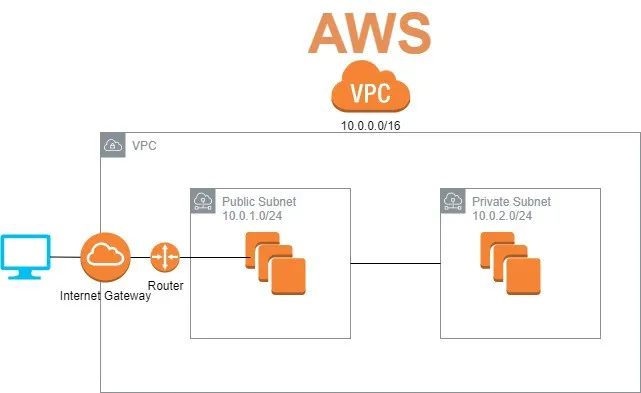

Lab 16: Create a Custom Virtual Private Cloud

Build a tailored Virtual Private Cloud (VPC) to isolate and organize your AWS resources. This lab delves into creating custom VPCs, defining subnets, and configuring routing tables for optimal network architecture.
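Defining subnets starts with splitting the VPC's address range. The sketch below uses Python's standard `ipaddress` module to carve a VPC CIDR block into equal subnets; the CIDR `10.0.0.0/16`, the `/24` subnet size, and the helper name `plan_subnets` are assumptions chosen for illustration. It also subtracts the five addresses AWS reserves in every subnet.

```python
import ipaddress

# AWS reserves the first four addresses and the last address of each subnet.
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr, new_prefix, count):
    """Return the first `count` subnets of size /new_prefix inside vpc_cidr."""
    vpc = ipaddress.ip_network(vpc_cidr)
    plan = []
    for subnet in vpc.subnets(new_prefix=new_prefix):
        if len(plan) == count:
            break
        plan.append(
            {
                "cidr": str(subnet),
                "usable_hosts": subnet.num_addresses - AWS_RESERVED_PER_SUBNET,
            }
        )
    if len(plan) < count:
        raise ValueError("VPC range is too small for the requested subnets")
    return plan


for entry in plan_subnets("10.0.0.0/16", 24, 2):
    print(entry["cidr"], entry["usable_hosts"])
```

A common layout is one public and one private subnet per Availability Zone, so a plan like this would typically be repeated for each zone you use.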

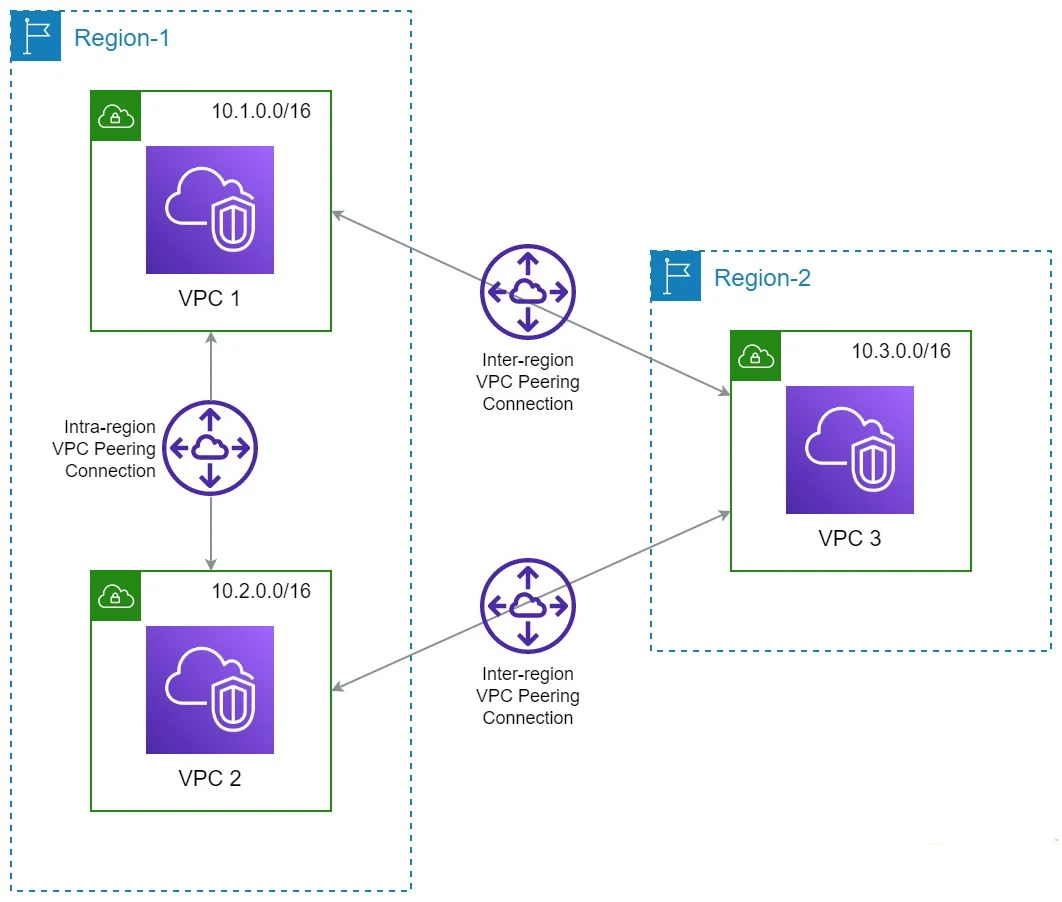

Lab 17: Work with VPC Peering Connection

Connect multiple VPCs seamlessly with VPC peering connections. This lab provides hands-on experience in establishing and configuring peering connections for secure and efficient communication.

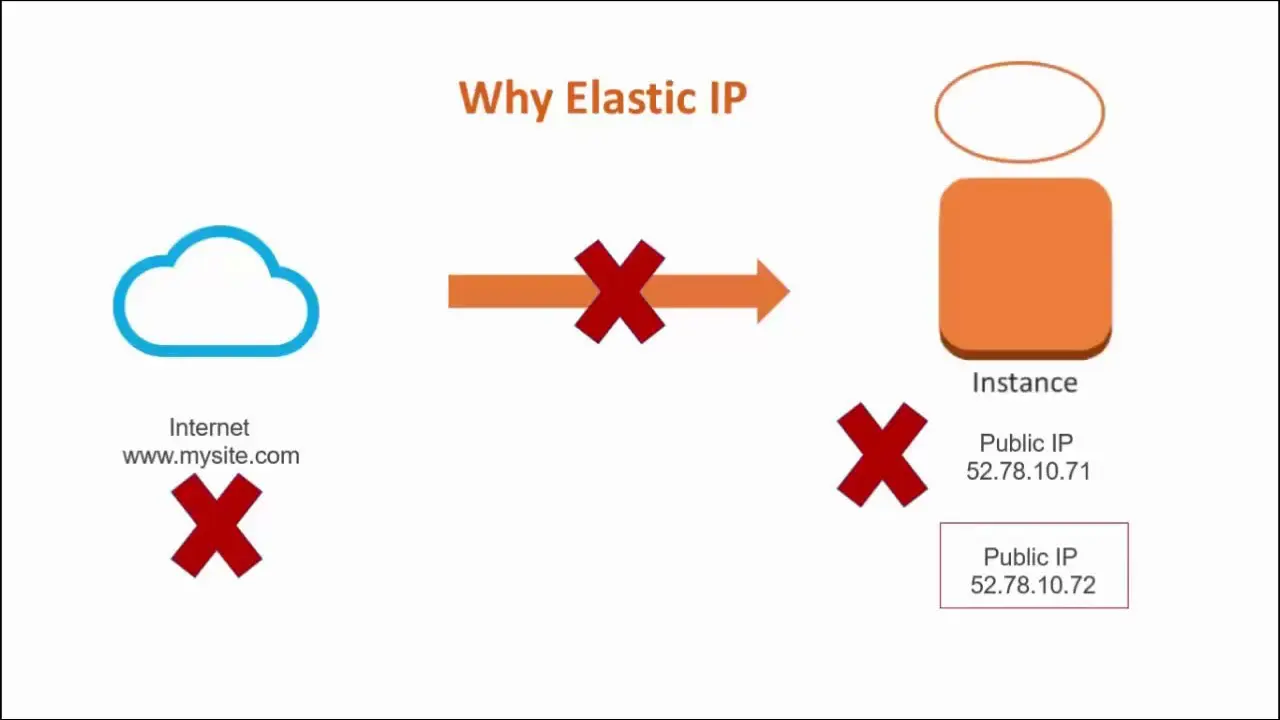

Lab 18: AWS Elastic IP

Explore the benefits of Elastic IP addresses in AWS. This lab guides you through the process of allocating and associating Elastic IPs to EC2 instances, providing static public IP addresses for dynamic cloud environments.

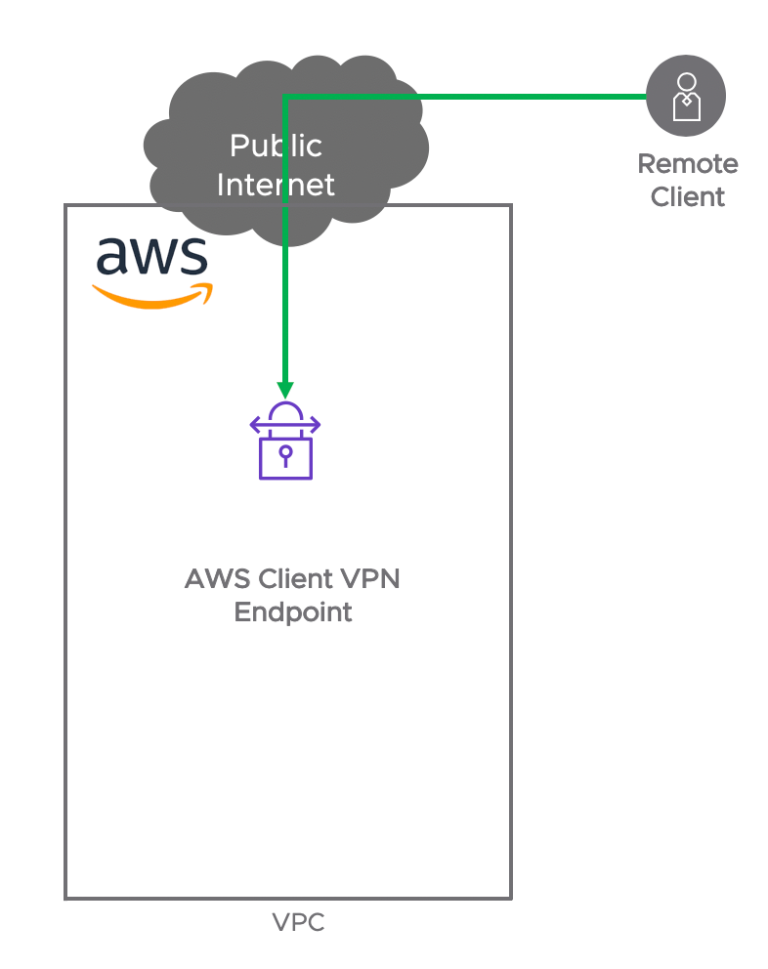

Lab 19: Establish a Client Side VPN

Enhance your network security by establishing a client-side VPN connection to AWS. This lab walks you through the setup, configuration, and optimization of VPN connections for secure data transmission.

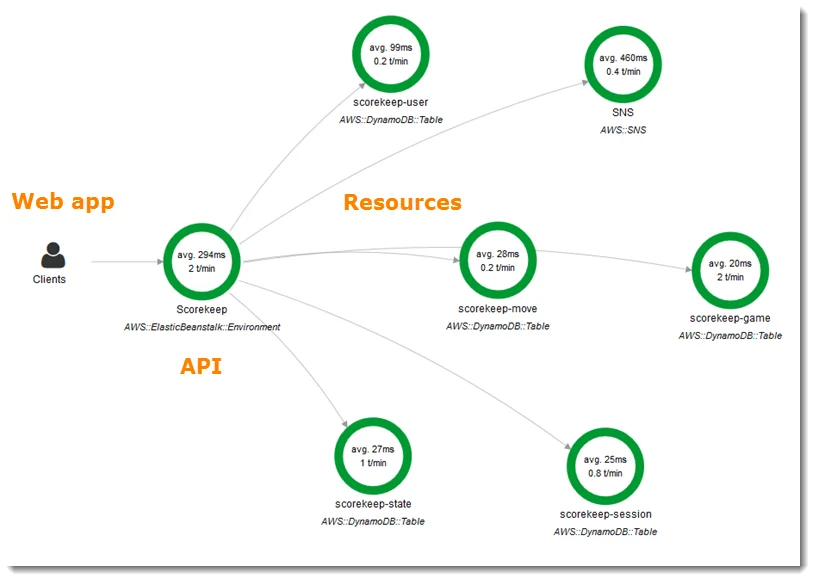

Lab 20: Get Started with AWS X-Ray

Step into the world of AWS X-Ray, a powerful tool for analyzing and debugging distributed applications. This lab provides hands-on experience in instrumenting applications and gaining insights into performance bottlenecks.

Lab 21: Configure Amazon CloudWatch to Notify Change In EC2 CPU Utilization

Master Amazon CloudWatch for proactive monitoring of your EC2 instances. This lab focuses on setting up notifications based on changes in CPU utilization, ensuring timely responses to performance fluctuations.

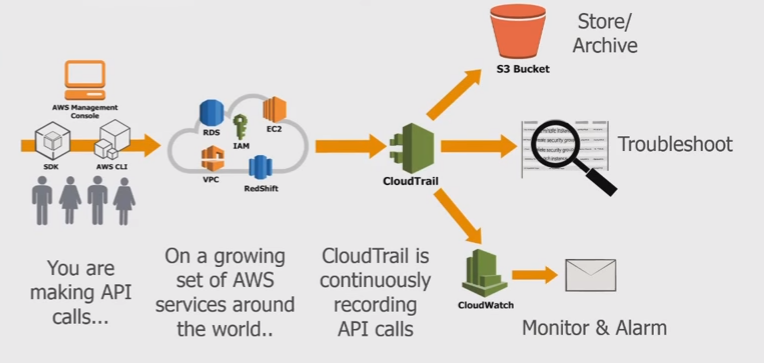

Lab 22: Enable CloudTrail and Store Logs In S3

Enhance your AWS security by enabling AWS CloudTrail. This lab guides you through the process of setting up CloudTrail and storing logs in Amazon S3 for comprehensive audit trails and compliance.

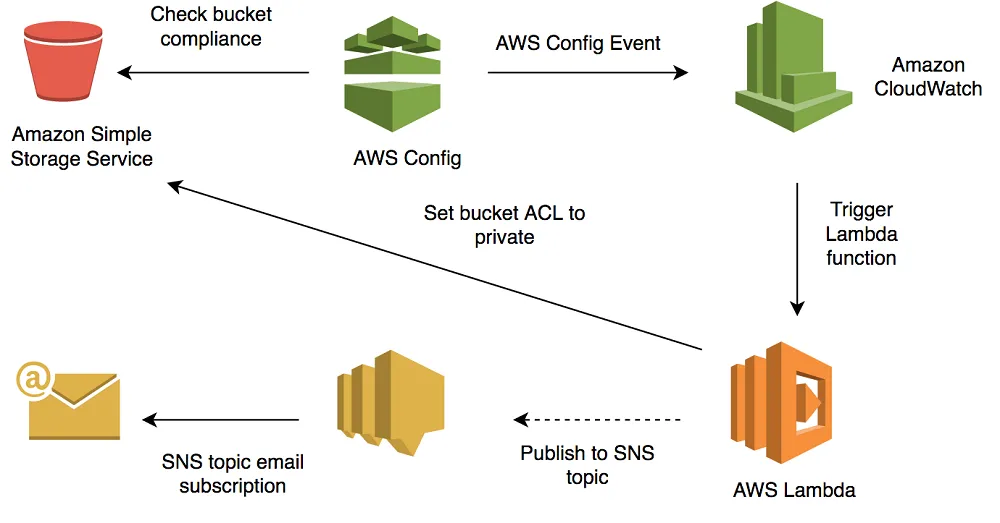

Lab 23: Setting Up AWS Config to Assess Audit & Evaluate AWS Resources

Gain control and visibility into your AWS resource configurations with AWS Config. This lab walks you through the setup and utilization of AWS Config for continuous assessment, auditing, and evaluation of your cloud environment.

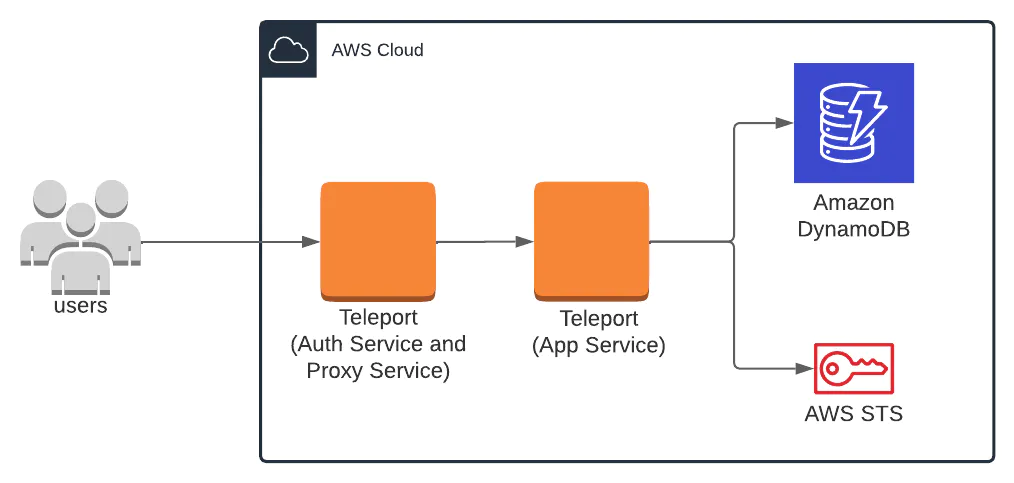

Lab 24: Create & Query with Amazon DynamoDB

Dive into Amazon DynamoDB, a fully managed NoSQL database service. This lab provides hands-on experience in creating tables and executing queries, exploring the scalability and flexibility of DynamoDB.

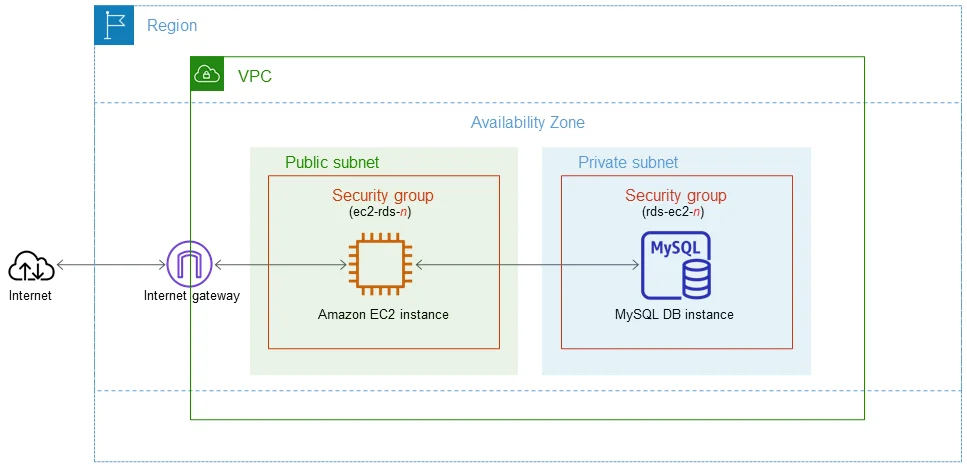

Lab 25: Configure a MySQL DB Instance via Relational Database Service (RDS)

Navigate through Amazon Relational Database Service (RDS) to configure a MySQL database instance. This lab equips you with the skills to set up, manage, and optimize a relational database in the AWS cloud.

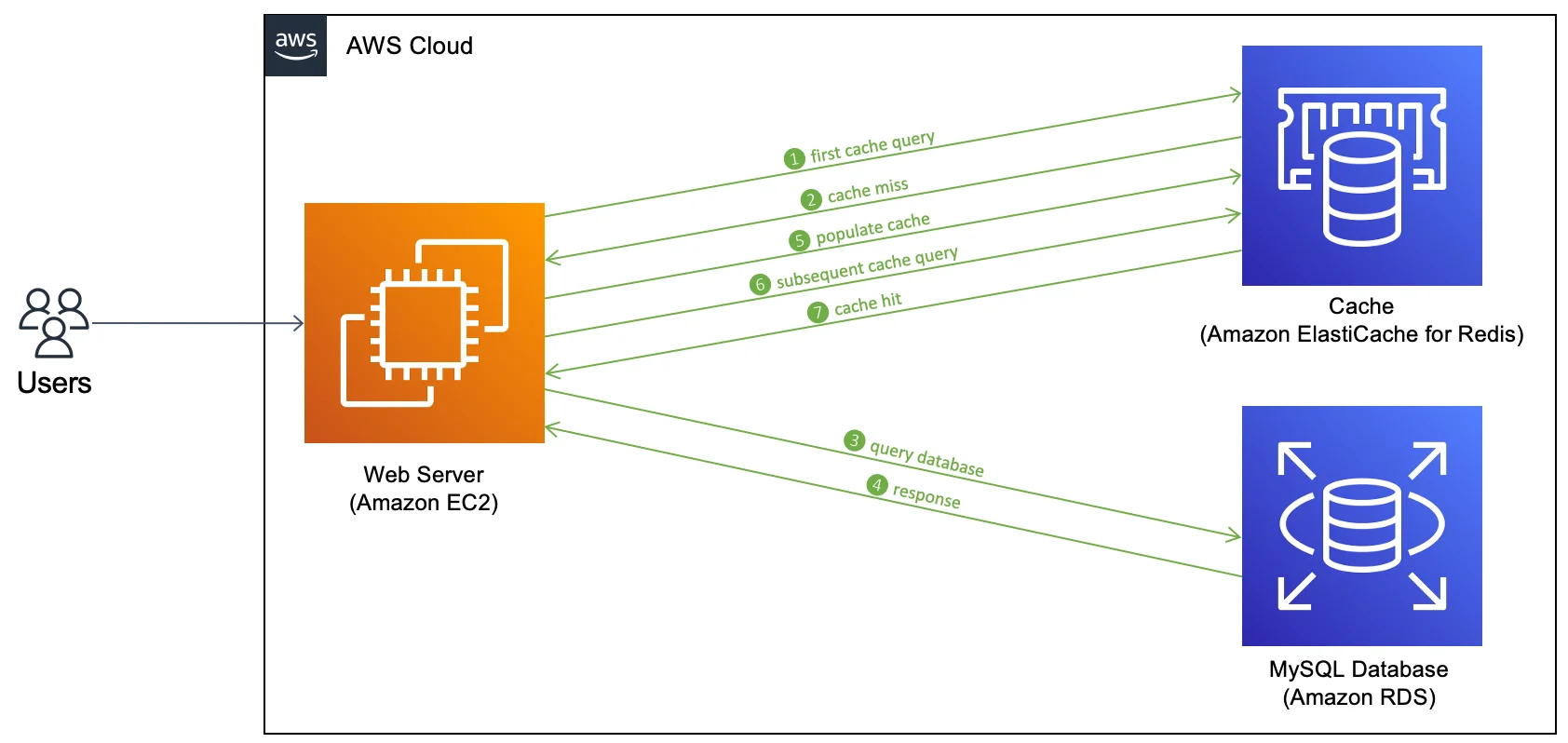

Lab 26: Create A Redis Cache and connect It To EC2 Instance

Explore Amazon ElastiCache and its Redis caching capabilities. This lab guides you through the creation of a Redis cache cluster and connecting it to an EC2 instance, enhancing data retrieval performance.

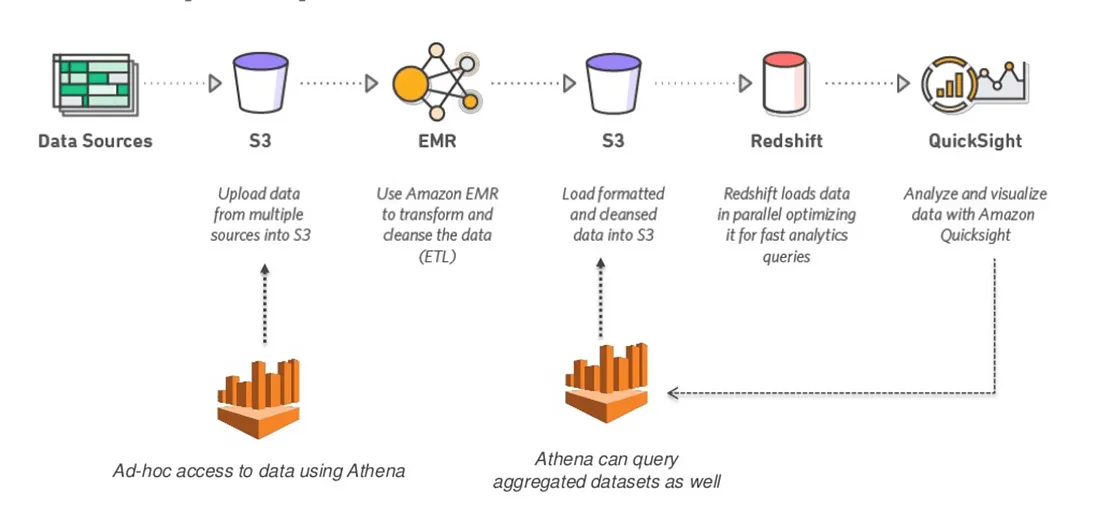

Lab 27: Amazon Athena

Unlock the power of serverless querying with Amazon Athena. This lab introduces you to Athena’s capabilities, allowing you to run ad-hoc SQL queries on data stored in Amazon S3.

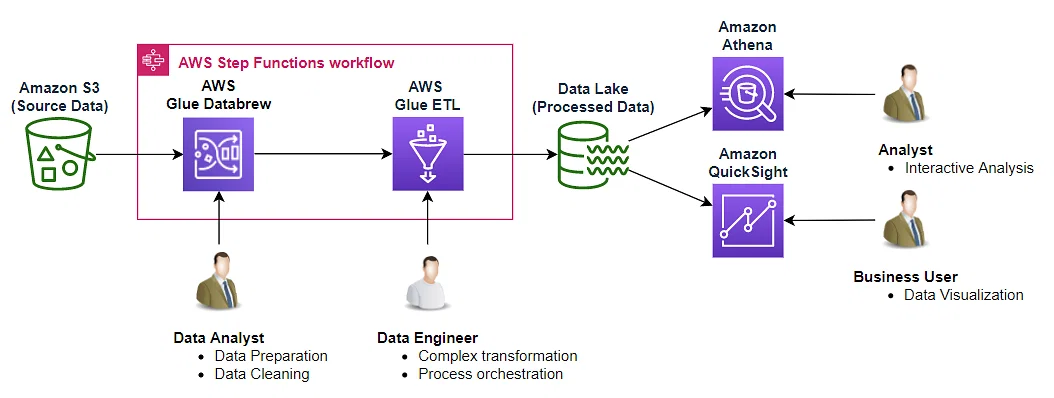

Lab 28: Introduction to AWS Glue

Delve into AWS Glue for data integration and transformation. This lab provides hands-on experience in discovering, cataloging, and transforming data, laying the foundation for efficient data processing.

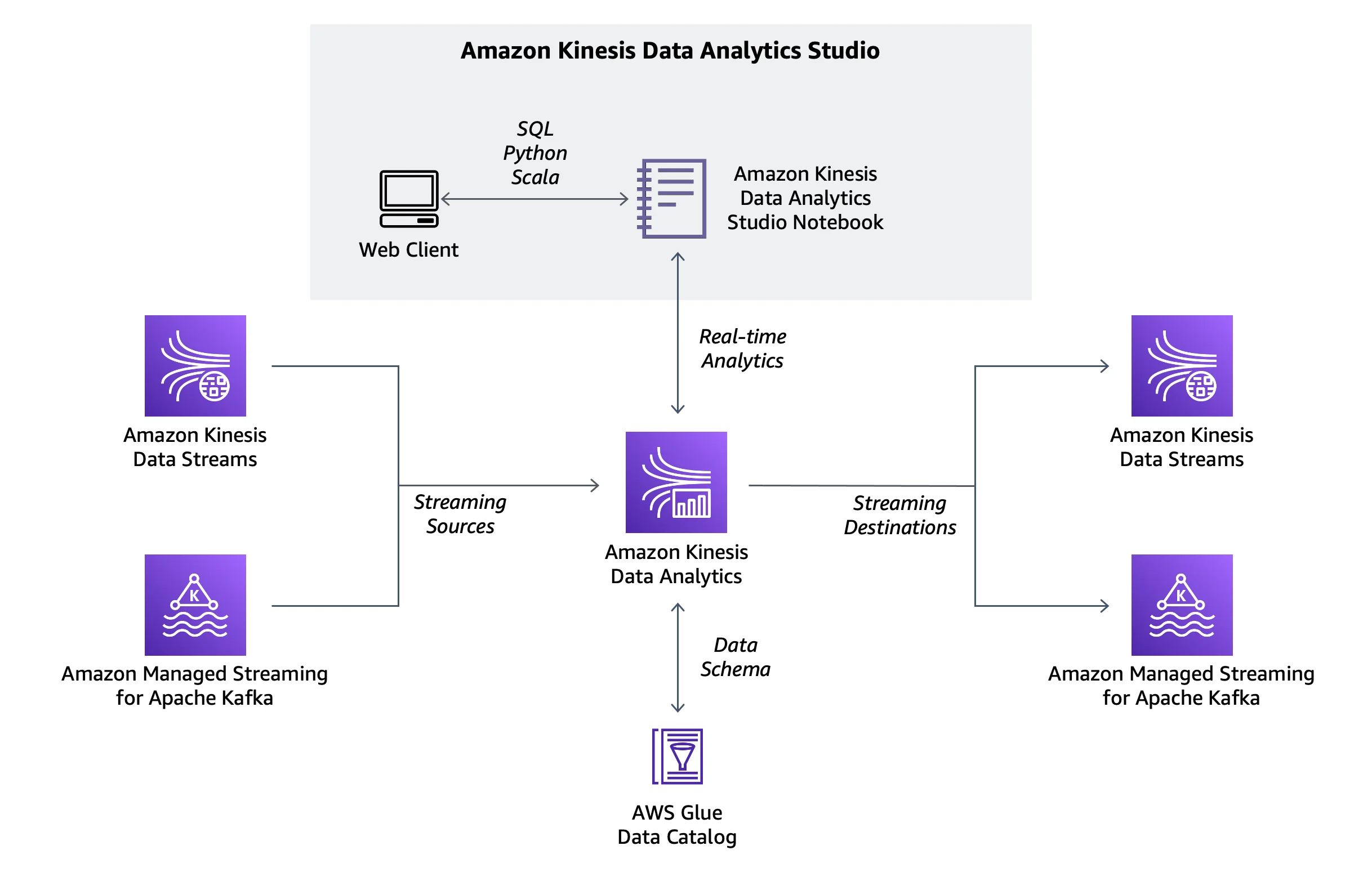

Lab 29: Visualize Web Traffic Using Kinesis Data Streams

Enter the realm of real-time data streaming with Amazon Kinesis Data Streams. This lab guides you through the process of visualizing web traffic patterns, showcasing the capabilities of Kinesis for data analytics.

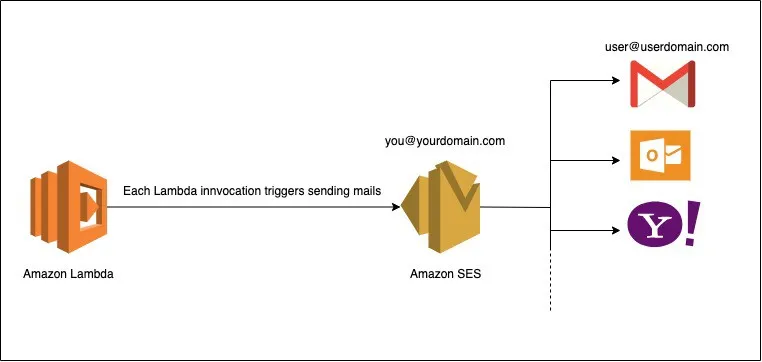

Lab 30: Send An E-mail Through AWS SES

Master Amazon Simple Email Service (SES) to send emails securely and reliably. This lab explores SES’s features, from setting up email sending to configuring templates and handling bounces and complaints for robust email communication.

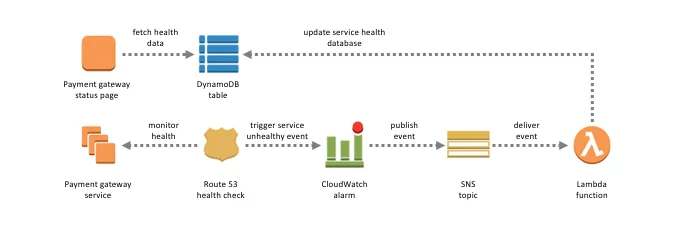

Lab 31: Event-Driven Architectures Using AWS Lambda, SES, SNS and SQS

Build dynamic and scalable event-driven architectures with AWS Lambda, Simple Email Service (SES), Simple Notification Service (SNS), and Simple Queue Service (SQS). This lab guides you through creating a seamless communication flow within your AWS environment.
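One piece of such a flow is a Lambda function triggered by an SQS queue. The sketch below shows what a handler for that trigger might look like. It assumes messages are sent directly to the queue as JSON and carry a hypothetical `order_id` field; the `Records` list and the `body` key are part of the event that Lambda receives from SQS.

```python
import json


def lambda_handler(event, context):
    """Collect the order IDs carried by a batch of SQS messages."""
    processed = []
    for record in event.get("Records", []):
        # Each SQS record carries the message payload as a string in "body".
        message = json.loads(record["body"])
        processed.append(message["order_id"])  # hypothetical field
    return {"processed": processed}


# Local dry run with a hand-built event; no AWS resources are involved.
sample_event = {
    "Records": [
        {"messageId": "1", "body": json.dumps({"order_id": "A-100"})},
        {"messageId": "2", "body": json.dumps({"order_id": "A-101"})},
    ]
}
print(lambda_handler(sample_event, None))
```

Running the handler locally with a hand-built event like this is a quick way to check the parsing logic before wiring up the queue, the topic, and the email step.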

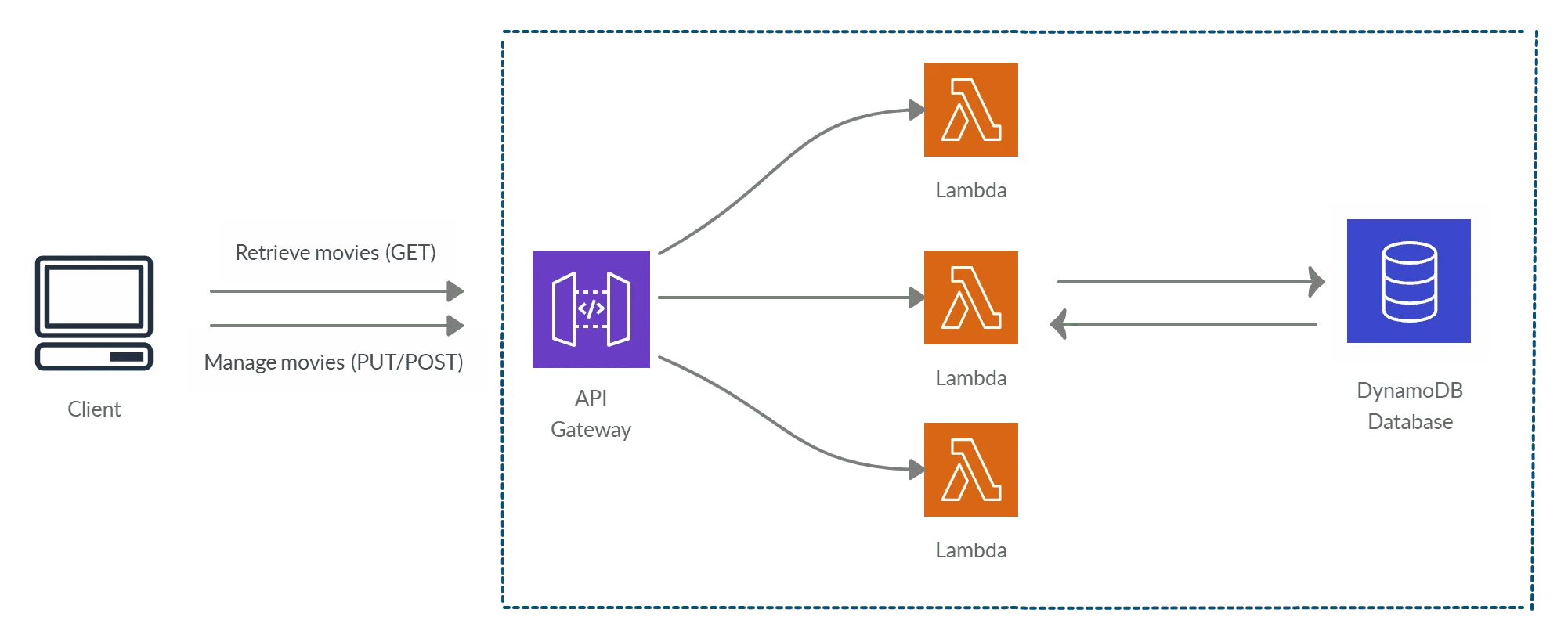

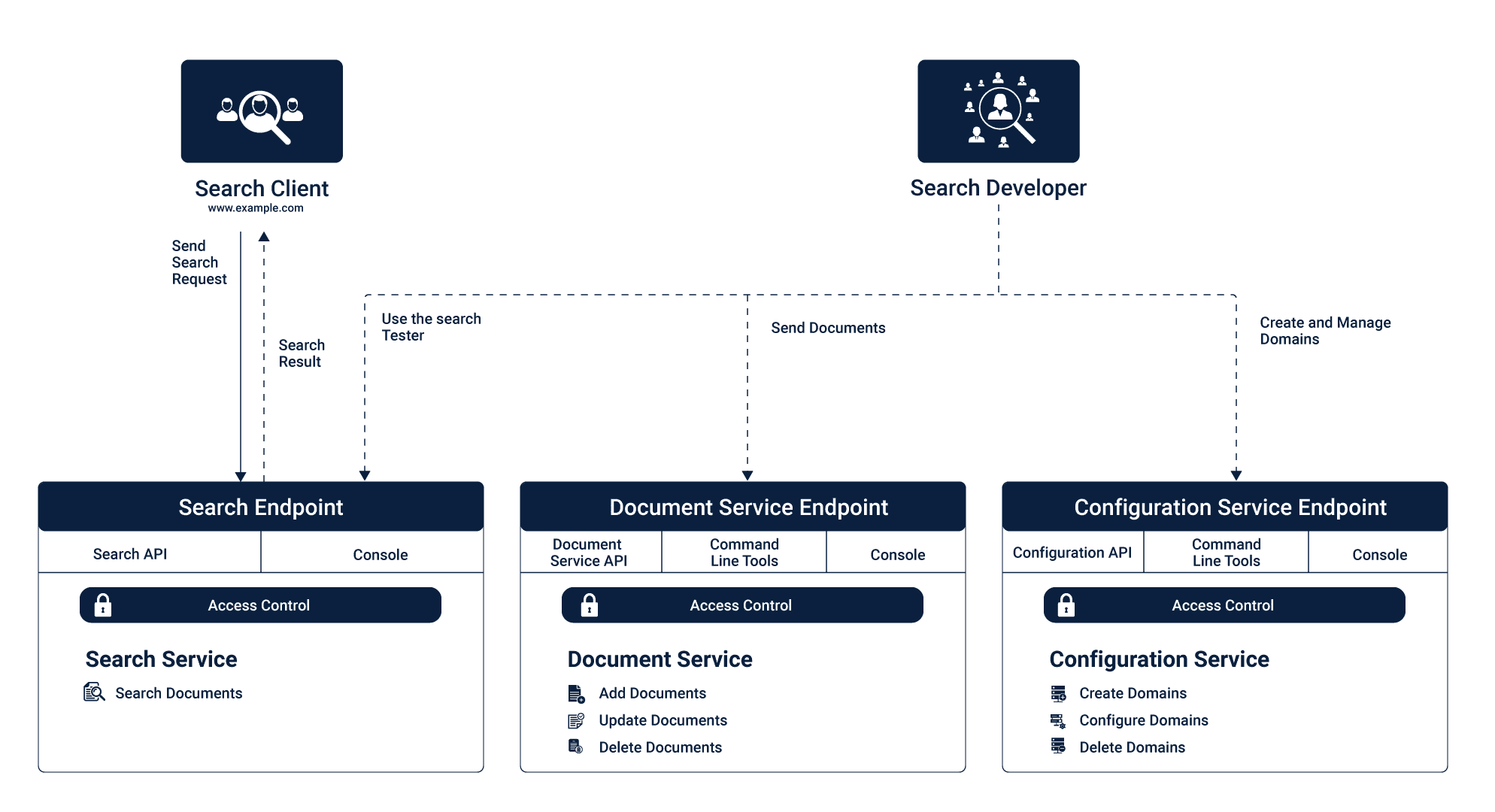

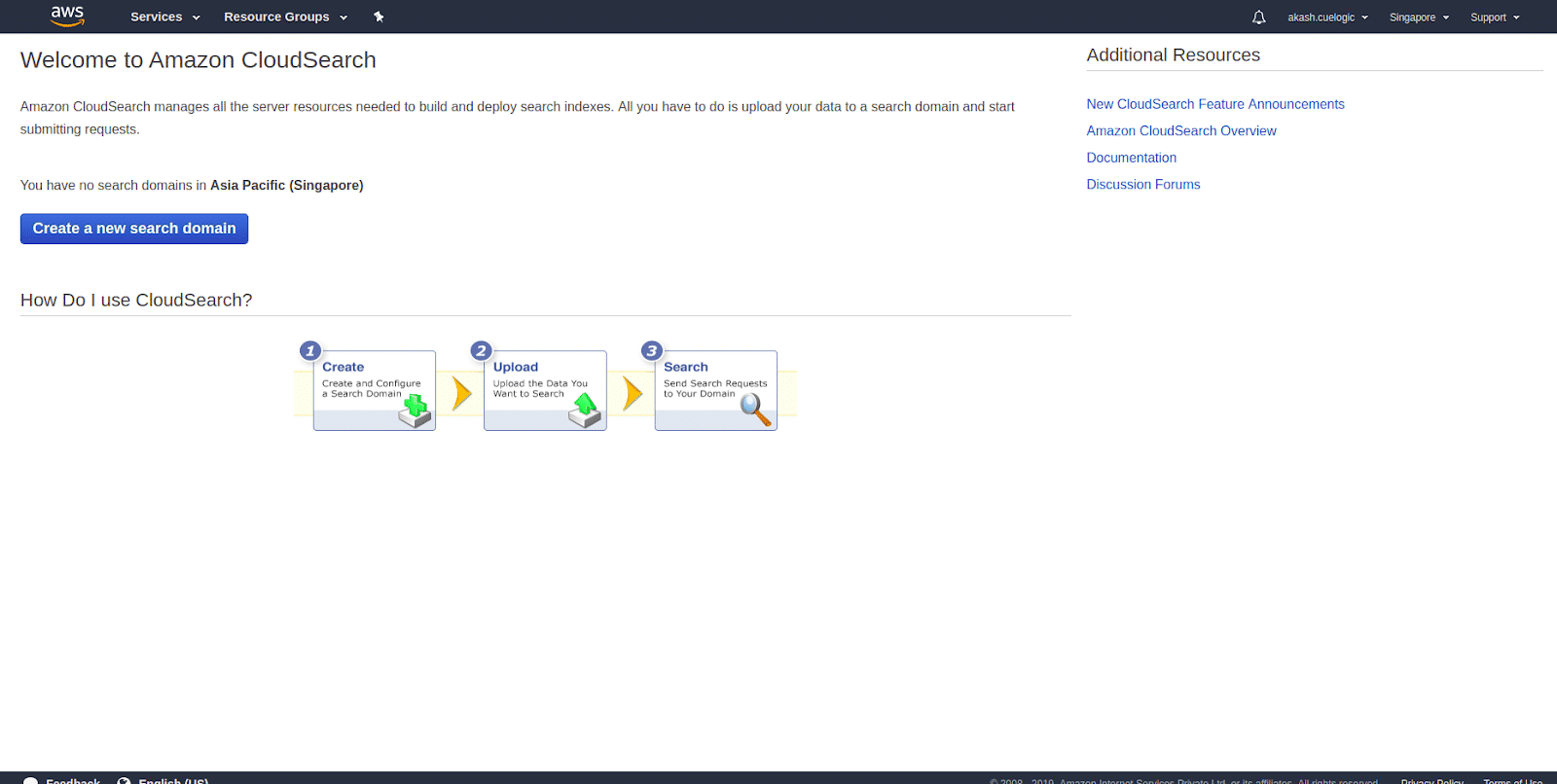

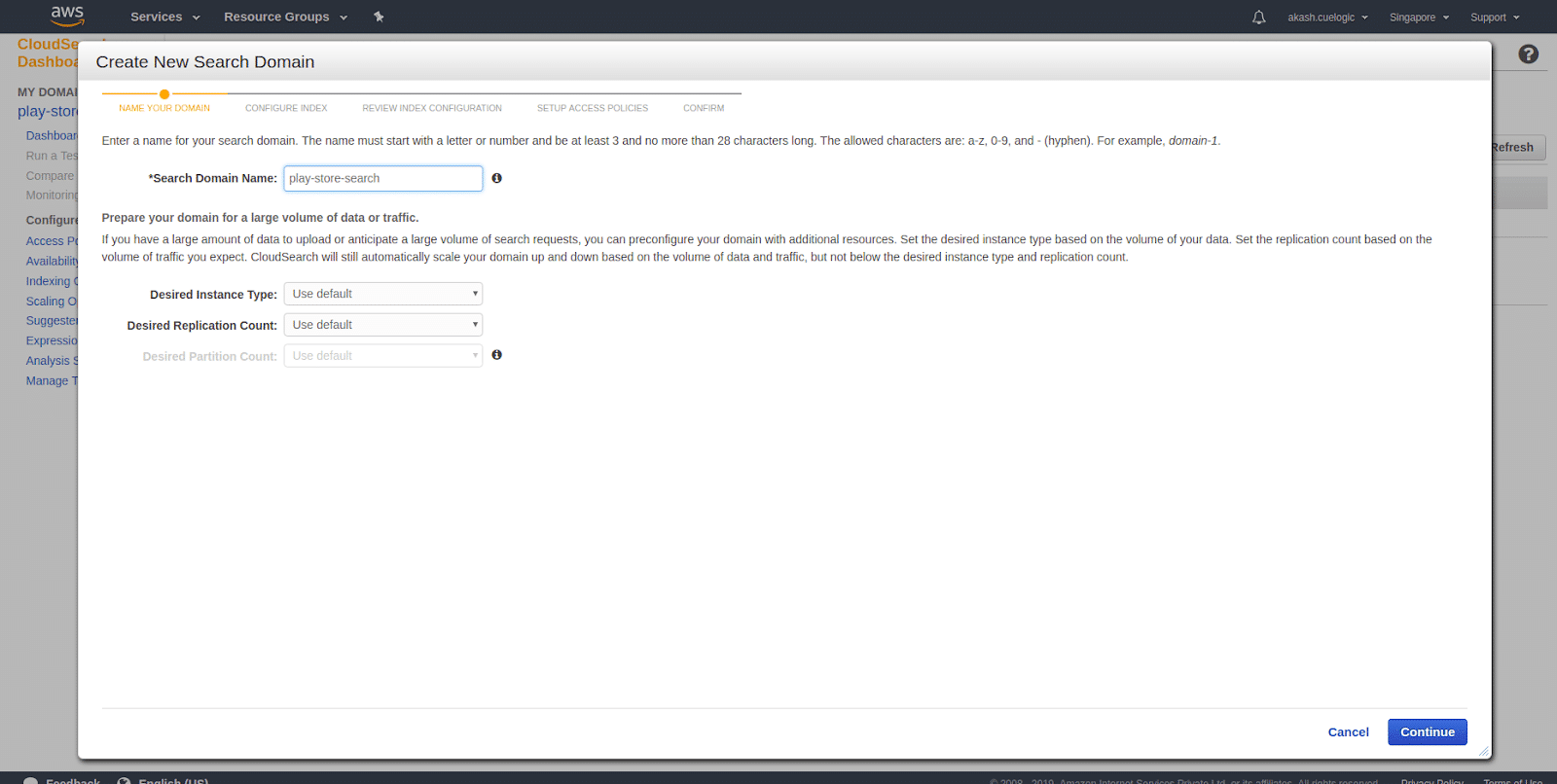

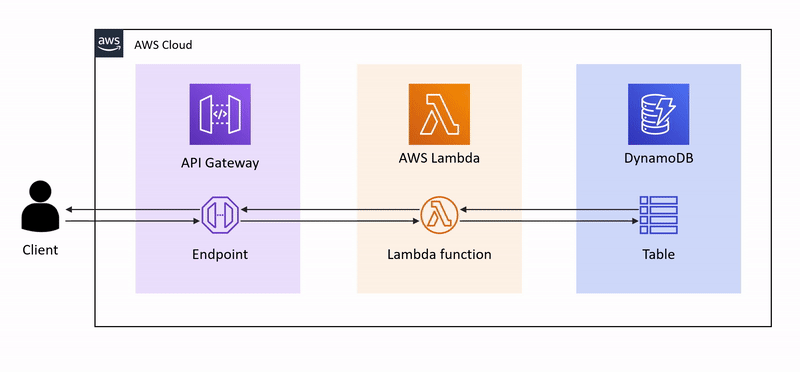

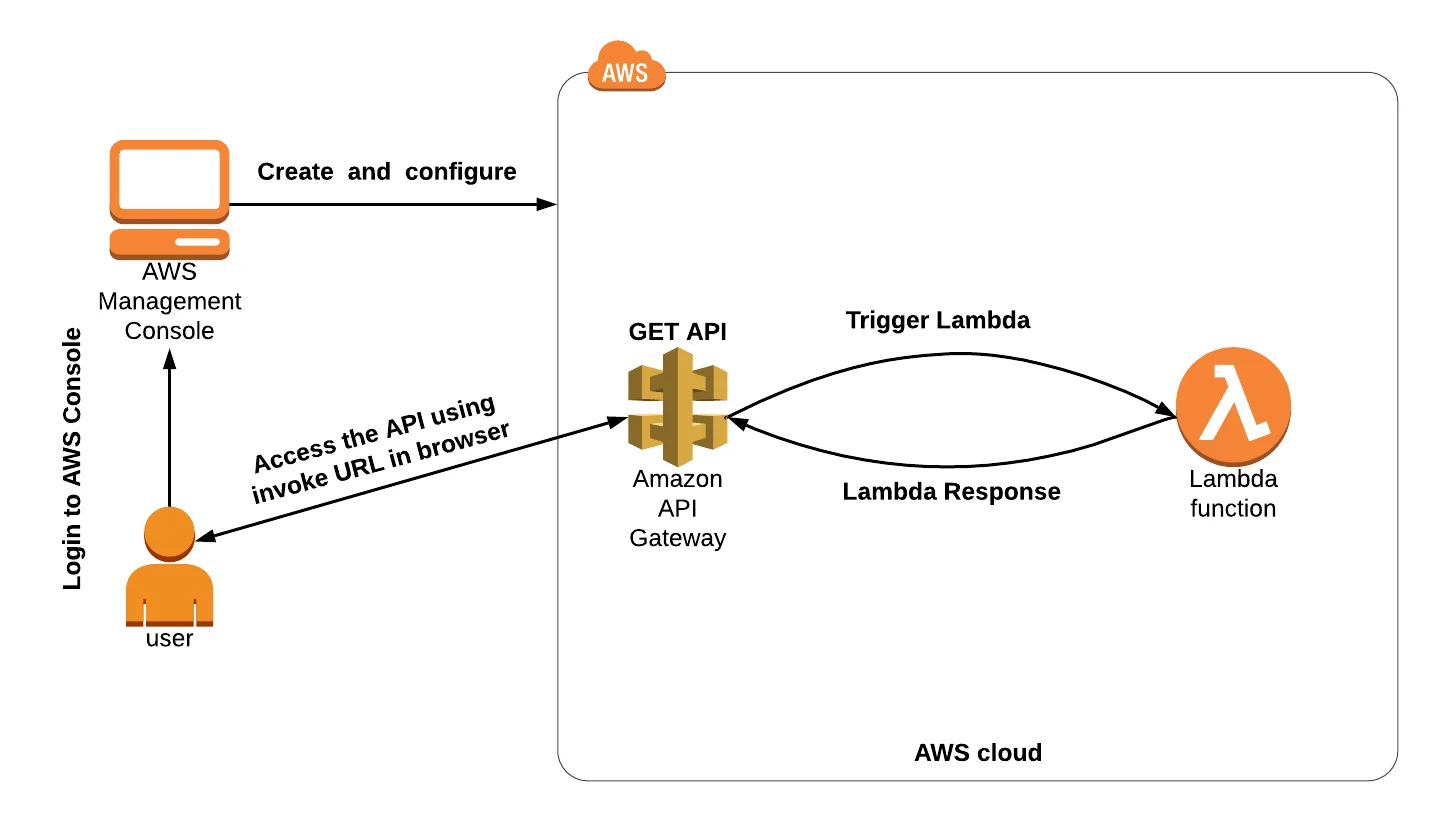

Lab 32: Build API Gateway with Lambda Integration

Create a robust API Gateway and integrate it with AWS Lambda for efficient and scalable API management. This lab equips you with the skills to build and manage APIs seamlessly.

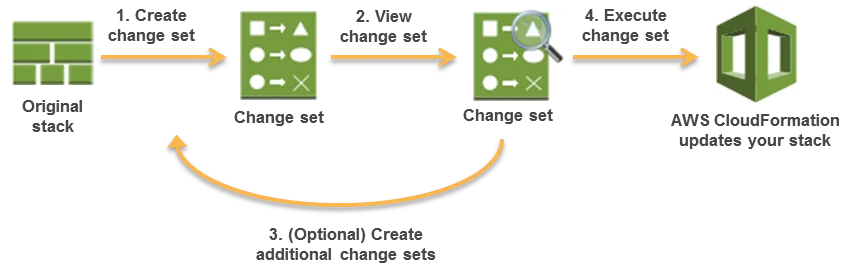

Lab 33: Create and Update Stacks using CloudFormation

Enter the realm of Infrastructure as Code (IaC) with AWS CloudFormation. Learn to create and update stacks to provision and manage AWS resources in a repeatable and automated fashion.

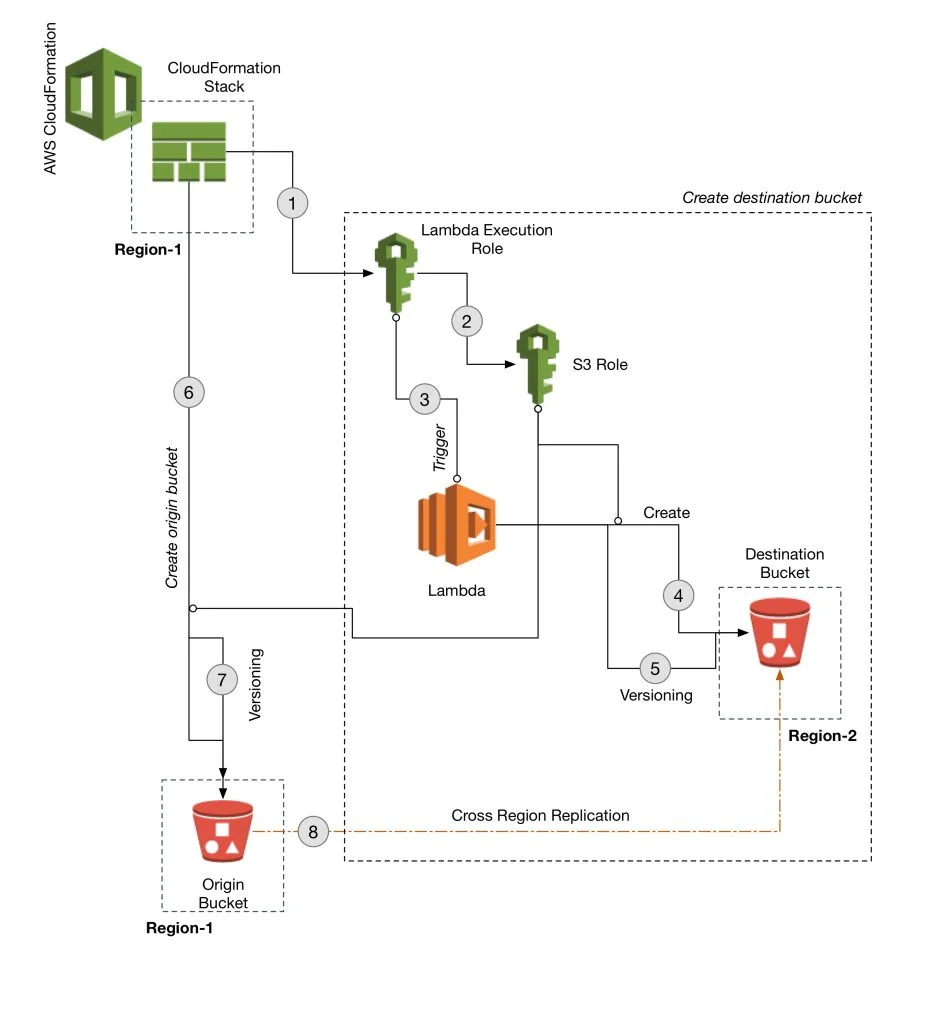

Lab 34: Create S3 Bucket Using CloudFormation

Automate S3 bucket creation with AWS CloudFormation, streamlining your infrastructure management and ensuring consistency across deployments.
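As a rough idea of what such a template contains, the sketch below generates a minimal CloudFormation template for one S3 bucket and prints it as JSON, which CloudFormation accepts alongside YAML. The helper name `s3_bucket_template`, the logical ID `LabBucket`, and the choice to enable versioning are assumptions made for illustration.

```python
import json


def s3_bucket_template(logical_id="LabBucket", versioning=True):
    """Return a minimal CloudFormation template that declares one S3 bucket."""
    resource = {"Type": "AWS::S3::Bucket"}
    if versioning:
        resource["Properties"] = {
            "VersioningConfiguration": {"Status": "Enabled"}
        }
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Single S3 bucket for a hands-on lab",
        "Resources": {logical_id: resource},
        # Ref on an S3 bucket resource resolves to the bucket name.
        "Outputs": {"BucketName": {"Value": {"Ref": logical_id}}},
    }


print(json.dumps(s3_bucket_template(), indent=2))
```

Because the template does not set a bucket name, CloudFormation would generate a unique one, which avoids name collisions when the same stack is deployed more than once.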

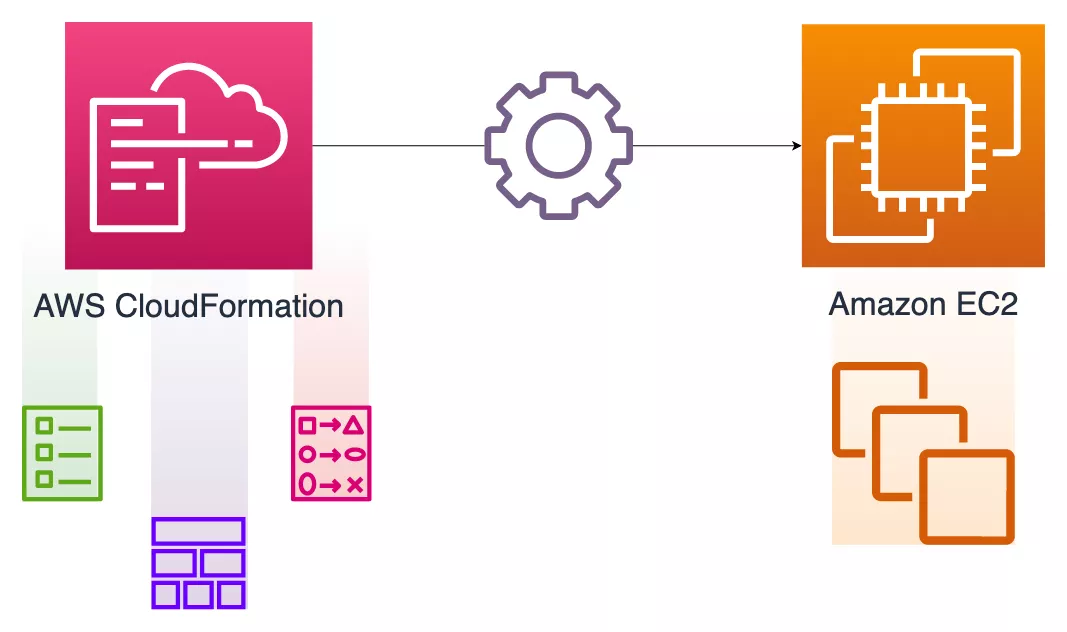

Lab 35: Create & Configure EC2 with Helper-Scripts

Leverage helper scripts to automate the creation and configuration of EC2 instances. This lab provides hands-on experience in scripting for efficient and reproducible infrastructure deployment.

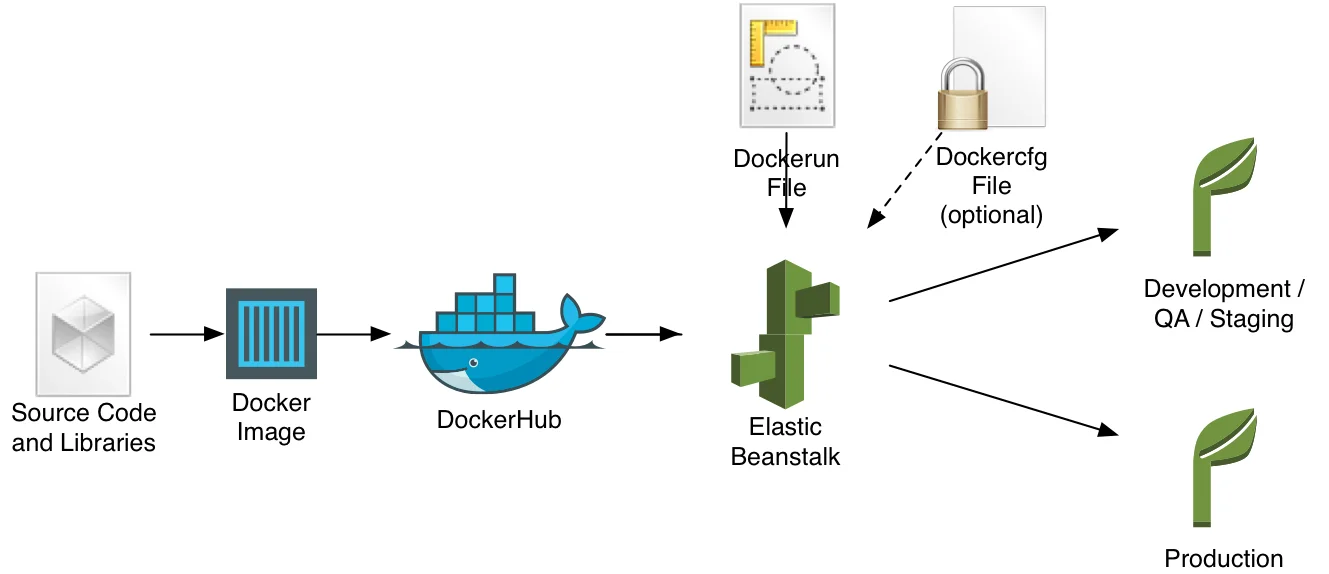

Lab 36: Deploy An Application In Beanstalk Using Docker

Explore AWS Elastic Beanstalk for simplified application deployment. This lab focuses on deploying applications using Docker containers, providing scalability and ease of management.

Lab 37: Immutable Deployment on Beanstalk Environment

Implement immutable deployments on Elastic Beanstalk environments for enhanced reliability and consistency. This lab guides you through deploying applications with minimal downtime and risk.

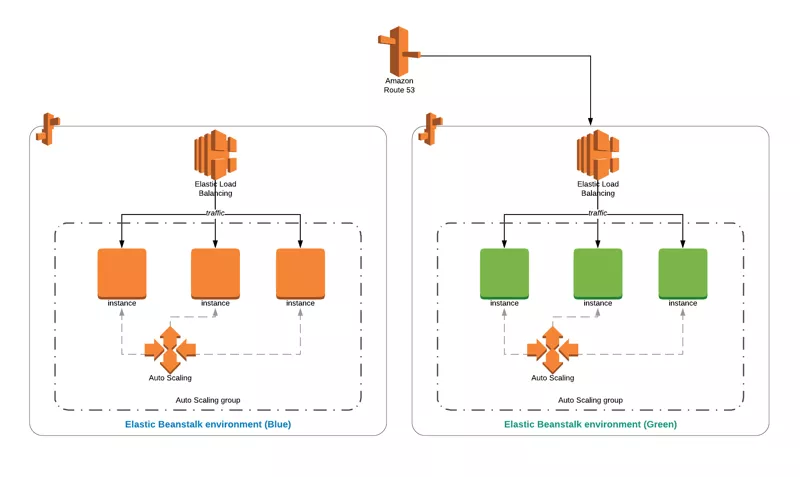

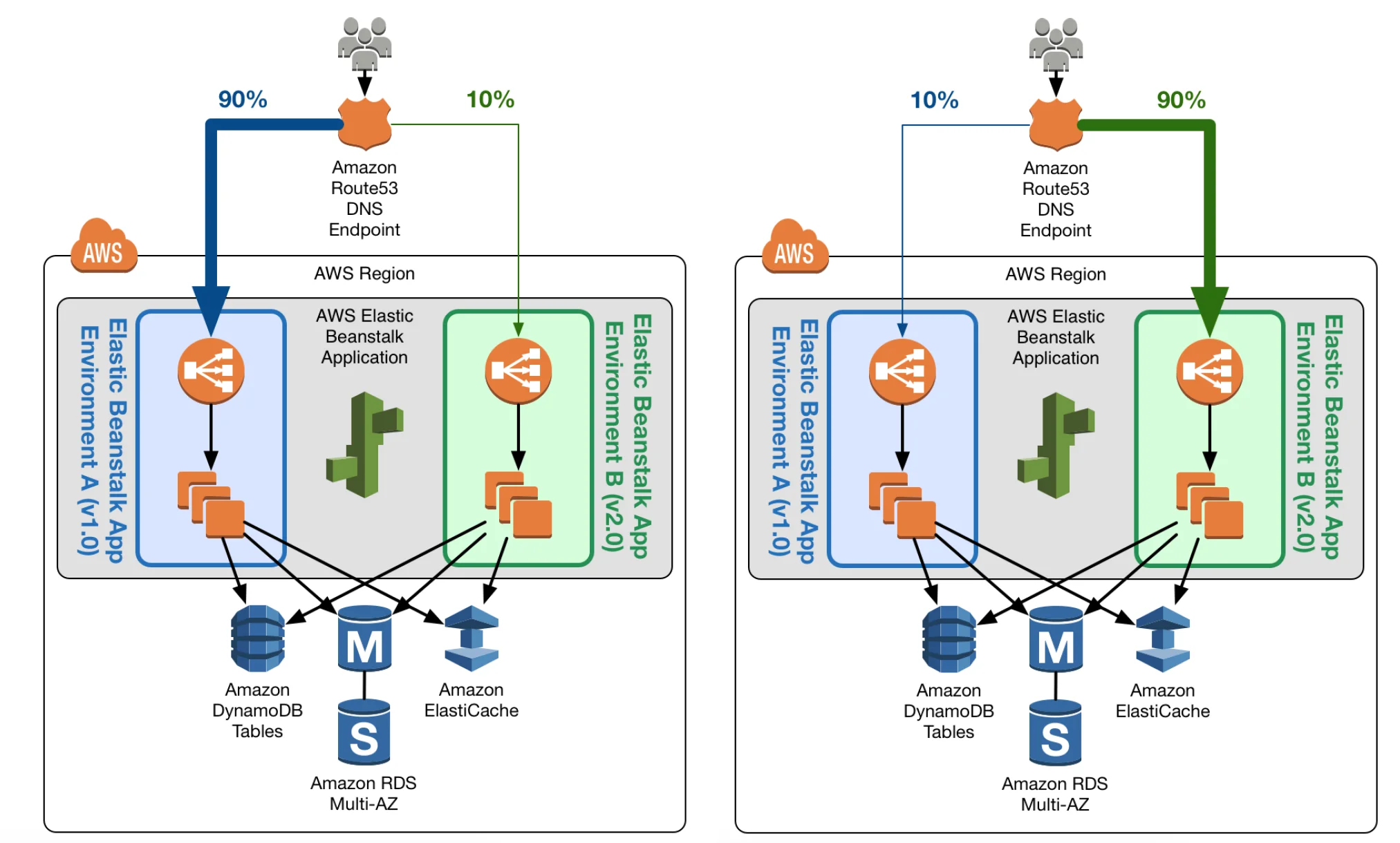

Lab 38: Blue-Green Deployments Using Elastic Beanstalk

Optimize your deployment strategies with blue-green deployments on Elastic Beanstalk. This lab explores techniques for minimizing downtime and risk during application updates.

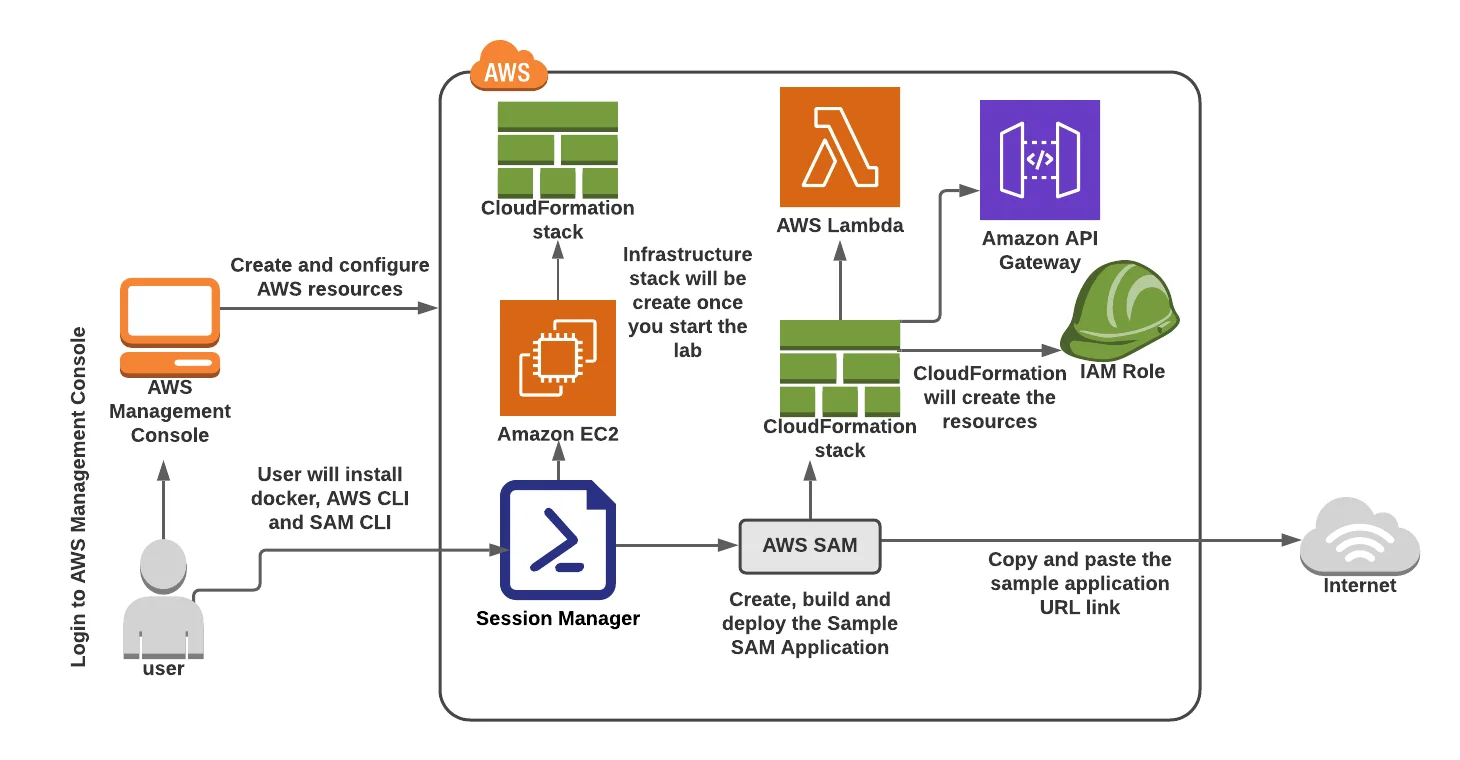

Lab 39: AWS Serverless Application Model

Enter the world of serverless architecture with the AWS Serverless Application Model (AWS SAM). This lab provides a hands-on introduction to building and deploying serverless applications efficiently.

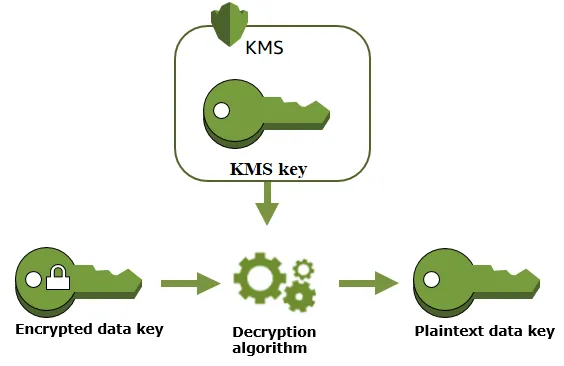

Lab 40: AWS KMS Create & Use

Explore AWS Key Management Service (KMS) for creating and using cryptographic keys to enhance data security. This lab guides you through the process of securing sensitive information with KMS.

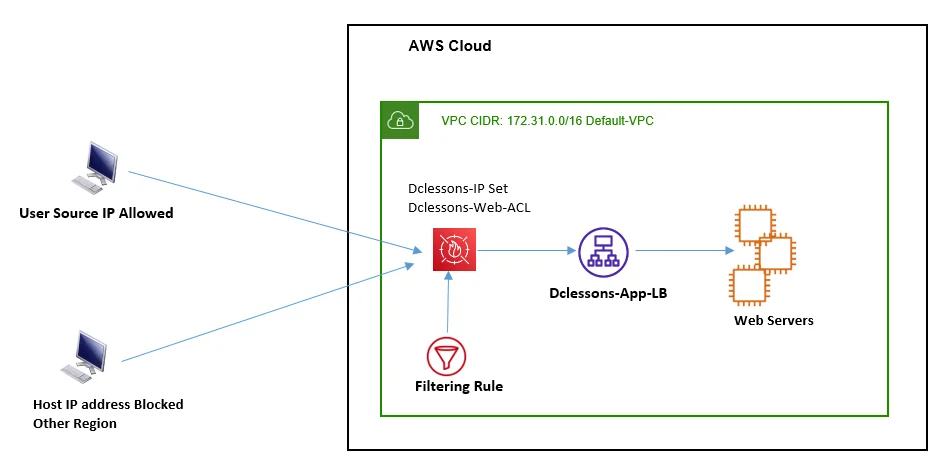

Lab 41: Block Web Traffic with WAF in AWS

Fortify your web applications with AWS Web Application Firewall (WAF). This lab demonstrates how to block malicious web traffic and protect your applications from common security threats.

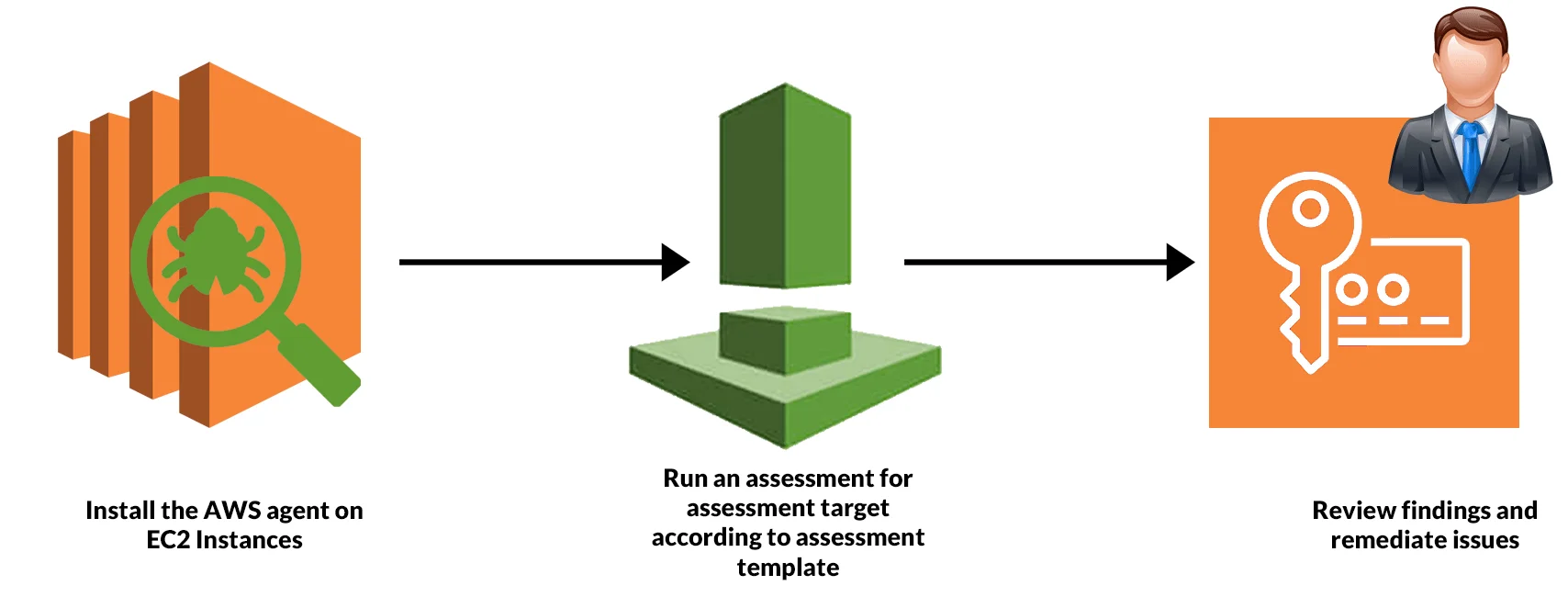

Lab 42: Amazon Inspector

Enhance your security posture with Amazon Inspector, an automated security assessment service. This lab guides you through setting up and using Inspector to identify vulnerabilities in your AWS resources.

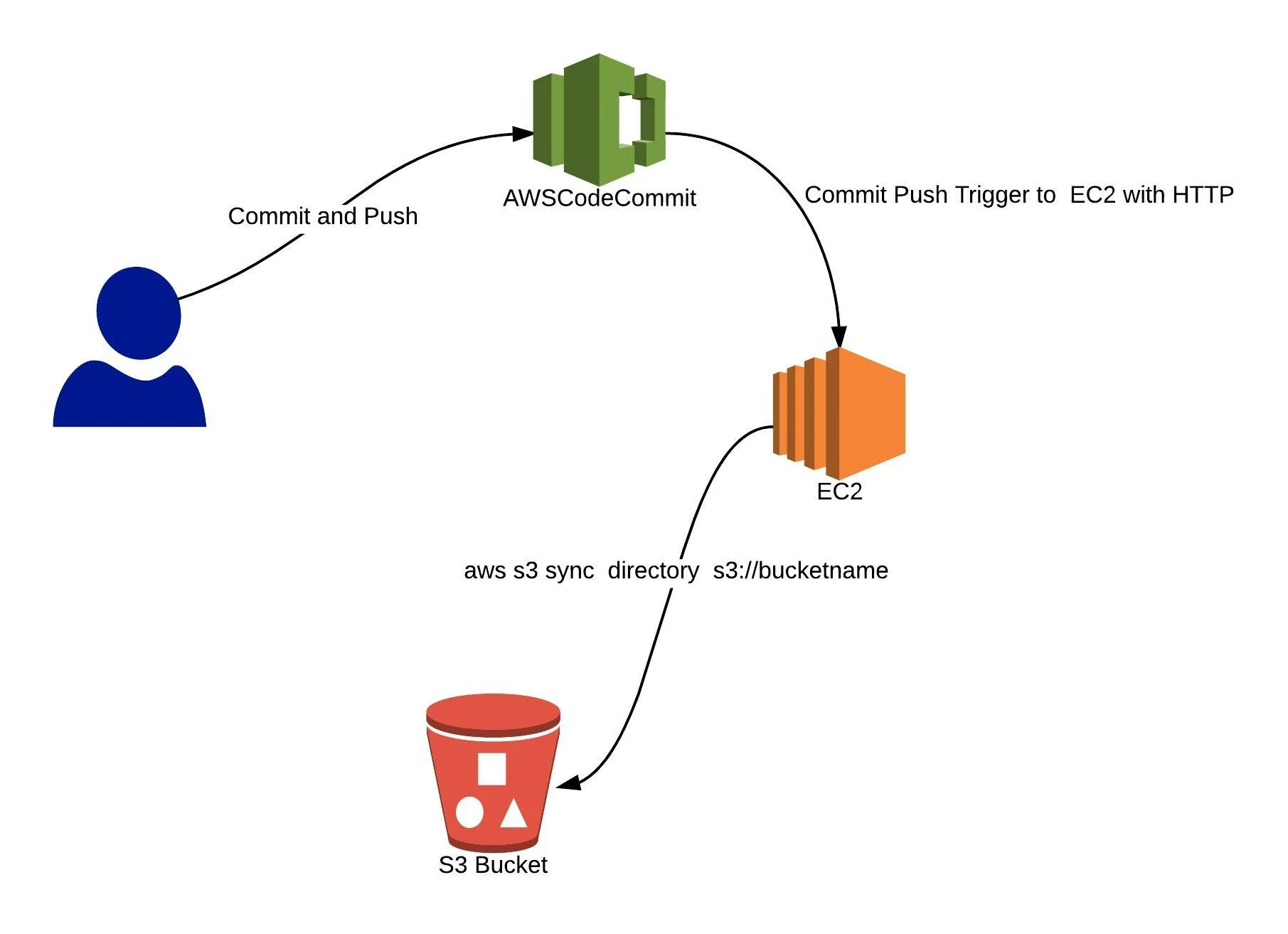

Lab 43: Working with AWS CodeCommit

Collaborate effectively on software development projects with AWS CodeCommit. This lab provides hands-on experience in version control, code collaboration, and repository management.

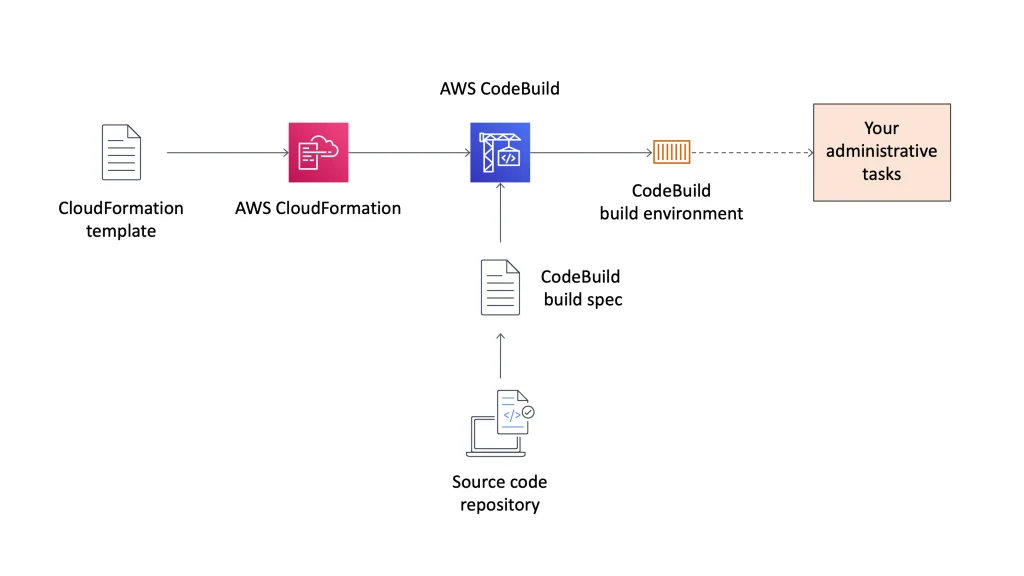

Lab 44: Build Application with AWS CodeBuild

Automate your build processes with AWS CodeBuild. This lab guides you through setting up build projects, compiling code, and generating artifacts for deployment.

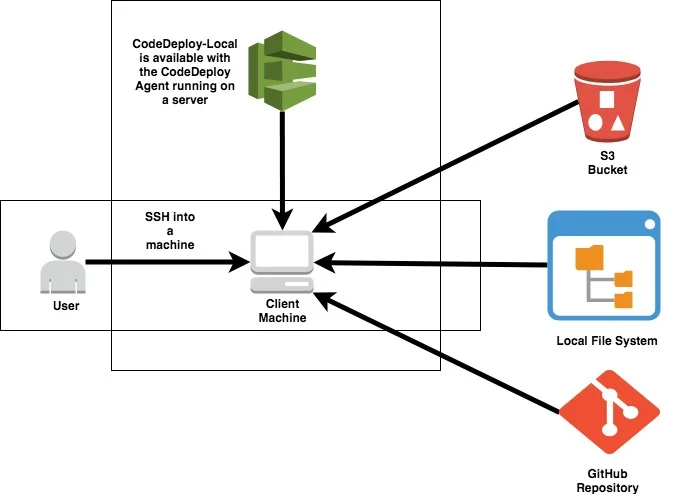

Lab 45: Deploy Sample Application using AWS CodeDeploy

Streamline application deployment with AWS CodeDeploy. This lab demonstrates how to automate the deployment process, ensuring consistent and reliable releases.

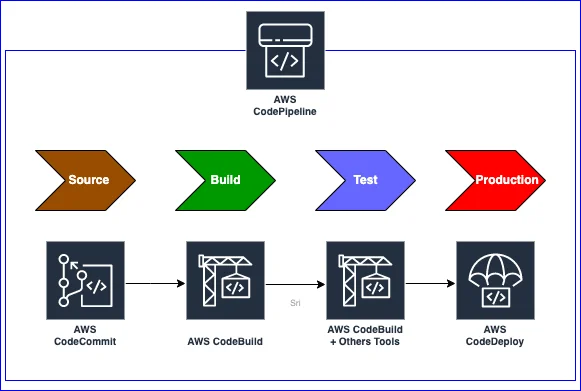

Lab 46: Create a Simple Pipeline (CodePipeline)

Build end-to-end continuous integration and continuous deployment (CI/CD) pipelines with AWS CodePipeline. This lab provides hands-on experience in orchestrating and automating your software delivery process.

Lab 47: Build Application with AWS CodeStar

Accelerate your development workflow with AWS CodeStar. This lab introduces you to the benefits of CodeStar for simplifying project setup, development, and deployment.

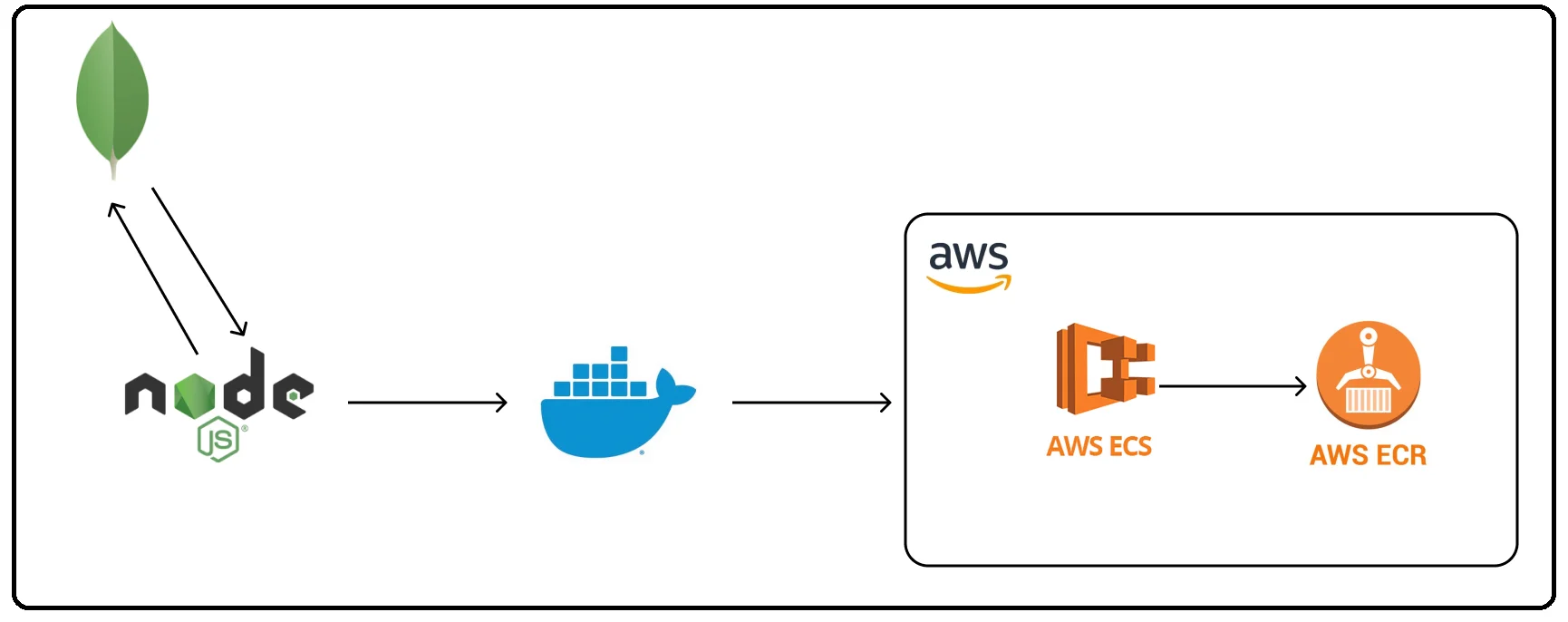

Lab 48: Create ECR, Install Docker, Create Image, and Push Image To ECR

Dive into containerization with Amazon Elastic Container Registry (ECR). This lab covers the entire container lifecycle, from creating a repository to pushing Docker images to ECR for seamless container management.

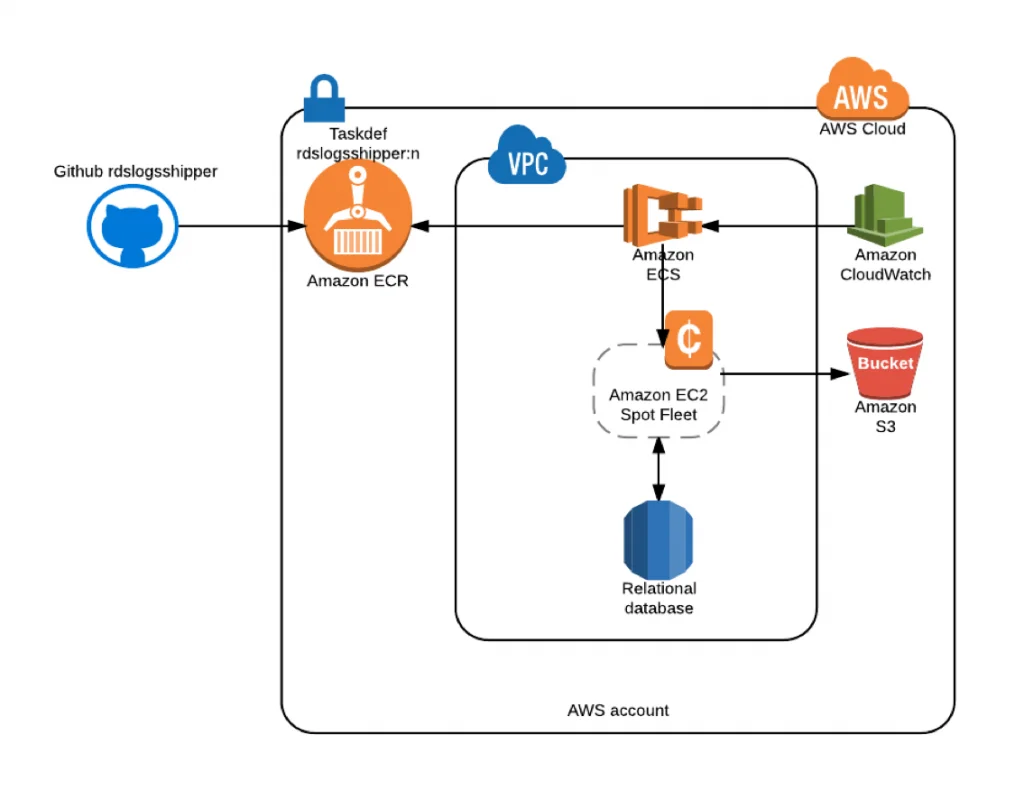

Lab 49: Create Task Definitions, Scheduling Tasks, Configuring Services and Cluster using EC2 Launch Types

Explore Amazon Elastic Container Service (ECS) by creating task definitions, scheduling tasks, and configuring services and clusters. This lab provides hands-on experience in managing containerized applications using the EC2 launch type.

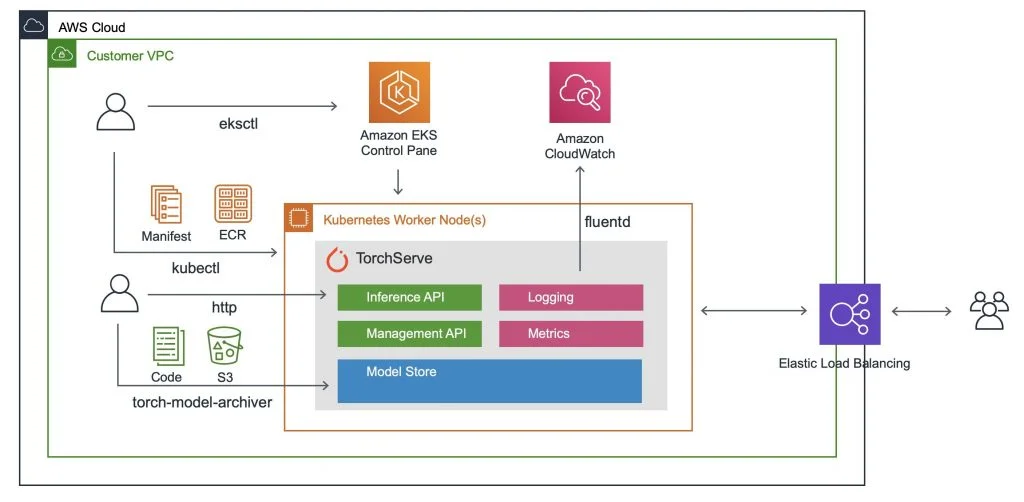

Lab 50: Create Elastic Kubernetes Service (EKS) Cluster on AWS

Delve into Kubernetes on AWS by creating an Elastic Kubernetes Service (EKS) cluster. This lab guides you through provisioning and managing Kubernetes clusters for container orchestration.

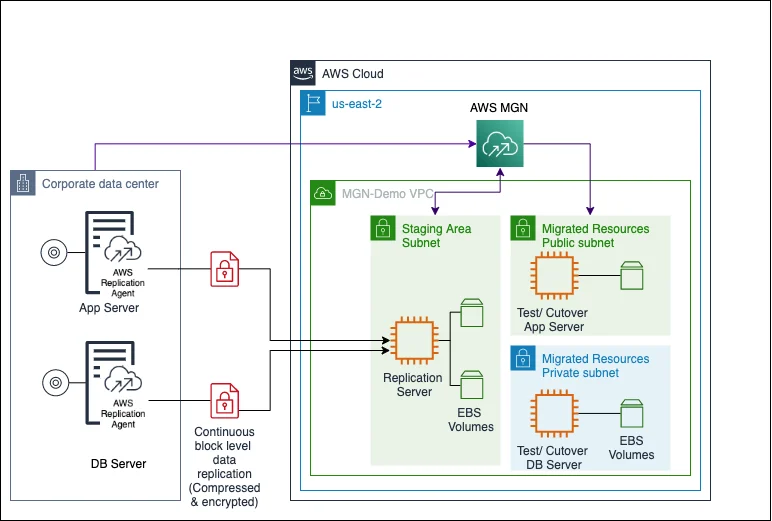

Lab 51: Application Migration to AWS

Learn the intricacies of migrating applications to AWS. This lab covers assessment, planning, and execution strategies to ensure a seamless transition to the cloud.

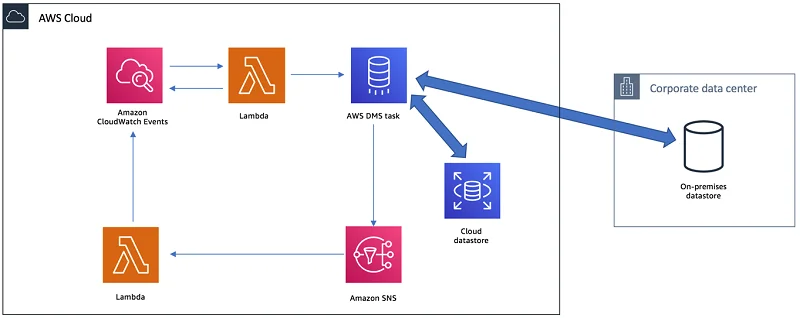

Lab 52: Database Migration To AWS

Explore strategies and tools for migrating databases to AWS. This lab provides practical guidance on minimizing downtime and ensuring data consistency during database migration.

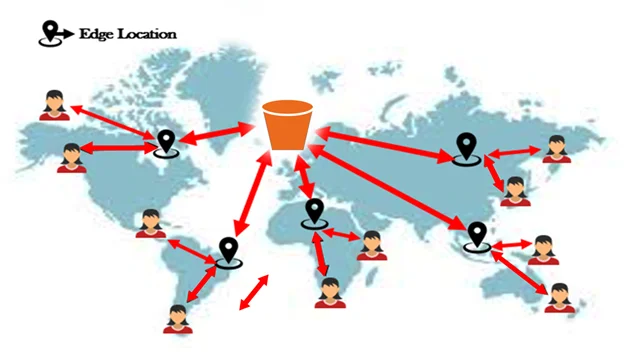

Lab 53: AWS Data Transfer Acceleration

Optimize your data transfer speed with AWS Data Transfer Acceleration. This lab introduces you to acceleration techniques for efficient data movement within the AWS infrastructure.

Lab 54: Migrating a Monolithic Application to AWS with Docker

Experience the process of migrating a monolithic application to AWS using Docker containers. This lab covers containerization strategies and best practices for achieving scalability and flexibility in a cloud environment.

Real-time Projects

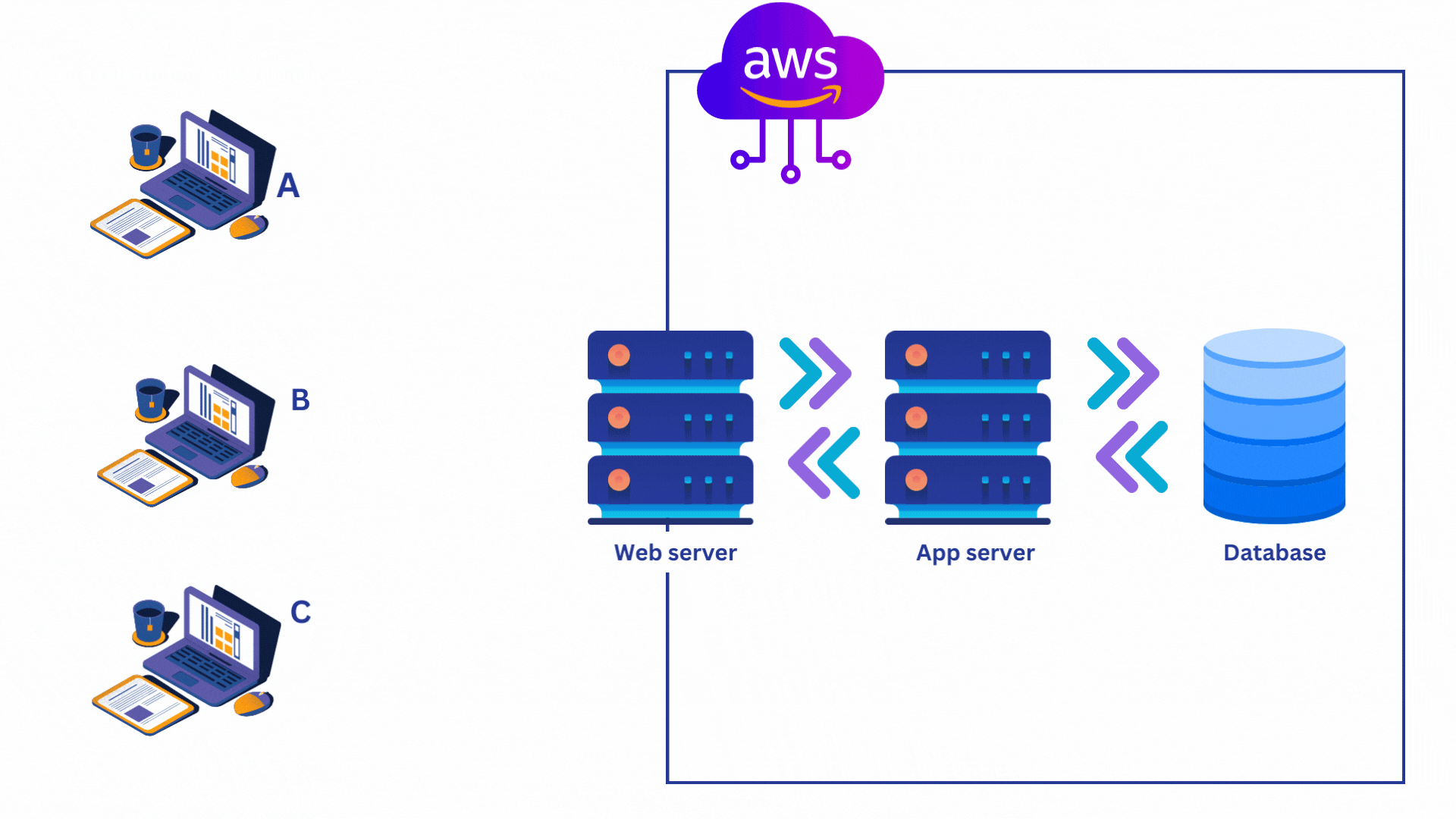

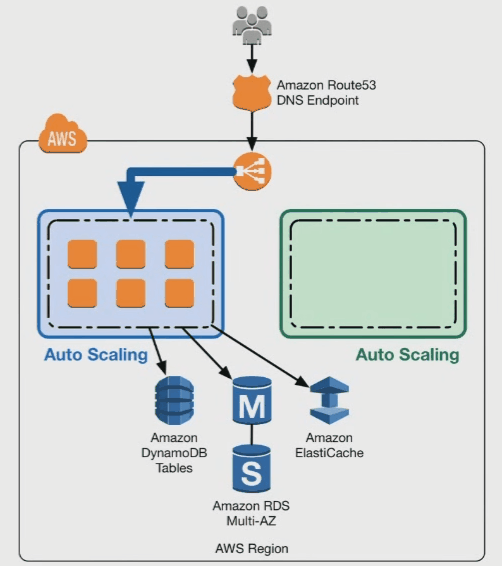

Project 1: 3-Tier Architecture Deployment (Web, App, Database)

Create a well-architected 3-tier system. The web layer serves as the user interface, the app layer processes business logic, and the database layer stores and manages data. This project ensures a scalable and modular infrastructure, fostering efficient resource utilization.

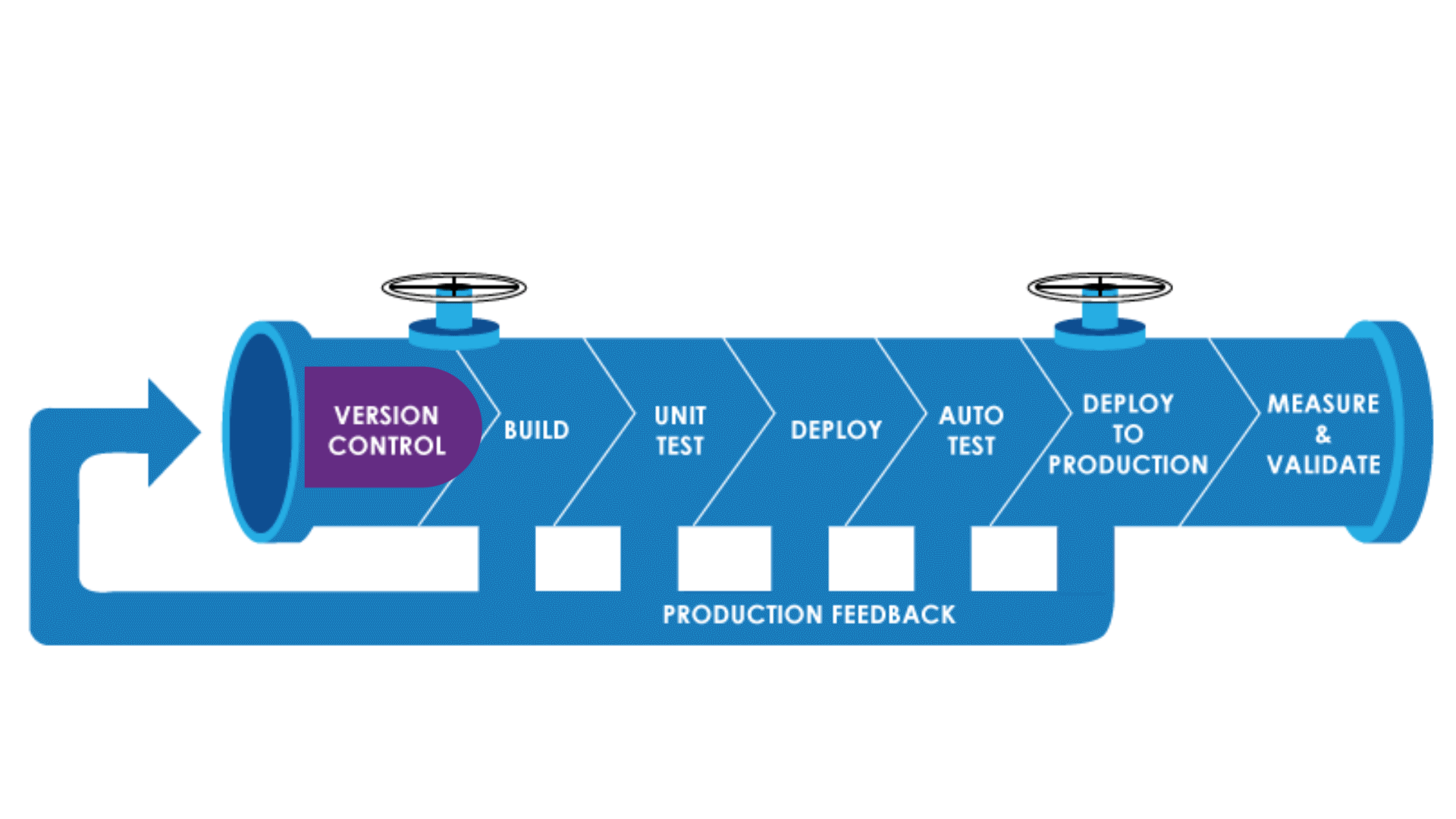

Project 2: DevOps: End-to-End CI/CD Pipeline

Establish an end-to-end CI/CD pipeline for your development workflow. This includes automating code builds, running tests, and deploying applications seamlessly. The goal is to enhance collaboration among developers, reduce manual errors, and accelerate the release of high-quality software.

Project 3: Blue/Green Deployment with ECS (Serverless)

Implement a blue/green deployment strategy using Amazon Elastic Container Service (ECS), a container orchestration service that can run containers serverlessly on AWS Fargate. This project enables you to deploy new versions of your application without downtime, allowing for instant rollback in case of issues.

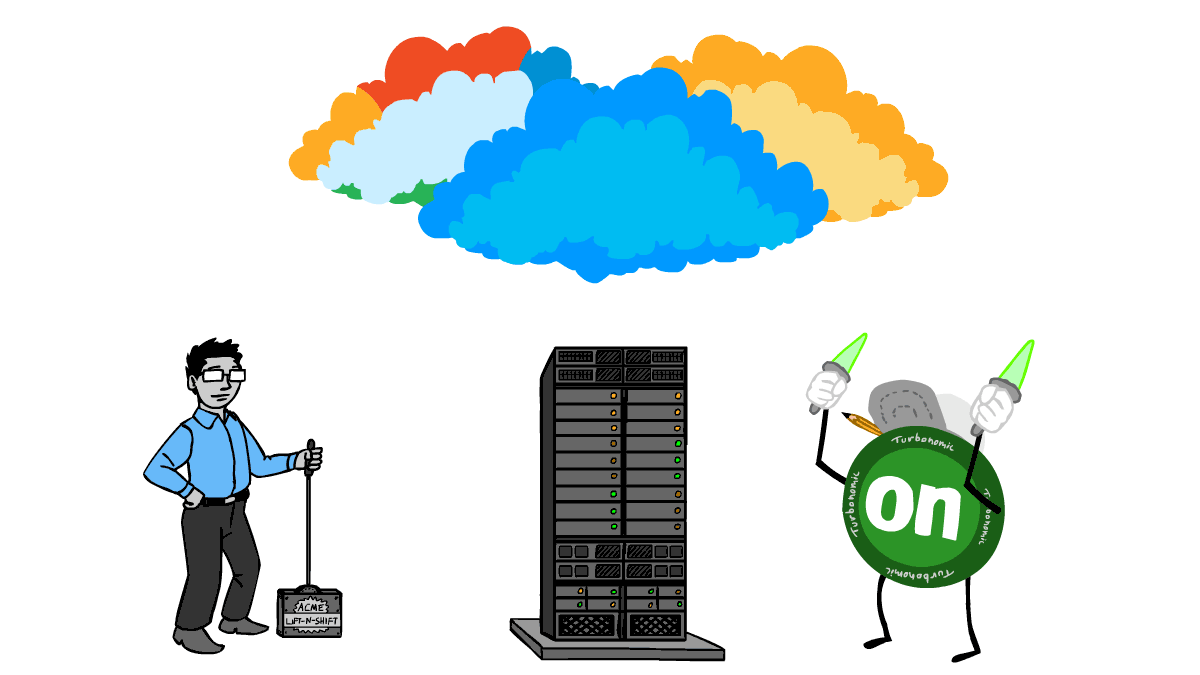

Project 4: Migration: On-Premise to Cloud (App, Database, Data)

Execute a comprehensive migration from on-premise infrastructure to the AWS cloud. This involves moving applications, databases, and data to the cloud, optimizing for scalability, cost efficiency, and improved reliability.

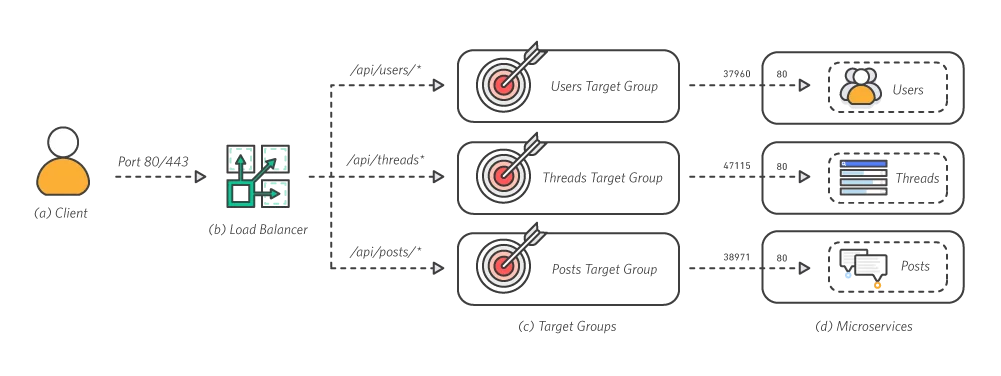

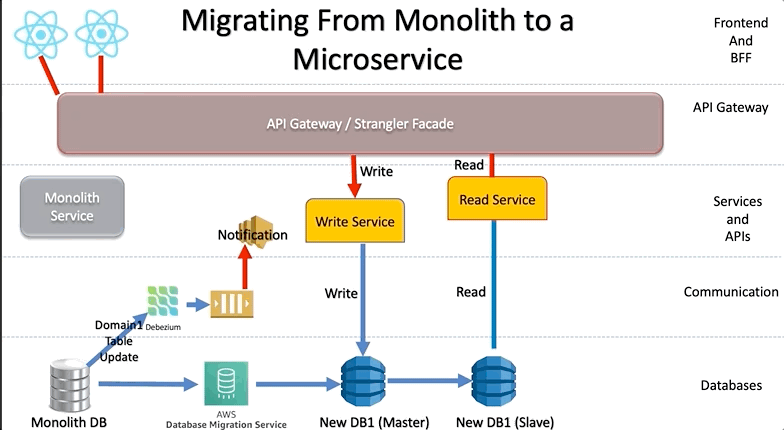

Project 5: Migration: Monolithic App to Microservices

Transform a monolithic application into a microservices architecture on AWS. Break down the application into smaller, independent services, each with its own functionality. This project aims to enhance agility, scalability, and ease of maintenance.

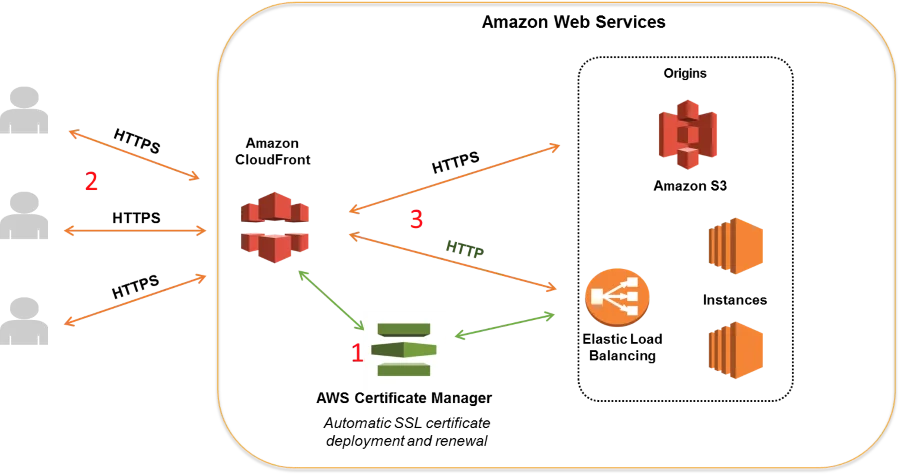

Project 6: Security: SSL/TLS/Keys Certificate Management System

Implement a robust security system, including SSL/TLS protocols for encrypted communication and a key management system for secure key storage and access. This project ensures the confidentiality and integrity of data in transit.

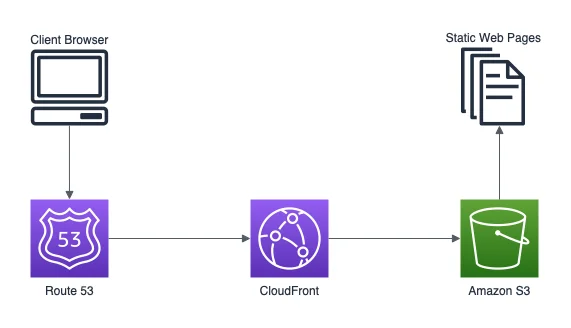

Project 7: Host Static Website on AWS using S3 & Route53

Host a static website using Amazon S3 for storage and Amazon Route 53 for domain management. This project includes setting up S3 buckets, configuring static website hosting, and managing domain routing, providing a reliable and scalable solution for static content.

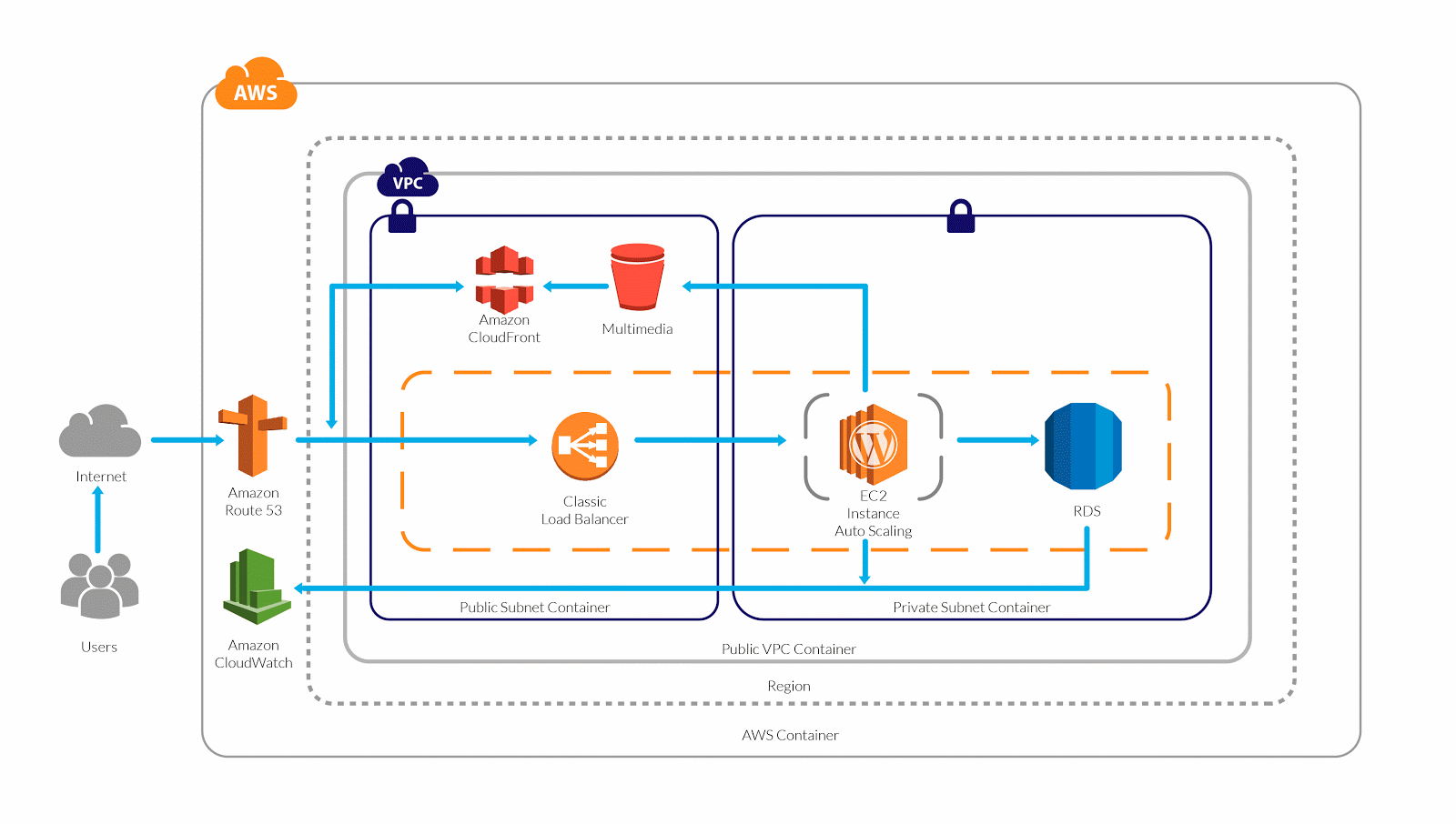

Project 8: Host Dynamic Website on AWS: Apache, MariaDB, PHP

Deploy a dynamic website using a stack comprising an Apache web server, MariaDB database, and PHP scripting. This project involves configuring each component, ensuring seamless communication, and establishing a scalable environment for dynamic web applications.

Project 9: Deploy API Gateway, Application & Database

Build and deploy a comprehensive solution that includes an API Gateway, application layer, and database. This project focuses on designing RESTful APIs, connecting them to backend services, and managing data storage effectively.

Project 10: Deploy React App using Amplify, Lambda & AppConfig

Develop and deploy a React.js application using AWS Amplify for hosting, AWS Lambda for serverless functions, and AppConfig for configuration management. This project showcases modern front-end development practices, serverless architecture, and efficient application configuration in the AWS cloud.

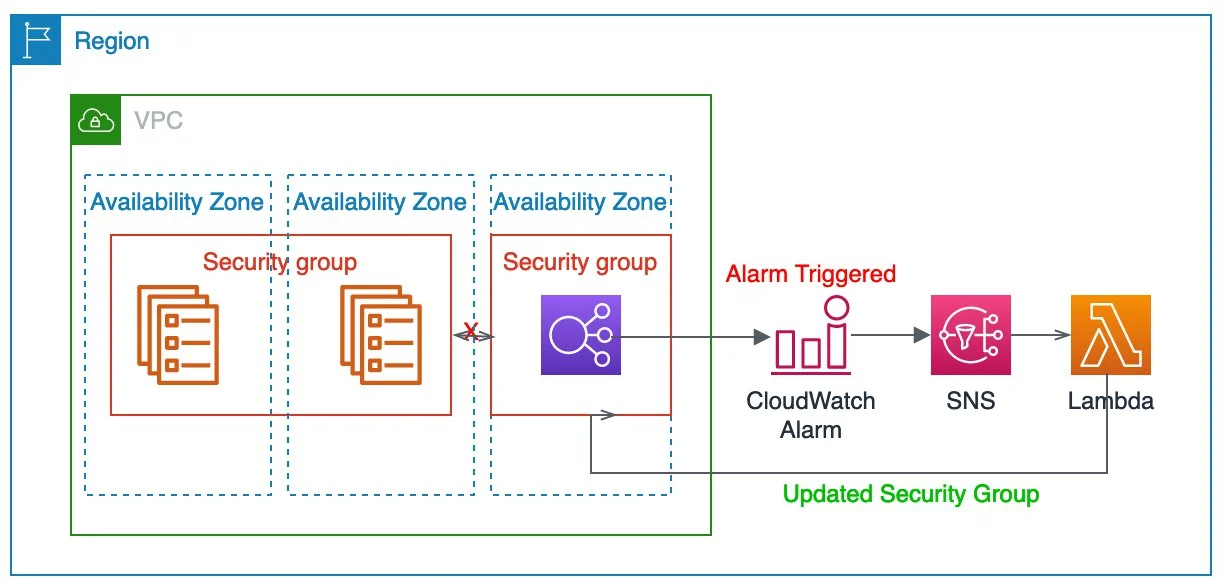
