Azure Container Instances Tutorial

Let’s get started! I’m going to show you how to run an application in a container locally and then publish that to Azure Container Instances. I’ll do this using Docker containers. To get started on Windows 10, you need the following:

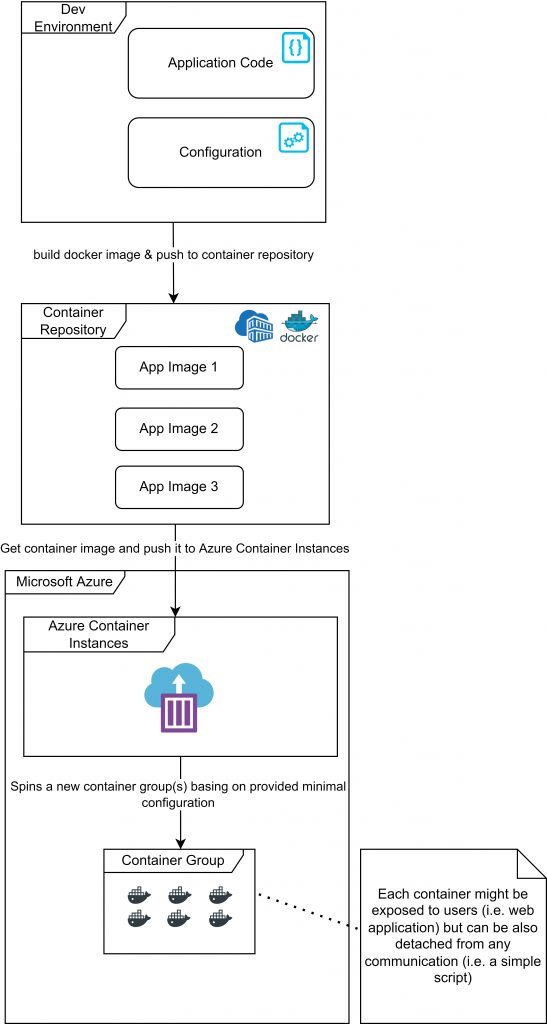

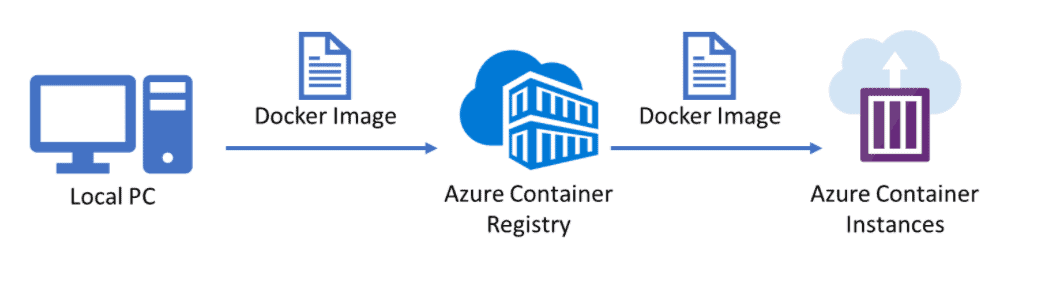

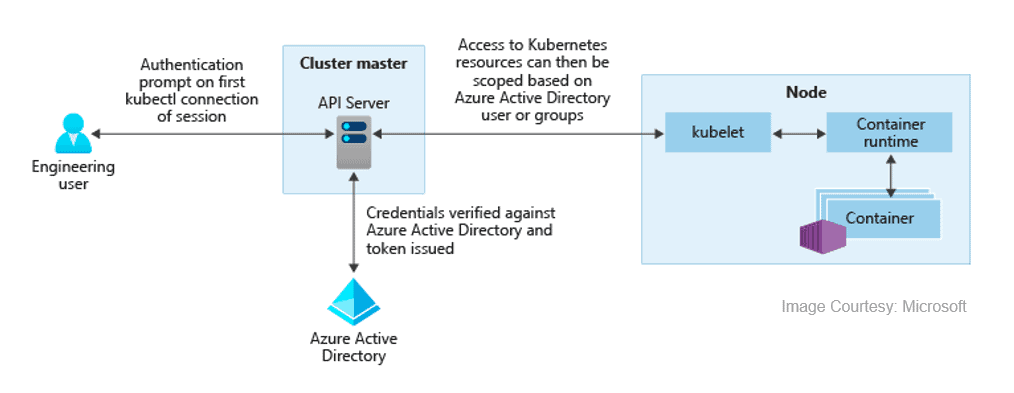

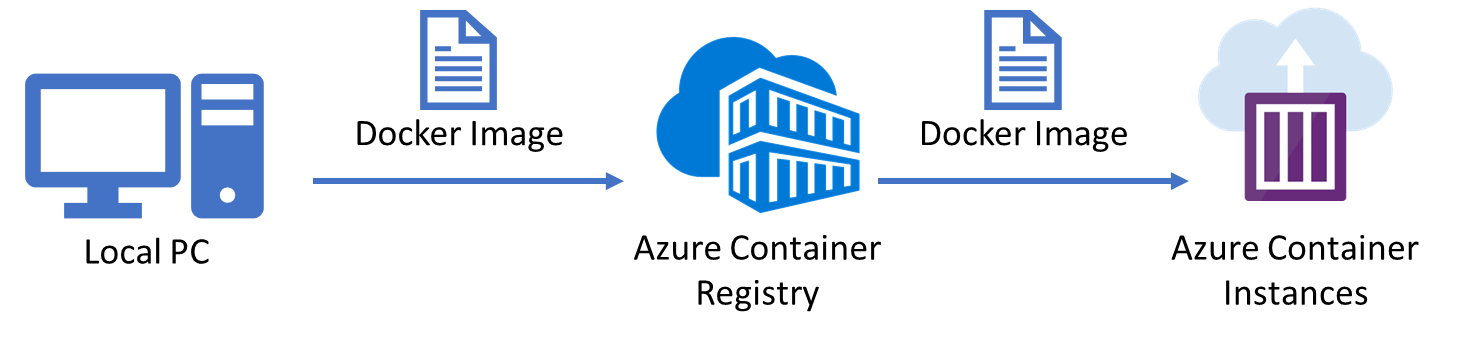

To get a local container into Azure Container Instances, I first need to push its image to an intermediary registry that Container Instances can access. This can be Docker Hub or something else, like the Azure Container Registry. A registry is a place that houses container images. That makes our workflow look like this.

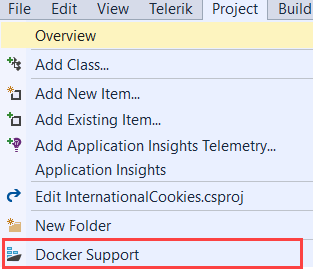

Step 1: Adding Docker support to an ASP.NET Core application

I already have an ASP.NET Core application and want to run it in a Docker container. Visual Studio makes this easy for us. Once you have your ASP.NET Core project open, you can add Docker support by selecting it from the Project menu in Visual Studio. Alternatively, there is a checkbox to enable Docker support when you create a new ASP.NET Core project.

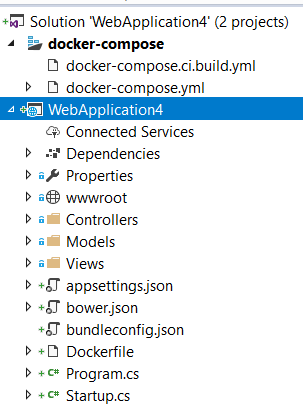

Once you do, the resulting solution looks like this:

There’s now a new project, called docker-compose. It contains the Docker Compose files that build the image for your web application. There is also a Dockerfile in the web application project. This is the file that is actually used to build the Docker image. When you open it, you see that it is based on the microsoft/aspnetcore:2.0 image and adds the application to it. You’ll also see that the container exposes port 80 for the application.

    FROM microsoft/aspnetcore:2.0
    ARG source
    WORKDIR /app
    EXPOSE 80
    COPY ${source:-obj/Docker/publish} .
    ENTRYPOINT ["dotnet", "WebApplication4.dll"]

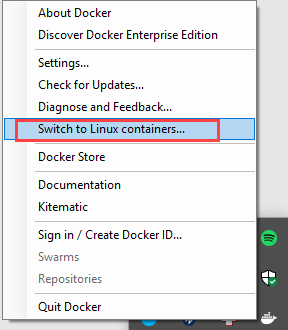

You need to do one more thing before you can build and run the application locally: change the local Docker server to run Windows-based containers. Find the Docker icon in your system tray (near the clock), right-click it and click Switch to Windows containers. I already did this, which is why the menu says Switch to Linux containers in the image below. Unfortunately, this will result in a restart of your system.

That’s it! Now build and run your solution and you’ll see that the web application will be built, the docker image will be composed, and the application will run locally in a Docker container.
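For readers who want to see roughly what happens behind the build, here is a minimal command-line sketch. It is not the exact sequence Visual Studio runs. It assumes a Bash-compatible shell (Git Bash or WSL, for example), that it is called from the web project folder containing the Dockerfile, and that the .NET Core SDK and Docker are on the path. The function name `build_and_run_local`, the container name `webapp4-local`, and host port 8080 are placeholders chosen for illustration.

```shell
#!/usr/bin/env bash
# Sketch: publish the app, build the image from the Dockerfile, run it locally.
# Assumption: called from the web project folder that contains the Dockerfile.

build_and_run_local() {
  local image="${1:-webapplication4}"   # image name used in this tutorial
  local host_port="${2:-8080}"          # hypothetical host port

  # The Dockerfile copies from obj/Docker/publish unless the "source"
  # build argument is set, so publish the Release build there first.
  dotnet publish -c Release -o obj/Docker/publish || return 1

  # Build the image from the Dockerfile in the current folder.
  docker build -t "$image" . || return 1

  # Run detached and map the host port to port 80, which the Dockerfile exposes.
  docker run -d -p "${host_port}:80" --name webapp4-local "$image"
}

# Usage (not executed here):
#   build_and_run_local webapplication4 8080
```

The publish step matters because the Dockerfile only copies already-built output into the image; it does not compile the application itself.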

Step 2: Push the local image to Azure Container Registry

We now have the application running in a container on our local machine. Next, we need to put the image somewhere the Azure Container Instances service can reach it. We’ll put it in Azure Container Registry, which is a private container registry hosted in Azure. It is nothing more than a storage space for container images.

First, we’ll create an Azure Container Registry:

- In the Azure Portal, click the Create a resource button (green plus in the upper-left corner)

- Next, search for azure container registry

- Pick Azure Container Registry from the search results and click Create

The Create container registry wizard appears:

- Fill in a name

- Create a new resource group or pick an existing one

- Pick a location

- Select Enable for the Admin user setting (this enables us to easily authenticate to the registry later on)

- Leave the SKU set to Standard

- Click Create

And now the registry will be created. Once it is done, we can move on to the next steps.
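The same registry can also be created from the command line. The following is a sketch that uses the Azure CLI instead of the portal wizard, assuming the CLI is installed, `az login` has been run, and a Bash-compatible shell is available. The function name `create_registry`, the resource group name `demo-rg`, and the location `westeurope` are placeholders, not values from this walkthrough.

```shell
#!/usr/bin/env bash
# Sketch: create a resource group and a container registry with the Azure CLI.
# Assumption: "az login" has already been run in this shell.

create_registry() {
  local group="$1"      # resource group name (hypothetical: demo-rg)
  local name="$2"       # registry name, e.g. barriescontainerregistry
  local location="$3"   # Azure region (hypothetical: westeurope)

  # Create the resource group (or reuse it if it already exists).
  az group create --name "$group" --location "$location" || return 1

  # Standard SKU with the admin user enabled, matching the wizard settings above.
  az acr create --resource-group "$group" --name "$name" \
    --sku Standard --admin-enabled true || return 1

  # Show the admin username and passwords (the Access Keys tab in the portal).
  az acr credential show --name "$name"
}

# Usage (not executed here):
#   create_registry demo-rg barriescontainerregistry westeurope
```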

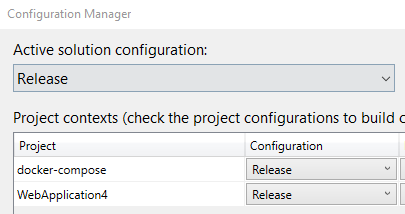

To publish the local Docker image to the registry, we want a release build of the application, so first change the build configuration to Release. A handy way to do this is to right-click the solution, open the Configuration Manager, and select the Release configuration. Once that is done, build the solution again.

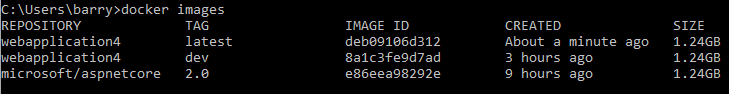

Open a command prompt to see which images are available after the build. Type in the following in the command prompt:

    docker images

This results in a list of local Docker images, like this:

In my case, the webapplication4 image is the one we want. The one tagged latest was just added by the release build. Now we need to push this image to the Azure Container Registry. To do that, we first need to log in to the container registry, like this:

    docker login CONTAINERREGISTRYURL -u CONTAINERREGISTRYNAME -p CONTAINERREGISTRYPASSWORD

You can find this information in the Access Keys tab of the Container Registry. Here is what this looks like for me (don’t bother to try these credentials, I’ve deleted the repository already).

    docker login barriescontainerregistry.azurecr.io -u barriescontainerregistry -p DEen2rwlgnYM2sT7W/y57MKNU8iyTqZk+

After logging in, we need to tag the image before pushing it:

    docker tag webapplication4 barriescontainerregistry.azurecr.io/demos/webapp4

Now that we have created the tag, we can push the image to the registry:

    docker push barriescontainerregistry.azurecr.io/demos/webapp4

That’s it. The image is now in the Azure Container Registry and can be used by the Azure Container Instances service.
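The login, tag, and push commands can be bundled into one small script. The sketch below is one possible way to do that, under a few assumptions: a Bash-compatible shell, a login server of the form `<registry>.azurecr.io` with an admin username equal to the registry name (as in the example above), and the admin password supplied through a hypothetical `ACR_PASSWORD` environment variable. It uses `--password-stdin` instead of `-p` so the password does not end up in the shell history. The function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: log in to the registry, tag the local image, and push it.
# Assumption: ACR_PASSWORD holds the registry admin password.

# Build the full image reference, e.g. myregistry.azurecr.io/demos/webapp4.
acr_image_ref() {
  echo "$1.azurecr.io/$2"
}

push_to_acr() {
  local local_image="$1"   # local image name, e.g. webapplication4
  local registry="$2"      # registry name, e.g. barriescontainerregistry
  local repository="$3"    # repository path, e.g. demos/webapp4
  local target
  target="$(acr_image_ref "$registry" "$repository")"

  # Read the password from stdin rather than passing it as an argument.
  printf '%s' "$ACR_PASSWORD" | docker login "$registry.azurecr.io" \
    --username "$registry" --password-stdin || return 1

  # Tag the local image with the registry address, then push it.
  docker tag "$local_image" "$target" || return 1
  docker push "$target"
}

# Usage (not executed here):
#   ACR_PASSWORD='...' push_to_acr webapplication4 barriescontainerregistry demos/webapp4
```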


Step 3: Use the image in Azure Container Instances

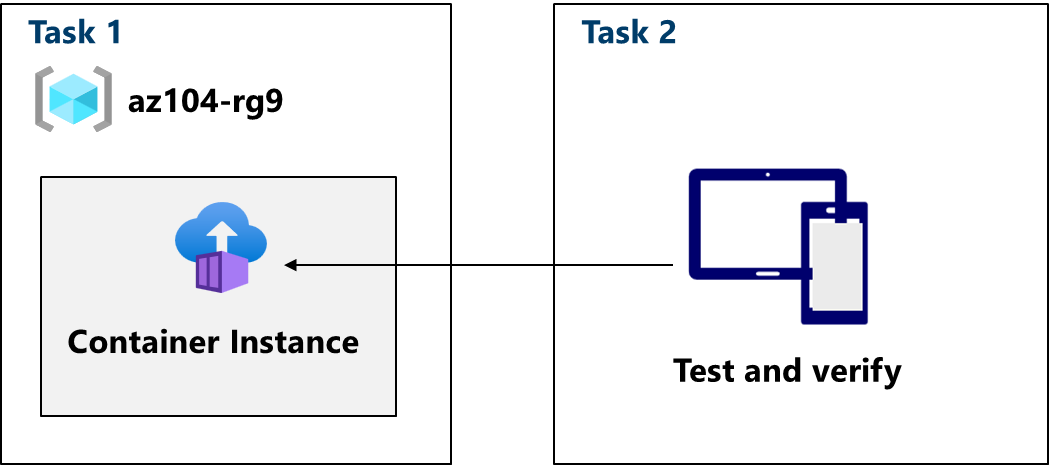

The image is now in the Azure Container Registry. Let’s create a new Azure Container Instance with the image to see if it will run in the cloud.

- In the Azure Portal, click the Create a resource button (green plus in the upper-left corner)

- Next, search for azure container instance and click Create

- Now, fill in the first step of the wizard like in the image below. Again, you can find the information for the Container Registry in the Access Keys tab of the Container Registry

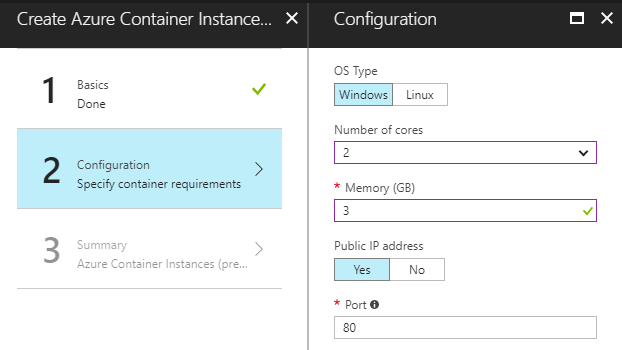

- Now for step 2 of the wizard. Fill it in like in the image below. Be sure to select Windows for the OS type

- In step 3 of the wizard, everything will be summarized.

- Click Create

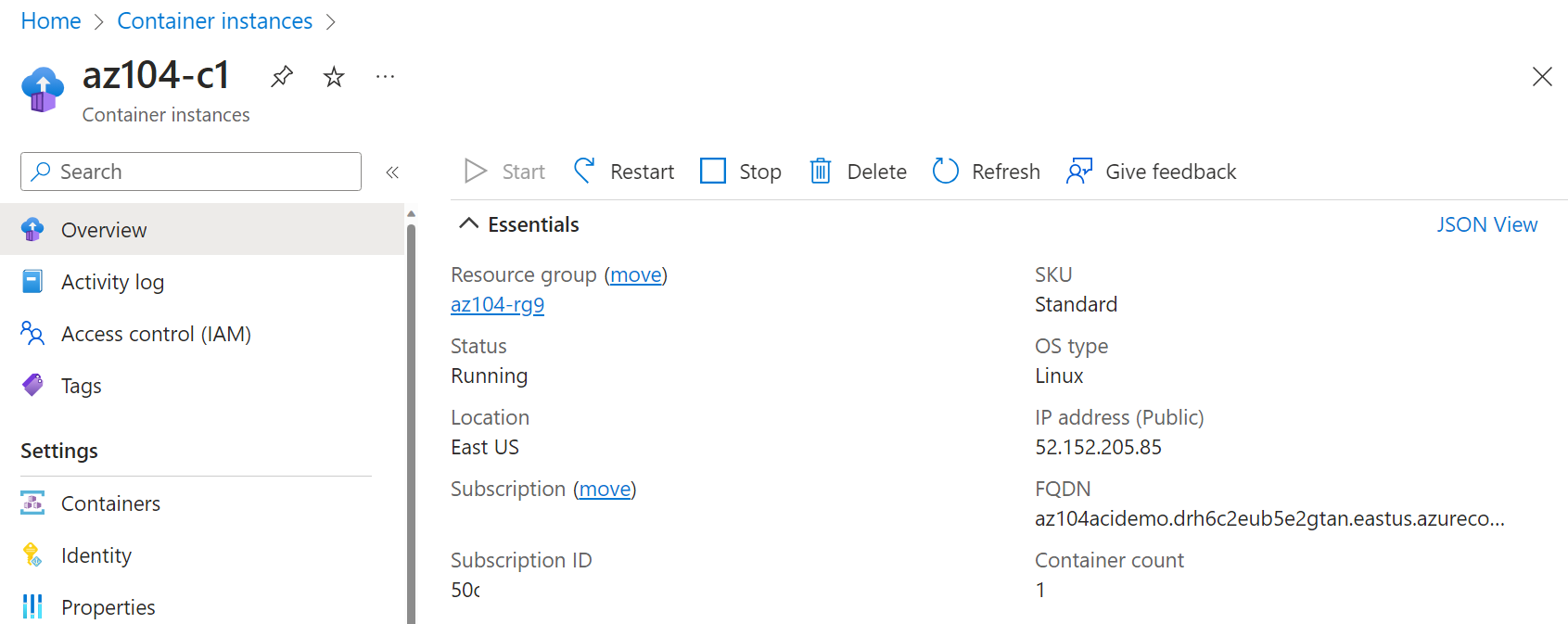

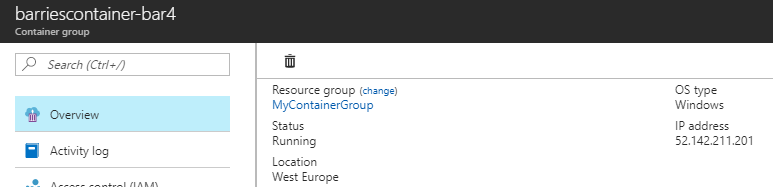

The Container Instance will now be created. When it is done, you can find an IP address in its overview in the Azure Portal.

When you navigate to that address, you’ll see the ASP.NET Core application that is exposed on port 80.
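As an alternative to the portal wizard, the container instance can be created with the Azure CLI. This is a sketch, not the procedure used above. It assumes a Bash-compatible shell, that `az login` has been run, that the registry login server is `<registry>.azurecr.io` with the registry name as admin username, and that the admin password is in a hypothetical `ACR_PASSWORD` environment variable. The function name `create_container_instance`, the resource group `demo-rg`, and the instance name `webapp4` are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: run the pushed image in Azure Container Instances and print its IP.
# Assumption: ACR_PASSWORD holds the registry admin password.

create_container_instance() {
  local group="$1"        # resource group (hypothetical: demo-rg)
  local name="$2"         # container instance name (hypothetical: webapp4)
  local registry="$3"     # registry name, e.g. barriescontainerregistry
  local repository="$4"   # repository path, e.g. demos/webapp4

  # Windows OS type, public IP address, and port 80, as in the wizard steps above.
  az container create \
    --resource-group "$group" \
    --name "$name" \
    --image "$registry.azurecr.io/$repository" \
    --registry-login-server "$registry.azurecr.io" \
    --registry-username "$registry" \
    --registry-password "$ACR_PASSWORD" \
    --os-type Windows \
    --ip-address Public \
    --ports 80 || return 1

  # Print the public IP address once the instance exists.
  az container show --resource-group "$group" --name "$name" \
    --query ipAddress.ip --output tsv
}

# Usage (not executed here):
#   ACR_PASSWORD='...' create_container_instance demo-rg webapp4 barriescontainerregistry demos/webapp4
```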

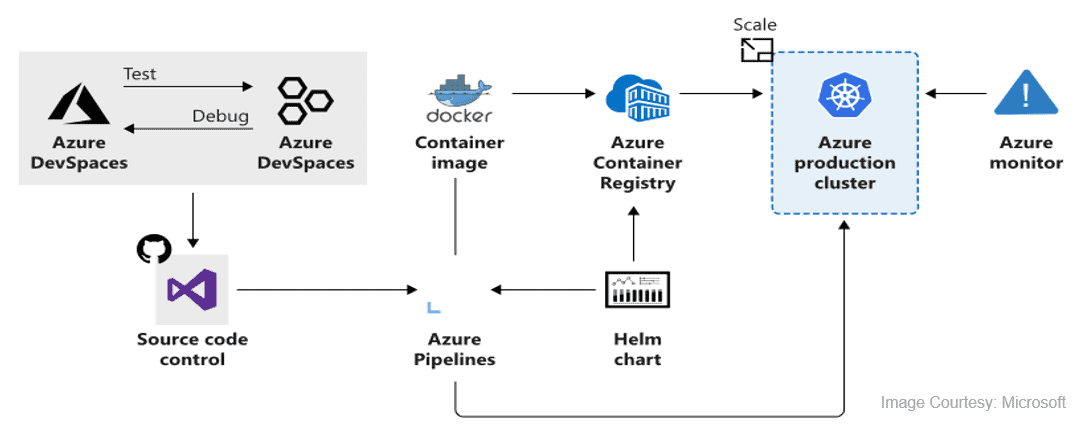

Something to consider: automation

The steps I took to build the container image, push it to Azure Container Registry and get it running in Azure Container Instances are a bit much, if you ask me. First of all, I would love to see the tooling evolve to provide us a first-class Visual Studio experience to build images locally and push them to where they need to be – I’m confident that Microsoft will deliver on that.

And secondly, these steps should ideally be automated. You can use something like Visual Studio Team Services to perform the application build steps and compose the image and then push it to Azure Container Registry and to Container Instances. You can read how to do this in the tutorial here. The article describes pushing to Azure Container Services instead of Container Instances, but the concepts remain the same.