Getting Started with Azure Functions: A Step-by-Step Guide

Introduction

Azure Functions is a serverless computing service provided by Microsoft Azure that allows developers to build and deploy event-driven applications without the need to manage infrastructure. With Azure Functions, developers can focus solely on writing code to handle specific events, leaving the underlying infrastructure and scaling aspects to the Azure platform. This enables faster development, reduced operational costs, and enhanced scalability.

Key Features of Azure Functions

- Event-driven Computing: Azure Functions are triggered by various events, such as HTTP requests, timers, messages in queues, file uploads, or changes to data in databases. This event-driven approach allows developers to respond to specific events in real-time without the need to maintain persistent server instances.

- Serverless Architecture: Azure Functions follows the serverless computing paradigm, meaning there are no servers to provision or manage. The platform automatically handles infrastructure scaling, ensuring resources are allocated based on the actual demand, making it highly cost-efficient.

- Language and Platform Support: Azure Functions supports multiple programming languages, including C#, JavaScript, Java, Python, and TypeScript. This flexibility allows developers to work with their preferred language and leverage existing codebases seamlessly.

- Pay-as-You-Go Pricing: Azure Functions offers a pay-as-you-go billing model. You pay only for the compute resources used while your functions execute, so you are charged for actual usage without any upfront commitment.

- Integration with Azure Services: Azure Functions integrates with Azure services such as Azure Storage, Azure Cosmos DB, Azure Service Bus, Azure Event Grid, and more. This integration allows developers to build sophisticated applications by combining the power of different services.
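To make the pay-as-you-go model more concrete, here is a minimal sketch of how a usage-based bill could be estimated from the number of executions and the memory-time consumed (GB-seconds). The function name, the rates, and the free grants below are illustrative assumptions, not quoted prices; check the Azure pricing page for current values.

```python
def estimate_monthly_cost(
    executions: int,
    avg_duration_s: float,
    avg_memory_gb: float,
    price_per_million_executions: float,
    price_per_gb_second: float,
    free_executions: int = 0,
    free_gb_seconds: float = 0.0,
) -> float:
    """Estimate a usage-based bill from execution count and GB-seconds."""
    # Memory-time consumed: executions x seconds per execution x GB held.
    gb_seconds = executions * avg_duration_s * avg_memory_gb

    # Only usage above the free grants is billed.
    billable_executions = max(0, executions - free_executions)
    billable_gb_seconds = max(0.0, gb_seconds - free_gb_seconds)

    execution_cost = billable_executions / 1_000_000 * price_per_million_executions
    compute_cost = billable_gb_seconds * price_per_gb_second
    return round(execution_cost + compute_cost, 2)


# Illustrative numbers only (assumed rates, not official pricing).
cost = estimate_monthly_cost(
    executions=3_000_000,
    avg_duration_s=1.0,
    avg_memory_gb=0.5,
    price_per_million_executions=0.20,
    price_per_gb_second=0.000016,
    free_executions=1_000_000,
    free_gb_seconds=400_000,
)
print(cost)
```

The point of the sketch is that an idle function contributes nothing to either term, which is where the savings over an always-on server come from.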

Benefits of Using Azure Functions

- Faster Time to Market: With the ability to focus solely on business logic and event handling, developers can quickly build and deploy applications, reducing development time and accelerating time to market.

- Cost Savings: As Azure Functions automatically scales based on demand, there’s no need to pay for idle server resources, resulting in cost savings, especially for applications with varying workloads.

- Scalability and Elasticity: Azure Functions scales automatically to handle a large number of events concurrently. This elasticity ensures that your applications can handle high traffic and sudden spikes without any performance degradation.

- Serverless Management: Microsoft Azure handles all the server management, including updates, security patches, and scaling, freeing developers from operational overhead and allowing them to focus on code development.

- Event-Driven Architecture: The event-driven nature of Azure Functions enables the decoupling of application components, making it easier to build scalable and resilient microservices architectures.

Types of Azure Functions

- HTTP Trigger: This type of function is triggered by an HTTP request. It can be used to build web APIs and handle HTTP-based interactions.

- Timer Trigger: A timer trigger executes a function on a predefined schedule or at regular intervals. It is useful for performing tasks such as data synchronization, periodic processing, or generating reports.

- Blob Trigger: This type of function is triggered when a blob is added to or updated in an Azure Storage container. It enables you to automate processes based on changes in storage blobs.

- Queue Trigger: A queue trigger fires when a message is added to an Azure Storage queue. It provides a way to process messages in a queue-based architecture, allowing you to build event-driven applications.

- Event Grid Trigger: An event grid trigger is invoked when an event is published to an Azure Event Grid topic or domain. It enables reactive processing of events and can be used to build event-driven architectures.

- Cosmos DB Trigger: This type of function is triggered when documents in an Azure Cosmos DB container are inserted or updated. It allows you to build real-time data processing and synchronization scenarios.

- Service Bus Trigger: A service bus trigger is invoked when a new message arrives in an Azure Service Bus queue or topic subscription. It is suitable for building decoupled messaging-based systems.

- Event Hub Trigger: An event hub trigger is invoked when new events are published to an Azure Event Hub. It enables high-throughput event ingestion and processing scenarios.
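In the Python programming model used later in this guide (the one that generates `__init__.py`), each function declares its trigger as a binding entry in a `function.json` file. The sketch below shows roughly what such entries look like for three of the trigger types above. The helper name, binding names, paths, queue name, and schedule are placeholders chosen for illustration.

```python
import json


def trigger_binding(trigger_type: str, name: str, **settings) -> dict:
    """Build one inbound trigger entry for a function.json 'bindings' list."""
    return {"type": trigger_type, "direction": "in", "name": name, **settings}


# Placeholder names, paths, and connection setting names throughout.
examples = {
    # Six-field NCRONTAB expression: run every five minutes.
    "timer": trigger_binding("timerTrigger", "mytimer", schedule="0 */5 * * * *"),
    # Fire for blobs in the 'samples' container; {name} captures the blob name.
    "blob": trigger_binding(
        "blobTrigger", "myblob", path="samples/{name}", connection="AzureWebJobsStorage"
    ),
    # Fire for each message placed on the 'orders' queue.
    "queue": trigger_binding(
        "queueTrigger", "msg", queueName="orders", connection="AzureWebJobsStorage"
    ),
}

for kind, binding in examples.items():
    print(kind, json.dumps(binding))
```

The other triggers follow the same pattern with their own `type` value and settings, so switching trigger types is mostly a configuration change rather than a code change.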

Let’s dive into a real-world example using an HTTP Trigger function in Azure Functions:

Assume that we have data in Azure Data Lake Storage Gen2 (ADLS Gen2). We want an Azure Function to read that data and write it back to the ADLS Gen2 storage account.

To accomplish this, we will follow a two-step approach: first, we’ll create an Azure Function in the Azure cloud, and second, we’ll develop and deploy the code using Visual Studio Code (VS Code) from the local development environment.

Pre-Requisites:

- Azure Account (Free or pay-as-you-go).

- Azure Data Lake Gen2 Storage Account.

- Azure Function App.

- Azure Data Factory.

Step 1: Create an Azure function in the Azure cloud

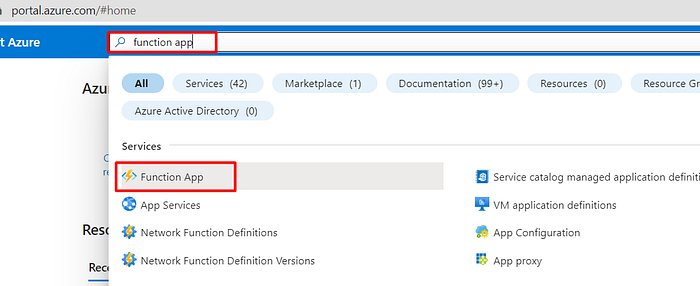

1. Log in to your Azure account and use the search bar at the top to search for “function app”.

2. Select the Function app and click ‘+ Create’ to create a new Azure function. Provide the necessary details to create an Azure function for your requirements.

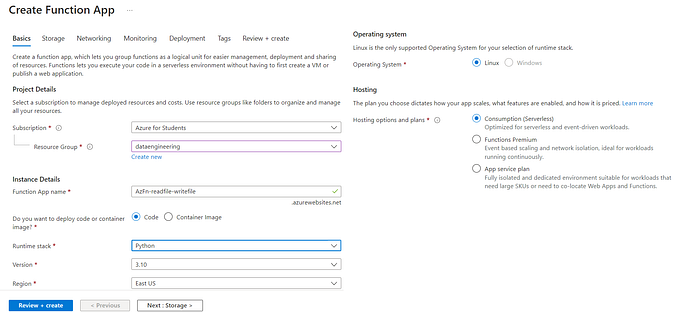

Select the Subscription, Resource group, and Function App name, and choose whether to deploy code or a container.

The Runtime stack offers multiple options: .NET, Node.js, Python, Java, PowerShell Core, and Custom Handler. After choosing one, select the version of the runtime stack and the Region.

I am using Python for this example.

Azure Functions supports only the Linux operating system for the Python runtime stack. That doesn’t mean we can’t develop on Windows; the function app simply runs on Linux in Azure, and Azure takes care of that.

Hosting options and plans: for a better understanding, refer to the Azure Functions hosting plans documentation.

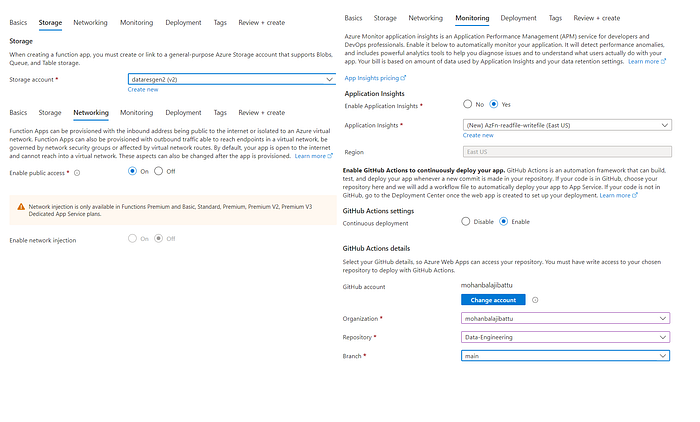

3. Click Next: Storage and select an existing storage account, or let Azure create a new one to store the Azure function details.

Networking: configures access to the function app.

Monitoring: As a beginner, you need this feature to check for errors while deploying the code. But everything costs money, so don’t forget to delete all resources after use.

Deployment: I am using my GitHub account to maintain this code as a backup.

After providing all these details, click “Review + create” to create the function app. Azure validates the details we provided and enables the “Create” option if they are all correct. Click “Create”.

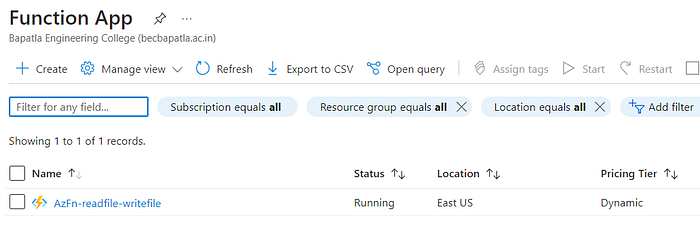

4. After deployment is done, go to the function app and check whether the resource has been created.

Step 2: Create an Azure function template and deploy the code to the function app.

Pre-Requisites:

- Azure Function App.

- Visual Studio Code.

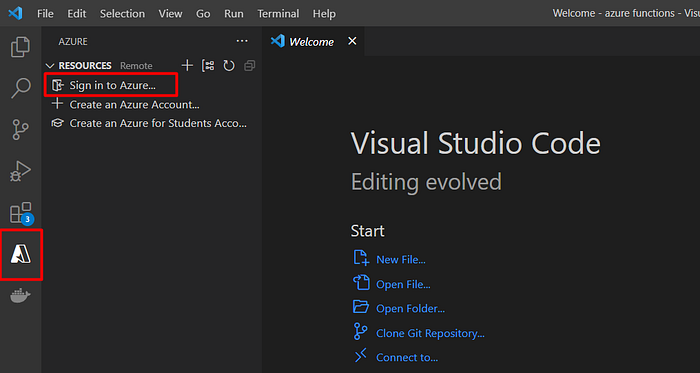

1. Open VS Code, click “Azure” in the left sidebar, and sign in to your Azure account.

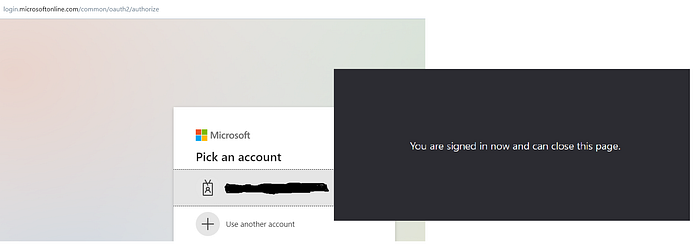

This opens the Azure login page in your browser. Sign in there.

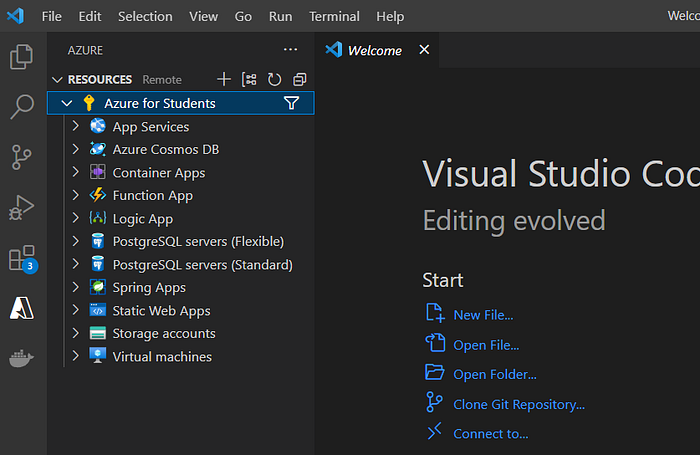

After a successful sign-in, you can see your subscriptions and resources.

2. Open Extensions (the icon above Azure in the sidebar) and install the Azure Functions extension and the Azure Account extension.

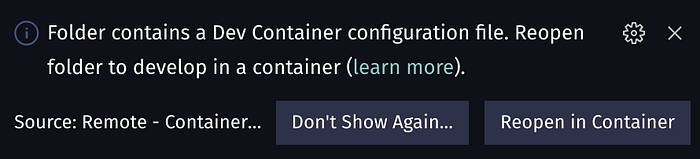

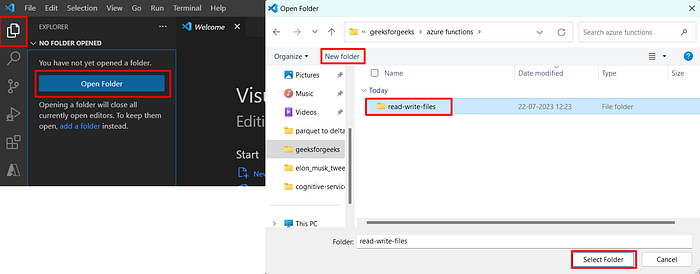

Click Explorer (the first icon from the top) and then click Open Folder to choose where the Azure function will be created. Browse to the location you want and select a folder.

After this, you can see your folder name in Explorer.

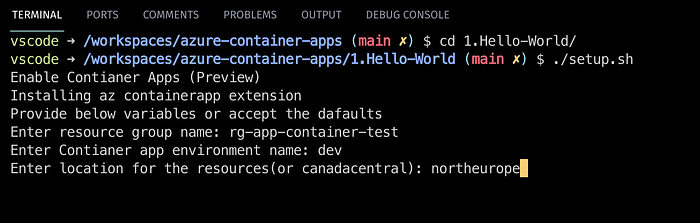

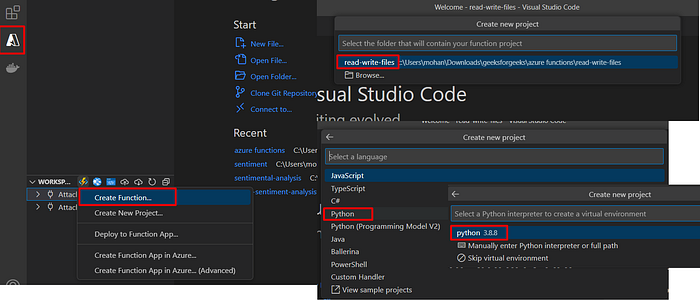

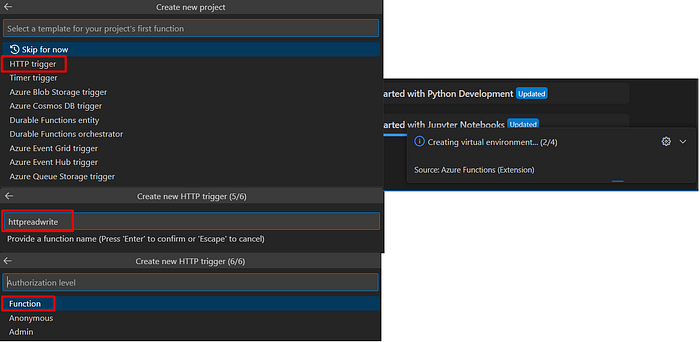

3. In the Azure tab in VS Code, click the function app symbol and select Create Function. Then select the folder, language, and version.

Select the type of trigger you want to create, the function name, and the authorization level. After this, you can see the progress of creating the Azure function at the bottom right of VS Code.
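The trigger type and authorization level chosen here end up in the function's `function.json` file. As a rough sketch, the generated configuration for an HTTP trigger resembles the dictionary built below; the helper name `http_function_json` is hypothetical and exists only to illustrate the structure and the allowed authorization levels.

```python
import json

# Authorization levels accepted by the HTTP trigger binding.
VALID_AUTH_LEVELS = {"anonymous", "function", "admin"}


def http_function_json(auth_level: str = "function", methods=("get", "post")) -> dict:
    """Build a function.json-style configuration for an HTTP-triggered function."""
    if auth_level not in VALID_AUTH_LEVELS:
        raise ValueError(f"Unknown authorization level: {auth_level!r}")
    return {
        "scriptFile": "__init__.py",
        "bindings": [
            {
                # Inbound binding: the HTTP request is passed to main() as 'req'.
                "authLevel": auth_level,
                "type": "httpTrigger",
                "direction": "in",
                "name": "req",
                "methods": list(methods),
            },
            # Outbound binding: the value returned by main() becomes the HTTP response.
            {"type": "http", "direction": "out", "name": "$return"},
        ],
    }


config = http_function_json("function")
print(json.dumps(config, indent=2))
```

With the `function` level, callers must supply a function key, whereas `anonymous` accepts any request; that choice matters when the function is later called from outside the portal.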

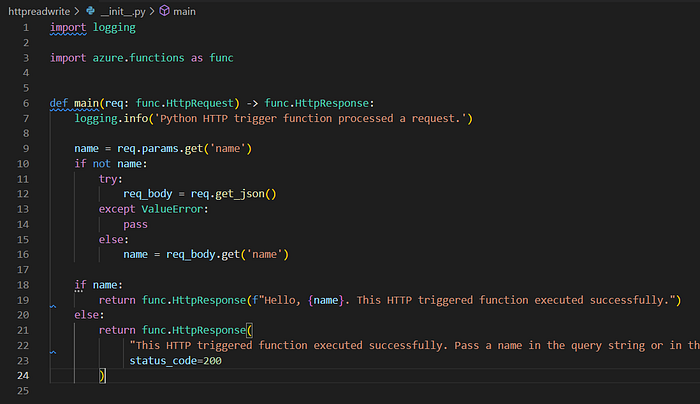

VS Code then creates a Python file named `__init__.py` that contains some default template code.
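The generated HTTP trigger template typically resembles the sketch below. It is an approximation rather than a verbatim copy: the exact text can differ between extension versions, the `build_message` helper is a small refactoring added here so the response logic can be tried on its own, and the guarded import is only there so the file can be loaded outside a Functions project.

```python
import logging

try:
    import azure.functions as func  # installed in a Functions project via requirements.txt
except ImportError:  # allows the helper below to be tried without the package
    func = None


def build_message(name):
    """Return the response text for an optional caller-supplied name."""
    if name:
        return f"Hello, {name}. This HTTP triggered function executed successfully."
    return (
        "This HTTP triggered function executed successfully. "
        "Pass a name in the query string or in the request body for a personalized response."
    )


def main(req: "func.HttpRequest") -> "func.HttpResponse":
    logging.info("Python HTTP trigger function processed a request.")

    # Look for ?name=... first, then fall back to a JSON body such as {"name": "..."}.
    name = req.params.get("name")
    if not name:
        try:
            name = req.get_json().get("name")
        except ValueError:
            name = None  # body was missing or not valid JSON

    return func.HttpResponse(build_message(name), status_code=200)
```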

For our example, we don’t need all of the default code. `main` is the actual function that receives and responds to a request.

4. Read the file from ADLS Gen2 and write back to ADLS Gen2.

For this, I am uploading a sample data file into the ADLS Gen2 account. It will also be available on my GitHub, and I will provide a link to all code and functions at the end.

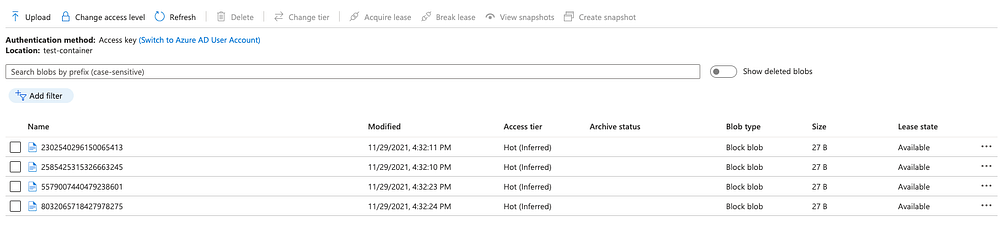

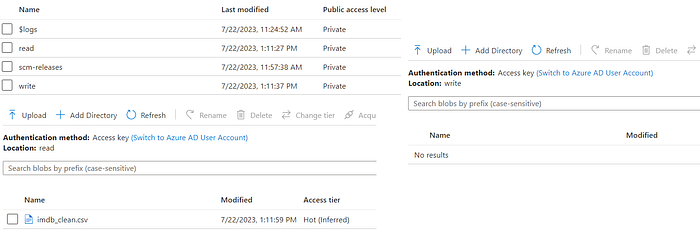

I have created two containers, read and write. The images show the containers and their contents before running the Azure function.

I have developed the code to read from ADLS Gen2 and write back to it in a different container.
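The author's code is not reproduced here, so the following is only a minimal sketch of one way to do this with the `azure-storage-file-datalake` package. The container names `read` and `write` come from this guide; the function names, the `ADLS_CONNECTION_STRING` app setting, and the `sample.csv` file name are assumptions made for illustration.

```python
import os


def copy_file(service_client, file_name, source_container="read", target_container="write"):
    """Download file_name from the source container and upload it to the target container."""
    source = service_client.get_file_system_client(source_container).get_file_client(file_name)
    data = source.download_file().readall()

    target = service_client.get_file_system_client(target_container).get_file_client(file_name)
    target.upload_data(data, overwrite=True)
    return len(data)  # number of bytes copied


def make_service_client():
    """Create a client from a connection string held in an app setting (hypothetical name)."""
    # Imported lazily so copy_file can be exercised without the SDK installed.
    from azure.storage.filedatalake import DataLakeServiceClient

    return DataLakeServiceClient.from_connection_string(os.environ["ADLS_CONNECTION_STRING"])


# Inside the HTTP-triggered main() this could be called as, for example:
#     copied = copy_file(make_service_client(), "sample.csv")
```

Passing the client in as a parameter keeps the copy logic independent of how authentication is done, so a connection string could later be swapped for another credential without touching `copy_file`.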

I also tested it locally, and it works fine. Let’s look at the deployment process.

Deployment:

Note:

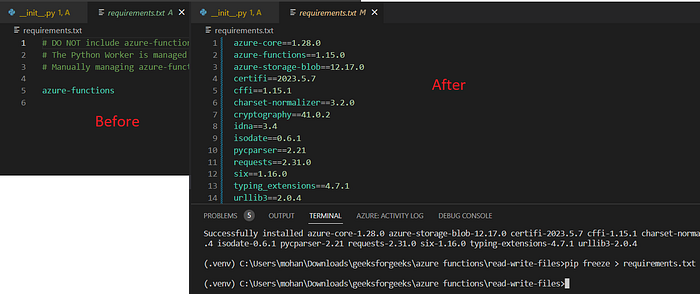

Before deployment, make sure that the requirements.txt file lists all the modules used in the function.

To auto-generate these module names, you can run the command below in the command prompt.

pip freeze > requirements.txt
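Because `pip freeze` lists everything installed in the current environment, it can help to double-check that the packages the function actually imports are present in the file. The sketch below is a small, hypothetical helper for that check; the package list and the sample file content are assumptions for illustration.

```python
import re


def missing_requirements(requirements_text: str, needed: list) -> list:
    """Return the packages from `needed` that are not listed in requirements.txt content."""
    listed = set()
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        # Keep only the package name, dropping version pins such as ==1.2.3 or >=1.0.
        name = re.split(r"[=<>!~\[; ]", line, maxsplit=1)[0]
        listed.add(name.lower().replace("_", "-"))
    return [pkg for pkg in needed if pkg.lower().replace("_", "-") not in listed]


# Sample content standing in for a real requirements.txt file.
sample = """
azure-functions
azure-storage-file-datalake>=12.0.0
"""
print(missing_requirements(sample, ["azure-functions", "azure-storage-file-datalake", "pandas"]))
```

Any package reported as missing would cause an import error after deployment, since the function app installs only what requirements.txt lists.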

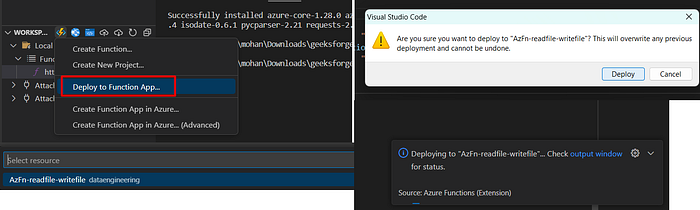

Go to the Azure tab in VS Code, click the function app symbol, select Deploy to Function App, and then select the function app that we created in the Azure account.

Click “Deploy” on the pop-up to start the deployment to the Azure cloud.

Testing:

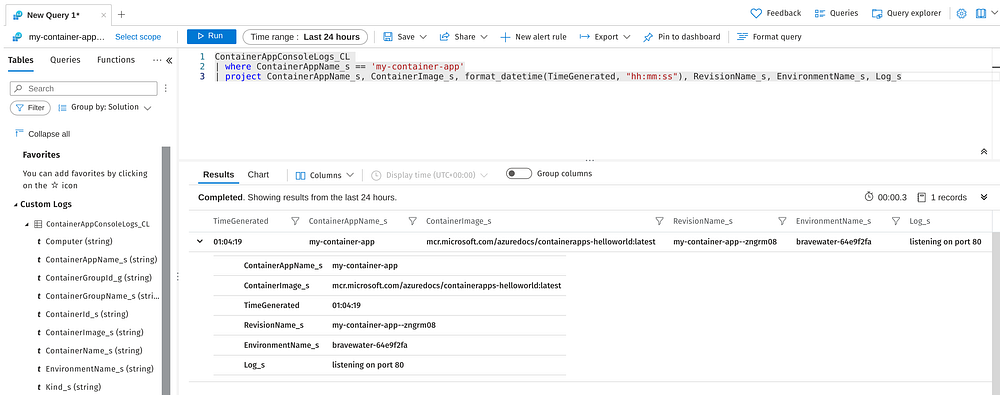

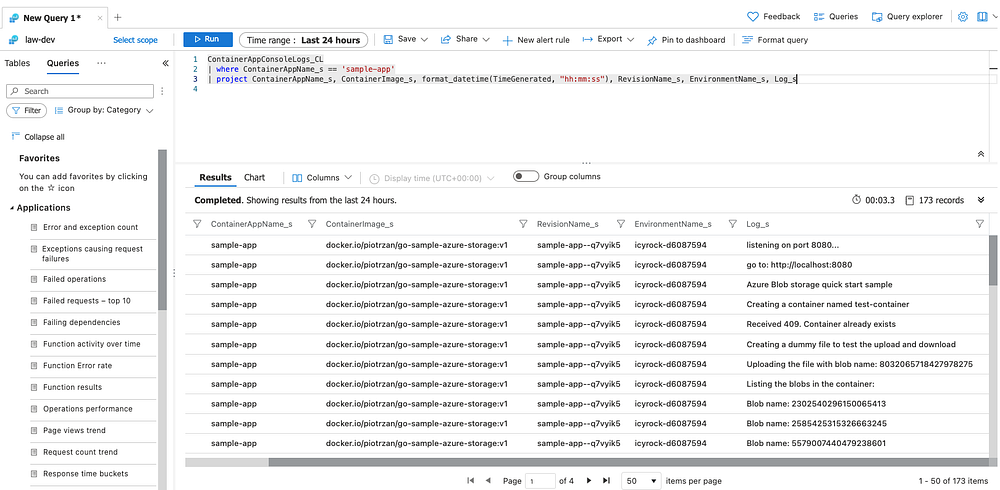

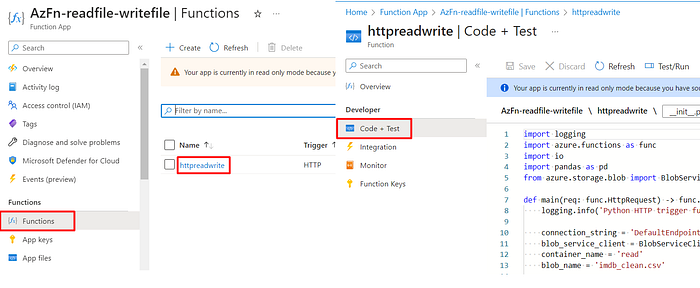

After deployment is done, it is time for testing. Go to the Azure account, open the function app, and go to Functions. There you can find the function that we developed in VS Code.

Click on the function, and then click “Code + Test”.

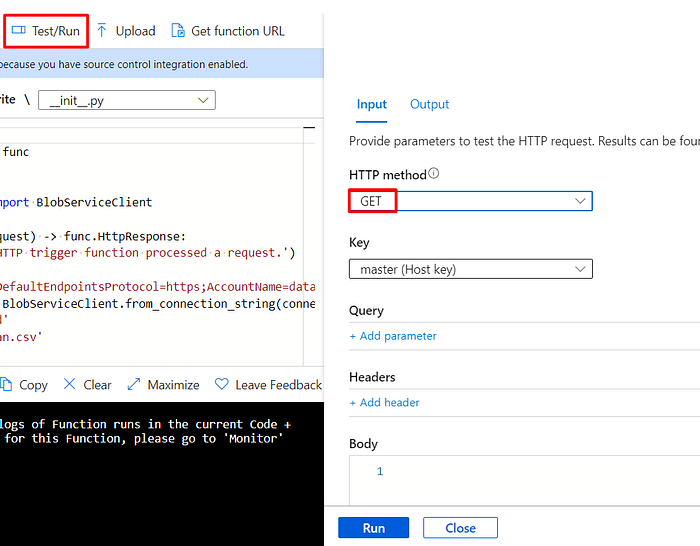

Click Test/Run, provide the required information, select the GET method, and click Run.
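The same GET request can also be sent from outside the portal. The following is a minimal sketch using only the Python standard library; the app name, function name, query parameter, and key are placeholders, and the `code` query parameter is needed only when the function uses a key-based authorization level.

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def function_url(app_name, function_name, params=None, key=None):
    """Build the public URL of an HTTP-triggered function."""
    query = dict(params or {})
    if key:
        query["code"] = key  # function key, required for 'function' auth level
    url = f"https://{app_name}.azurewebsites.net/api/{function_name}"
    return f"{url}?{urlencode(query)}" if query else url


def call_function(url, timeout=30):
    """Send a GET request and return (status code, response body)."""
    with urlopen(url, timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")


# Placeholder values; replace them with your own app, function, and key.
url = function_url("my-function-app", "HttpTrigger1", params={"name": "Azure"}, key="abc123")
print(url)
# status, body = call_function(url)  # uncomment to send the request
```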

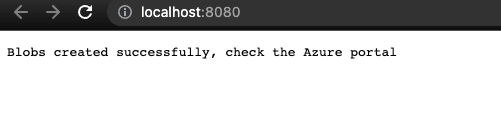

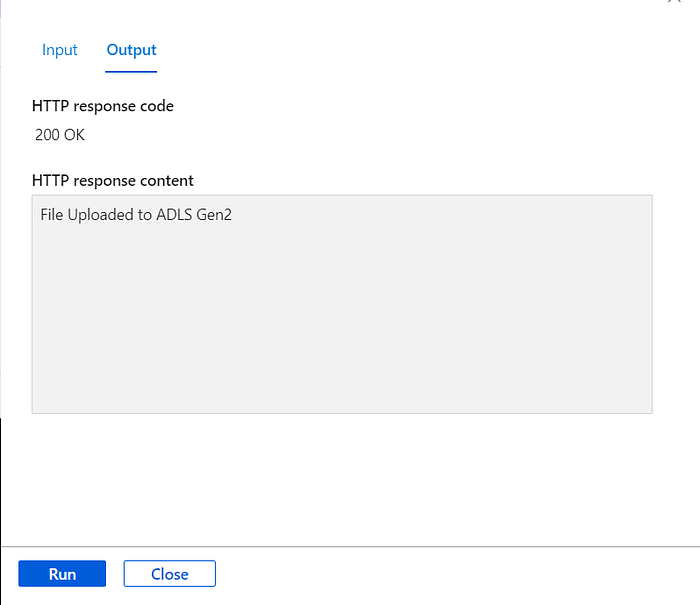

If the function executes successfully without errors, the output will look like the following.

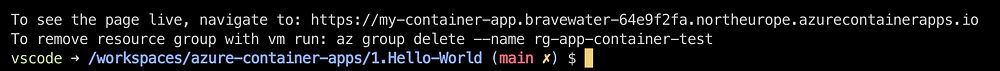

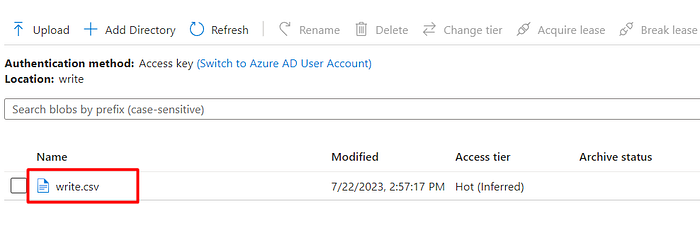

Let’s check the write container in the ADLS Gen2 account to see whether the file has been created.

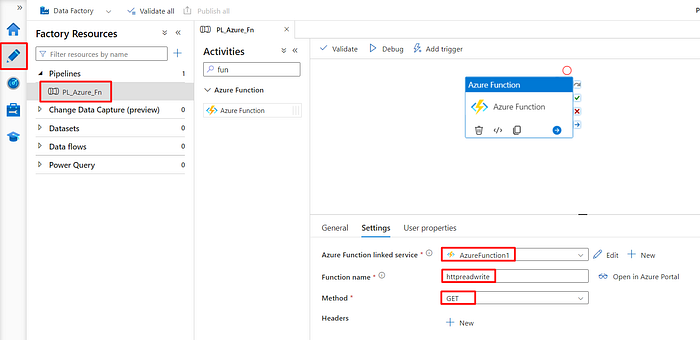

Now that we have successfully developed and tested the Azure Function locally and in Azure, it’s time to explore how we can automate running it using Azure Data Factory (ADF) pipelines.

Pre-Requisites:

- Linked services to the storage account and the Azure function.

1. Open your Azure Data Factory and go to Author. Create a new pipeline, then search for “Azure Function” under Activities on the left side. Drag and drop the activity onto the pipeline, and configure it by providing the necessary details.

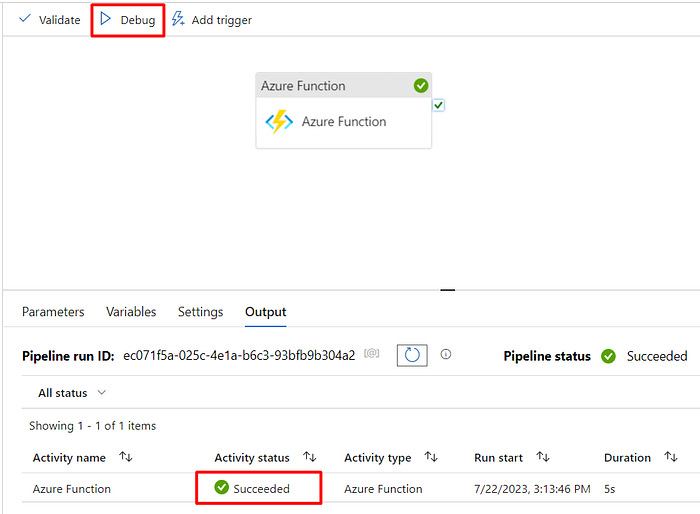

2. Click Debug to run the pipeline and execute the function.
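Behind the designer, the pipeline is stored as JSON. As a rough sketch, an Azure Function activity definition has approximately the shape built below; the pipeline, activity, linked service, and function names are placeholders, and the helper itself is hypothetical.

```python
import json


def azure_function_activity(name, function_name, linked_service, method="GET"):
    """Build a pipeline activity entry that calls an Azure Function."""
    return {
        "name": name,
        "type": "AzureFunctionActivity",
        # Points at the Azure Function linked service listed in the prerequisites.
        "linkedServiceName": {
            "referenceName": linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {"functionName": function_name, "method": method},
    }


# Placeholder names throughout.
pipeline = {
    "name": "RunCopyFunction",
    "properties": {
        "activities": [
            azure_function_activity("CallHttpTrigger", "HttpTrigger1", "AzureFunctionLinkedService")
        ]
    },
}
print(json.dumps(pipeline, indent=2))
```

Viewing the pipeline's JSON in the ADF authoring UI is a quick way to compare the configured activity against this shape.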