AWS S3 (Simple Storage Service) and AWS EBS (Elastic Block Store) are two different types of storage services provided by Amazon Web Services. This article highlights some major differences between Amazon S3 and Amazon EBS.

AWS Storage Options:

Amazon S3: Amazon S3 is a simple storage service offered by Amazon. It is useful for hosting website images and videos, data analytics, and similar workloads. S3 is object-level storage that distributes data objects across several machines and lets users access them over the internet from anywhere in the world.

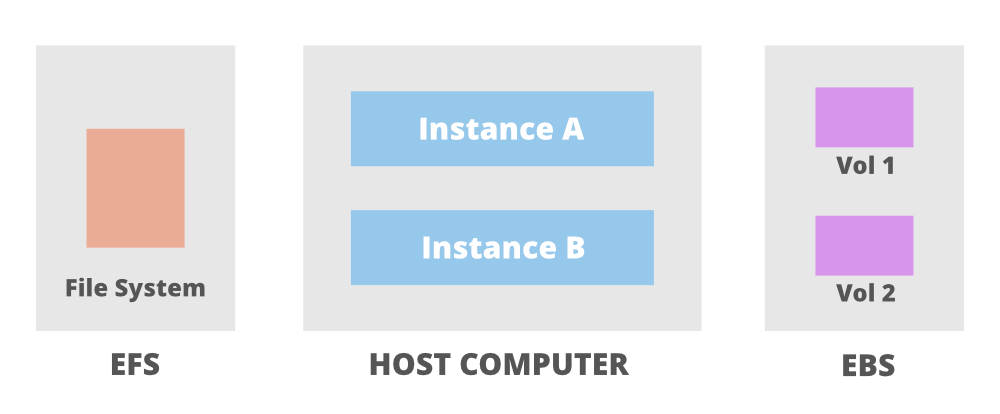

Amazon EBS: Unlike Amazon S3, Amazon EBS is block-level storage. Block storage splits data into fixed-size blocks held on volumes, each of which behaves like a separate hard drive. A volume is reached through the EC2 instance it is attached to rather than directly over the internet. Use cases include business continuity, transactional and NoSQL databases, software testing, etc.

Comparison based on Characteristics:

1. Storage type

Amazon Simple Storage Service is object storage designed for storing large numbers of user files and backups, whereas Elastic Block Store is block storage for Amazon EC2 compute instances. It is similar to the hard drive attached to a computer or laptop; the only difference is that it is used by virtualized instances.
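The difference between the two storage models can be sketched with a toy in-memory example. This is only an illustration of the access patterns, not how S3 or EBS are implemented; the class names `ObjectStore` and `BlockVolume` and the block size are assumptions made for the sketch.

```python
class ObjectStore:
    """Toy object store: whole objects addressed by key (S3-like model)."""

    def __init__(self):
        self._objects = {}

    def put(self, key: str, data: bytes) -> None:
        # An object is written (or replaced) as a whole.
        self._objects[key] = bytes(data)

    def get(self, key: str) -> bytes:
        return self._objects[key]


class BlockVolume:
    """Toy block device: fixed-size blocks addressed by index (EBS-like model)."""

    def __init__(self, num_blocks: int, block_size: int = 4096):
        self.block_size = block_size
        self._blocks = [bytes(block_size) for _ in range(num_blocks)]

    def write_block(self, index: int, data: bytes) -> None:
        if len(data) > self.block_size:
            raise ValueError("data does not fit in one block")
        # Pad with zero bytes so every block keeps the same size.
        self._blocks[index] = data.ljust(self.block_size, b"\x00")

    def read_block(self, index: int) -> bytes:
        return self._blocks[index]


store = ObjectStore()
store.put("images/logo.png", b"fake image bytes")

volume = BlockVolume(num_blocks=8, block_size=16)
volume.write_block(3, b"row-42")
```

An object is always read and written as a unit under a key, while a block volume lets a file system or database update individual blocks in place, which is why block storage suits workloads with many small random writes.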

2. Accessibility

The files within an S3 bucket are stored in an unstructured manner and can be retrieved over HTTP or HTTPS, whereas the data stored in EBS is accessible only by the instance to which the volume is attached.
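As a small illustration of HTTP access, the sketch below builds a virtual-hosted-style URL for an object. The bucket name, key, and region are placeholders, and the helper `s3_object_url` is a name invented for this example. Such a URL only returns the object if it is publicly readable or the request is signed.

```python
from urllib.parse import quote


def s3_object_url(bucket: str, key: str, region: str) -> str:
    """Build a virtual-hosted-style HTTPS URL for an S3 object."""
    # Keep "/" unescaped so key prefixes still look like folders.
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key, safe='/')}"


# Placeholder bucket, key, and region for illustration only.
url = s3_object_url("example-bucket", "videos/intro clip.mp4", "us-east-1")
print(url)
```

There is no equivalent URL for an EBS volume: its data is read through the file system of the instance it is attached to.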

3. Availability

Both S3 and EBS offer 99.99% availability. The difference is that S3 is accessed over the internet through APIs, while an EBS volume is accessed by the instance it is attached to.
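To put the 99.99% figure in perspective, the following sketch converts an availability percentage into the downtime it permits per year. It assumes a 365-day year and treats the percentage as a simple fraction of total time.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # minutes in a non-leap year


def max_downtime_minutes(availability_percent: float) -> float:
    """Downtime per year permitted by a given availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


downtime = max_downtime_minutes(99.99)
print(f"{downtime:.2f} minutes per year")
```

Under these assumptions, 99.99% availability allows a little under an hour of downtime per year.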

4. Durability

Amazon S3 provides durability by redundantly storing the data across multiple Availability Zones whereas EBS provides durability by redundantly storing the data in a single Availability Zone.

5. Security, Compliance, and Audit features

Amazon S3 can prevent unauthorized access to data through its access management tools and encryption policies. EBS also supports encryption and IAM controls on volume operations, but the data on a volume is read through the instance it is attached to, so a user who gains unauthorized access to that instance can easily access the attached volume. S3 also has features that make it easier to comply with regulatory requirements.

6. Size of data

Simple Storage Service (S3) can store larger amounts of data than EBS. With S3, the default limit is 100 buckets per account, and each bucket has unlimited capacity, whereas EBS is subject to per-account volume quotas and each volume has a fixed maximum size (16 TiB for most current volume types). EBS therefore has an upper limit on the data a volume can store.

7. Usability

One major limitation of EBS (Elastic Block Store) is that not all EBS volume types can be attached to multiple instances at the same time. The Multi-Attach option is available only for Provisioned IOPS SSD volume types (io1 and io2), whereas objects in S3 are reached over the network through its API, so any number of instances can use the same data at the same time.
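The attachment rule described above can be expressed as a small check. This is a simplified sketch: the function `can_attach` is hypothetical, and real Multi-Attach has further constraints that are not modeled here.

```python
# Volume types that support Multi-Attach, as stated in the text above.
MULTI_ATTACH_TYPES = {"io1", "io2"}


def can_attach(volume_type: str, attached_instances: int) -> bool:
    """Return True if one more instance may attach to the volume."""
    if attached_instances == 0:
        return True  # any volume type can serve a single instance
    return volume_type in MULTI_ATTACH_TYPES


print(can_attach("gp3", 1))  # a second instance on a General Purpose volume
print(can_attach("io2", 1))  # a second instance on a Provisioned IOPS volume
```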

8. Pricing

Amazon S3 follows a utility-based model in which you pay for what you use, whereas with Elastic Block Store you pay for the capacity you provision.

Amazon S3 cost parameters are:

- Free Tier – 5 GB

- First 50 TB/month – $0.023/GB

- Next 450 TB/month – $0.022/GB

- Over 500 TB/month – $0.021/GB
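The tiered rates above can be turned into a small cost calculator. This is a sketch under stated assumptions: the middle tier is read as the next 450 TB after the first 50 TB, 1 TB is taken as 1024 GB, the free tier and request or transfer charges are ignored, and actual prices vary by region and over time.

```python
GB_PER_TB = 1024  # assumption: binary units

# (tier size in GB, price per GB-month); None marks the open-ended last tier.
S3_TIERS = [
    (50 * GB_PER_TB, 0.023),
    (450 * GB_PER_TB, 0.022),
    (None, 0.021),
]


def s3_monthly_cost(stored_gb: float) -> float:
    """Monthly storage cost in USD under the tiered rates listed above."""
    remaining = stored_gb
    cost = 0.0
    for tier_size, price in S3_TIERS:
        if remaining <= 0:
            break
        # Bill as much as fits in this tier, then carry the rest forward.
        billed = remaining if tier_size is None else min(remaining, tier_size)
        cost += billed * price
        remaining -= billed
    return cost


print(round(s3_monthly_cost(1000), 2))            # all within the first tier
print(round(s3_monthly_cost(60 * GB_PER_TB), 2))  # spans the first two tiers
```

Each tier's rate applies only to the data that falls inside that tier, so crossing a threshold lowers the price of the additional data, not of everything already stored.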

Amazon EBS cost parameters are:

- Free Tier – 30 GB, 1 GB snapshot storage

- General Purpose – $0.045/GB per month

- Provisioned IOPS SSD – $0.125/GB per month
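The practical effect of paying for provisioned capacity rather than usage can be seen with a short calculation. The workload below is hypothetical, the rates are the ones listed above, and only storage charges are considered.

```python
def ebs_monthly_cost(provisioned_gb: float, price_per_gb: float) -> float:
    """EBS bills the provisioned size, regardless of how much is used."""
    return provisioned_gb * price_per_gb


def s3_flat_monthly_cost(stored_gb: float, price_per_gb: float = 0.023) -> float:
    """S3 bills the data actually stored (first-tier rate from the list above)."""
    return stored_gb * price_per_gb


# Hypothetical workload: a 500 GB volume is provisioned, but only 120 GB is used.
ebs_cost = ebs_monthly_cost(provisioned_gb=500, price_per_gb=0.125)
s3_cost = s3_flat_monthly_cost(stored_gb=120)
print(f"EBS: ${ebs_cost:.2f}  S3: ${s3_cost:.2f}")
```

The unused 380 GB on the volume is still billed, which is why right-sizing volumes matters more for EBS than for S3.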

9. Scalability

Amazon S3 offers rapid scalability to its users: storage grows and shrinks automatically with the data stored, with no capacity to provision. EBS has no such automatic scaling; the size of a volume has to be changed manually (a volume can be enlarged but not shrunk in place).

10. Performance

- Amazon EBS is the faster of the two and offers higher performance than S3.

11. Latency

- As an EBS volume is attached to an EC2 instance in the same Availability Zone and is accessible only through that instance, it offers lower latency than S3, which is accessed over the internet. EBS also offers SSD-backed volumes, which provide reliable I/O performance.

12. Geographic interchangeability

Amazon EBS has the upper hand in geographic interchangeability of data: the user only needs EBS snapshots, which can then be used to place resources and data in multiple locations.
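Moving a snapshot to another region could look roughly like the sketch below. It assumes the client passed in behaves like a boto3 EC2 client created for the destination region; the helper name `copy_snapshot_to_region` and the IDs in the usage comment are placeholders, not values from this article.

```python
def copy_snapshot_to_region(dest_ec2_client, snapshot_id: str, source_region: str) -> str:
    """Copy an EBS snapshot into the region that dest_ec2_client targets.

    dest_ec2_client is assumed to behave like
    boto3.client("ec2", region_name=<destination region>).
    """
    response = dest_ec2_client.copy_snapshot(
        SourceRegion=source_region,
        SourceSnapshotId=snapshot_id,
        Description=f"Copy of {snapshot_id} from {source_region}",
    )
    # The response carries the ID of the new snapshot in the destination region.
    return response["SnapshotId"]


# Example usage (requires boto3 and AWS credentials; IDs are placeholders):
#   import boto3
#   ec2_west = boto3.client("ec2", region_name="us-west-2")
#   new_id = copy_snapshot_to_region(ec2_west, "snap-0123456789abcdef0", "us-east-1")
```

A volume can then be created from the copied snapshot in any Availability Zone of the destination region.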

13. Backup and restore

For backup purposes, Amazon S3 uses versioning and cross-region replication whereas the backup feature in EBS is supported by snapshots and automated backup.
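A minimal sketch of the two backup mechanisms is shown below. Both helpers take a client object that is assumed to behave like the corresponding boto3 client; the helper names, bucket name, and volume ID are placeholders chosen for the example.

```python
def enable_bucket_versioning(s3_client, bucket: str) -> None:
    """Turn on versioning so overwritten or deleted objects keep old versions."""
    s3_client.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )


def snapshot_volume(ec2_client, volume_id: str, description: str) -> str:
    """Create a point-in-time snapshot of an EBS volume and return its ID."""
    response = ec2_client.create_snapshot(VolumeId=volume_id, Description=description)
    return response["SnapshotId"]


# Example usage (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   enable_bucket_versioning(boto3.client("s3"), "example-bucket")
#   snap_id = snapshot_volume(boto3.client("ec2"), "vol-0123456789abcdef0", "nightly")
```

Versioning protects individual objects continuously, while a snapshot captures the whole volume at one moment, so the two are typically scheduled and restored differently.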

14. Security

Both S3 and EBS support encryption of data at rest and in transit, so both offer a good level of security.

Use cases:

Amazon S3 use cases are:

- Data lake and big data analytics: Amazon S3 works with AWS Lake Formation to create data lakes, which hold raw data in its native format. Big data analytics can then be run on that data with machine learning tools, query-in-place services, etc. to extract useful insights.

- Backup and restoration: Amazon S3, when combined with other AWS offerings (EBS, EFS, etc.), can provide a secure and robust backup solution.

- Reliable disaster recovery: S3 can provide reliable data recovery after disasters such as power cuts, system failures, human error, etc.

- Other use cases include entertainment, media, content management purposes, etc.

Amazon EBS use cases are:

- Software Testing and development: Amazon EBS is connected only to a particular instance, so it is best suited for testing and development purposes.

- Business continuity: Amazon EBS provides a good level of business continuity, as users can run applications in different AWS regions; all they require is EBS snapshots and Amazon Machine Images.

- Enterprise-wide applications: EBS provides block-level storage, so it allows users to run a wide variety of applications including Microsoft Exchange, Oracle, etc.

- Transactional and NoSQL databases: Because EBS provides low latency, it offers an optimum level of performance for transactional and NoSQL databases. It also helps in database management.

Let us see the differences in a tabular form as follows:

| AWS S3 | AWS EBS |
|---|---|
| The AWS S3 full form is Amazon Simple Storage Service. | The AWS EBS full form is Amazon Elastic Block Store. |
| AWS S3 is an object storage service that helps the industry with scalability, data availability, security, etc. | It is easy to use. |
| AWS S3 is used to store and protect any amount of data for a range of use cases. | It offers high-performance block storage at every scale. |
| AWS S3 can be used to store data lakes, websites, mobile applications, backup and restore, big data analytics, enterprise applications, IoT devices, archives, etc. | It is scalable. |
| AWS S3 also provides management features. | It is also used to run relational or NoSQL databases. |