Cloud Storage Overview

Cloud storage is a cloud computing model that stores data on the Internet through a cloud computing provider that manages and operates data storage as a service. It’s delivered on-demand with just-in-time capacity and costs and eliminates buying and managing your own data storage infrastructure.


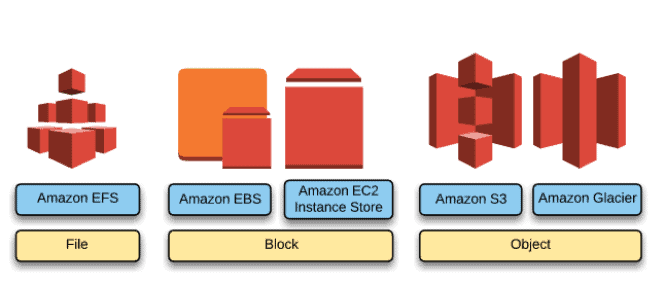

There Are 3 Types of Cloud Storage

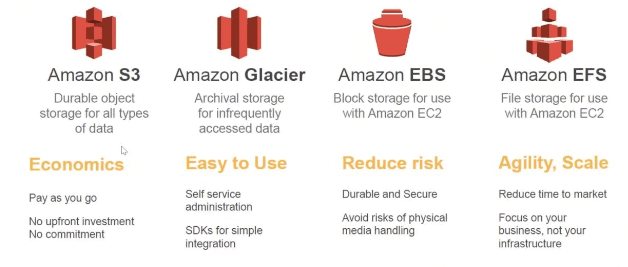

1. Object Storage – Applications developed in the cloud often take advantage of object storage’s vast scalability and metadata characteristics. Object storage solutions like Simple Storage Service (Amazon S3) and Amazon Glacier are ideal for building modern applications from scratch that need scale and flexibility, and can also be used to import existing data stores for analytics, backup, or archive.

2. File Storage – Many applications need to access shared files and require a file system. This type of storage is often supported with a Network Attached Storage (NAS) server. File storage solutions like Elastic File System (Amazon EFS) are ideal for use cases like large content repositories, development environments, media stores, or user home directories.

3. Block Storage – Other enterprise applications like databases or ERP systems often require dedicated, low-latency storage for each host. This is analogous to direct-attached storage (DAS) or a Storage Area Network (SAN). Block-based cloud storage solutions like Elastic Block Store (Amazon EBS) and EC2 Instance Storage fill this role.

Storage Offered By Amazon Web Services (AWS)


1. Simple Storage Service (Amazon S3)

Amazon S3, the oldest and most widely supported storage platform in AWS, uses an object storage model built to store and retrieve any amount of data. Data can be written and read from anywhere: websites, mobile apps, corporate applications, and IoT sensors or devices can all store their data in S3.

Usage

S3 is widely used for hosting web content, with support for high bandwidth and demand. Scripts can also be stored in S3, which makes it possible to host static websites that use JavaScript. S3 also supports migrating data to Amazon Glacier for cold storage by applying lifecycle management rules to the stored objects.
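A lifecycle rule of this kind can be sketched as a plain configuration document. The example below is a minimal sketch, not an official recipe: the helper name `glacier_lifecycle_rule`, the `logs/` prefix, and the 90-day threshold are all illustrative assumptions. The dictionary follows the shape that boto3's `put_bucket_lifecycle_configuration` accepts as its `LifecycleConfiguration` argument, but nothing here calls AWS.

```python
import json


def glacier_lifecycle_rule(prefix: str, days: int) -> dict:
    """Build a lifecycle configuration that moves objects under `prefix`
    to the GLACIER storage class `days` days after they are created.

    The function name and arguments are hypothetical helpers for this sketch.
    """
    if days < 0:
        raise ValueError("days must be non-negative")
    rule_name = prefix.strip("/") or "all"
    return {
        "Rules": [
            {
                "ID": f"archive-{rule_name}-after-{days}d",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
            }
        ]
    }


# Assumed example values: archive everything under logs/ after 90 days.
config = glacier_lifecycle_rule("logs/", 90)
print(json.dumps(config, indent=2))
```

The resulting dictionary would then be attached to a bucket through the console, the CLI, or an SDK call.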

Features

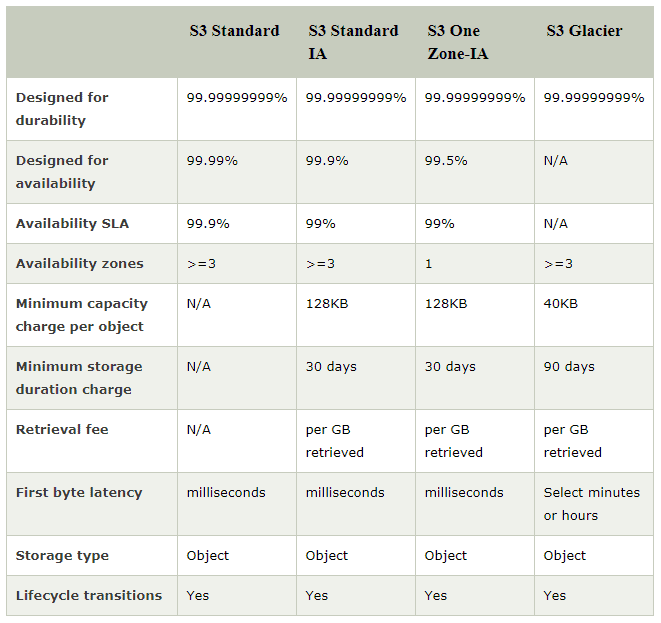

Amazon S3 runs on the world’s largest global cloud infrastructure and was built from the ground up to deliver a customer promise of 99.999999999% durability. Data is automatically distributed across a minimum of three physical facilities that are geographically separated within an AWS Region, and it can also be replicated to any other AWS Region when cross-region replication is configured.
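To get a feel for what eleven nines of durability means, the figure can be turned into a rough expected-loss estimate. This is a back-of-the-envelope sketch that assumes the durability number can be read as a per-object probability of surviving one year; the object count of 10 million is an arbitrary example.

```python
from fractions import Fraction

# 99.999999999% durability, kept as an exact fraction to avoid float rounding.
DURABILITY = Fraction("0.99999999999")


def expected_annual_loss(object_count: int, durability: Fraction = DURABILITY) -> Fraction:
    """Expected number of objects lost per year, assuming each object
    independently survives the year with probability `durability`."""
    return object_count * (1 - durability)


loss = expected_annual_loss(10_000_000)
years_per_lost_object = 1 / loss

print(f"Expected objects lost per year: {float(loss)}")
print(f"Roughly one object lost every {int(years_per_lost_object):,} years")
```

Under that assumption, storing 10 million objects corresponds to losing about one object every 10,000 years on average.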

Security

S3 supports both server-side encryption (with keys managed by S3, by AWS KMS, or supplied by the customer) and client-side encryption. Other users or AWS accounts can access data in S3 only after an administrator grants them access by writing an access policy. Multi-Factor Authentication (MFA) can add another layer of security for object operations such as deletes. S3 supports multiple security standards and compliance certifications.
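An access policy is a JSON document. The sketch below builds a bucket policy that lets another AWS account read objects; the bucket name `example-bucket`, the account ID `111122223333`, and the helper name are placeholders chosen for illustration, and a real policy would usually be narrower than account-wide access.

```python
import json


def read_only_bucket_policy(bucket: str, account_id: str) -> str:
    """Return a bucket policy (as JSON text) that grants `account_id`
    read-only access to every object in `bucket`."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCrossAccountRead",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


# Placeholder bucket and account values, for illustration only.
policy_json = read_only_bucket_policy("example-bucket", "111122223333")
print(policy_json)
```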

2. Amazon Glacier

Amazon Glacier is a secure, durable, and extremely low-cost storage service for data archiving and long-term storage. Glacier allows you to run analytics directly on archived data. It also works with other AWS services such as S3 and CloudFront to move data in and out seamlessly.

Usage

Amazon Glacier stores data in the form of archives. An archive can consist of a single file or of several files combined into a single archive, and archives are organized in vaults. Glacier also supports querying, so you can retrieve just the subset of data you need from within an archive.
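Combining several files into a single archive is typically done on the client before upload. The following is a minimal sketch using Python's standard `tarfile` module; the file names and contents are made up, and the upload step itself is left out.

```python
import io
import tarfile


def bundle_files(files: dict[str, bytes]) -> bytes:
    """Pack several in-memory files into one gzip-compressed tar archive."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buffer.getvalue()


def list_bundle(archive: bytes) -> list[str]:
    """Return the member names stored in an archive built by bundle_files."""
    with tarfile.open(fileobj=io.BytesIO(archive), mode="r:gz") as tar:
        return tar.getnames()


# Hypothetical files that would be stored together as one archive.
archive = bundle_files({
    "2023/report.csv": b"a,b\n1,2\n",
    "2023/notes.txt": b"q4 close",
})
print(list_bundle(archive))
```

Bundling small files this way keeps related data together in a vault, at the cost of having to retrieve the whole archive to read one member.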

Features

Since Amazon Glacier is an archiving service, durability is its top priority. Glacier is designed to provide average annual durability of 99.999999999% for archives. Data is automatically distributed across a minimum of three physical facilities that are geographically separated within an AWS Region.

Security

Initially, Glacier data can only be accessed by the account owner or admin; access for other people can be set up by defining access rules in the AWS Identity and Access Management (IAM) service. Glacier uses server-side encryption to encrypt all data. Lockable policies can be defined to lock vaults for long-term records retention.
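A lockable vault policy for records retention might look like the sketch below, which denies archive deletion until an archive reaches a minimum age. The vault ARN, the 365-day retention period, and the helper name are illustrative assumptions; the policy only builds the JSON and does not apply it to a vault.

```python
import json


def retention_lock_policy(vault_arn: str, min_age_days: int) -> str:
    """Return a vault policy (as JSON text) that denies deleting any
    archive younger than `min_age_days` days."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "deny-early-delete",
                "Principal": "*",
                "Effect": "Deny",
                "Action": "glacier:DeleteArchive",
                "Resource": [vault_arn],
                "Condition": {
                    "NumericLessThan": {
                        "glacier:ArchiveAgeInDays": str(min_age_days)
                    }
                },
            }
        ],
    }
    return json.dumps(policy, indent=2)


# Placeholder vault ARN and a one-year retention period.
lock_policy = retention_lock_policy(
    "arn:aws:glacier:us-west-2:123456789012:vaults/examplevault", 365
)
print(lock_policy)
```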

3. Elastic File System (Amazon EFS)

As the name suggests, EFS delivers a scalable, elastic, highly available, and highly durable network file system as a service. Its storage capacity is elastic, growing and shrinking automatically as files are added and removed. EFS supports Network File System versions 4 (NFSv4) and 4.1 (NFSv4.1).

Usage

EFS is a network file system that can expand to petabytes with parallel access from EC2 instances. An EFS file system is mounted on Amazon EC2 instances, and multiple EC2 instances can share a single EFS file system, which gives large applications that have grown beyond a single instance access to common data. EFS can also be mounted from an on-premises data center that is connected to an Amazon Virtual Private Cloud (VPC) through the AWS Direct Connect service.
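Mounting an EFS file system on an instance comes down to an ordinary NFS mount. The sketch below only assembles the command rather than running it; the file system ID `fs-12345678`, the region, and the mount point are placeholders, and the option string is the commonly recommended set for NFSv4.1 mounts, which you may want to adjust.

```python
import shlex

# Commonly recommended NFS client options for EFS (treat as a starting point).
NFS_OPTIONS = "nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport"


def efs_mount_command(file_system_id: str, region: str, mount_point: str) -> list[str]:
    """Build the argument list for mounting an EFS file system over NFSv4.1."""
    dns_name = f"{file_system_id}.efs.{region}.amazonaws.com"
    return [
        "sudo", "mount", "-t", "nfs4",
        "-o", NFS_OPTIONS,
        f"{dns_name}:/", mount_point,
    ]


# Placeholder identifiers, for illustration only.
cmd = efs_mount_command("fs-12345678", "us-east-1", "/mnt/efs")
print(shlex.join(cmd))
```

Running the same command on several instances is what lets them share one file system.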

Features

EFS stores each file system object (directory, file, or link) redundantly across multiple Availability Zones within a region. It is designed for the same 99.999999999% durability as S3, and file systems can be created and managed through API calls.

Security

There are three main levels of access controls to consider when it comes to the EFS file system.

- IAM permissions for API calls

- Security groups for EC2 instances and mount targets

- Network File System-level users, groups, and permissions.

AWS allows connectivity between EC2 instances and EFS file systems. You can associate one security group with an EC2 instance and another security group with an EFS mount target associated with the file system. These security groups act as firewalls and enforce rules that define the traffic flow between EC2 instances and EFS file systems.
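As a rough illustration of the security group pairing, the sketch below models an inbound rule on the mount target's security group that admits NFS traffic (TCP port 2049) only from the EC2 instances' security group. The rule dictionary mirrors the `IpPermissions` shape used by the EC2 API, the group ID is a placeholder, and the `allows` check is a simplified stand-in for what AWS enforces.

```python
NFS_PORT = 2049  # standard NFS port used by EFS mount targets


def nfs_ingress_rule(source_group_id: str) -> dict:
    """Inbound rule for the mount target's security group: allow NFS
    traffic that originates from members of `source_group_id`."""
    return {
        "IpProtocol": "tcp",
        "FromPort": NFS_PORT,
        "ToPort": NFS_PORT,
        "UserIdGroupPairs": [{"GroupId": source_group_id}],
    }


def allows(rule: dict, protocol: str, port: int, source_group_id: str) -> bool:
    """Simplified check of whether `rule` admits the given traffic."""
    return (
        rule["IpProtocol"] == protocol
        and rule["FromPort"] <= port <= rule["ToPort"]
        and any(pair["GroupId"] == source_group_id for pair in rule["UserIdGroupPairs"])
    )


# Placeholder ID for the security group attached to the EC2 instances.
rule = nfs_ingress_rule("sg-0aaa1111bbbb2222c")
print(allows(rule, "tcp", 2049, "sg-0aaa1111bbbb2222c"))
print(allows(rule, "tcp", 22, "sg-0aaa1111bbbb2222c"))
```

Referencing a security group instead of an IP range means new instances gain access simply by joining the group.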

4. Elastic Block Store (Amazon EBS)

Unlike EFS, EBS provides network-attached block volumes rather than a shared file system. Each volume is automatically replicated within its Availability Zone for high availability and durability.

Usage

EBS is durable block-level storage for use with EC2 instances in the AWS cloud. An EBS volume is attached to an EC2 instance much like a physical hard drive on-premises, then formatted with the desired file system and mounted. EBS lets you dynamically increase capacity, tune performance, and even change the volume type without any downtime or performance impact.
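A change to a live volume can be expressed as a small request document. The sketch below builds keyword arguments in the shape accepted by boto3's `modify_volume` call without contacting AWS; the helper name, the volume ID, and the sizes are hypothetical. It also encodes one constraint worth remembering: a volume can be grown in place but not shrunk.

```python
from typing import Optional


def modify_volume_request(
    volume_id: str,
    current_size_gib: int,
    new_size_gib: Optional[int] = None,
    volume_type: Optional[str] = None,
    iops: Optional[int] = None,
) -> dict:
    """Build the arguments for resizing or retyping an EBS volume."""
    request: dict = {"VolumeId": volume_id}
    if new_size_gib is not None:
        if new_size_gib < current_size_gib:
            raise ValueError("EBS volumes can be grown but not shrunk in place")
        request["Size"] = new_size_gib
    if volume_type is not None:
        request["VolumeType"] = volume_type
    if iops is not None:
        request["Iops"] = iops
    return request


# Placeholder volume: grow from 100 GiB to 200 GiB and switch to gp3.
req = modify_volume_request("vol-0123456789abcdef0", 100, new_size_gib=200, volume_type="gp3")
print(req)
```

After the volume itself grows, the file system on it still has to be extended from inside the instance.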

Features

EBS lets you save point-in-time snapshots of volumes to increase the durability of the stored data. Each volume can be configured as General Purpose (SSD), Provisioned IOPS (SSD), Throughput Optimized (HDD), or Cold (HDD) as needed. EBS volumes have a very low annual failure rate (AFR) of about 0.1 to 0.2 percent.
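The 0.1 to 0.2 percent failure rate is easier to reason about as an expected count. The short sketch below reads the figure as an annual rate per volume and applies it to a fleet; the fleet size of 1,000 volumes is an arbitrary example.

```python
from fractions import Fraction

# Failure rate bounds from the text, kept exact: 0.1% and 0.2% per year.
AFR_LOW = Fraction(1, 1000)
AFR_HIGH = Fraction(2, 1000)


def expected_failures(volume_count: int) -> tuple[Fraction, Fraction]:
    """Expected number of volume failures per year (low, high) for a fleet."""
    return volume_count * AFR_LOW, volume_count * AFR_HIGH


low, high = expected_failures(1000)
print(f"Expect roughly {float(low):g} to {float(high):g} failed volumes per year")
```

For a fleet of that size, one or two volume failures a year is the expectation, which is why regular snapshots remain worthwhile.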

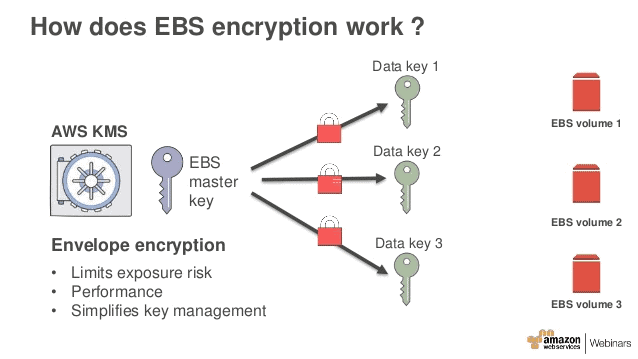

Security

An IAM policy must be defined to allow access to EBS volumes. Coupled with encryption for data at rest and data in motion, this offers a strong defense-in-depth security strategy for your data.

5. EC2 Instance Storage

EC2 Instance storage provides temporary block-level storage for EC2 instances.

Usage

Instance storage volumes are ideal for the temporary storage of data that changes frequently, such as buffers, queues, caches, and scratch data. A volume can only be used by one EC2 instance, meaning it can’t be detached and attached to a different instance.

Features

Instance store volumes use SSDs to deliver high random I/O performance and are not intended to be used as durable disk storage. Data durability is provided through replication or by periodically copying data to durable storage. Data on an instance store volume persists only during the lifetime of the EC2 instance it is attached to.

Security

An IAM policy must be defined to give users secure control over operations like launching and terminating EC2 instances. When you stop or terminate an instance, its applications and data are erased, making the data inaccessible to any other instance in the future.

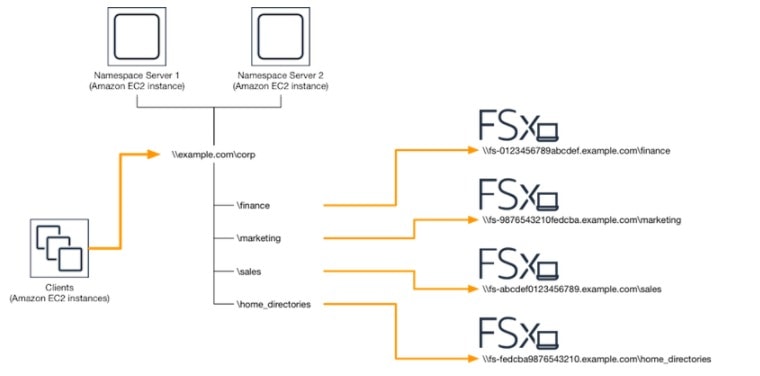

6. Amazon FSx

Amazon FSx is a fully managed service for third-party file systems. It uses SSD storage to provide fast performance with low latency.

It provides two file systems to choose from:

- Amazon FSx for Windows File Server

- Amazon FSx for Lustre

Usage

With the use of Amazon FSx, you can utilize the rich feature sets and fast performance of widely-used open source and commercially licensed file systems, while avoiding time-consuming administrative tasks like hardware provisioning, software configuration, patching, and backups. FSx provides cost-efficient capacity with high levels of reliability and integrates with a broad portfolio of AWS services to enable faster innovation.

Features

Amazon FSx provides a wide range of Solid-State Disk (SSD) and Hard Disk Drive (HDD) storage options, enabling you to optimize storage price and performance for your workload requirements. It delivers sustained high read and write speeds and consistent low-latency data access.

Security

It automatically encrypts your data at rest using AWS KMS and in transit using SMB Kerberos session keys. It is designed to meet the highest security standards, has been assessed to comply with ISO, PCI-DSS, and SOC standards, and is HIPAA eligible.

Benefits Of AWS Storage

- No upfront cost: it is a pay-as-you-go model.

- Worldwide access: you can access all your data from anywhere with just an internet connection.

- Storage can be increased or decreased as data size changes.

- Low-cost data storage with high durability and high availability.

- Plenty of choices for backing up and archiving data for disaster recovery.

Over the years, Amazon Web Services (AWS) storage has diversified greatly to cater to varying needs. As data volumes keep growing rapidly, data storage technologies have transformed and are still evolving day by day.