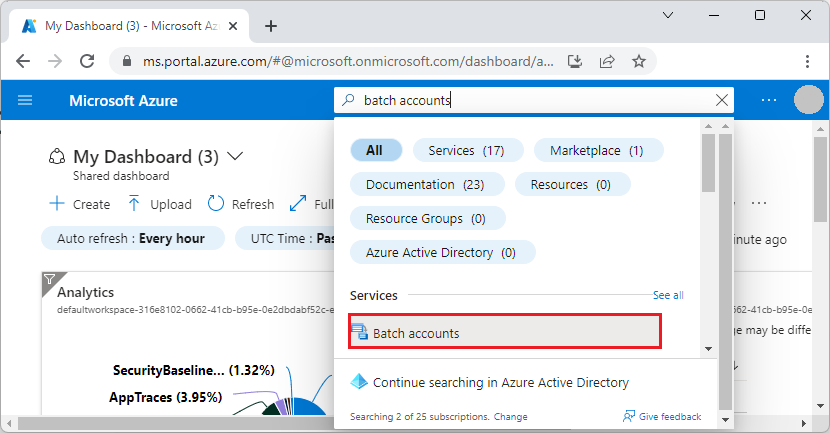

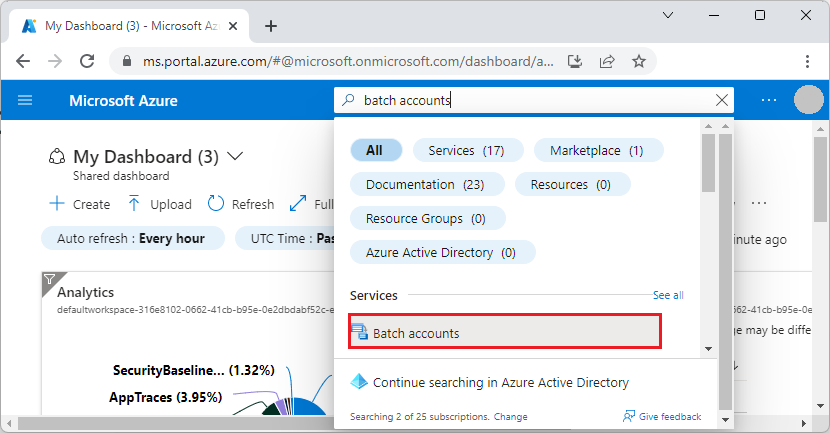

Create a Batch account

Follow these steps to create a sample Batch account for test purposes. You need a Batch account to create pools and jobs. You can also link an Azure storage account to the Batch account. A storage account isn't required for this quickstart, but in most real-world workloads it is useful for deploying applications and for storing input and output data.

In the Azure portal, select Create a resource.

Type "batch service" in the search box, then select Batch Service.

Select Create.

In the Resource group field, select Create new and enter a name for your resource group.

Enter a value for Account name. The name must be unique within the selected Azure location. It can contain only lowercase letters and numbers, and it must be 3 to 24 characters long.

Optionally, under Storage account, you can specify a storage account. Click Select a storage account, then select an existing storage account or create a new one.

Leave the other settings as is. Select Review + create, then select Create to create the Batch account.

When the Deployment succeeded message appears, go to the Batch account that you created.
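
The account name rule in the steps above (lowercase letters and numbers only, 3 to 24 characters) can be checked locally before you open the portal. The following is a minimal sketch; the function name `is_valid_account_name` is hypothetical, and it checks only the format, not whether the name is unique within the Azure location.

```python
import re

# Format rule from the steps above: lowercase letters and digits only,
# 3 to 24 characters in total.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_account_name(name: str) -> bool:
    """Return True if the name satisfies the format rule.

    Uniqueness within the Azure location is not checked here.
    """
    return _ACCOUNT_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ("mybatchaccount", "My-Batch", "ab"):
        print(candidate, is_valid_account_name(candidate))
```

A check like this catches only formatting mistakes; the portal remains the authority on whether a name is available.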

Create a pool of compute nodes

Now that you have a Batch account, create a sample pool of Windows compute nodes for test purposes. The pool in this quickstart consists of two nodes running a Windows Server 2019 image from the Azure Marketplace.

In the Batch account, select Pools > Add.

Enter a Pool ID called mypool.

In Operating System, use the following settings (you can explore other options).

| Setting | Value |
|---|---|
| Image Type | Marketplace |
| Publisher | microsoftwindowsserver |
| Offer | windowsserver |
| Sku | 2019-datacenter-core-smalldisk |

Scroll down to enter Node Size and Scale settings. The suggested node size offers a good balance of performance versus cost for this quick example.

| Setting | Value |
|---|---|
| Node pricing tier | Standard_A1_v2 |
| Target dedicated nodes | 2 |

Keep the defaults for remaining settings, and select OK to create the pool.
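
To keep the pool settings from the steps above in one reviewable place, you could collect them in a small data structure. The sketch below builds a dictionary whose field names are assumed to follow the request body used by the Batch REST API when adding a pool; the `version` and `nodeAgentSKUId` values are assumptions that do not come from this quickstart, and `build_pool_spec` is a hypothetical helper. It only prints JSON and does not call Azure.

```python
import json


def build_pool_spec(pool_id: str = "mypool", node_count: int = 2) -> dict:
    """Collect the portal settings from this quickstart into one dictionary."""
    return {
        "id": pool_id,
        "vmSize": "Standard_A1_v2",            # Node pricing tier
        "targetDedicatedNodes": node_count,    # Target dedicated nodes
        "virtualMachineConfiguration": {
            "imageReference": {
                "publisher": "microsoftwindowsserver",
                "offer": "windowsserver",
                "sku": "2019-datacenter-core-smalldisk",
                "version": "latest",           # assumption: not set in the portal steps
            },
            # Assumption: node agent identifier for Windows images.
            "nodeAgentSKUId": "batch.node.windows amd64",
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pool_spec(), indent=2))
```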

Batch creates the pool immediately, but it takes a few minutes to allocate and start the compute nodes. During this time, the pool's Allocation state is Resizing. You can go ahead and create a job and tasks while the pool is resizing.

After a few minutes, the allocation state changes to Steady, and the nodes start. To check the state of the nodes, select the pool and then select Nodes. When a node's state is Idle, it is ready to run tasks.
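
If you script this wait instead of refreshing the portal, the usual pattern is a polling loop with a timeout. The sketch below is generic: `get_state` is a hypothetical callable that you would back with whatever client you use to read the pool's allocation state or a node's state. Here it is fed a canned sequence of states so the example runs without an Azure connection.

```python
import time
from typing import Callable


def wait_for_state(
    get_state: Callable[[], str],
    target: str,
    timeout_s: float = 600.0,
    interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll get_state() until it returns target (case-insensitive).

    Returns True when the target state is seen, False once the timeout has passed.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if get_state().lower() == target.lower():
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval_s)


if __name__ == "__main__":
    # Canned states standing in for a real query of the pool's allocation state.
    states = iter(["resizing", "resizing", "steady"])
    print(wait_for_state(lambda: next(states), "steady", interval_s=0.0))
```

The same helper could wait for a node to report Idle by passing a different `get_state` callable and target.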

Create a job

Now that you have a pool, create a job to run on it. A Batch job is a logical group of one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. The job won't have tasks until you create them.

In the Batch account view, select Jobs > Add.

Enter a Job ID called myjob.

In Pool, select mypool.

Keep the defaults for the remaining settings, and select OK.
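
The job created in the steps above carries very little information: an ID, the pool to run on, and defaults for everything else. As a rough sketch, the same settings can be written as a dictionary. The field names are assumed to follow the Batch REST API request body for adding a job, the `priority` value of 0 is an assumed default, and `build_job_spec` is a hypothetical helper that does not call Azure.

```python
import json


def build_job_spec(job_id: str = "myjob", pool_id: str = "mypool", priority: int = 0) -> dict:
    """Describe a job: its ID, the pool its tasks run on, and a priority."""
    return {
        "id": job_id,
        "priority": priority,              # assumption: 0 as the default priority
        "poolInfo": {"poolId": pool_id},   # the pool selected in the Pool field
    }


if __name__ == "__main__":
    print(json.dumps(build_job_spec(), indent=2))
```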

Create tasks

Now, select the job to open the Tasks page. This is where you'll create sample tasks to run in the job. Typically, you create multiple tasks that Batch queues and distributes to run on the compute nodes. In this example, you create two identical tasks. Each task runs a command line to display the Batch environment variables on a compute node, and then waits 90 seconds.

When you use Batch, the command line is where you specify your app or script. Batch provides several ways to deploy apps and scripts to compute nodes.

To create the first task:

Select Add.

Enter a Task ID called mytask.

In Command line, enter cmd /c "set AZ_BATCH & timeout /t 90 > NUL". Keep the defaults for the remaining settings, and select Submit.

Repeat the steps above to create a second task. Enter a different Task ID such as mytask2, but use the same command line.

After you create a task, Batch queues it to run on the pool. When a node is available to run it, the task runs. In our example, if the first task is still running on one node, Batch will start the second task on the other node in the pool.
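
Because the two tasks differ only in their IDs, they are easy to generate from a list. The sketch below builds one dictionary per task with the command line from this quickstart; the field names `id` and `commandLine` are assumed to follow the Batch REST API request body for adding a task, and `build_task_specs` is a hypothetical helper that does not submit anything.

```python
import json
from typing import Iterable, List

# Command line from this quickstart: print the AZ_BATCH variables, then wait 90 seconds.
COMMAND_LINE = 'cmd /c "set AZ_BATCH & timeout /t 90 > NUL"'


def build_task_specs(task_ids: Iterable[str]) -> List[dict]:
    """Return one task description per ID, all sharing the same command line."""
    return [{"id": task_id, "commandLine": COMMAND_LINE} for task_id in task_ids]


if __name__ == "__main__":
    print(json.dumps(build_task_specs(["mytask", "mytask2"]), indent=2))
```

Serializing with `json.dumps` takes care of escaping the inner double quotes in the command line, which is easy to get wrong by hand.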

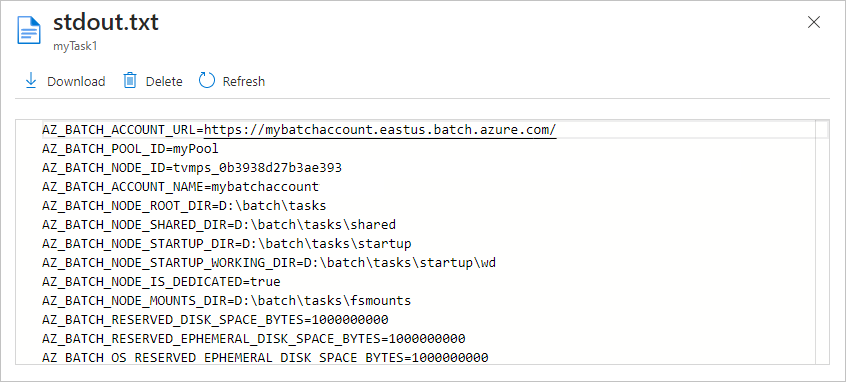

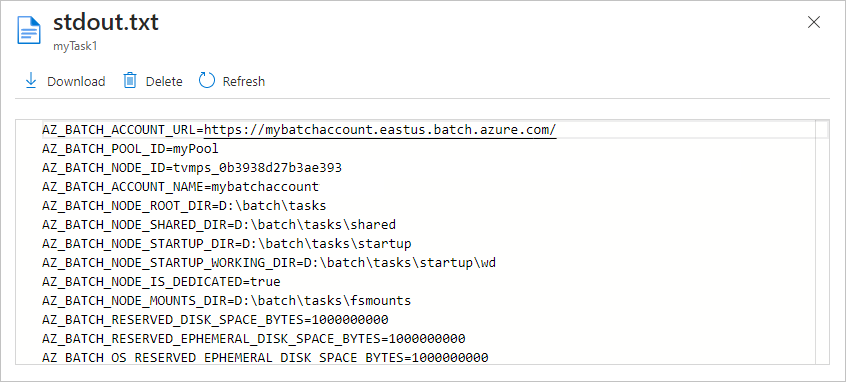

View task output

The example tasks you created will complete in a couple of minutes. To view the output of a completed task, select the task, then select the file stdout.txt to view the standard output of the task.

The contents show the Azure Batch environment variables that are set on the node. When you create your own Batch jobs and tasks, you can reference these environment variables in task command lines, and in the apps and scripts run by the command lines.
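
A script launched by a task command line can read these variables the same way it reads any other environment variable. The following is a minimal sketch that mirrors what `set AZ_BATCH` prints by filtering on the `AZ_BATCH` prefix. The helper name `batch_environment` is hypothetical, and the sample variable and value in the demo are illustrative rather than real output.

```python
import os
from typing import Dict, Mapping, Optional


def batch_environment(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return the variables whose names start with AZ_BATCH, sorted by name.

    Reads os.environ unless another mapping is passed in (useful for testing).
    """
    source = os.environ if env is None else env
    return {
        name: value
        for name, value in sorted(source.items())
        if name.upper().startswith("AZ_BATCH")
    }


if __name__ == "__main__":
    # Illustrative mapping; on a compute node you would call batch_environment().
    sample = {"AZ_BATCH_TASK_ID": "mytask", "PATH": "C:\\Windows"}
    for name, value in batch_environment(sample).items():
        print(f"{name}={value}")
```

Outside a Batch compute node, calling `batch_environment()` with no argument will normally return an empty dictionary, because the variables are set only for tasks running on a node.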

Clean up resources

If you want to continue with Batch tutorials and samples, you can keep using the Batch account and linked storage account created in this quickstart. There is no charge for the Batch account itself.

You are charged for the pool while the nodes are running, even if no jobs are scheduled. When you no longer need the pool, delete it. In the account view, select Pools and the name of the pool. Then select Delete. After you delete the pool, all task output on the nodes is deleted.

When you no longer need them, delete the resource group, Batch account, and all related resources. To do so, select the resource group for the Batch account and select Delete resource group.