- A service that uses the AWS global network to improve the availability and performance of your applications for your local and global users.

- It provides static IP addresses that act as a fixed entry point to your application endpoints in one or more AWS Regions, such as your Application Load Balancers, Network Load Balancers, or Amazon EC2 instances.

- AWS Global Accelerator continually monitors the health of your application endpoints. When it detects an unhealthy endpoint, it redirects traffic to healthy endpoints in less than 1 minute.

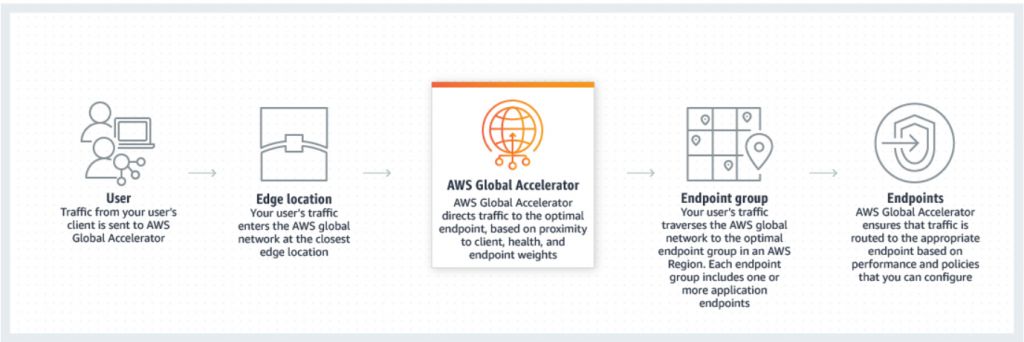

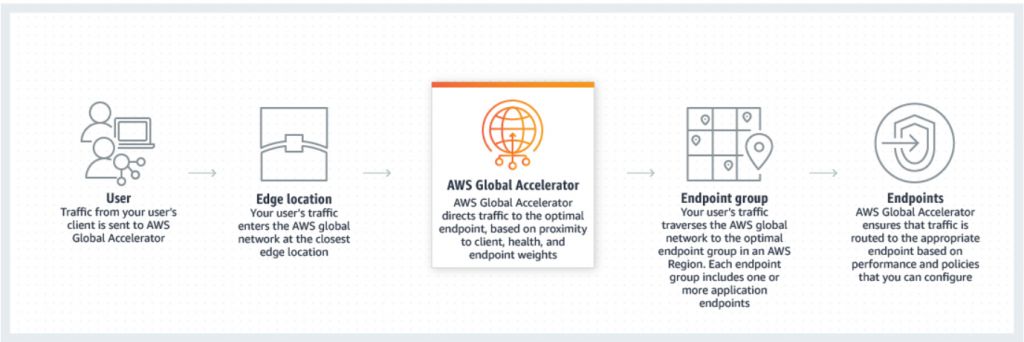

- How It Works

- Concepts

- An accelerator is the resource you create to direct traffic to optimal endpoints over the AWS global network.

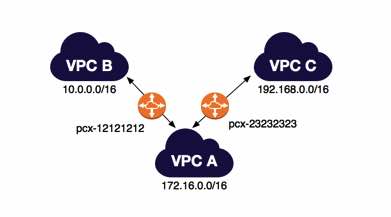

- Network zones are isolated units with their own set of physical infrastructure and service IP addresses from a unique IP subnet.

- AWS Global Accelerator provides you with a set of two static IP addresses that are anycast from the AWS edge network. It also assigns a default Domain Name System (DNS) name to your accelerator, similar to a1234567890abcdef.awsglobalaccelerator.com, that points to the static IP addresses.

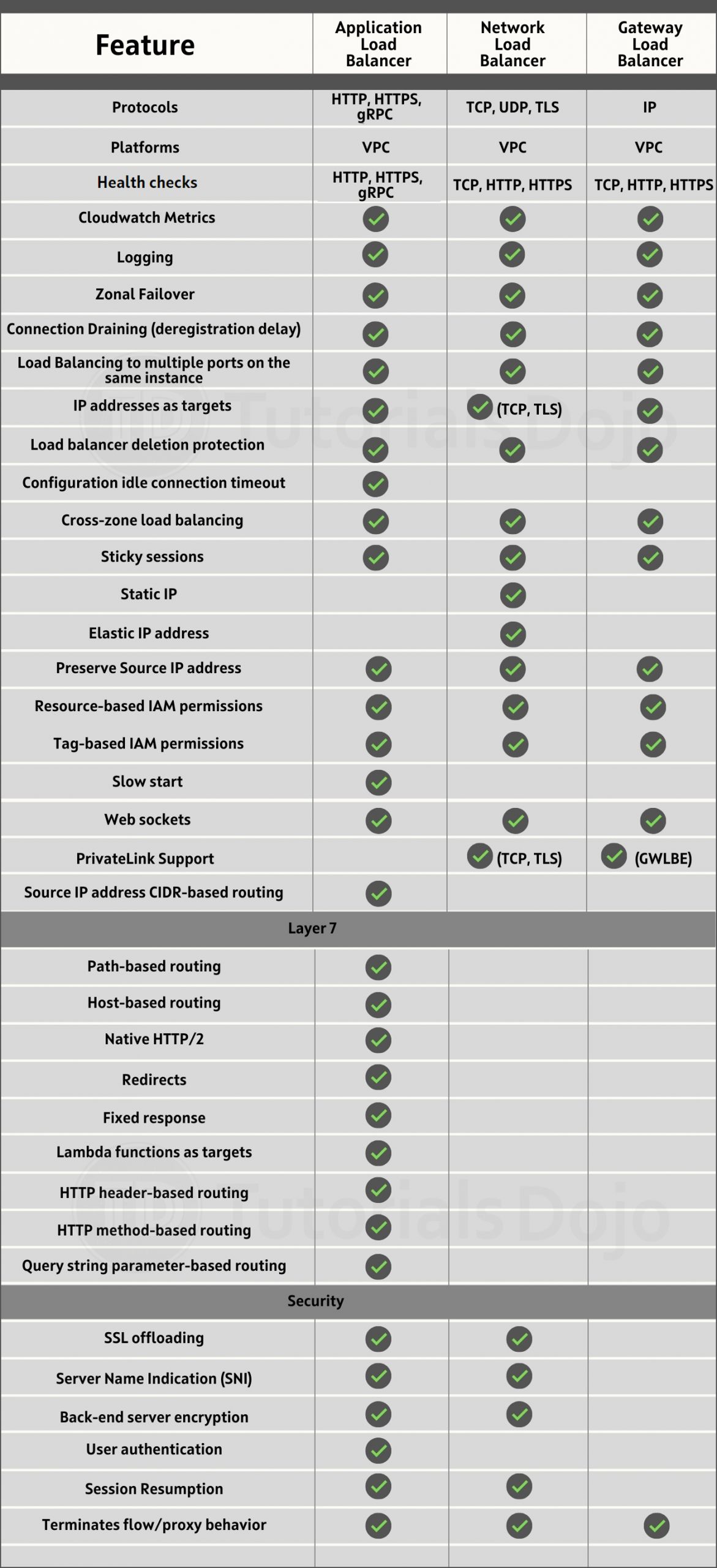

- A listener processes inbound connections from clients to Global Accelerator, based on the port (or port range) and protocol that you configure. Global Accelerator supports both TCP and UDP protocols.

- Each endpoint group is associated with a specific AWS Region. Endpoint groups include one or more endpoints in the Region.

- Endpoints can be Network Load Balancers, Application Load Balancers, EC2 instances, or Elastic IP addresses.
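The concepts above nest inside each other: an accelerator owns listeners, a listener owns endpoint groups, and an endpoint group owns endpoints. The following is a minimal sketch of that hierarchy using plain Python dataclasses. It is not the AWS API; every class and field name is an illustrative assumption, and the IP addresses are documentation-range placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Endpoint:
    # An NLB, ALB, EC2 instance, or Elastic IP address (identifier is illustrative).
    endpoint_id: str


@dataclass
class EndpointGroup:
    # Each endpoint group is associated with exactly one AWS Region.
    region: str
    endpoints: List[Endpoint] = field(default_factory=list)


@dataclass
class Listener:
    # A listener matches inbound connections by protocol and port range.
    protocol: str  # "TCP" or "UDP"
    from_port: int
    to_port: int
    endpoint_groups: List[EndpointGroup] = field(default_factory=list)

    def accepts(self, protocol: str, port: int) -> bool:
        return protocol == self.protocol and self.from_port <= port <= self.to_port


@dataclass
class Accelerator:
    name: str
    static_ips: List[str]  # the two anycast static IP addresses
    dns_name: str
    listeners: List[Listener] = field(default_factory=list)

    def find_listener(self, protocol: str, port: int) -> Optional[Listener]:
        # Return the first listener configured for this protocol and port, if any.
        for listener in self.listeners:
            if listener.accepts(protocol, port):
                return listener
        return None


# Hypothetical example values, chosen only for illustration.
accelerator = Accelerator(
    name="demo",
    static_ips=["192.0.2.10", "198.51.100.10"],
    dns_name="a1234567890abcdef.awsglobalaccelerator.com",
    listeners=[
        Listener(
            protocol="TCP",
            from_port=443,
            to_port=443,
            endpoint_groups=[
                EndpointGroup(region="us-east-1", endpoints=[Endpoint("alb-demo")]),
            ],
        )
    ],
)
```

In this model, `accelerator.find_listener("TCP", 443)` returns the configured listener, while a UDP connection on the same port matches nothing.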

- Benefits

- Instant regional failover – AWS Global Accelerator automatically checks the health of your applications and routes user traffic only to healthy application endpoints. If the health status changes or you make configuration updates, it reroutes your users to the next available endpoint, with failover completing in less than 1 minute. It monitors the health of your application endpoints by using TCP, HTTP, and HTTPS health checks.

- High availability – AWS Global Accelerator has a fault-isolating design that increases the availability of your application. When you create an accelerator, you are allocated two static IPv4 addresses that are serviced by independent network zones.

- No variability around clients that cache IP addresses – You do not have to rely on the IP address caching settings of client devices. A configuration update propagates in a matter of seconds.

- Improved performance – AWS Global Accelerator ingresses traffic from the edge location that is closest to your end clients through anycast static IP addresses. Then traffic traverses the AWS global network, which optimizes the path to your application that is running in an AWS Region. AWS Global Accelerator chooses the optimal AWS Region based on the geography of end clients, which reduces first-byte latency and improves performance.

- Easy manageability – The static IP addresses provided by AWS Global Accelerator are fixed and provide a single entry point to your applications. This lets you easily move your endpoints between Availability Zones or between AWS Regions, without having to update your DNS configuration or client-facing applications.

- Fine-grained control – AWS Global Accelerator lets you set a traffic dial for your regional endpoint groups, to dial traffic up or down for a specific AWS Region when you conduct performance testing or application updates. By default, the traffic dial is set to 100% for all regional endpoint groups. In addition, if you have stateful applications, you can choose to direct all requests from a user to the same endpoint, regardless of the source port and protocol, to maintain client affinity.

- Global Accelerator also supports bring your own IP (BYOIP), so you can use your own IP address ranges on the AWS edge network.
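The traffic dial described above can be pictured as a percentage applied to the traffic that would otherwise reach an endpoint group, with the remainder spilling over to the next group. The sketch below is a simplified model under that assumption, not the service's actual routing logic. The function name, the proximity ordering, and the `"unassigned"` bucket are all hypothetical.

```python
from typing import Dict, List, Tuple


def split_by_traffic_dial(
    groups_by_proximity: List[Tuple[str, float]],
    requests: float = 100.0,
) -> Dict[str, float]:
    """Distribute requests across endpoint groups, nearest group first.

    Each group takes its dial percentage of whatever traffic reaches it;
    the rest moves on to the next group in the list.
    """
    shares: Dict[str, float] = {}
    remaining = requests
    for region, dial_percent in groups_by_proximity:
        if not 0.0 <= dial_percent <= 100.0:
            raise ValueError(f"dial for {region} must be between 0 and 100")
        taken = remaining * dial_percent / 100.0
        shares[region] = taken
        remaining -= taken
    # Traffic left over after the last group; how the real service handles
    # this edge case is outside the scope of this sketch.
    shares["unassigned"] = remaining
    return shares


# Dial the nearest Region down to 50% during a deployment (illustrative values).
during_deploy = split_by_traffic_dial([("us-east-1", 50.0), ("eu-west-1", 100.0)])

# Default configuration: every dial at 100%, so the nearest Region takes everything.
default_split = split_by_traffic_dial([("us-east-1", 100.0), ("eu-west-1", 100.0)])
```

With the nearest group dialed to 50%, half of the requests stay in `us-east-1` and the other half move to `eu-west-1`. With both dials at the default of 100%, nothing spills over.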

- Steps in deploying an accelerator

- Create an accelerator

- Configure endpoint groups

- Register endpoints for endpoint groups

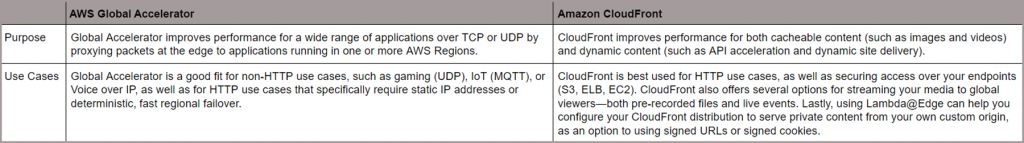

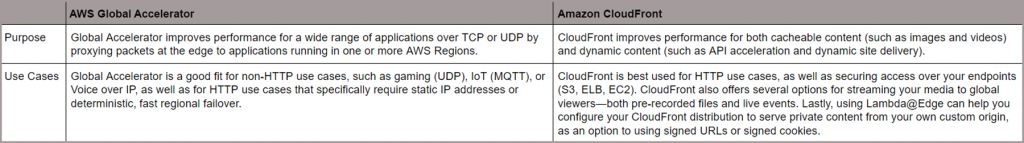
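The deployment steps above could be scripted roughly as follows with the AWS SDK for Python. This is a sketch under stated assumptions, not a production deployment script. The function name `deploy_accelerator`, the TCP 443 listener, and the endpoint weight of 128 are illustrative choices. The API also requires a listener between the accelerator and its endpoint groups, so the sketch creates one. The client is passed in as a parameter so the function can be exercised without AWS credentials.

```python
import uuid
from typing import Any, Dict, List


def deploy_accelerator(
    client: Any, name: str, region: str, endpoint_ids: List[str]
) -> Dict[str, str]:
    """Create an accelerator, a listener, and one endpoint group with endpoints."""
    # Step 1: create the accelerator.
    accelerator = client.create_accelerator(
        Name=name,
        IpAddressType="IPV4",
        Enabled=True,
        IdempotencyToken=str(uuid.uuid4()),
    )["Accelerator"]

    # A listener is needed before endpoint groups can be attached (port is an assumption).
    listener = client.create_listener(
        AcceleratorArn=accelerator["AcceleratorArn"],
        PortRanges=[{"FromPort": 443, "ToPort": 443}],
        Protocol="TCP",
        IdempotencyToken=str(uuid.uuid4()),
    )["Listener"]

    # Steps 2 and 3: configure the endpoint group and register its endpoints.
    group = client.create_endpoint_group(
        ListenerArn=listener["ListenerArn"],
        EndpointGroupRegion=region,
        EndpointConfigurations=[
            {"EndpointId": endpoint_id, "Weight": 128} for endpoint_id in endpoint_ids
        ],
        TrafficDialPercentage=100.0,
        IdempotencyToken=str(uuid.uuid4()),
    )["EndpointGroup"]

    return {
        "accelerator_arn": accelerator["AcceleratorArn"],
        "dns_name": accelerator.get("DnsName", ""),
        "listener_arn": listener["ListenerArn"],
        "endpoint_group_arn": group["EndpointGroupArn"],
    }
```

To run this against a real account, you would pass `boto3.client("globalaccelerator", region_name="us-west-2")` as `client` and supply the ARN of a load balancer, an EC2 instance ID, or an Elastic IP allocation ID in `endpoint_ids`. The Global Accelerator API is served from the US West (Oregon) Region regardless of where the endpoints live.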

- Global Accelerator vs CloudFront

- Both services integrate with AWS Shield for DDoS protection.

- Security

- SOC, PCI, HIPAA, GDPR, and ISO compliant.

- AWS Global Accelerator Flow Logs provide detailed records about traffic that flows through an accelerator to an endpoint.

- As a managed service, AWS Global Accelerator is protected by the AWS global network security procedures.

- Pricing

- You are charged for each accelerator that is provisioned and the amount of traffic in the dominant direction that flows through the accelerator.

- For every accelerator that is provisioned (whether enabled or disabled), you are charged a fixed hourly fee plus a per-GB incremental charge on top of your standard Data Transfer rates, called the Data Transfer-Premium (DT-Premium) fee.
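The two pricing components above can be combined into a rough estimate. The sketch below assumes the DT-Premium fee applies only to the larger of the inbound and outbound volumes (the dominant direction). The function name and both rates are hypothetical placeholders, not published prices, and standard Data Transfer charges are not included.

```python
from decimal import Decimal


def estimate_accelerator_charge(
    hours_provisioned: Decimal,
    gb_inbound: Decimal,
    gb_outbound: Decimal,
    hourly_fee: Decimal,
    dt_premium_per_gb: Decimal,
) -> Decimal:
    """Estimate Global Accelerator charges for one accelerator.

    The fixed fee accrues for every provisioned hour, enabled or not.
    The DT-Premium fee is billed on the dominant traffic direction only.
    """
    fixed_fee = hours_provisioned * hourly_fee
    dominant_gb = max(gb_inbound, gb_outbound)
    premium_fee = dominant_gb * dt_premium_per_gb
    return fixed_fee + premium_fee


# Hypothetical rates and volumes, for illustration only.
estimate = estimate_accelerator_charge(
    hours_provisioned=Decimal("720"),
    gb_inbound=Decimal("200"),
    gb_outbound=Decimal("1000"),
    hourly_fee=Decimal("0.025"),
    dt_premium_per_gb=Decimal("0.015"),
)
```

With these placeholder numbers, the outbound direction dominates, so the estimate is 720 × 0.025 + 1000 × 0.015 = 33 in the billing currency. The 200 GB of inbound traffic does not add a premium charge.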