AWS Batch

- Enables you to run batch computing workloads on the AWS Cloud.

- It is a regional service that simplifies running batch jobs across multiple AZs within a region.

Features

- Batch manages compute environments and job queues, allowing you to easily run thousands of jobs of any scale using EC2 and EC2 Spot.

- Batch chooses where to run the jobs, launching additional AWS capacity if needed.

- Batch monitors the progress of your jobs and removes capacity when it is no longer needed.

- Batch provides the ability to submit jobs that are part of a pipeline or workflow, enabling you to express any interdependencies that exist between them as you submit jobs.

Components

- Jobs

- Job Definitions

- Job Queues

- Compute Environment

Jobs

- A unit of work (such as a shell script, a Linux executable, or a Docker container image) that you submit to Batch.

- Jobs can reference other jobs by name or by ID, and can be dependent on the successful completion of other jobs.

- Job types

- Single – a single job.

- Array – an array job whose size (number of child jobs) is between 2 and 10,000.

- An array job shares common job parameters, such as the job definition, vCPUs, and memory. It runs as a collection of related, yet separate, basic jobs that may be distributed across multiple hosts and may run concurrently.

- Multi-node parallel jobs enable you to run single large-scale, tightly coupled, high performance computing applications and distributed GPU model training jobs that span multiple Amazon EC2 instances.

- Batch lets you specify up to five distinct node groups for each job. Each group can have its own container images, commands, environment variables, and so on.

- Each multi-node parallel job contains a main node, which is launched first. After the main node is up, the child nodes are launched and started. If the main node exits, the job is considered finished, and the child nodes are stopped.

- Multi-node parallel jobs are not supported on compute environments that use Spot Instances.

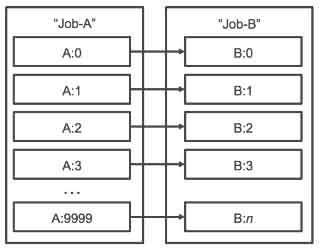

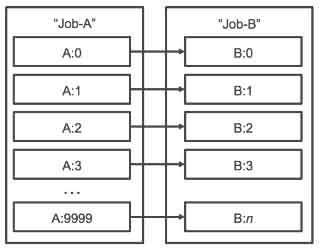

- Dependencies

- A job may have up to 20 dependencies.

- For "Job depends on", enter the IDs of any jobs that must finish before this job starts.

- (Array jobs only) For N_TO_N job dependencies, specify the IDs of one or more array jobs. Each child job of this job then depends on the child job with the same index in each of those array jobs.

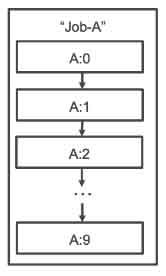

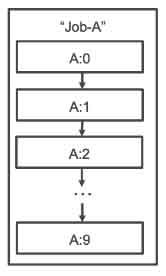

- (Array jobs only) Run children sequentially creates a SEQUENTIAL dependency for the current array job. This ensures that each child index job waits for its earlier sibling to finish.
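The dependency options above map onto the `dependsOn` field of the AWS Batch SubmitJob API. The sketch below builds a SubmitJob request body as a plain dict. It assumes the job, queue, and definition names are hypothetical. It also assumes that a SEQUENTIAL entry counts toward the 20-dependency limit, which the text does not state.

```python
# Sketch of an AWS Batch SubmitJob request body. The keys mirror the SubmitJob
# API fields; the job, queue, and definition names used below are hypothetical.
MAX_DEPENDENCIES = 20  # a job may have up to 20 dependencies


def build_submit_job_request(name, queue, definition, array_size=None,
                             depends_on=(), n_to_n=(), sequential=False):
    """Return a SubmitJob request dict with the requested dependencies."""
    request = {"jobName": name, "jobQueue": queue, "jobDefinition": definition}

    if array_size is not None:
        if not 2 <= array_size <= 10_000:
            raise ValueError("an array job must have between 2 and 10,000 children")
        request["arrayProperties"] = {"size": array_size}
    elif n_to_n or sequential:
        raise ValueError("N_TO_N and SEQUENTIAL dependencies apply to array jobs only")

    # Plain dependency: this job starts only after each listed job finishes.
    deps = [{"jobId": job_id} for job_id in depends_on]
    # N_TO_N: child i of this job waits for child i of each listed array job.
    deps += [{"jobId": job_id, "type": "N_TO_N"} for job_id in n_to_n]
    # SEQUENTIAL: no job ID; each child waits for the child with the previous index.
    if sequential:
        deps.append({"type": "SEQUENTIAL"})

    # Assumption: the SEQUENTIAL entry counts toward the 20-dependency limit.
    if len(deps) > MAX_DEPENDENCIES:
        raise ValueError(f"a job may have at most {MAX_DEPENDENCIES} dependencies")
    if deps:
        request["dependsOn"] = deps
    return request


request = build_submit_job_request(
    "render-frames", "video-queue", "render-def:3",
    array_size=100, n_to_n=["<preprocess-job-id>"],
)
```

With boto3, a dict like this could be passed as `boto3.client("batch").submit_job(**request)`.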

- States

- SUBMITTED – a job that has been submitted to the queue, and has not yet been evaluated by the scheduler.

- PENDING – a job that resides in the queue and is not yet able to run due to a dependency on another job or resource.

- RUNNABLE – a job that resides in the queue, has no outstanding dependencies, and is therefore ready to be scheduled to a host. Jobs in this state are started as soon as sufficient resources are available in one of the compute environments that are mapped to the job’s queue.

- STARTING – the job has been scheduled to a host and the relevant container initiation operations are underway.

- RUNNING – the job is running as a container job on an Amazon ECS container instance within a compute environment. When the job’s container exits, the process exit code determines whether the job succeeded or failed. An exit code of 0 indicates success, and any non-zero exit code indicates failure.

- SUCCEEDED – the job has successfully completed with an exit code of 0. The job state for SUCCEEDED jobs is persisted for 24 hours.

- FAILED – the job has failed all available attempts. The job state for FAILED jobs is persisted for 24 hours.

- You can apply a retry strategy to your jobs and job definitions that allows failed jobs to be automatically retried.

- You can configure a timeout duration for your jobs so that if a job runs longer than that, Batch terminates the job. If a job is terminated for exceeding the timeout duration, it is not retried. If it fails on its own, then it can retry if retries are enabled, and the timeout countdown is started over for the new attempt.
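The retry and timeout rules above decide whether a job ends in SUCCEEDED or FAILED. The toy replay below encodes only those rules:

- exit code 0 succeeds;
- a non-zero exit code is retried while attempts remain;
- an attempt terminated for exceeding the timeout is not retried.

It is a model for reasoning, not Batch's implementation. The `TIMEOUT` marker is a hypothetical name.

```python
# Toy replay of how a Batch job ends under a retry strategy and a timeout.
# Each outcome is an attempt's container exit code, or TIMEOUT (a hypothetical
# marker) when Batch terminated the attempt for exceeding the timeout.
TIMEOUT = "TIMEOUT"


def final_state(outcomes, attempts=1):
    """Return (final state, attempts used) for the given attempt outcomes."""
    used = 0
    for outcome in outcomes[:attempts]:
        used += 1
        if outcome == TIMEOUT:
            return "FAILED", used      # timed-out attempts are not retried
        if outcome == 0:
            return "SUCCEEDED", used   # exit code 0 means success
        # Any other exit code is a failure; try again if attempts remain.
        # The timeout countdown restarts for the next attempt.
    return "FAILED", used
```

For example, `final_state([1, 0], attempts=3)` succeeds on the second attempt, while `final_state([TIMEOUT, 0], attempts=3)` fails after one attempt because the timeout stops retries.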

Job Definitions

- Specifies how jobs are to be run.

- The definition can contain:

- an IAM role to provide programmatic access to other AWS resources.

- memory and CPU requirements for the job.

- controls for container properties, environment variables, and mount points for persistent storage.
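A job definition bundles the items listed above: role, CPU/memory, environment, and mounts. It can also carry the retry strategy and timeout from the Jobs section. The sketch shapes a RegisterJobDefinition request as a dict. The image, role ARN, names, and paths are hypothetical. The limits enforced (1 to 10 attempts, a timeout of at least 60 seconds) reflect our understanding of the Batch API rather than anything stated in these notes.

```python
# Sketch of an AWS Batch RegisterJobDefinition request for a container job.
# Image, role ARN, paths, and names are hypothetical placeholders.
def build_job_definition(name, image, job_role_arn, vcpus, memory_mib,
                         env=None, host_path=None, container_path=None,
                         attempts=1, timeout_seconds=None):
    container = {
        "image": image,
        "jobRoleArn": job_role_arn,  # IAM role that grants the job access to other AWS resources
        "resourceRequirements": [    # CPU and memory requirements
            {"type": "VCPU", "value": str(vcpus)},
            {"type": "MEMORY", "value": str(memory_mib)},  # MiB
        ],
        "environment": [{"name": k, "value": v} for k, v in sorted((env or {}).items())],
    }
    if host_path and container_path:
        # Persistent storage: a host path mounted into the container.
        container["volumes"] = [{"name": "data", "host": {"sourcePath": host_path}}]
        container["mountPoints"] = [
            {"sourceVolume": "data", "containerPath": container_path, "readOnly": False}
        ]

    # Assumed API limits: 1-10 attempts, timeouts of at least 60 seconds.
    if not 1 <= attempts <= 10:
        raise ValueError("retryStrategy attempts must be between 1 and 10")
    definition = {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": container,
        "retryStrategy": {"attempts": attempts},
    }
    if timeout_seconds is not None:
        if timeout_seconds < 60:
            raise ValueError("timeouts must be at least 60 seconds")
        definition["timeout"] = {"attemptDurationSeconds": timeout_seconds}
    return definition
```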

Job Queues

- This is where a Batch job resides until it is scheduled onto a compute environment.

- You can associate one or more compute environments with a job queue.

- You can set the order in which a job queue tries its compute environments, and assign a priority to each job queue.

- The Batch Scheduler evaluates when, where, and how to run jobs that have been submitted to a job queue. Jobs run in approximately the order in which they are submitted as long as all dependencies on other jobs have been met.
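In the CreateJobQueue API, a job queue with a higher `priority` value gets scheduling preference. Within a queue, compute environments are tried in ascending `order`. The toy function below only reproduces that ordering using those field names. Queue and environment names are hypothetical, and the real scheduler also weighs dependencies and available capacity.

```python
# Toy model of scheduling preference across job queues, using the field names of
# the CreateJobQueue API (priority, computeEnvironmentOrder). Names are hypothetical.
def scheduling_preference(queues):
    """Order queues by priority (highest first) and each queue's compute environments by order (lowest first)."""
    result = []
    for queue in sorted(queues, key=lambda q: q["priority"], reverse=True):
        envs = sorted(queue["computeEnvironmentOrder"], key=lambda ce: ce["order"])
        result.append((queue["jobQueueName"], [ce["computeEnvironment"] for ce in envs]))
    return result


queues = [
    {"jobQueueName": "low-priority", "priority": 1,
     "computeEnvironmentOrder": [{"order": 1, "computeEnvironment": "spot-ce"}]},
    {"jobQueueName": "high-priority", "priority": 10,
     "computeEnvironmentOrder": [{"order": 2, "computeEnvironment": "spot-ce"},
                                 {"order": 1, "computeEnvironment": "on-demand-ce"}]},
]
```

Note that `spot-ce` serves both queues, since one compute environment can be mapped to several job queues.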

Compute Environment

- A set of managed or unmanaged compute resources (such as EC2 instances) that are used to run jobs.

- Compute environments contain the Amazon ECS container instances that are used to run containerized batch jobs.

- A given compute environment can be mapped to one or many job queues.

- Managed Compute Environment

- Batch manages the capacity and instance types of the compute resources within the environment, based on the compute resource specification that you define when you create the compute environment.

- You can choose to use EC2 On-Demand Instances or Spot Instances in your managed compute environment.

- ECS container instances are launched into the VPC and subnets that you specify when you create the compute environment.

- Unmanaged Compute Environment

- You manage your own compute resources in this environment.

- After you create an unmanaged compute environment, use the DescribeComputeEnvironments API operation to view its details and find the ECS cluster associated with it. Then manually launch your container instances into that cluster.
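The managed/unmanaged split shows up directly in the CreateComputeEnvironment request: only a managed environment carries `computeResources`. The sketch builds both request shapes. It also reads `ecsClusterArn` from a DescribeComputeEnvironments-style response, which is the lookup needed for unmanaged environments. Subnets, security groups, the instance profile name, and the allocation strategies chosen here are illustrative assumptions.

```python
# Sketch of CreateComputeEnvironment request bodies plus a helper that reads the
# ECS cluster ARN out of a DescribeComputeEnvironments response.
# Subnet, security group, and instance profile values are hypothetical.
def managed_environment(name, subnets, security_groups, instance_role, max_vcpus, spot=False):
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",          # Batch manages capacity and instance types
        "state": "ENABLED",
        "computeResources": {
            "type": "SPOT" if spot else "EC2",   # Spot or On-Demand instances
            "allocationStrategy": "SPOT_CAPACITY_OPTIMIZED" if spot else "BEST_FIT_PROGRESSIVE",
            "minvCpus": 0,                       # lets the environment scale to zero when idle
            "maxvCpus": max_vcpus,
            "instanceTypes": ["optimal"],
            "subnets": list(subnets),            # VPC subnets for the container instances
            "securityGroupIds": list(security_groups),
            "instanceRole": instance_role,       # ECS instance profile
        },
    }


def unmanaged_environment(name):
    # No computeResources: you launch and manage the container instances yourself.
    return {"computeEnvironmentName": name, "type": "UNMANAGED", "state": "ENABLED"}


def ecs_cluster_arn(describe_response, name):
    """Find the ECS cluster behind a compute environment in a DescribeComputeEnvironments response."""
    for env in describe_response["computeEnvironments"]:
        if env["computeEnvironmentName"] == name:
            return env["ecsClusterArn"]
    raise KeyError(name)
```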

Security

- By default, IAM users don’t have permission to create or modify AWS Batch resources, or perform tasks using the AWS Batch API.

- Take advantage of IAM policies, roles, and permissions.

- You can control access to AWS Batch resources based on the tag values.

Monitoring

- You can use the AWS Batch event stream for CloudWatch Events to receive near real-time notifications regarding the current state of jobs that have been submitted to your job queues.

- Events from the AWS Batch event stream are guaranteed to be delivered at least once.
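Because delivery is at least once, a handler for these events should tolerate duplicates. The sketch shows an event pattern for failed Batch jobs, in the `source`/`detail-type`/`detail` form that CloudWatch Events (EventBridge) rules use. It pairs the pattern with a handler that ignores repeats. Two points are assumptions:

- Keying deduplication on `(jobId, status)` is our choice.
- An in-memory set is only for illustration; a real handler would need durable storage.

```python
import json

# Event pattern for AWS Batch job state-change events whose status is FAILED.
FAILED_JOBS_PATTERN = {
    "source": ["aws.batch"],
    "detail-type": ["Batch Job State Change"],
    "detail": {"status": ["FAILED"]},
}
EVENT_PATTERN_JSON = json.dumps(FAILED_JOBS_PATTERN)  # rules take the pattern as a JSON string


class DedupingHandler:
    """Process each (jobId, status) pair once, even if the event is redelivered."""

    def __init__(self):
        self._seen = set()   # illustration only; use durable storage in practice
        self.handled = []

    def __call__(self, event):
        detail = event["detail"]
        key = (detail["jobId"], detail["status"])
        if key in self._seen:
            return False     # duplicate delivery: ignore
        self._seen.add(key)
        self.handled.append(key)
        return True
```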

- CloudTrail captures all API calls for AWS Batch as events.

Pricing

- There is no additional charge for AWS Batch. You pay for resources you create to store and run your application.

Amazon Lightsail

- A cloud-based virtual private server (VPS) solution.

- Lightsail includes everything you need for your websites and web applications – a virtual machine (choose either Linux or Windows OS), SSD-based storage, data transfer, DNS management, and a static IP address.

Features

- Lightsail Instances and Volumes

- Lightsail offers virtual servers (instances) where you can launch your website, web application, or project. Manage your instances from the Lightsail console or API.

- You can choose from a variety of hardware configurations to suit your workload. See the Pricing section below for details.

- Lightsail uses SSD for its virtual servers. Each attached disk can be up to 16 TB, and you can attach up to 15 disks per Lightsail instance.

- Lightsail offers automatic and manual snapshots for your instances and SSD volumes.

- Lightsail Load Balancers

- Lightsail’s load balancers route web traffic across your instances so that your websites and applications can accommodate variations in traffic, be better protected from outages, and deliver a better experience to your visitors.

- During load balancer creation, you will be asked to specify a path for Lightsail to ping. If the target instance can be reached using this path, then Lightsail will route traffic there. If one of your target instances is unresponsive, Lightsail will not route traffic to that instance.

- Lightsail load balancers direct traffic to your healthy target instances based on a round robin algorithm.

- Lightsail supports session persistence for applications that require visitors to hit the same target instances for data consistency.
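The load balancer behavior above combines three ideas: round robin, skipping targets that fail the health-check path, and pinning a session to one target. The toy model below illustrates them together; it is not Lightsail's implementation. The `session_id` argument is a hypothetical stand-in for whatever mechanism Lightsail uses to recognize a returning visitor.

```python
class RoundRobinBalancer:
    """Toy model: rotate across healthy targets, optionally pinning sessions."""

    def __init__(self, targets):
        self.targets = list(targets)
        self.healthy = set(self.targets)
        self._next = 0
        self._sessions = {}  # hypothetical session ID -> pinned target

    def mark(self, target, healthy):
        # Result of pinging the health-check path on this target.
        if healthy:
            self.healthy.add(target)
        else:
            self.healthy.discard(target)

    def route(self, session_id=None):
        # Session persistence: reuse the pinned target while it stays healthy.
        if session_id is not None and self._sessions.get(session_id) in self.healthy:
            return self._sessions[session_id]
        # Round robin, skipping targets that failed the health check.
        for _ in range(len(self.targets)):
            target = self.targets[self._next % len(self.targets)]
            self._next += 1
            if target in self.healthy:
                if session_id is not None:
                    self._sessions[session_id] = target
                return target
        raise RuntimeError("no healthy targets")
```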

- Lightsail Certificates

- Lightsail load balancers include integrated certificate management, providing free SSL/TLS certificates that can be quickly provisioned and added to a load balancer. AWS handles your certificate renewals automatically.

- Lightsail certificates are domain validated, meaning that you need to provide proof of identity by validating that you own or have access to your website’s domain before the certificate can be provisioned by the certificate authority.

- You can add up to 10 domains or subdomains per certificate. Lightsail does not currently support wildcard domains.

- Managed Databases

- You can launch a fully configured MySQL or PostgreSQL managed database in minutes and leave the maintenance to Lightsail.

- Managed databases are available in Standard and High Availability plans. High Availability plans add redundancy and durability to your database by automatically creating a standby database in a separate AZ from your primary database, synchronously replicating data to the standby database, and failing over to the standby database in case of infrastructure failure and during maintenance.

- Lightsail automatically backs up your database and allows point-in-time restore from the past 7 days using the database restore tool.

- You can scale up your database by taking a snapshot of your database and creating a new, larger database plan from snapshot or by creating a new, larger database using the emergency restore feature. You can also switch from Standard to High Availability plans and vice versa using either method. You cannot scale down your database.

- Lightsail also automatically encrypts your data at rest and in motion for increased security and stores your database password for easy and secure connections to your database.

- You can easily migrate your projects to Amazon EC2 by taking snapshots of your virtual servers and SSD volumes, and launching them in the EC2 console.

- Lightsail CDN, backed by Amazon CloudFront, lets you create and manage content delivery network (CDN) distributions for your Lightsail applications.

- Lightsail resources operate in dual-stack mode, accepting both IPv4 and IPv6 client connections. IPv6 is supported on Lightsail resources such as instances, containers, load balancers and CDN. In addition to this, Lightsail also supports firewall rules for IPv6 traffic.

- Supported Operating Systems

- Ubuntu

- Debian

- FreeBSD

- OpenSUSE

- CentOS

- Windows Server

- Pre-configured Application Stacks

- WordPress

- Magento

- Drupal

- Joomla

- Ghost

- Redmine

- Plesk

- cPanel & WHM

- Django

- Development Stacks

- Node.js

- GitLab

- LAMP (Linux, MySQL, Apache, PHP)

- MEAN (MongoDB, Express.js, AngularJS, Node.js)

- Nginx

Pricing

- Included in all Lightsail plans

- Static IP address

- Intuitive management console

- DNS management

- 1-click SSH terminal access (Linux/Unix)

- 1-click RDP access (Windows)

- Powerful Lightsail API

- Highly available SSD storage

- Server monitoring

- You don’t pay for a static IP if it is attached to an instance.

- Your Lightsail instances are charged only when they’re in the running or stopped state.

- Your cost is based on the virtual server package you select. Windows packages cost more than Linux packages. Outbound data transfer beyond the package's allowance incurs additional costs.

- For Linux virtual servers, you have the following configurations:

| Plan | Memory | vCPUs | SSD Storage | Data Transfer |
|---|---|---|---|---|
| 1 | 512 MB | 1 | 20 GB | 1 TB |
| 2 | 1 GB | 1 | 40 GB | 2 TB |
| 3 | 2 GB | 1 | 60 GB | 3 TB |
| 4 | 4 GB | 2 | 80 GB | 4 TB |
| 5 | 8 GB | 2 | 160 GB | 5 TB |
| 6 | 16 GB | 4 | 320 GB | 6 TB |
| 7 | 32 GB | 8 | 640 GB | 7 TB |

- For Windows virtual servers, you have the following configurations:

| Plan | Memory | vCPUs | SSD Storage | Data Transfer |
|---|---|---|---|---|
| 1 | 512 MB | 1 | 30 GB | 1 TB |
| 2 | 1 GB | 1 | 40 GB | 2 TB |
| 3 | 2 GB | 1 | 60 GB | 3 TB |
| 4 | 4 GB | 2 | 80 GB | 4 TB |
| 5 | 8 GB | 2 | 160 GB | 5 TB |
| 6 | 16 GB | 4 | 320 GB | 6 TB |
| 7 | 32 GB | 8 | 640 GB | 7 TB |

- You can also opt for managed databases, which have the following available configurations:

| Plan | Memory | vCPUs | SSD Storage | Data Transfer | |
|---|---|---|---|---|---|
| 1 | 1 GB | 1 | 40 GB | 100 GB | No |
| 2 | 2 GB | 1 | 80 GB | 100 GB | Yes |
| 3 | 4 GB | 2 | 120 GB | 100 GB | Yes |
| 4 | 8 GB | 2 | 240 GB | 200 GB | Yes |

- You can modify your managed database to be highly available for twice the price of the package.

- If you choose to run load balancers for your virtual servers, you are charged a fixed price per month no matter which package you select.

- Your load balancer does not consume your data transfer allowance. Traffic between the load balancer and the target instances is metered and counts toward your data transfer allowance for your instances, in the same way that traffic in from and out to the internet is counted toward your data transfer allowance for Lightsail instances that are not behind a load balancer.

- You can purchase additional EBS SSD storage for your Linux/Windows servers. Available options are 8 GB, 32 GB, 64 GB, 128 GB, or 256 GB, at USD $0.10 per allocated GB, per month.

- If you have point-in-time snapshots for your Lightsail instances, managed databases or block storage, you are charged an additional USD $0.05 per GB of backup data, per month.
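The add-on charges above are simple per-GB arithmetic, and High Availability doubles a database package's price. The sketch below uses the two rates quoted in these notes. Plan prices are not given here, so they are inputs; any number passed in is a made-up example, not a real Lightsail price.

```python
from decimal import Decimal

# Rates taken from the text above; plan prices are inputs because they vary by plan.
BLOCK_STORAGE_USD_PER_GB = Decimal("0.10")  # per allocated GB, per month
SNAPSHOT_USD_PER_GB = Decimal("0.05")       # per GB of snapshot data, per month


def addon_cost(block_storage_gb=0, snapshot_gb=0):
    """Monthly USD cost of extra block storage plus snapshot data."""
    return (BLOCK_STORAGE_USD_PER_GB * block_storage_gb
            + SNAPSHOT_USD_PER_GB * snapshot_gb)


def database_cost(standard_plan_price, high_availability=False):
    """High Availability costs twice the price of the standard package."""
    price = Decimal(str(standard_plan_price))
    return price * 2 if high_availability else price


print(addon_cost(block_storage_gb=64, snapshot_gb=100))  # 11.40
```

`Decimal` is used instead of `float` so that currency amounts such as 0.10 × 64 come out exact.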

Limits

- You can currently create up to 20 Lightsail instances, 5 static IPs, 3 DNS zones, 20 TB of attached block storage, and 5 load balancers in a Lightsail account.

- You can also generate up to 20 certificates during each calendar year.

Amazon ECS

- A container management service to run, stop, and manage Docker containers on a cluster.

- ECS can be used to create a consistent deployment and build experience, to manage and scale batch and Extract-Transform-Load (ETL) workloads, and to build sophisticated application architectures on a microservices model.

- Amazon ECS is a regional service.

Features

- You can create ECS clusters within a new or existing VPC.

- After a cluster is up and running, you can define task definitions and services that specify which Docker container images to run across your clusters.

- AWS Compute SLA guarantees a Monthly Uptime Percentage of at least 99.99% for Amazon ECS.

- Amazon ECS Exec is a way for customers to execute commands in a container running on Amazon EC2 instances or AWS Fargate. ECS Exec gives you interactive shell or single command access to a running container.

Components

- Containers and Images

- Your application components must be architected to run in containers, which hold everything that your software application needs to run: code, runtime, system tools, system libraries, etc.

- Containers are created from a read-only template called an image.

- Images are typically built from a Dockerfile, a plain text file that specifies all of the components that are included in the container. These images are then stored in a registry from which they can be downloaded and run on your cluster.

- When you launch a container instance, you have the option of passing user data to the instance. The data can be used to perform common automated configuration tasks and even run scripts when the instance boots.

- Docker Volumes can be a local instance store volume, EBS volume or EFS volume. Connect your Docker containers to these volumes using Docker drivers and plugins.

- Task Components

- Task definitions specify various parameters for your application. A task definition is a text file, in JSON format, that describes one or more containers (up to a maximum of ten) that form your application.

- Task definitions are split into separate parts:

- Task family – the name of the task, and each family can have multiple revisions.

- IAM task role – specifies the permissions that containers in the task should have.

- Network mode – determines how the networking is configured for your containers.

- Container definitions – specify which image to use, how much CPU and memory each container is allocated, and many more options.

- Volumes – allow you to share data between containers and even persist the data on the container instance when the containers are no longer running.

- Task placement constraints – lets you customize how your tasks are placed within the infrastructure.

- Launch types – determines which infrastructure your tasks use.
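The parts listed above correspond to top-level fields of an ECS RegisterTaskDefinition request. The sketch below assembles one as a dict and enforces the ten-container limit. The family name, role ARN, image, host path, and placement expression are hypothetical examples.

```python
# Sketch of an ECS RegisterTaskDefinition request showing the parts listed above.
# Family, role ARN, image, and paths are hypothetical.
MAX_CONTAINERS = 10


def task_definition(family, task_role_arn, containers, network_mode="bridge",
                    volumes=(), placement_constraints=(), launch_types=("EC2",)):
    if not 1 <= len(containers) <= MAX_CONTAINERS:
        raise ValueError(f"a task definition describes 1 to {MAX_CONTAINERS} containers")
    return {
        "family": family,                          # task family; each registration adds a revision
        "taskRoleArn": task_role_arn,              # IAM task role for the containers
        "networkMode": network_mode,               # e.g. bridge, host, awsvpc, none
        "containerDefinitions": list(containers),  # image, CPU, memory, ports, mounts, ...
        "volumes": list(volumes),                  # data shared between containers
        "placementConstraints": list(placement_constraints),
        "requiresCompatibilities": list(launch_types),  # launch type(s) the task targets
    }


web = {
    "name": "web",
    "image": "nginx:latest",
    "cpu": 256,
    "memory": 512,
    "essential": True,
    "portMappings": [{"containerPort": 80}],
    "mountPoints": [{"sourceVolume": "site", "containerPath": "/usr/share/nginx/html"}],
}
td = task_definition(
    "web-app",
    "arn:aws:iam::123456789012:role/web-task-role",
    [web],
    volumes=[{"name": "site", "host": {"sourcePath": "/srv/site"}}],
    placement_constraints=[{"type": "memberOf", "expression": "attribute:ecs.instance-type =~ t3.*"}],
)
```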

- Tasks and Scheduling

- A task is the instantiation of a task definition within a cluster. After you have created a task definition for your application, you can specify the number of tasks that will run on your cluster.

- Each task that uses the Fargate launch type has its own isolation boundary and does not share the underlying kernel, CPU resources, memory resources, or elastic network interface with another task.

- The task scheduler is responsible for placing tasks within your cluster. There are several different scheduling options available.

- REPLICA – places and maintains the desired number of tasks across your cluster. By default, the service scheduler spreads tasks across Availability Zones. You can use task placement strategies and constraints to customize task placement decisions.

- DAEMON – deploys exactly one task on each active container instance that meets all of the task placement constraints that you specify in your cluster. When using this strategy, there is no need to specify a desired number of tasks, a task placement strategy, or use Service Auto Scaling policies.

- You can upload a new version of your application task definition, and the ECS scheduler automatically starts new containers using the updated image and stops containers running the previous version.

- Amazon ECS tasks running on both Amazon EC2 and AWS Fargate can mount Amazon Elastic File System (EFS) file systems.
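The difference between the two scheduling strategies above is easiest to see as a placement plan. The toy function below is not the ECS scheduler. DAEMON yields one task per active instance that meets the constraint. REPLICA spreads `desired_count` tasks evenly across the Availability Zones of eligible instances, a simplified version of the default AZ spread. Instance IDs and zones are made up.

```python
from collections import Counter


def plan_tasks(strategy, instances, desired_count=0, constraint=None):
    """Toy placement plan.

    DAEMON  -> {instance_id: 1} for every ACTIVE instance that meets the constraint.
    REPLICA -> {availability_zone: task_count}, spreading desired_count evenly.
    """
    eligible = [i for i in instances
                if i["status"] == "ACTIVE" and (constraint is None or constraint(i))]
    if strategy == "DAEMON":
        return {i["id"]: 1 for i in eligible}
    if strategy == "REPLICA":
        zones = sorted({i["az"] for i in eligible})
        if desired_count and not zones:
            raise ValueError("no eligible container instances")
        return dict(Counter(zones[n % len(zones)] for n in range(desired_count)))
    raise ValueError(f"unknown scheduling strategy: {strategy}")


instances = [
    {"id": "i-1", "az": "us-east-1a", "status": "ACTIVE"},
    {"id": "i-2", "az": "us-east-1b", "status": "ACTIVE"},
    {"id": "i-3", "az": "us-east-1b", "status": "DRAINING"},
]
```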

- Clusters

- When you run tasks using ECS, you place them in a cluster, which is a logical grouping of resources.

- Clusters are Region-specific.

- Clusters can contain tasks using both the Fargate and EC2 launch types.

- When using the Fargate launch type with tasks within your cluster, ECS manages your cluster resources.

- When using the EC2 launch type, your clusters are a group of container instances that you manage. These clusters can contain multiple different container instance types, but each container instance may only be part of one cluster at a time.

- Before you can delete a cluster, you must delete the services and deregister the container instances inside that cluster.

- Enabling managed Amazon ECS cluster auto scaling allows ECS to manage the scale-in and scale-out actions of the Auto Scaling group. On your behalf, Amazon ECS creates an AWS Auto Scaling scaling plan with a target tracking scaling policy based on the target capacity value that you specify.

- Services

- ECS allows you to run and maintain a specified number of instances of a task definition simultaneously in a cluster.

- In addition to maintaining the desired count of tasks in your service, you can optionally run your service behind a load balancer.

- There are two deployment strategies in ECS:

- Rolling Update

- This involves the service scheduler replacing the currently running version of the container with the latest version.

- The number of tasks ECS adds or removes from the service during a rolling update is controlled by the deployment configuration, which consists of the minimum and maximum number of tasks allowed during a service deployment.
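The deployment configuration is expressed as percentages of the desired count. In ECS these are `minimumHealthyPercent` and `maximumPercent`, defaulting to 100 and 200 as far as we know. The bounds they imply can be computed as below. The rounding (minimum rounded up, maximum rounded down) follows our reading of the ECS documentation. Integer arithmetic avoids floating-point surprises.

```python
def rolling_update_bounds(desired_count, minimum_healthy_percent=100, maximum_percent=200):
    """Return (min running tasks, max running tasks) allowed during a rolling update."""
    # Lower bound is rounded up: ceil(a / b) == -(-a // b) for positive b.
    lower = -(-desired_count * minimum_healthy_percent // 100)
    # Upper bound is rounded down.
    upper = desired_count * maximum_percent // 100
    return lower, upper


print(rolling_update_bounds(4, minimum_healthy_percent=50))  # (2, 8)
```

With 4 desired tasks and a 50% minimum, ECS may stop two old tasks before new ones are healthy. The 200% maximum allows up to 8 tasks to run at once during the deployment.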

- Blue/Green Deployment with AWS CodeDeploy

- This deployment type allows you to verify a new deployment of a service before sending production traffic to it.

- The service must be configured to use either an Application Load Balancer or Network Load Balancer.

- Container Agent

- The container agent runs on each infrastructure resource within an ECS cluster.

- It sends information about the resource’s current running tasks and resource utilization to ECS, and starts and stops tasks whenever it receives a request from ECS.

- Container agent is only supported on Amazon EC2 instances.

- Service Load Balancing

- Amazon ECS services support the Application Load Balancer, Network Load Balancer, and Classic Load Balancer ELBs. Application Load Balancers are used to route HTTP/HTTPS (or layer 7) traffic. Network Load Balancers are used to route TCP or UDP (or layer 4) traffic. Classic Load Balancers are used to route TCP traffic.

- You can attach multiple target groups to your Amazon ECS services that are running on either Amazon EC2 or AWS Fargate. This allows you to maintain a single ECS service that can serve traffic from both internal and external load balancers and support multiple path based routing rules and applications that need to expose more than one port.

- The Classic Load Balancer doesn’t allow you to run multiple copies of a task on the same instance. You must statically map port numbers on a container instance. However, an Application Load Balancer uses dynamic port mapping, so you can run multiple tasks from a single service on the same container instance.

- If a service’s task fails the load balancer health check criteria, the task is stopped and restarted. This process continues until your service reaches the number of desired running tasks.

- Services with tasks that use the awsvpc network mode, such as those with the Fargate launch type, do not support Classic Load Balancers. For TCP traffic, use a Network Load Balancer instead.
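The static vs. dynamic port mapping point above determines how densely one service can pack tasks onto an instance. The toy calculation below assumes bridge network mode. There, a `hostPort` of 0 (or omitted) lets Docker pick an ephemeral host port for each task, while a fixed `hostPort` limits the instance to one copy. CPU units are the only other resource modeled, which is a simplification.

```python
def tasks_per_instance(port_mapping, free_cpu_units, task_cpu_units):
    """How many copies of a single-container task fit on one container instance (bridge mode)."""
    by_cpu = free_cpu_units // task_cpu_units
    if port_mapping.get("hostPort", 0) == 0:
        # Dynamic port mapping: each copy gets its own ephemeral host port,
        # which a load balancer target group can register individually.
        return by_cpu
    # Static host port: a second copy would need the same host port.
    return min(by_cpu, 1)
```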

AWS Fargate

- You can use Fargate with ECS to run containers without having to manage servers or clusters of EC2 instances.

- You no longer have to provision, configure, or scale clusters of virtual machines to run containers.

- Fargate only supports container images hosted on Elastic Container Registry (ECR) or Docker Hub.

Task Definitions for Fargate Launch Type

- Fargate task definitions require that the network mode is set to awsvpc. The awsvpc network mode provides each task with its own elastic network interface.

- Fargate task definitions require that you specify CPU and memory at the task level.

- Fargate task definitions only support the awslogs log driver for the log configuration. This configures your Fargate tasks to send log information to Amazon CloudWatch Logs.

- Task storage is ephemeral. After a Fargate task stops, the storage is deleted.

- Put multiple containers in the same task definition if:

- Containers share a common lifecycle.

- Containers are required to be run on the same underlying host.

- You want your containers to share resources.

- Your containers share data volumes.

- Otherwise, define your containers in separate task definitions so that you can scale, provision, and deprovision them separately.
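The Fargate task definition requirements listed in this section can be checked mechanically before registering a definition. The sketch checks exactly those three rules:

- `awsvpc` network mode;
- task-level `cpu` and `memory`;
- the `awslogs` log driver.

The sample definition's family, image URI, and log group names are hypothetical.

```python
def fargate_problems(task_def):
    """List violations of the Fargate task definition rules described above."""
    problems = []
    if task_def.get("networkMode") != "awsvpc":
        problems.append("networkMode must be awsvpc")
    if "cpu" not in task_def or "memory" not in task_def:
        problems.append("cpu and memory must be set at the task level")
    for container in task_def.get("containerDefinitions", []):
        log = container.get("logConfiguration")
        if log and log.get("logDriver") != "awslogs":
            problems.append(f"{container['name']}: use the awslogs log driver")
    return problems


fargate_td = {
    "family": "api",
    "requiresCompatibilities": ["FARGATE"],
    "networkMode": "awsvpc",      # each task gets its own elastic network interface
    "cpu": "256",                 # task-level CPU units
    "memory": "512",              # task-level memory (MiB)
    "containerDefinitions": [{
        "name": "api",
        "image": "123456789012.dkr.ecr.us-east-1.amazonaws.com/api:1.0",
        "essential": True,
        "logConfiguration": {     # send container logs to CloudWatch Logs
            "logDriver": "awslogs",
            "options": {
                "awslogs-group": "/ecs/api",
                "awslogs-region": "us-east-1",
                "awslogs-stream-prefix": "api",
            },
        },
    }],
}
```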

Task Definitions for EC2 Launch Type

- Create task definitions that group the containers that are used for a common purpose, and separate the different components into multiple task definitions.

- After you have your task definitions, you can create services from them to maintain the availability of your desired tasks.

- For EC2 tasks, the following are the types of data volumes that can be used:

- Docker volumes

- Bind mounts

- Private repositories are only supported by the EC2 Launch Type.

Monitoring

- You can configure your container instances to send log information to CloudWatch Logs. This enables you to view different logs from your container instances in one convenient location.

- With CloudWatch Alarms, watch a single metric over a time period that you specify, and perform one or more actions based on the value of the metric relative to a given threshold over a number of time periods.

- You can share log files between accounts and monitor CloudTrail log files in real time by sending them to CloudWatch Logs.

Tagging

- ECS resources, including task definitions, clusters, tasks, services, and container instances, are assigned an Amazon Resource Name (ARN) and a unique resource identifier (ID). These resources can be tagged with values that you define, to help you organize and identify them.

Pricing

- With Fargate, you pay for the amount of vCPU and memory resources that your containerized application requests. vCPU and memory resources are calculated from the time your container images are pulled until the Amazon ECS Task terminates.

- There is no additional charge for EC2 launch type. You pay for AWS resources (e.g. EC2 instances or EBS volumes) you create to store and run your application.
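The Fargate pricing rule above is a rate multiplied by requested resources and duration. The duration runs from when image pulling starts until the task terminates. The sketch takes hourly rates as inputs because no prices are given here; the rates in the usage line are invented. The one-minute minimum charge is our understanding of current Linux Fargate billing, exposed as a parameter so it can be adjusted.

```python
from datetime import datetime, timedelta
from decimal import Decimal


def fargate_task_cost(pull_started, task_stopped, vcpu, memory_gb,
                      vcpu_hour_rate, gb_hour_rate, minimum_seconds=60):
    """Estimate one task's cost from image pull start to task stop (rates are inputs, not real prices)."""
    # Assumed: per-second billing with a minimum charge of `minimum_seconds`.
    seconds = max(int((task_stopped - pull_started).total_seconds()), minimum_seconds)
    hours = Decimal(seconds) / Decimal(3600)
    per_hour = (Decimal(str(vcpu)) * Decimal(str(vcpu_hour_rate))
                + Decimal(str(memory_gb)) * Decimal(str(gb_hour_rate)))
    return hours * per_hour


start = datetime(2024, 1, 1, 12, 0, 0)
cost = fargate_task_cost(start, start + timedelta(hours=1), vcpu=1, memory_gb=2,
                         vcpu_hour_rate="0.04", gb_hour_rate="0.004")  # hypothetical rates
```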

Amazon ECR

Features

- ECR supports Docker Registry HTTP API V2 allowing you to use Docker CLI commands or your preferred Docker tools in maintaining your existing development workflow.

- ECR stores both the container images you create and any container software you buy through AWS Marketplace.

- ECR stores your container images in Amazon S3.

- ECR supports the ability to define and organize repositories in your registry using namespaces.

- You can transfer your container images to and from Amazon ECR via HTTPS.

Components

- Registry

- A registry is provided to each AWS account; you can create image repositories in your registry and store images in them.

- The URL for your default registry is https://aws_account_id.dkr.ecr.region.amazonaws.com.

- You must be authenticated before you can use your registry.

- Authorization token

- Your Docker client needs to authenticate to ECR registries as an AWS user before it can push and pull images. The AWS CLI get-login-password command provides an authentication token that you pass to docker login.
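The registry URL format and the login token above are both easy to handle in code. The authorization token that ECR's GetAuthorizationToken returns is, as we understand it, a base64 encoding of `AWS:<password>`. The `get-login-password` output is the password part, typically piped as `aws ecr get-login-password --region <region> | docker login --username AWS --password-stdin <account>.dkr.ecr.<region>.amazonaws.com`. The helper names below are hypothetical, and the test token is fake.

```python
import base64
import re

_REGISTRY = re.compile(r"^https://(\d{12})\.dkr\.ecr\.([a-z0-9-]+)\.amazonaws\.com$")


def registry_url(account_id, region):
    """Default private registry URL for an account and Region."""
    return f"https://{account_id}.dkr.ecr.{region}.amazonaws.com"


def parse_registry_url(url):
    """Return (account_id, region) from a default registry URL."""
    match = _REGISTRY.match(url)
    if match is None:
        raise ValueError(f"not a default ECR registry URL: {url}")
    return match.group(1), match.group(2)


def decode_authorization_token(token):
    """Split a base64 'AWS:<password>' authorization token into (username, password)."""
    username, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return username, password
```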

- Repository

- An image repository contains your Docker images.

- ECR uses resource-based permissions to let you specify who has access to a repository and what actions they can perform on it.

- ECR lifecycle policies enable you to specify the lifecycle management of images in a repository.

- Repository policy

- You can control access to your repositories and the images within them with repository policies.
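Lifecycle policies, mentioned under Repository above, are JSON documents made of prioritized rules. Each rule has a selection and an `expire` action. The sketch builds a two-rule policy:

- expire untagged images after N days;
- keep only the newest N images with a given tag prefix.

A small local preview shows which untagged images rule 1 would select. The prefix, counts, and digests are hypothetical. ECR itself evaluates the policy; the preview only mirrors rule 1's logic.

```python
import json
from datetime import datetime, timedelta


def lifecycle_policy(untagged_days, keep_prefix, keep_count):
    return {"rules": [
        {
            "rulePriority": 1,
            "description": f"Expire untagged images older than {untagged_days} days",
            "selection": {"tagStatus": "untagged", "countType": "sinceImagePushed",
                          "countUnit": "days", "countNumber": untagged_days},
            "action": {"type": "expire"},
        },
        {
            "rulePriority": 2,
            "description": f"Keep only the newest {keep_count} '{keep_prefix}*' images",
            "selection": {"tagStatus": "tagged", "tagPrefixList": [keep_prefix],
                          "countType": "imageCountMoreThan", "countNumber": keep_count},
            "action": {"type": "expire"},
        },
    ]}


def untagged_to_expire(images, now, days):
    """Local preview of rule 1: untagged images pushed more than `days` days ago."""
    cutoff = now - timedelta(days=days)
    return [img["digest"] for img in images if not img["tags"] and img["pushed_at"] < cutoff]


policy_text = json.dumps(lifecycle_policy(14, "release-", 10))  # lifecycle policy text is a JSON string
images = [
    {"digest": "sha256:aaa", "tags": [], "pushed_at": datetime(2024, 5, 1)},
    {"digest": "sha256:bbb", "tags": ["release-1"], "pushed_at": datetime(2024, 5, 1)},
    {"digest": "sha256:ccc", "tags": [], "pushed_at": datetime(2024, 5, 30)},
]
```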

- Image

- You can push and pull Docker images to your repositories. You can use these images locally on your development system, or you can use them in ECS task definitions.

- You can replicate images in your private repositories across AWS regions.

Security

- By default, IAM users don’t have permission to create or modify Amazon ECR resources, or perform tasks using the Amazon ECR API.

- Use IAM policies to grant or deny permission to use ECR resources and operations.

- ECR partially supports resource-level permissions.

- ECR supports the use of customer master keys (CMK) managed by AWS Key Management Service (KMS) to encrypt container images stored in your ECR repositories.

Pricing

- You pay only for the amount of data you store in your repositories and data transferred to the Internet.